LOOP-i

2024

AI Hardware. Wearable Design. Spatial Interaction.

Project Duration

2 Months · Group Project (Led by Me)

My Roles

Project Management, Interaction Designer, Industrial Designer

Goal

Exploring the potential of AI technologies and new hardware designed to leverage them

Collaboration

OPPO x Royal College of Art

London Design Festival 2024

OPPO x RCA: Connective Intelligence

As AI technologies advance, I believe the best way to harness their potential is to integrate them into scenarios where they are truly needed.

LOOP-i is an AI-powered wearable camera that reimagines the vlogging experience. It uses multimodal inputs to perceive creators’ intent and capture what they focus on. By leveraging generative AI, it enables creators to generate dynamic content based on their surroundings.

Context

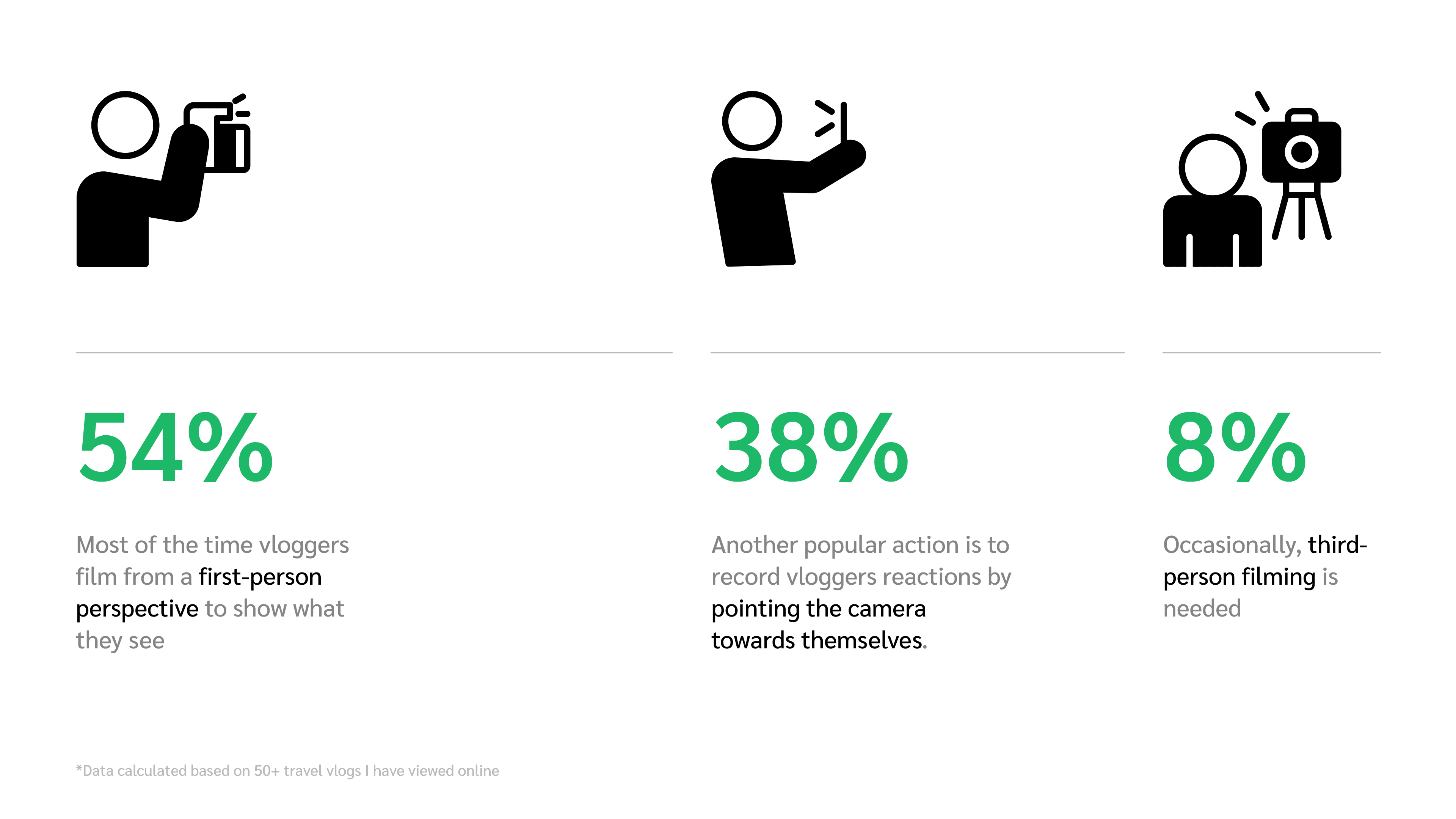

Vlogging has grown rapidly over the past few years, and travel vlogs in particular. Travel vlogs account for around one third of all vlogs. They also show a higher increase in profit, and the number of travel vlog channels is growing by 106% annually.

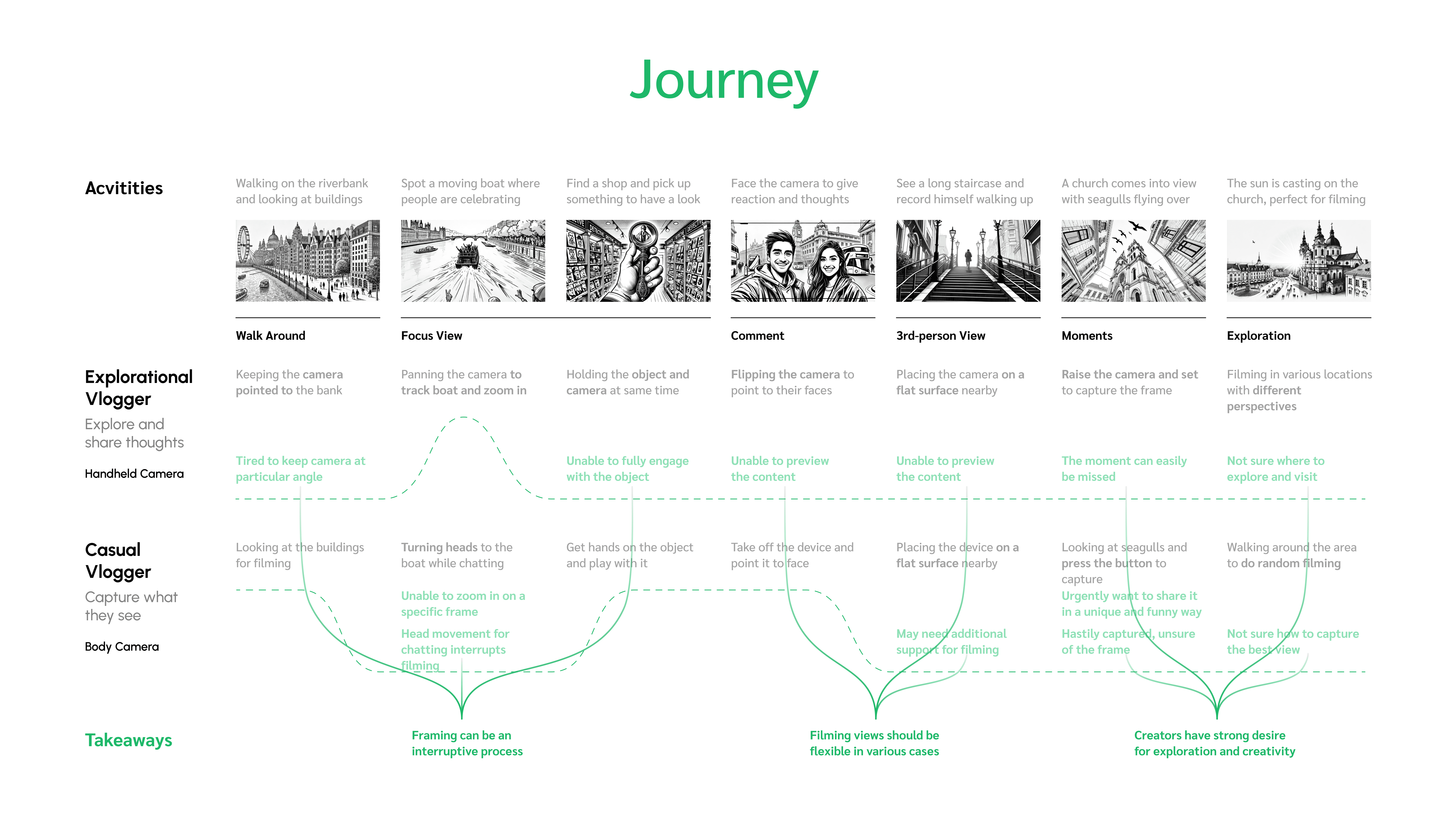

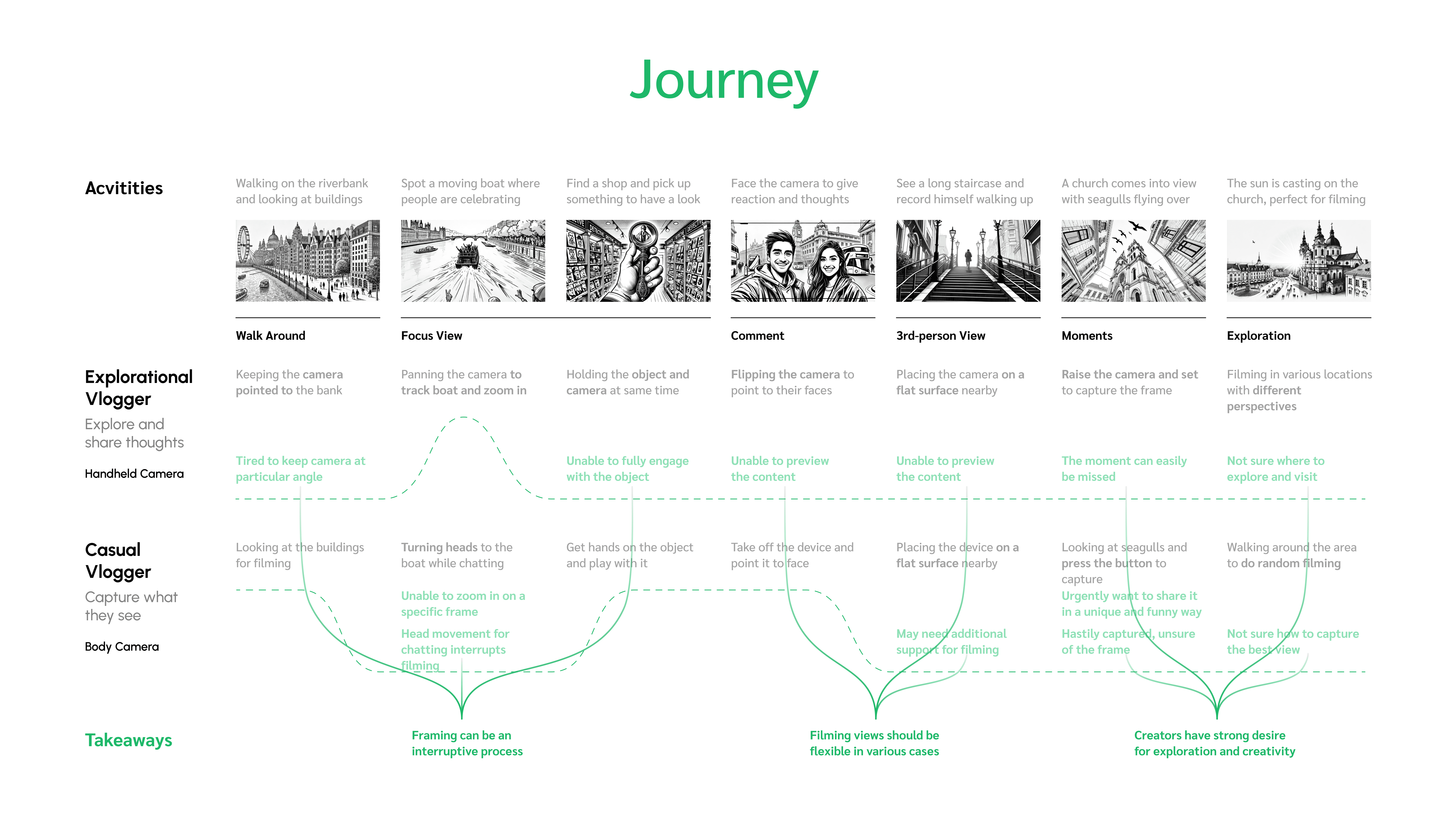

Challenge

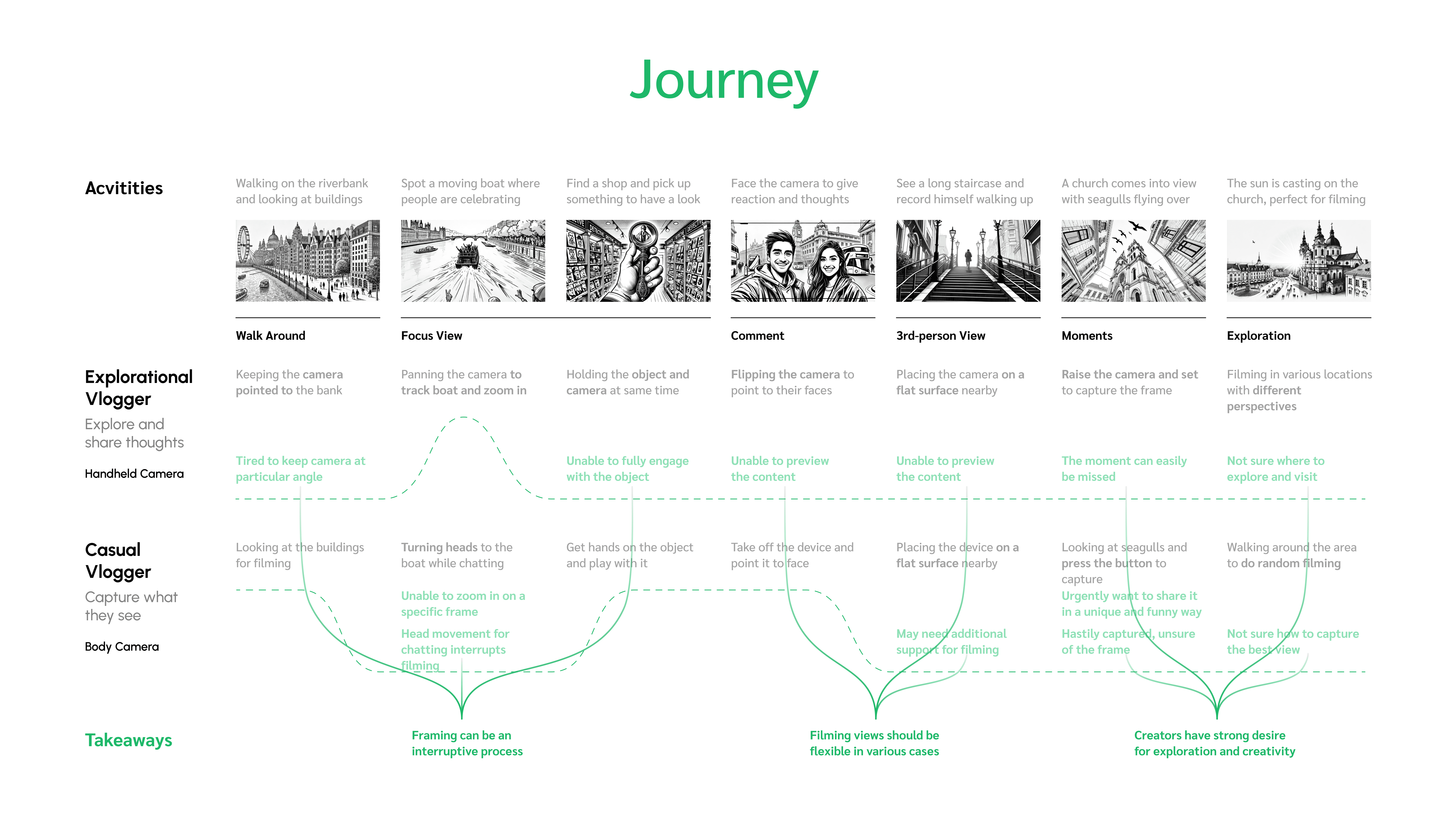

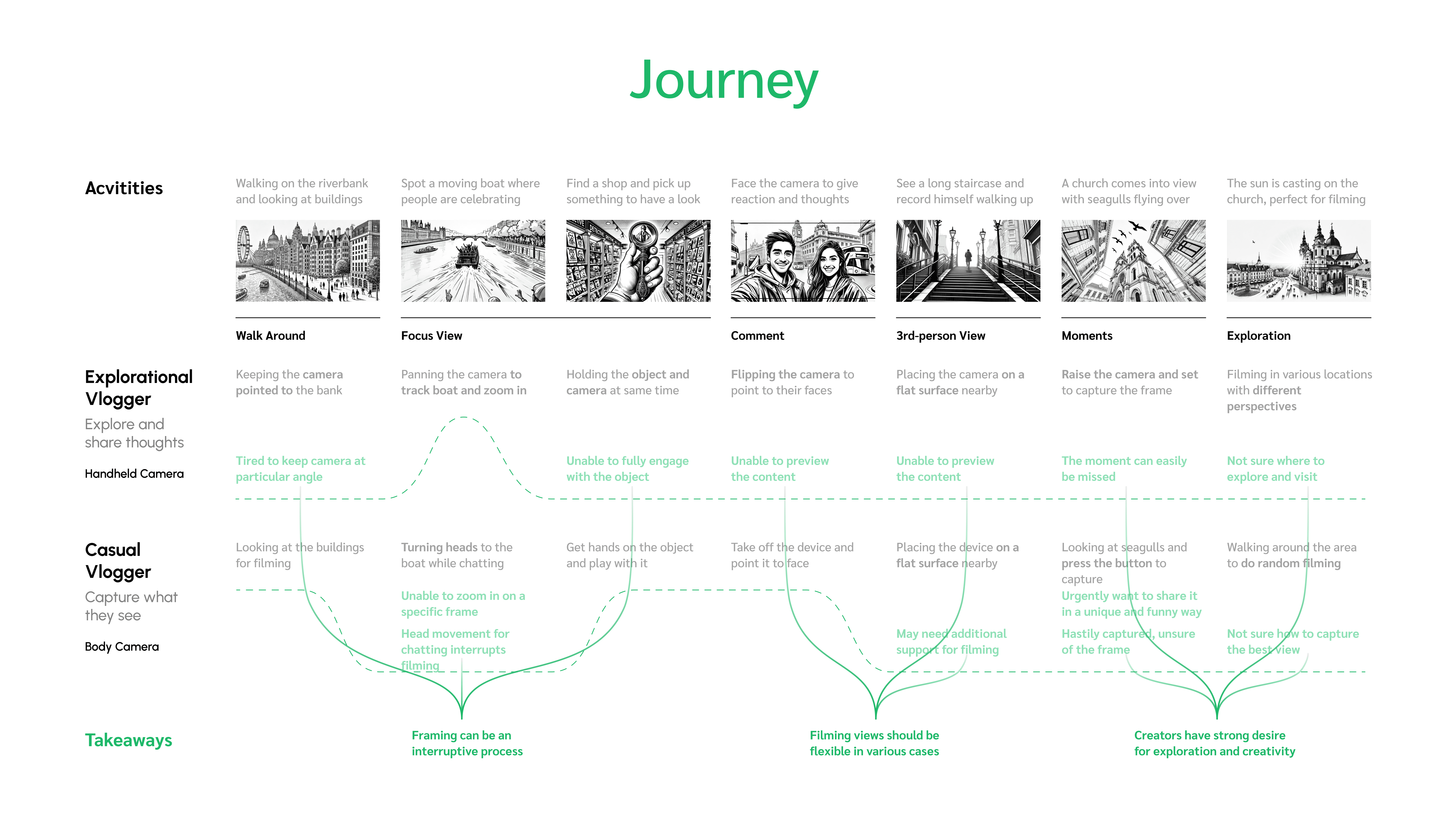

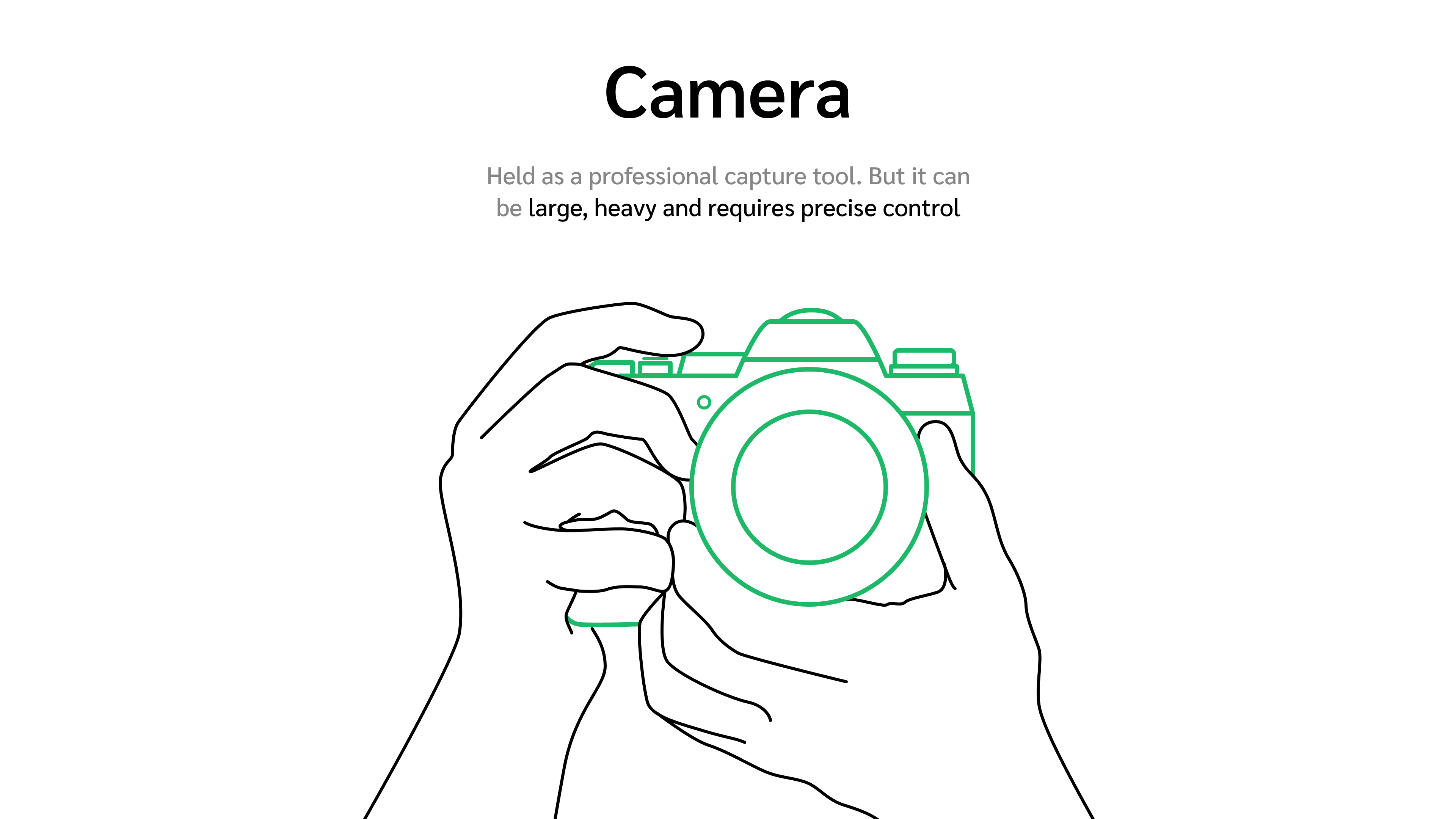

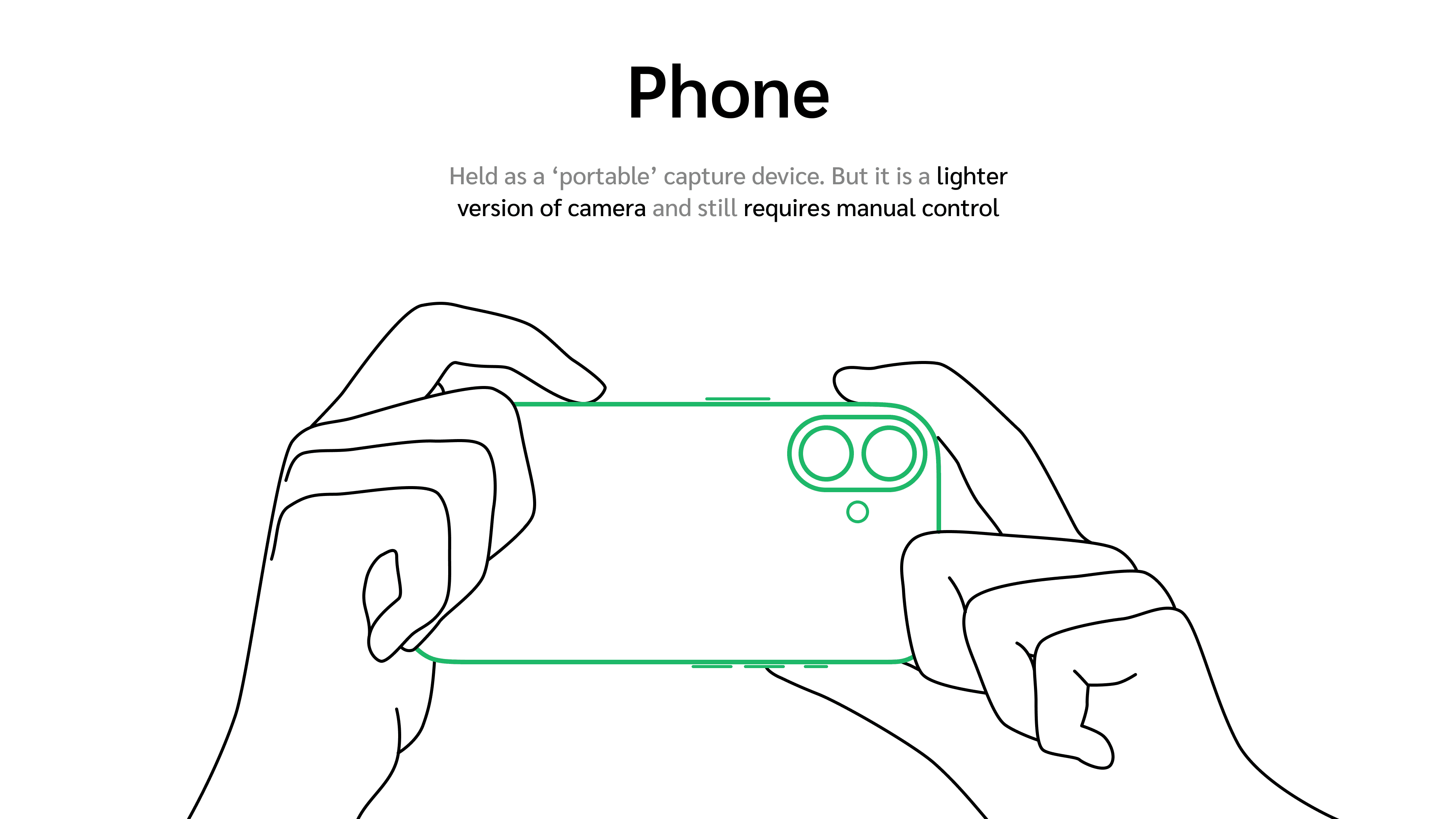

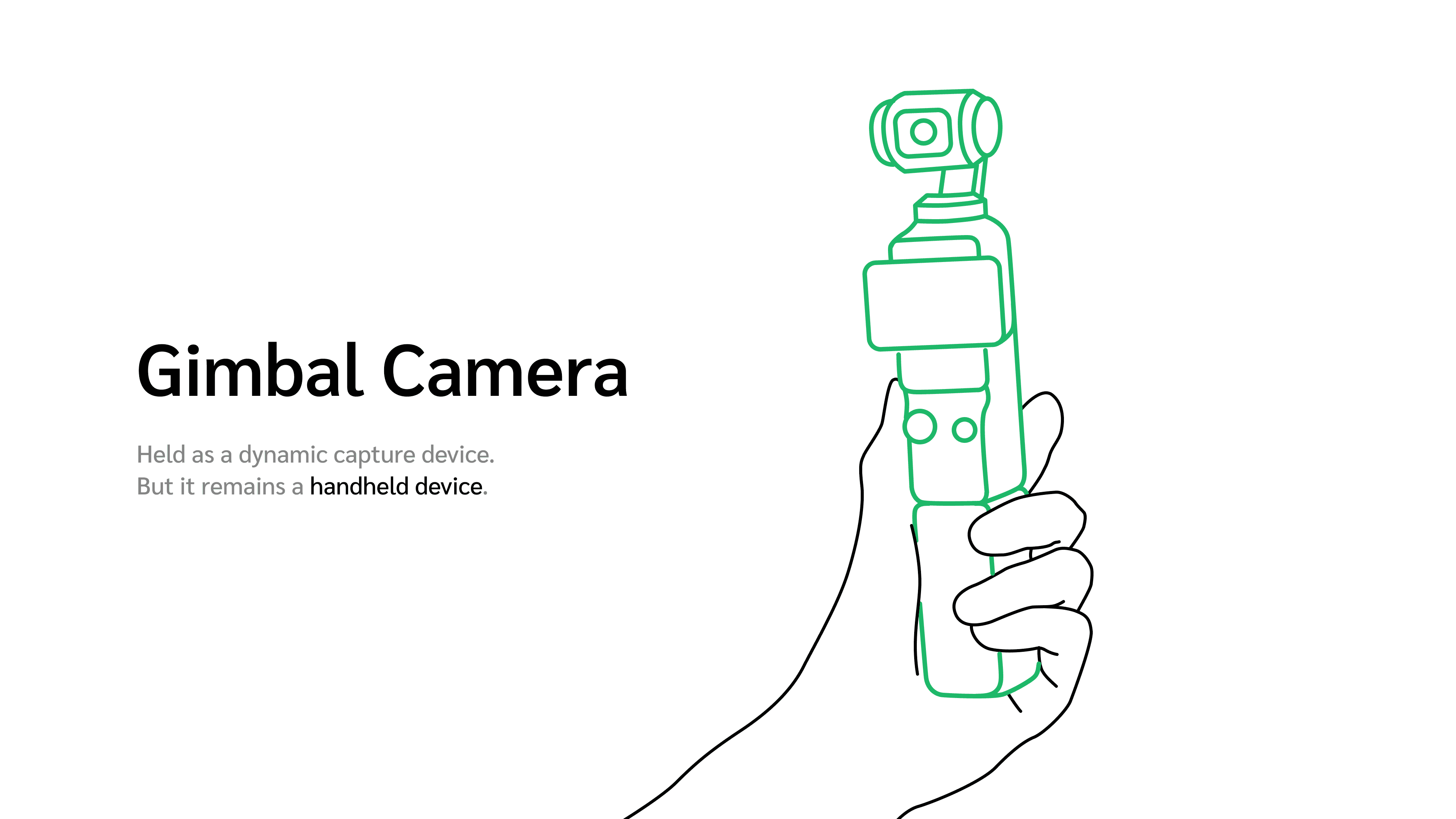

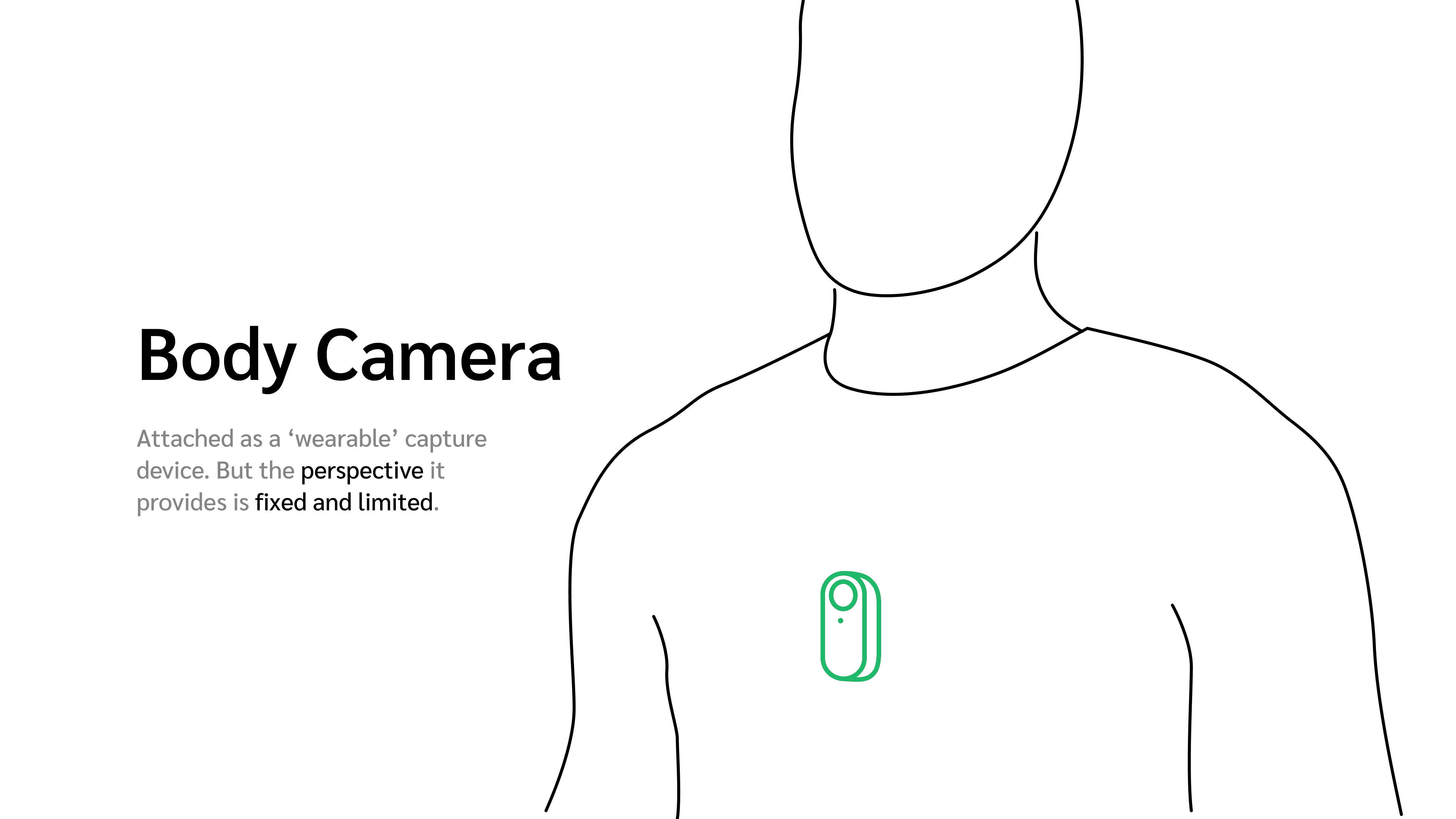

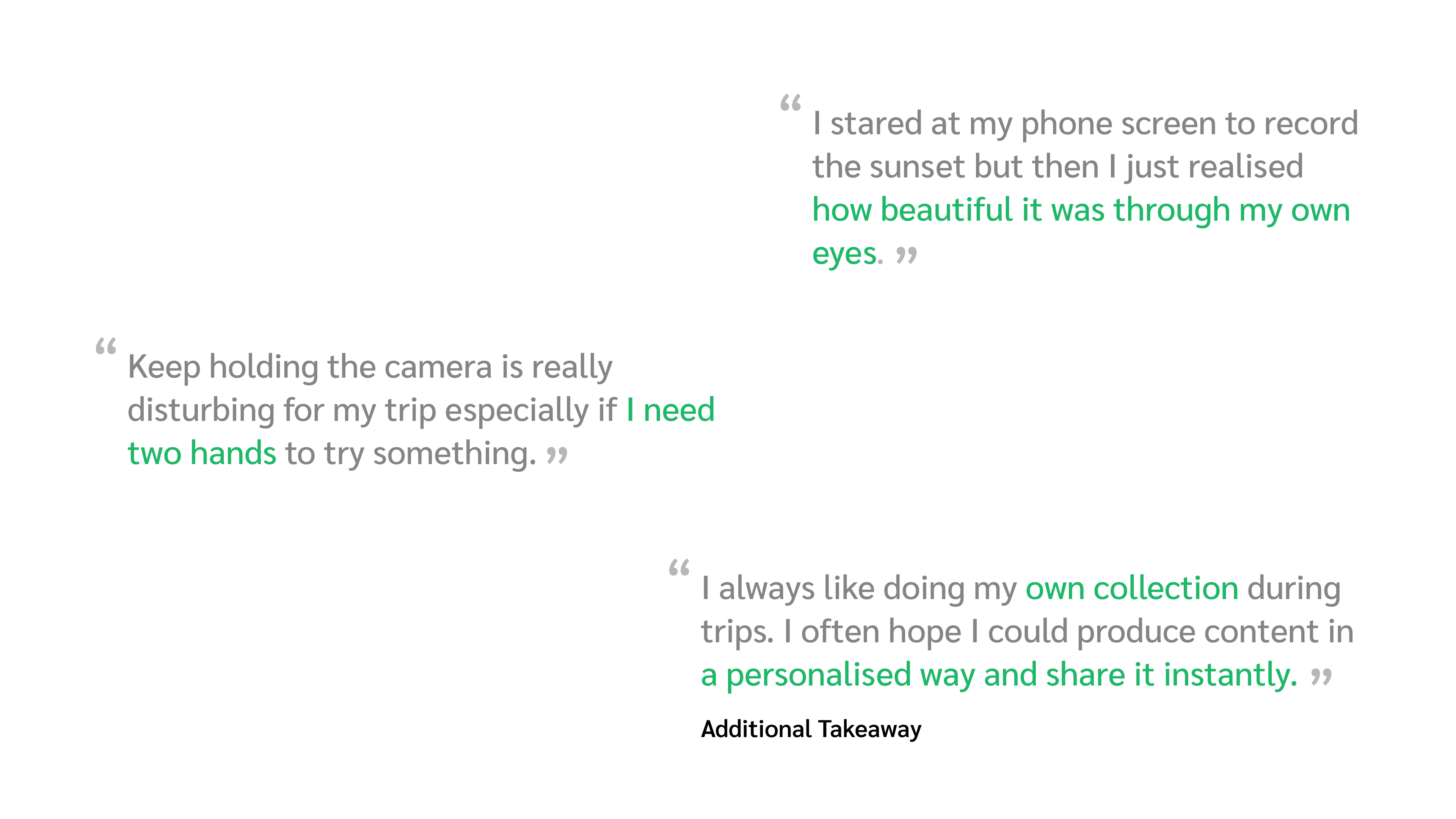

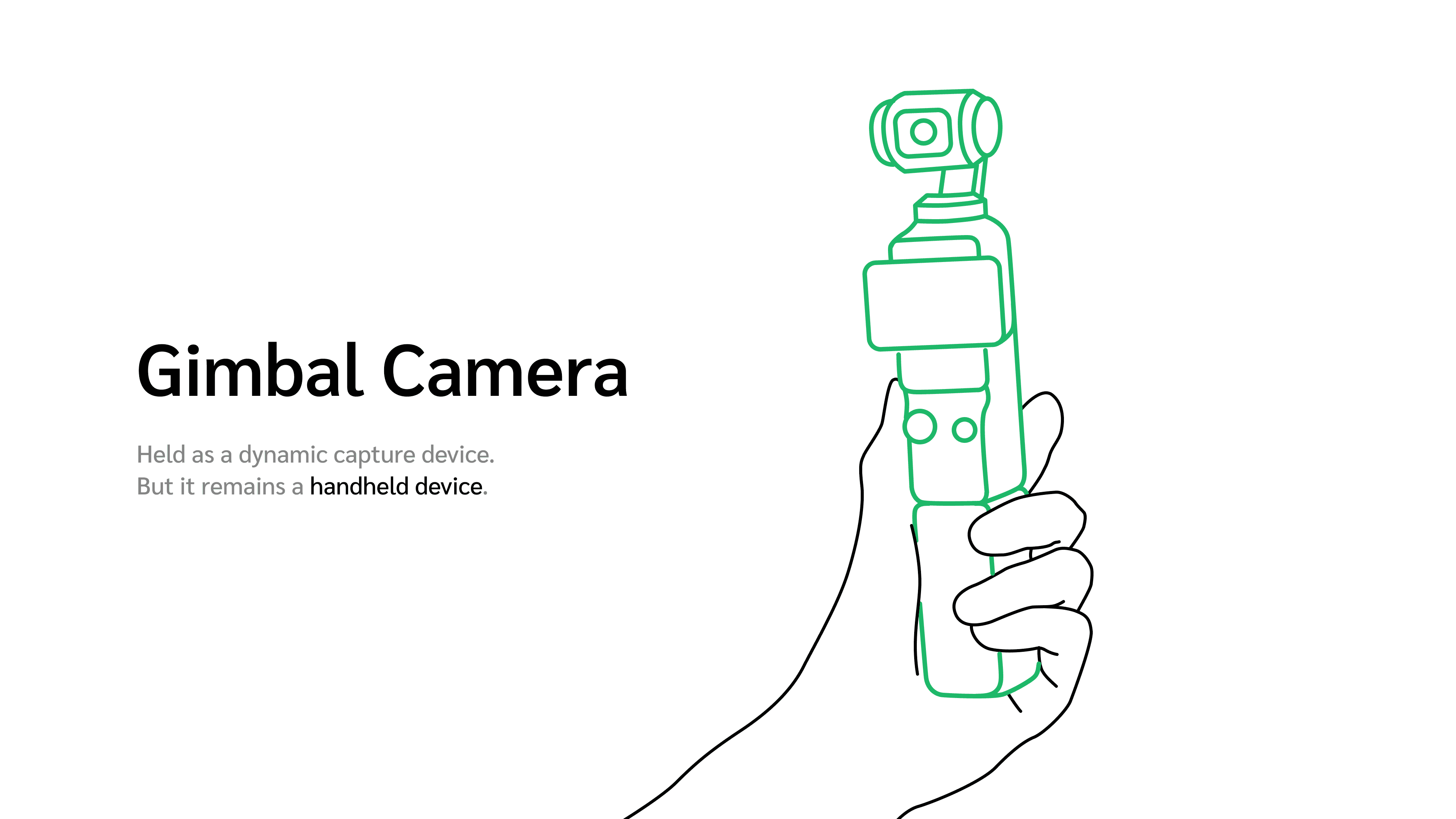

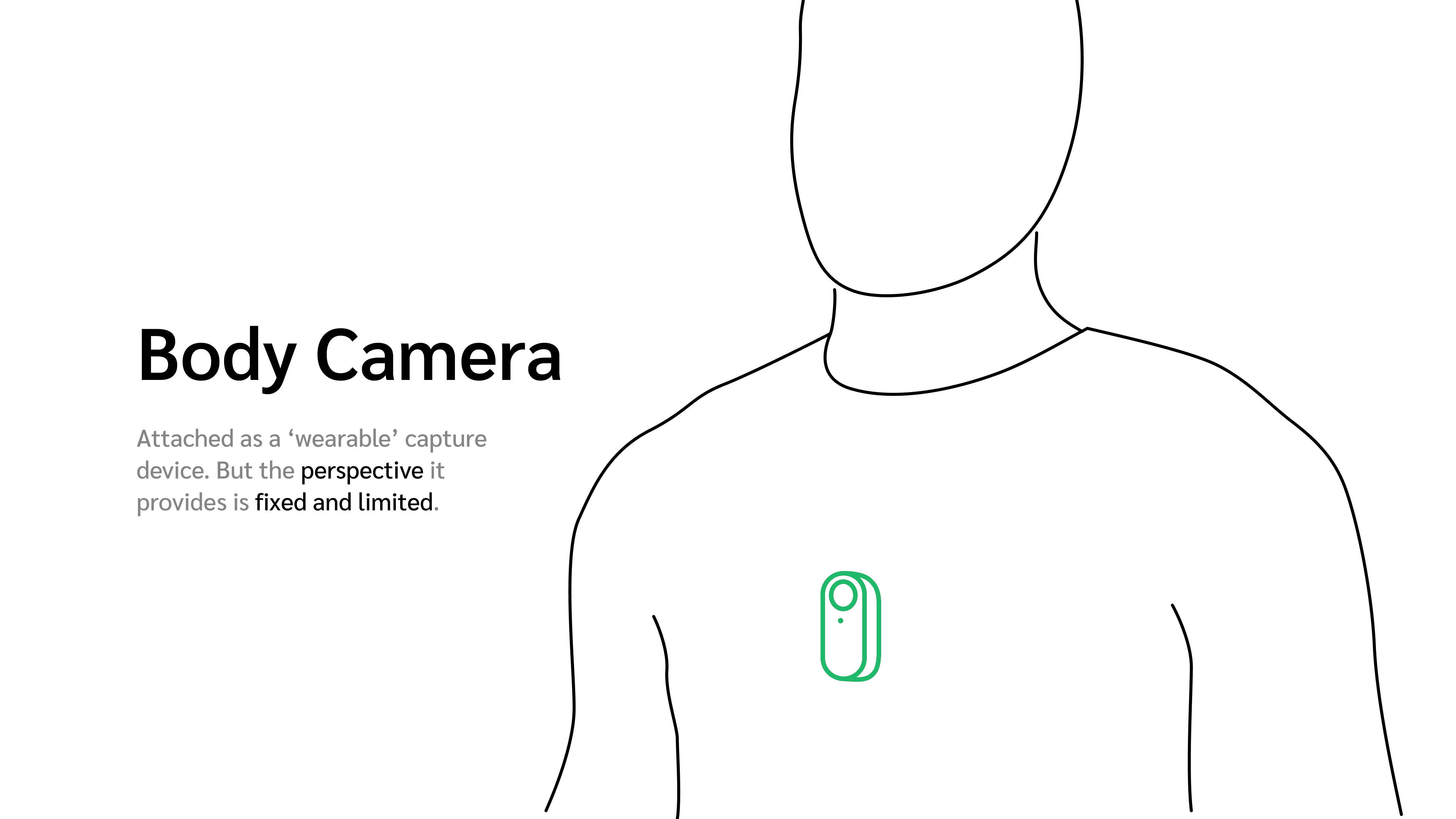

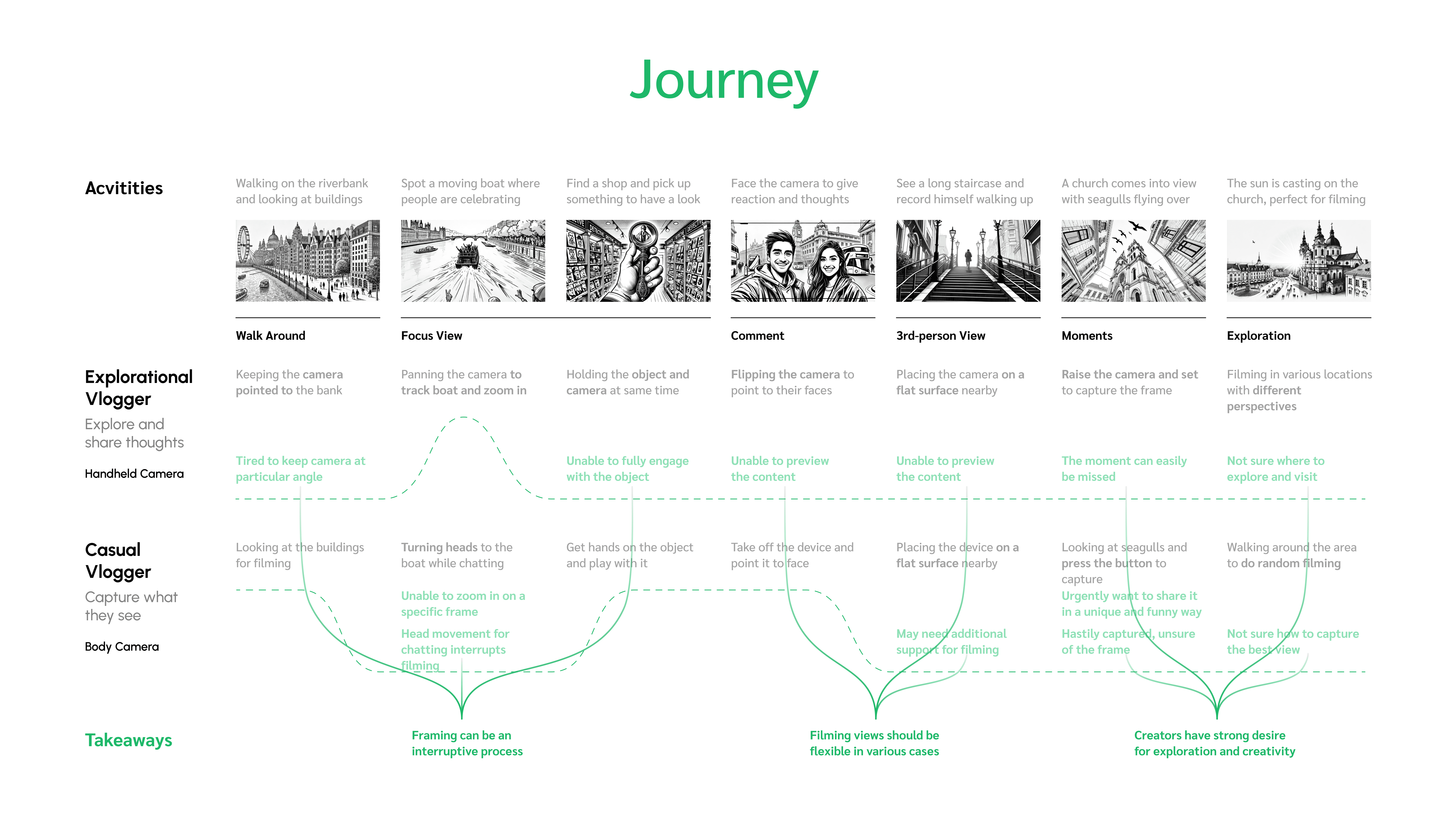

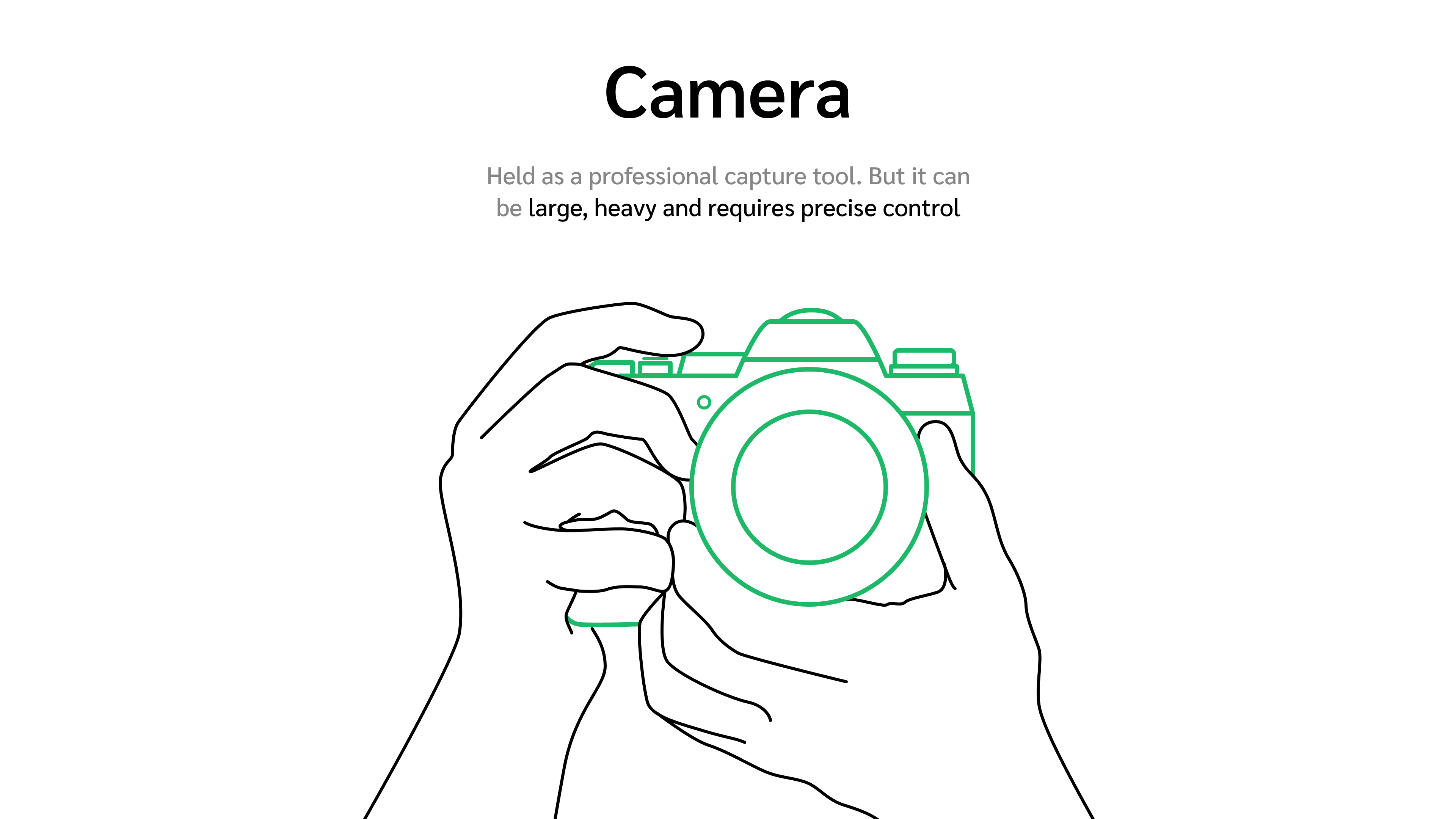

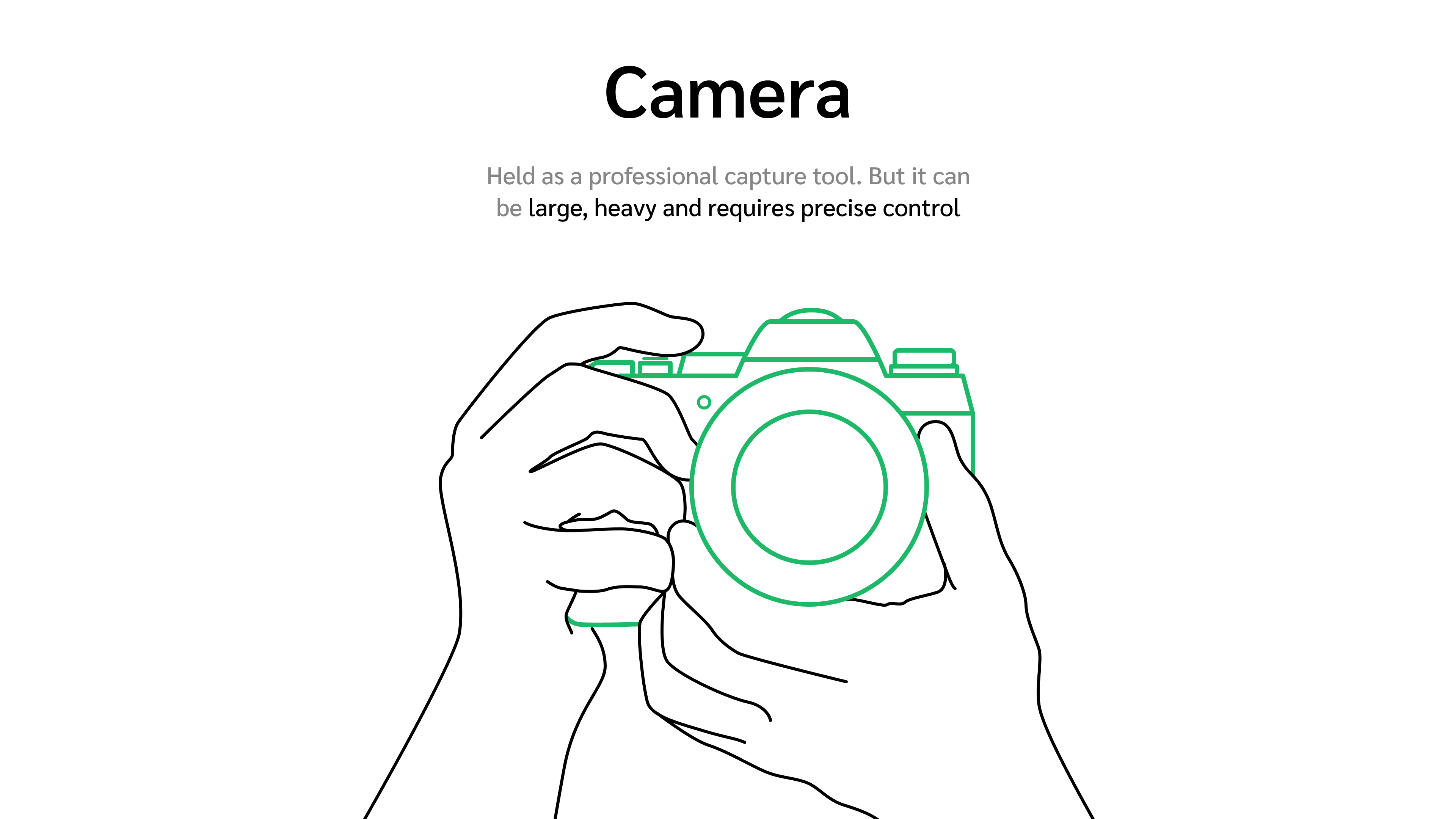

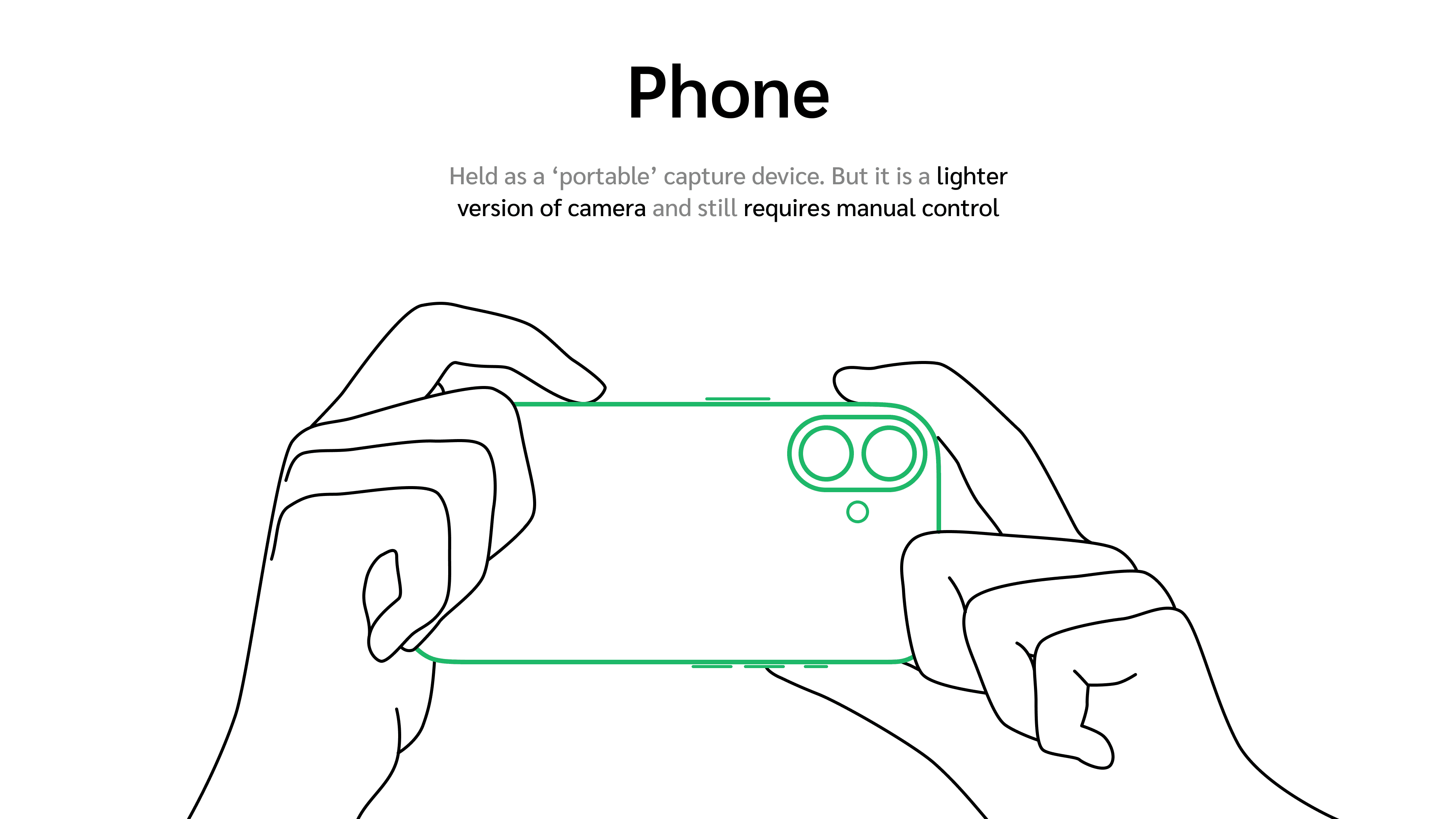

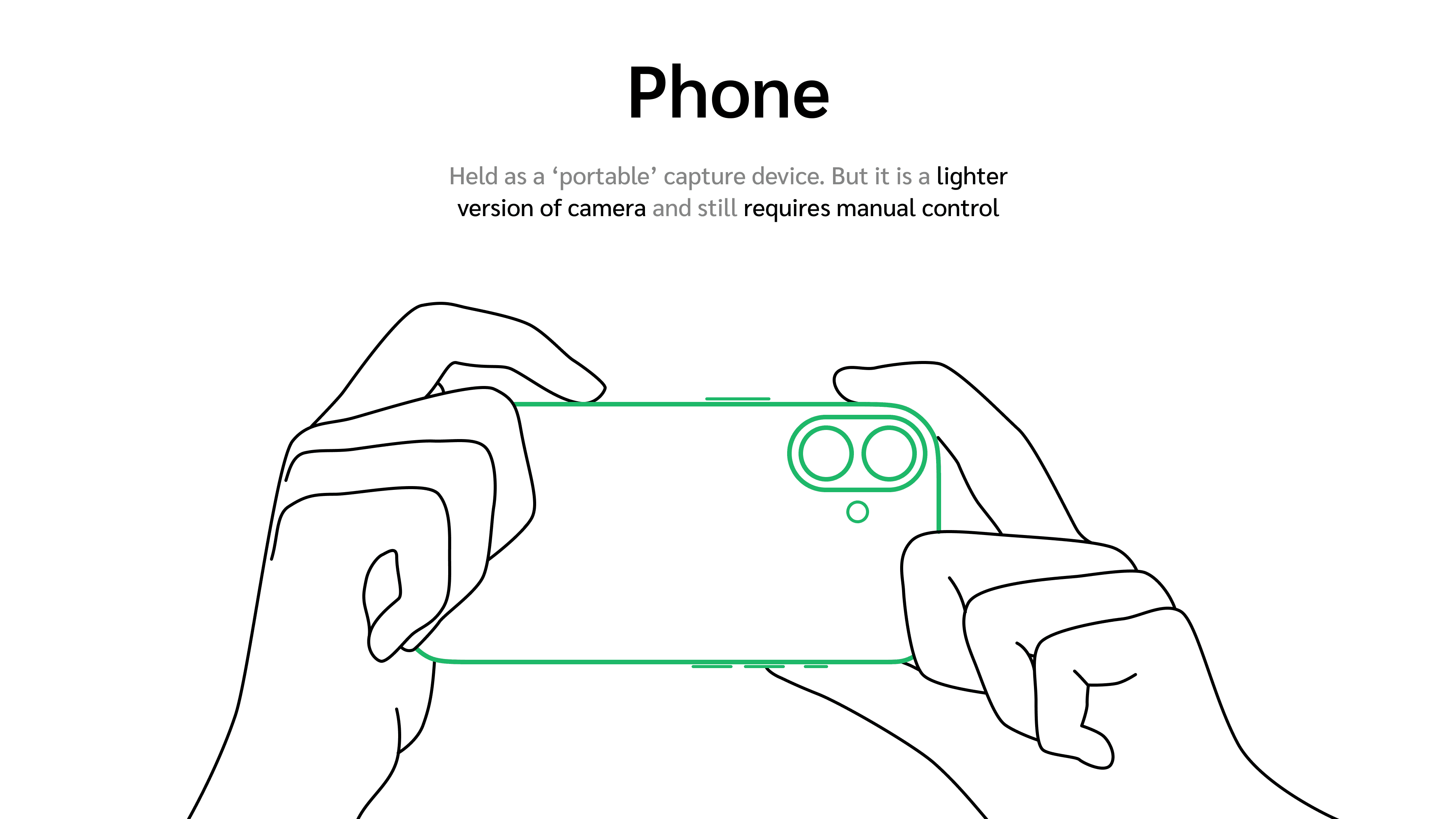

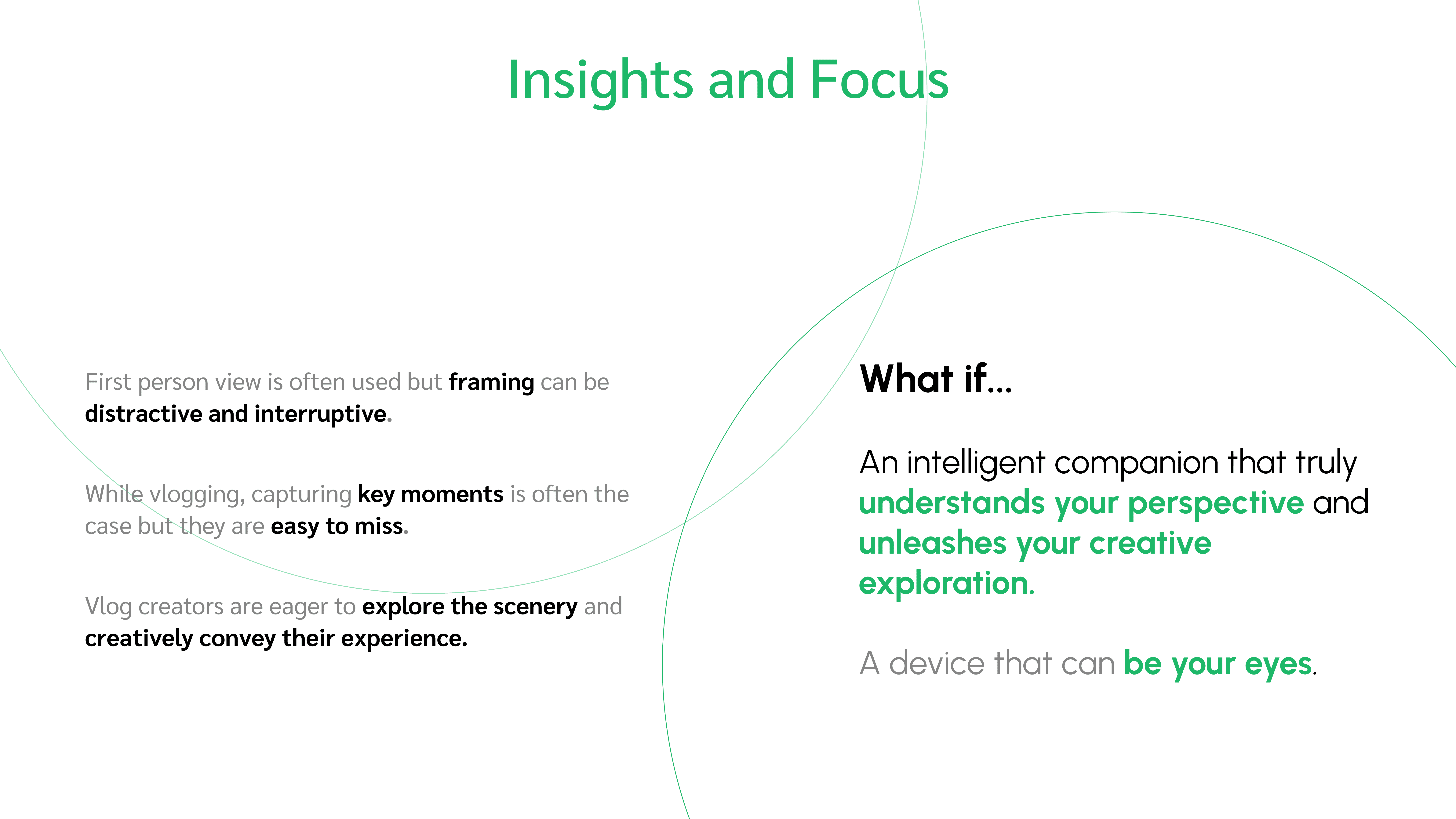

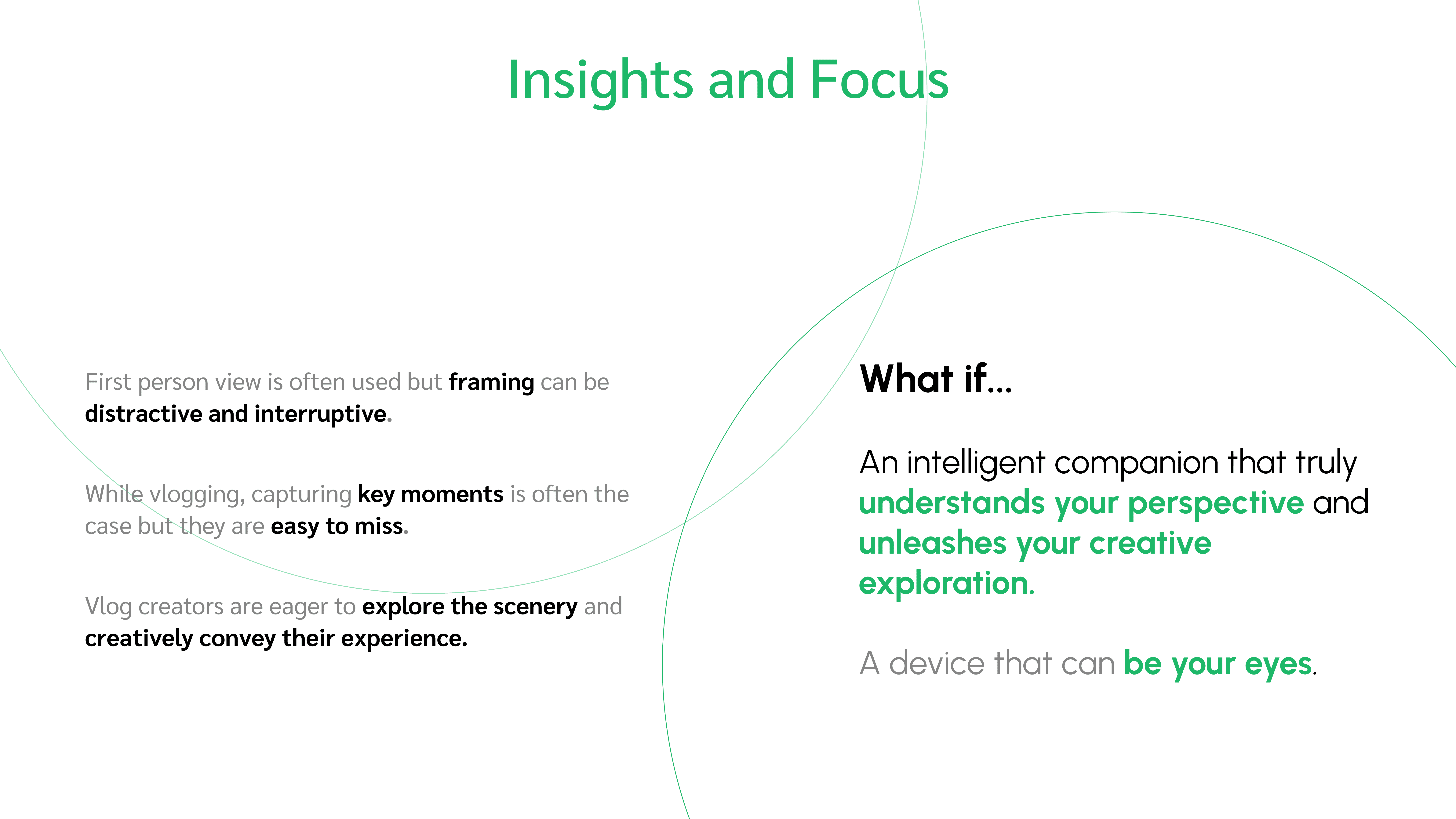

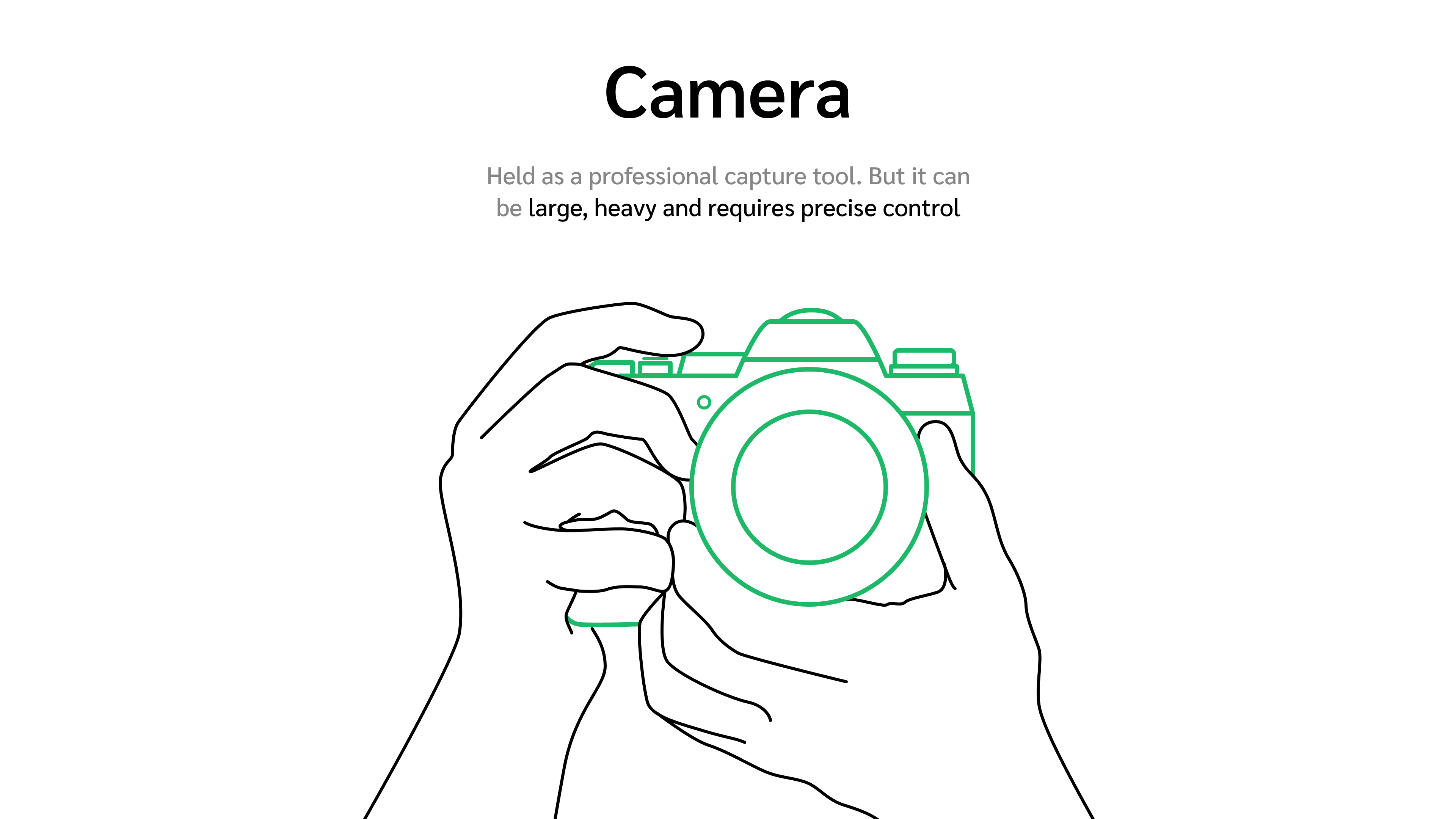

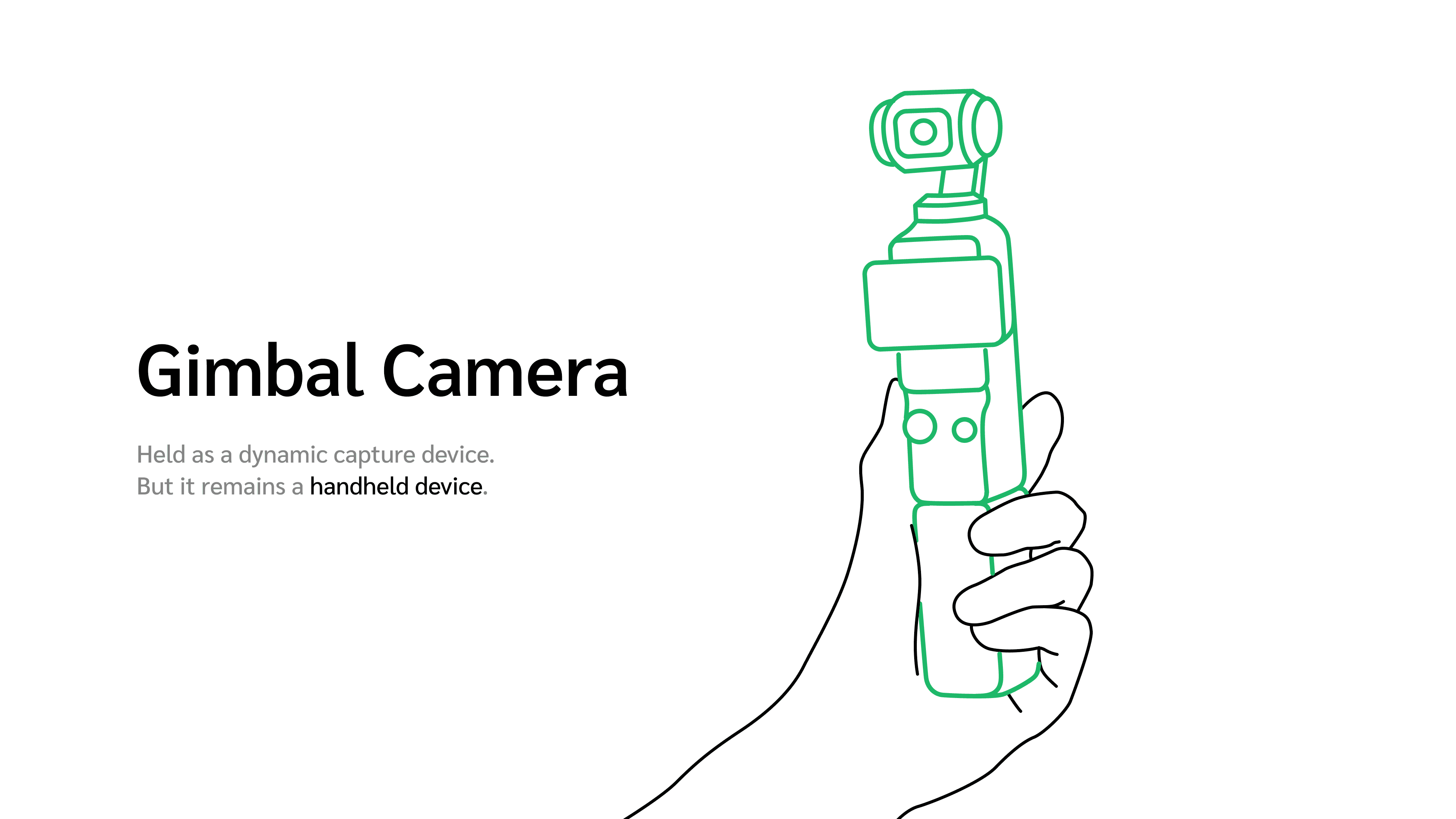

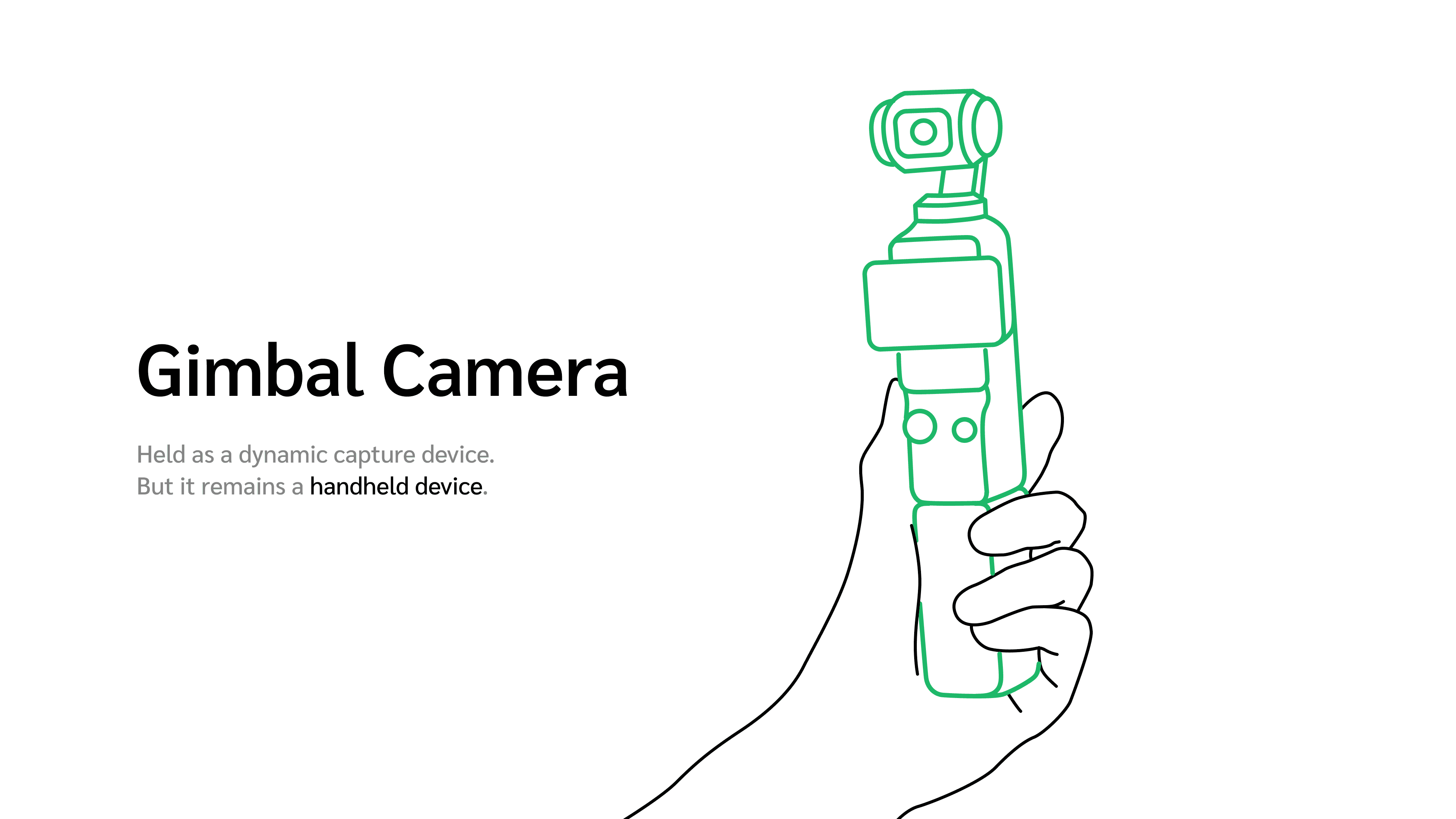

Travel vlogging has become very popular, but recording devices remain traditional. We are targeting amateur vloggers, and even they have few choices.

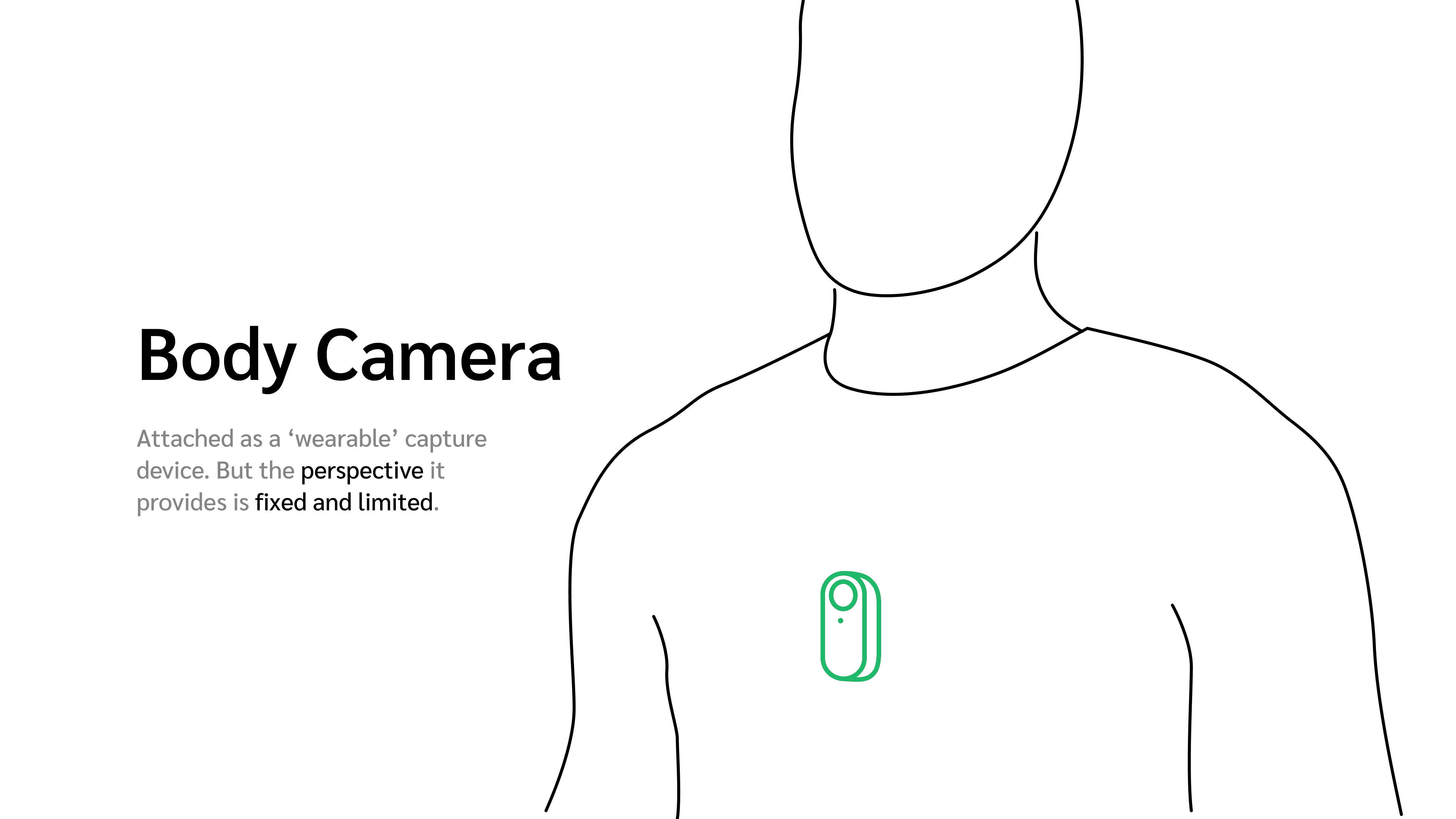

Most people record with handheld cameras, which interrupts the travel experience because their hands are always occupied. Smaller devices such as body cams exist, but they have a fixed perspective and cannot adapt dynamically.

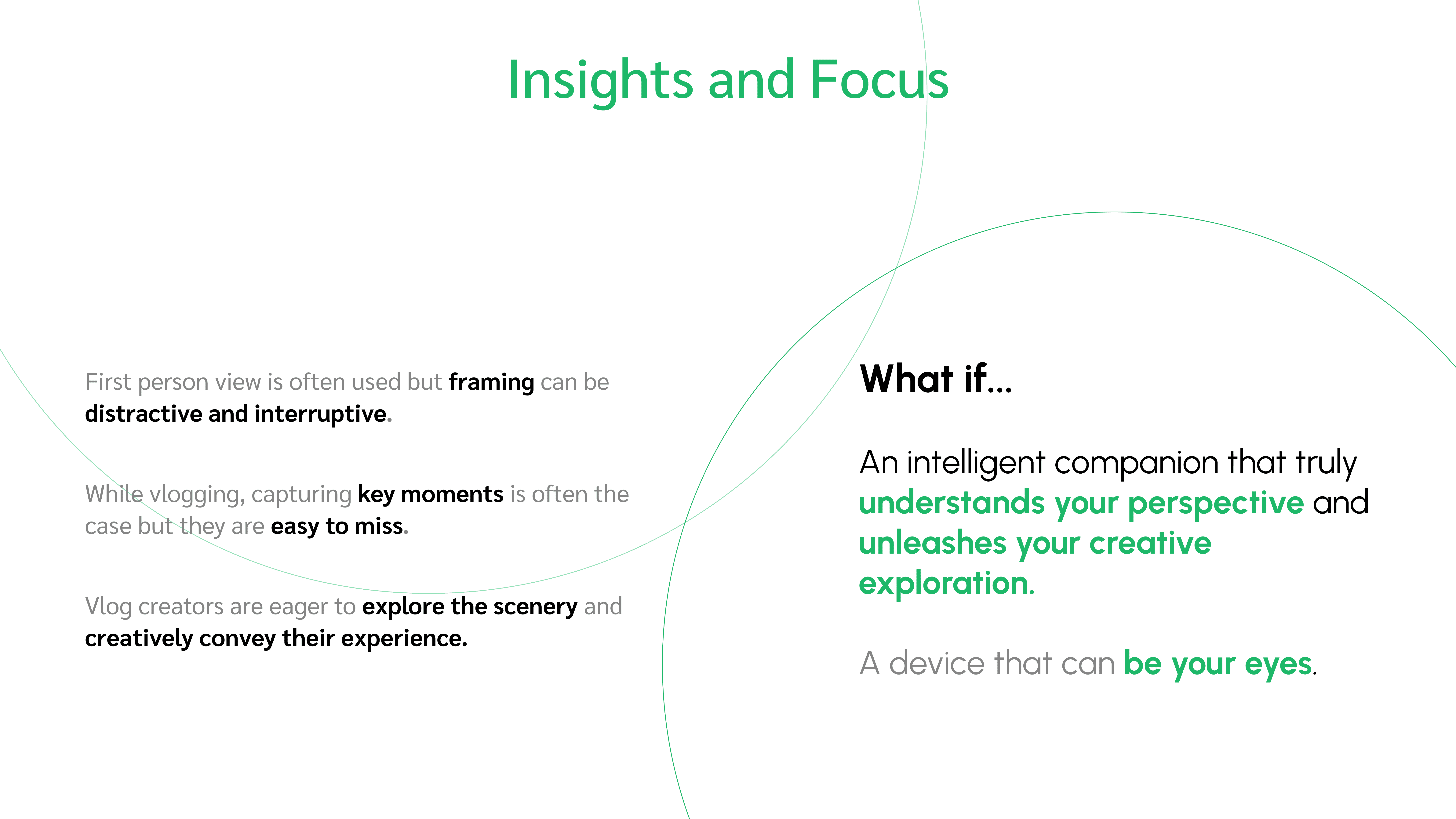

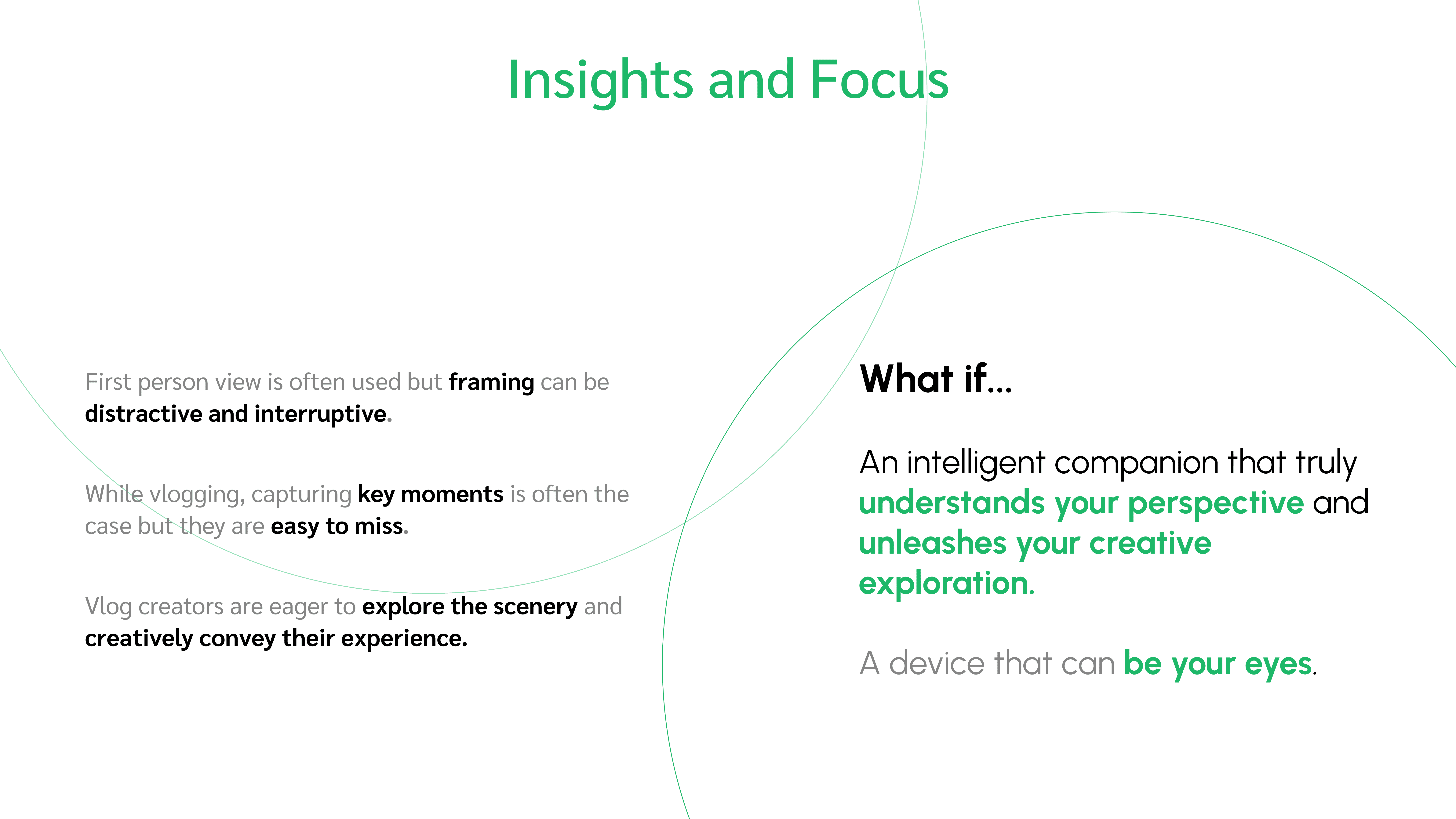

What if there were an intelligent companion that truly understands your perspective and unleashes your creative exploration?

Concept

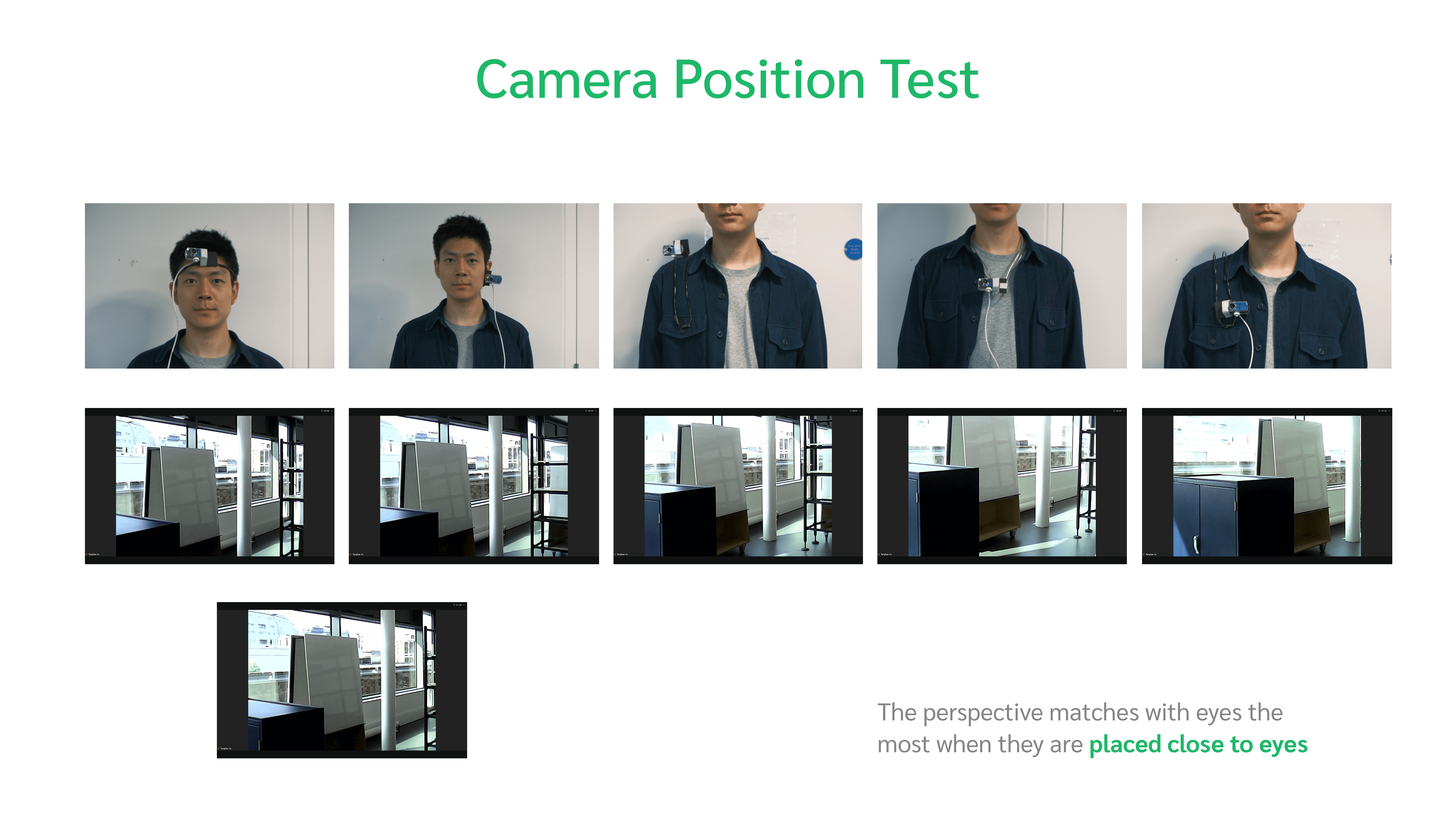

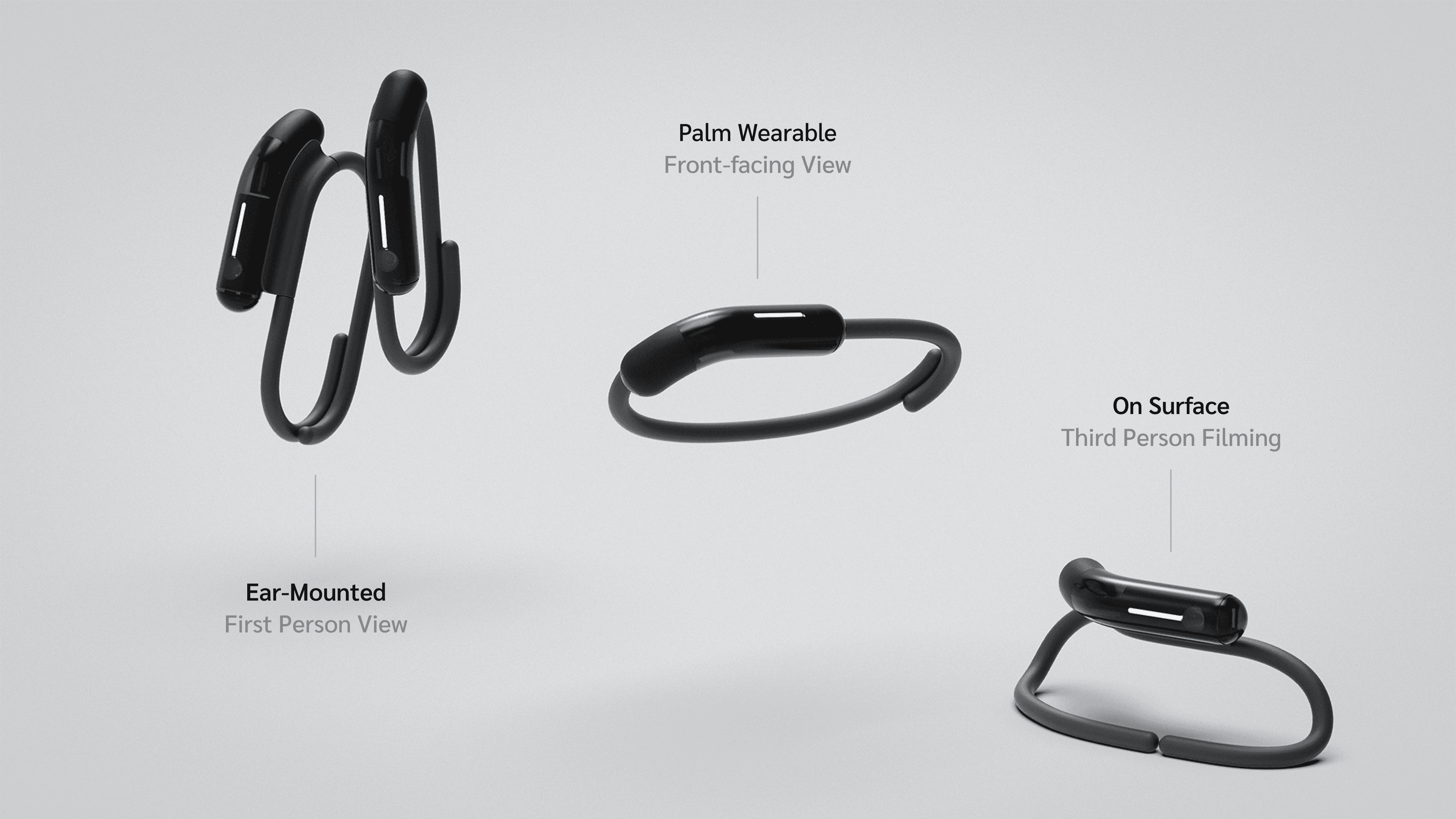

LOOP-i is a pair of AI-powered wearable cameras designed for creators, capturing content from an eye-level perspective. Worn primarily on the ears, it provides a natural, immersive view of what users focus on.

LOOP-i leverages AI in two key ways: First, it uses multimodal inputs—such as images, voice, and hand gestures—to automate content recording and simplify the process. Second, it incorporates generative AI, enabling users to creatively transform and stylize their footage based on their surroundings, unlocking new possibilities for personalized content creation.

LOOP-i follows head direction by default to capture a first-person view

Scenario View

Expected Camera View

LOOP-i centers targets in the frame based on creators’ speech and where their fingers are pointing

Scenario View

Expected Camera View

Multimodal Inference (Technical Prototyping)

Voice input and camera visuals are used to infer and track the user’s intended target (Built with OpenAI’s API and OpenCV)
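As a rough illustration of the centering step, the sketch below computes a crop window that keeps a tracked target in the middle of the frame. It is not the prototype's code: the `Box` type, the `centered_crop` function, and the premise that the inference step produces a pixel bounding box for the target are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned rectangle in pixel coordinates (hypothetical helper type)."""
    x: int  # left edge
    y: int  # top edge
    w: int  # width
    h: int  # height


def centered_crop(target: Box, frame_w: int, frame_h: int,
                  crop_w: int, crop_h: int) -> Box:
    """Return a crop_w x crop_h window centered on the target.

    The window is shifted back inside the frame when the target sits
    near an edge, so the output size never changes.
    """
    if crop_w > frame_w or crop_h > frame_h:
        raise ValueError("crop must fit inside the frame")

    # Center of the target's bounding box.
    cx = target.x + target.w // 2
    cy = target.y + target.h // 2

    # Ideal top-left corner, clamped so the crop stays within the frame.
    left = min(max(cx - crop_w // 2, 0), frame_w - crop_w)
    top = min(max(cy - crop_h // 2, 0), frame_h - crop_h)
    return Box(left, top, crop_w, crop_h)


if __name__ == "__main__":
    # Assumed example: a 100x100 target inside a 1920x1080 frame.
    print(centered_crop(Box(900, 500, 100, 100), 1920, 1080, 1280, 720))
```

Clamping rather than shrinking the window keeps the output resolution constant, at the cost of the target drifting off-center near the frame edges.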

Crop hand poses provide an intuitive way to quickly frame the view

Gesture-based interaction offers more opportunities to capture key moments

ML-Based Gesture Interaction (Technical Prototyping)

Classic crop hand gestures are used to enable intuitive content capture (Supported by Google’s MediaPipe)
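One way to turn a crop gesture into a framing rectangle is sketched below. It assumes each hand arrives as a list of 21 normalized `(x, y)` landmarks in the MediaPipe Hands ordering, where index 4 is the thumb tip and index 8 is the index fingertip. The function name `frame_from_crop_gesture` and the `min_size` threshold are hypothetical, and the sketch does not call MediaPipe itself.

```python
from typing import List, Optional, Tuple

# Landmark indices in the MediaPipe Hands 21-point layout.
THUMB_TIP = 4
INDEX_TIP = 8

Point = Tuple[float, float]           # normalized (x, y), each in [0, 1]
Rect = Tuple[int, int, int, int]      # (x, y, width, height) in pixels


def frame_from_crop_gesture(left_hand: List[Point], right_hand: List[Point],
                            frame_w: int, frame_h: int,
                            min_size: float = 0.1) -> Optional[Rect]:
    """Bound the thumb and index fingertips of both hands with a rectangle.

    Returns None when the rectangle is smaller than min_size (as a
    fraction of the frame) in either dimension, which is treated here
    as "no crop gesture".
    """
    corners = [hand[i] for hand in (left_hand, right_hand)
               for i in (THUMB_TIP, INDEX_TIP)]

    # Clamp to the visible frame; landmarks can fall slightly outside it.
    xs = [min(max(x, 0.0), 1.0) for x, _ in corners]
    ys = [min(max(y, 0.0), 1.0) for _, y in corners]

    x0, x1 = min(xs), max(xs)
    y0, y1 = min(ys), max(ys)
    if x1 - x0 < min_size or y1 - y0 < min_size:
        return None

    # Convert normalized coordinates to pixels.
    return (round(x0 * frame_w), round(y0 * frame_h),
            round((x1 - x0) * frame_w), round((y1 - y0) * frame_h))
```

The resulting rectangle could then be used as the region to capture or to pass on for stylisation. A real pipeline would likely also check that the gesture is held steady for a few frames before triggering.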

Combining crop gestures with Gen AI enables stylised, creative filming

Beyond video, content generation can be applied to photos and selected targets

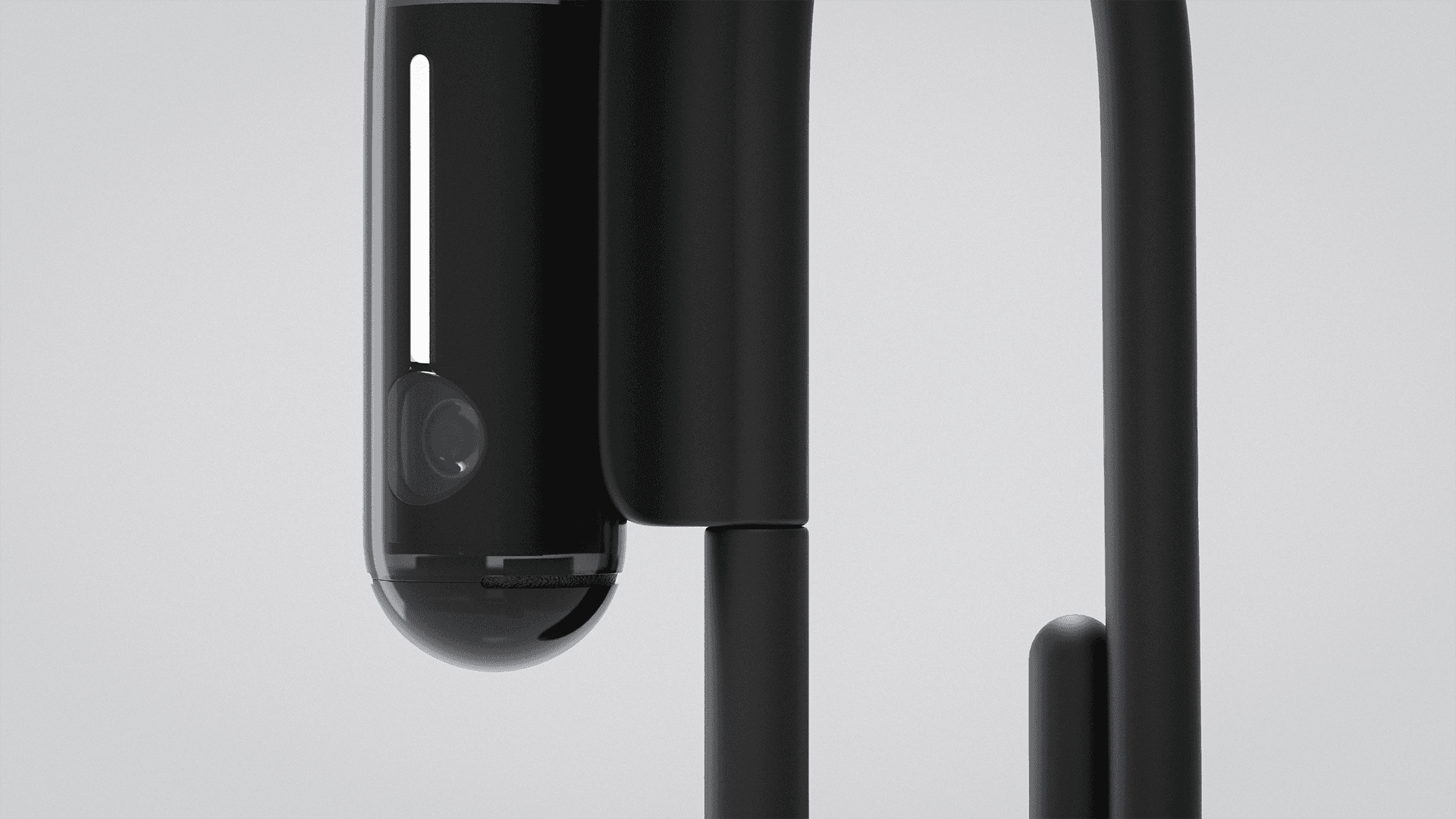

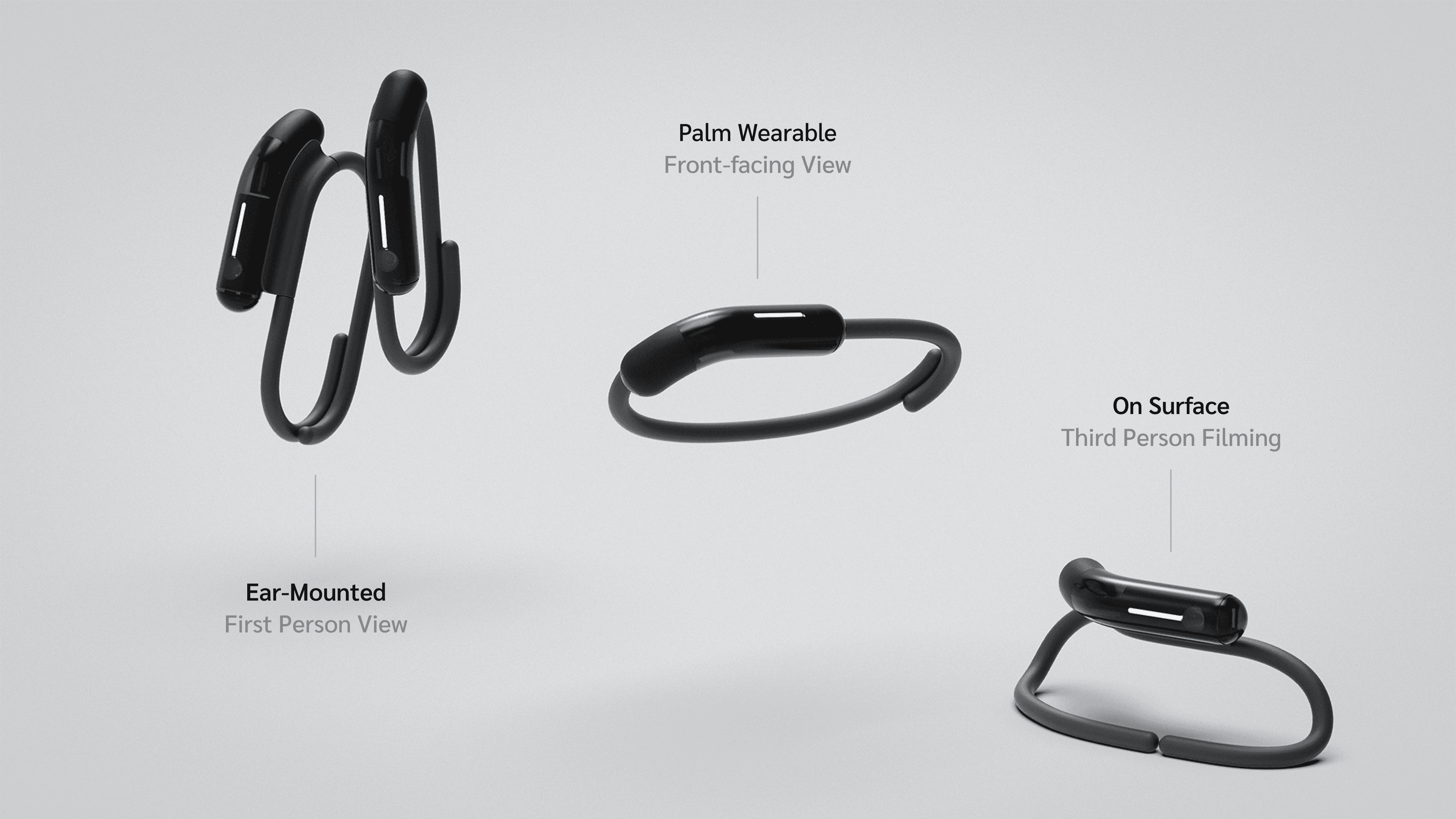

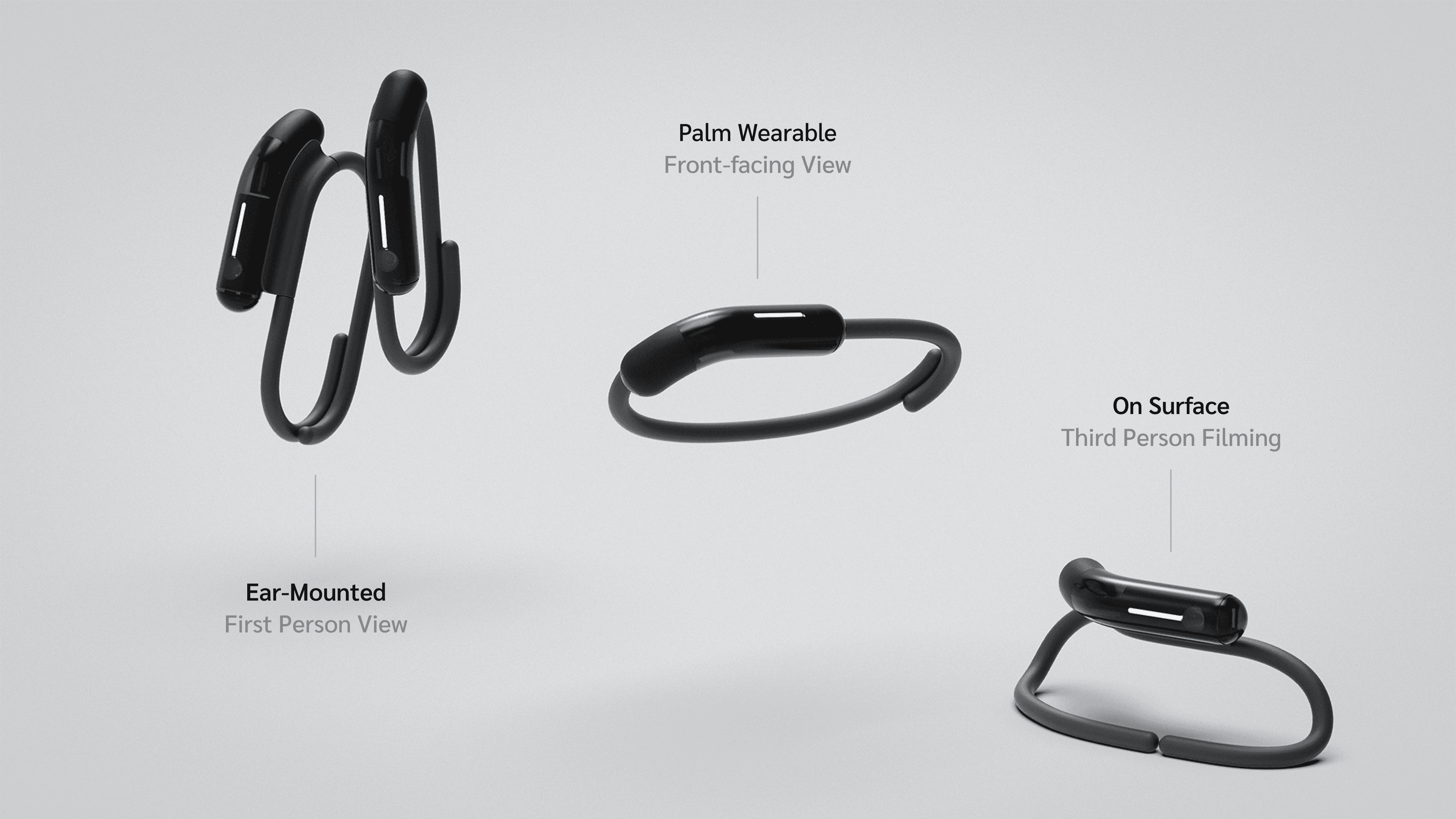

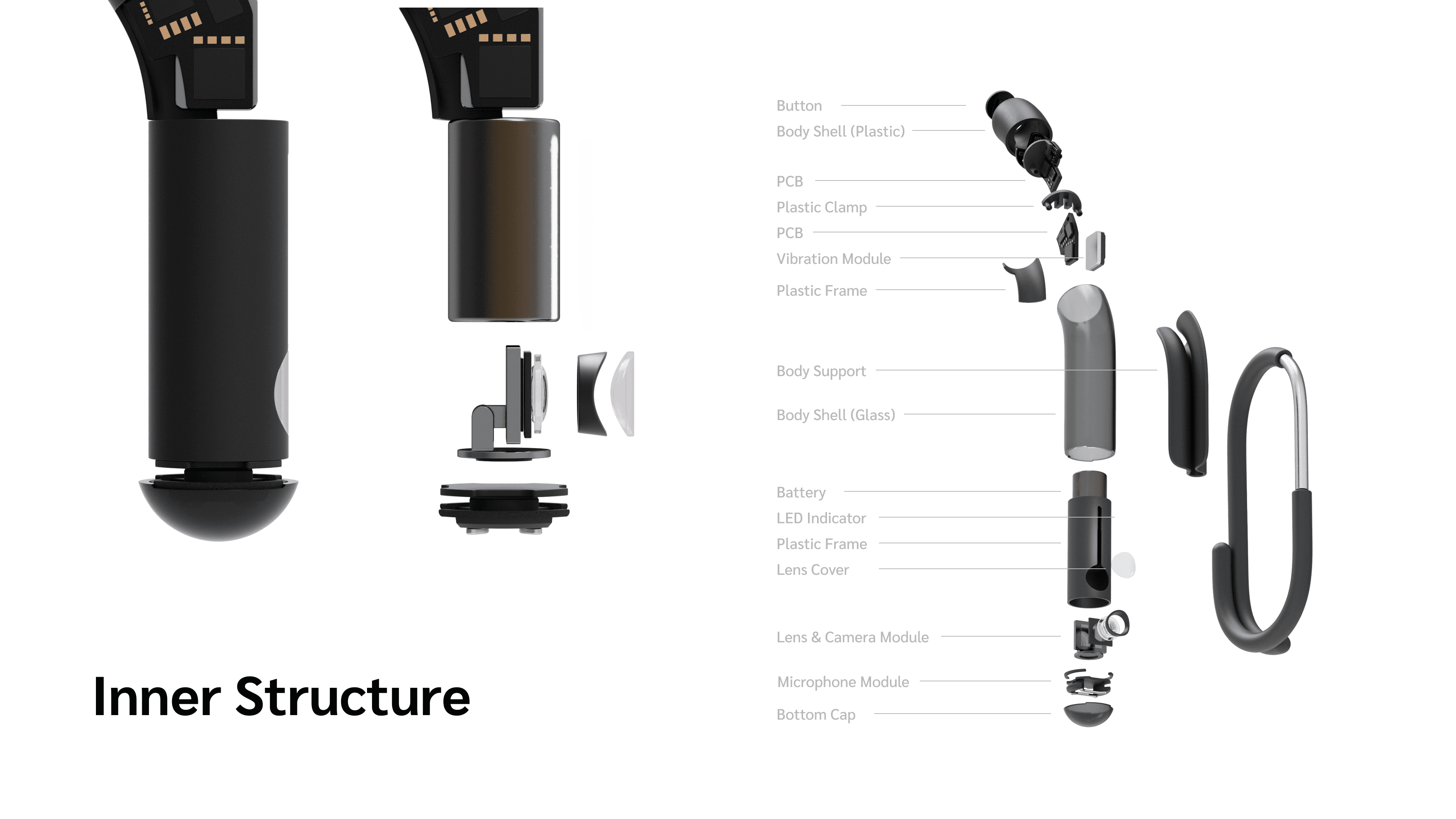

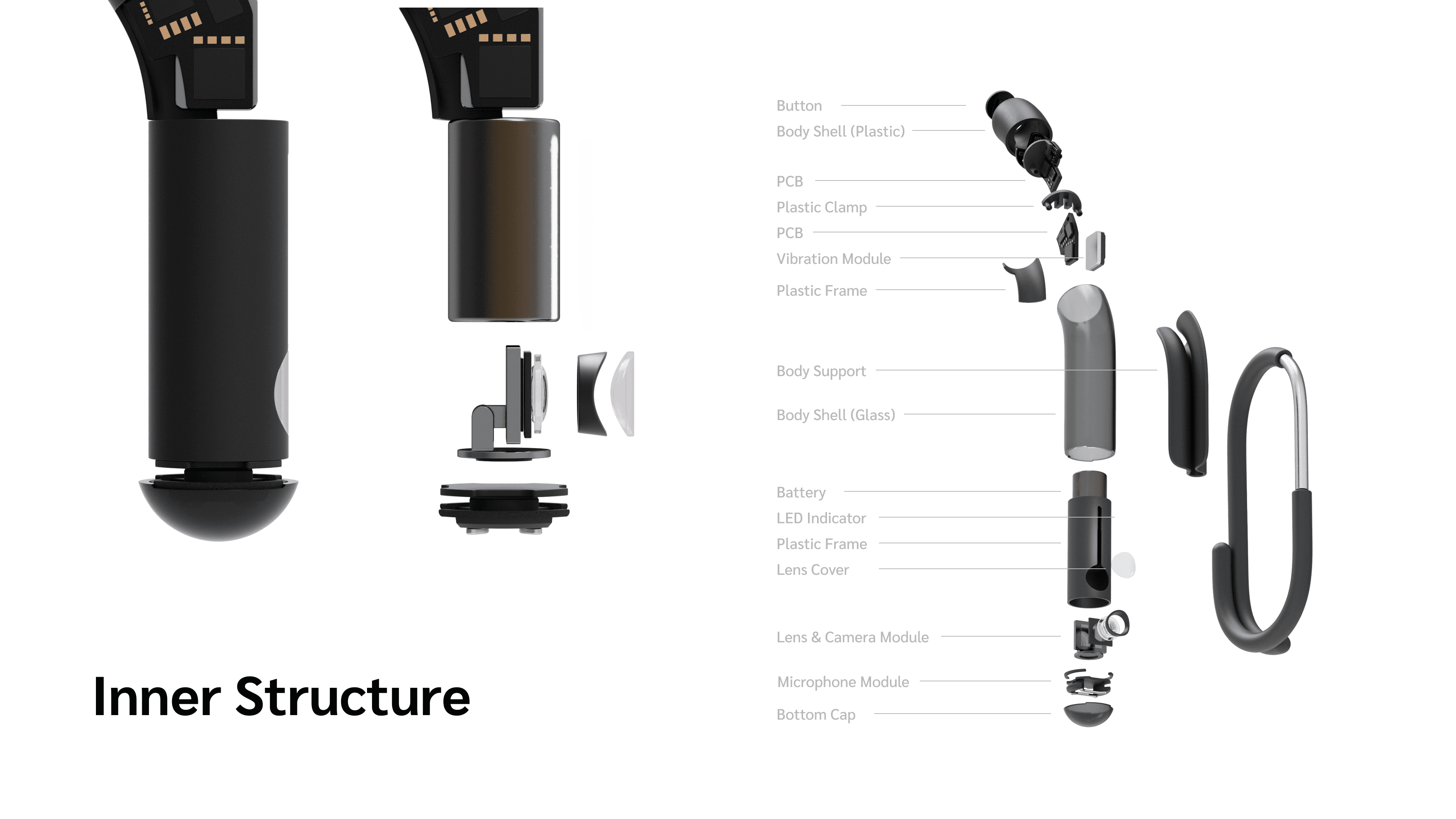

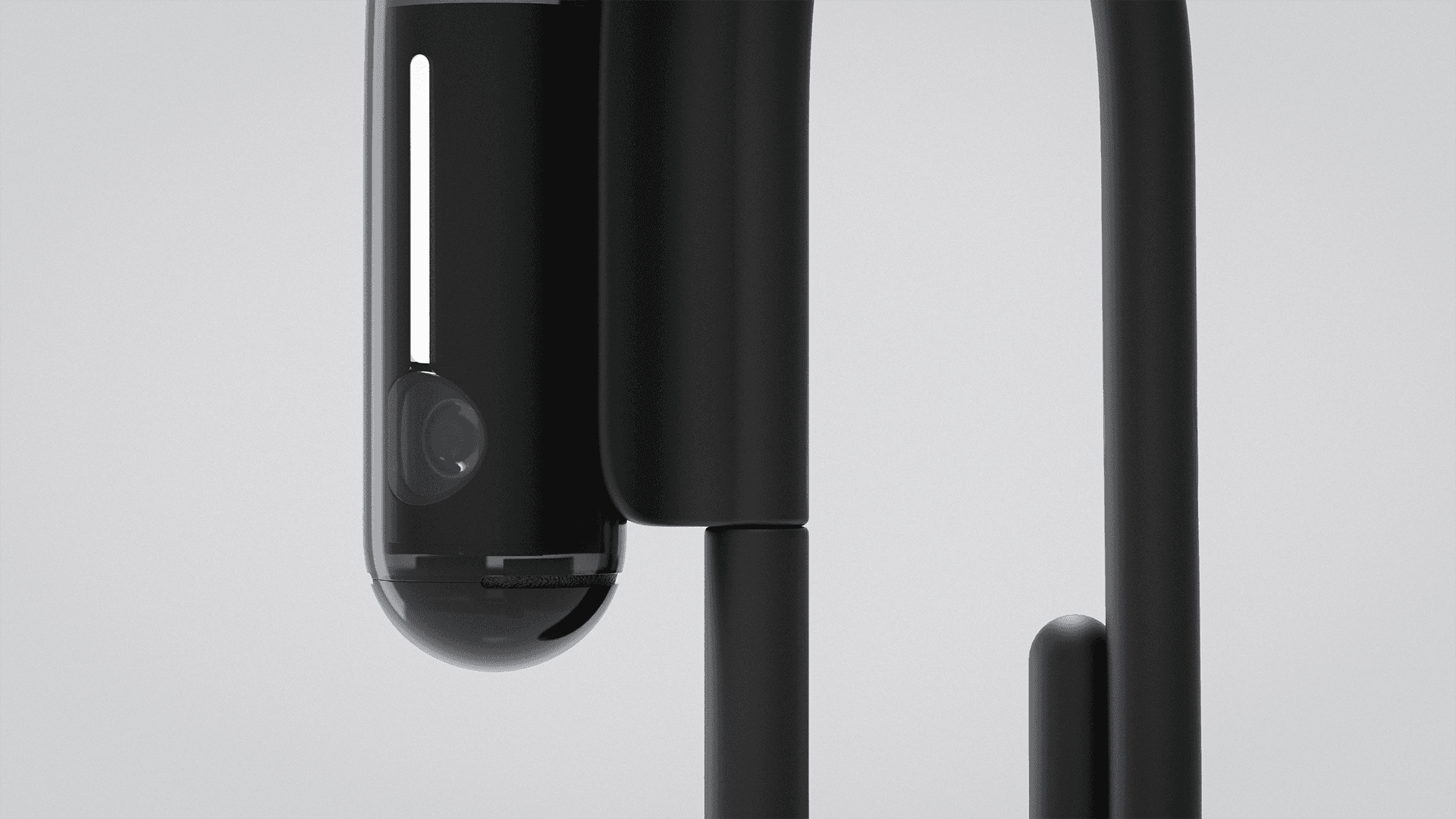

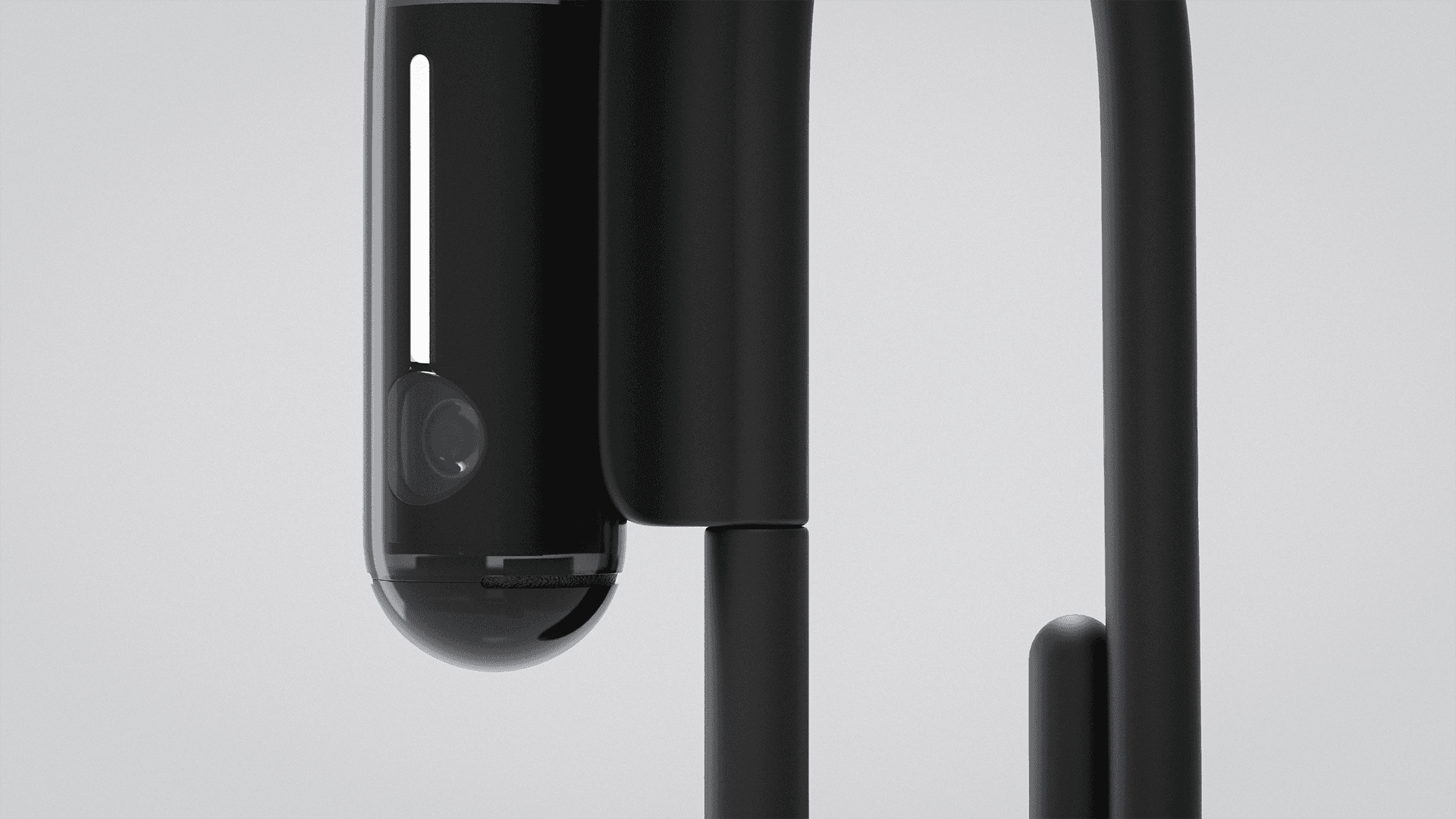

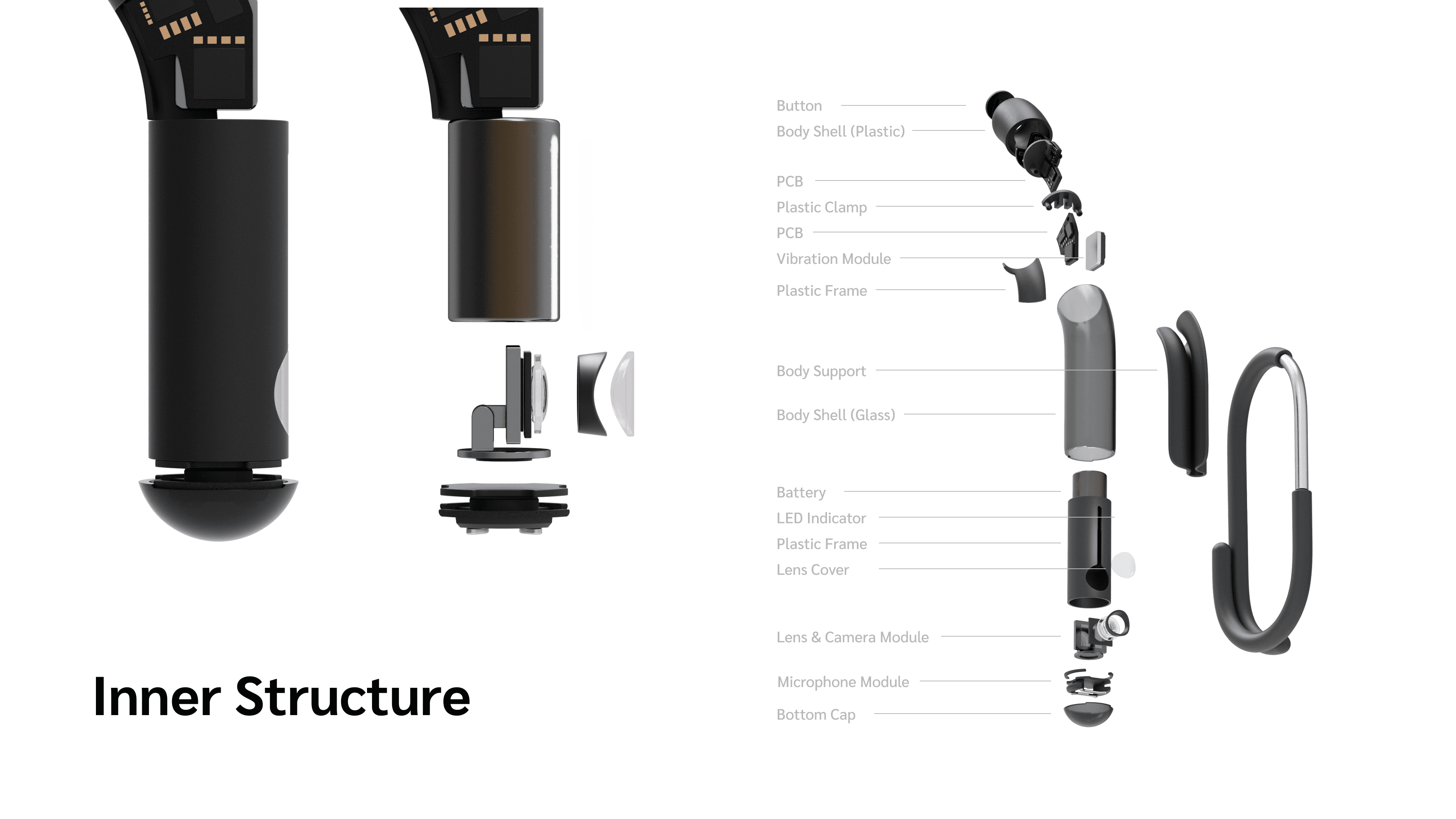

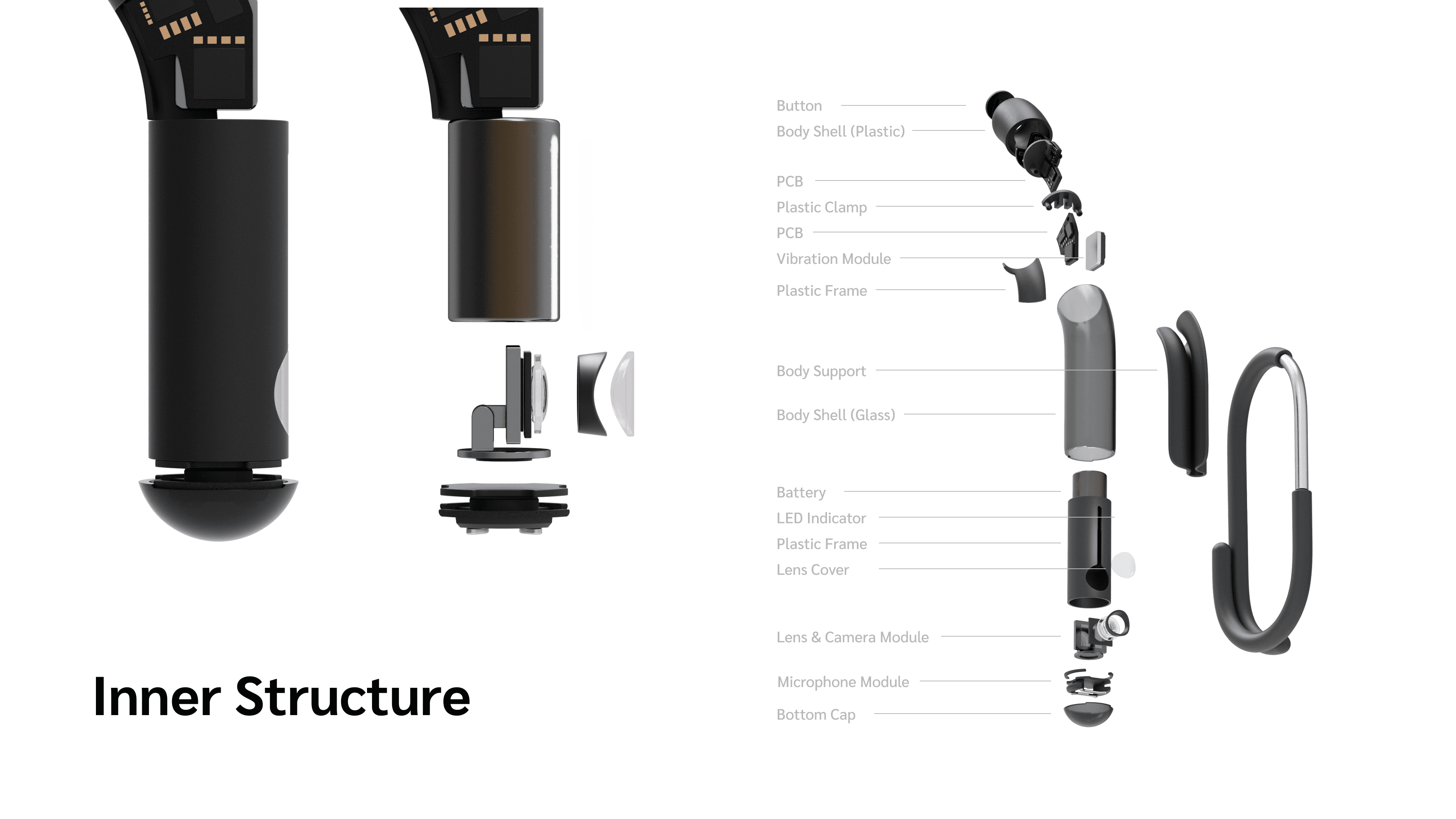

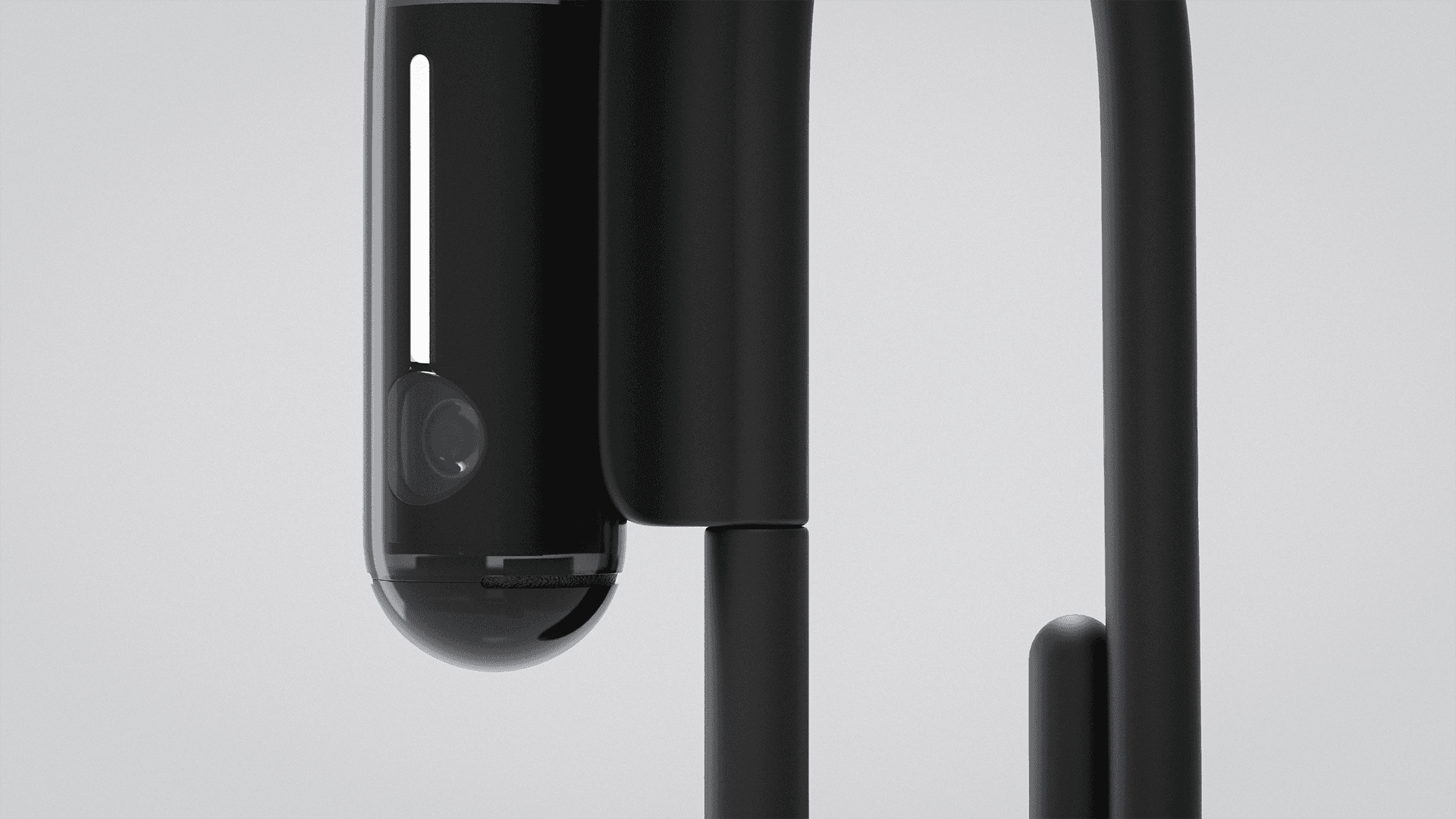

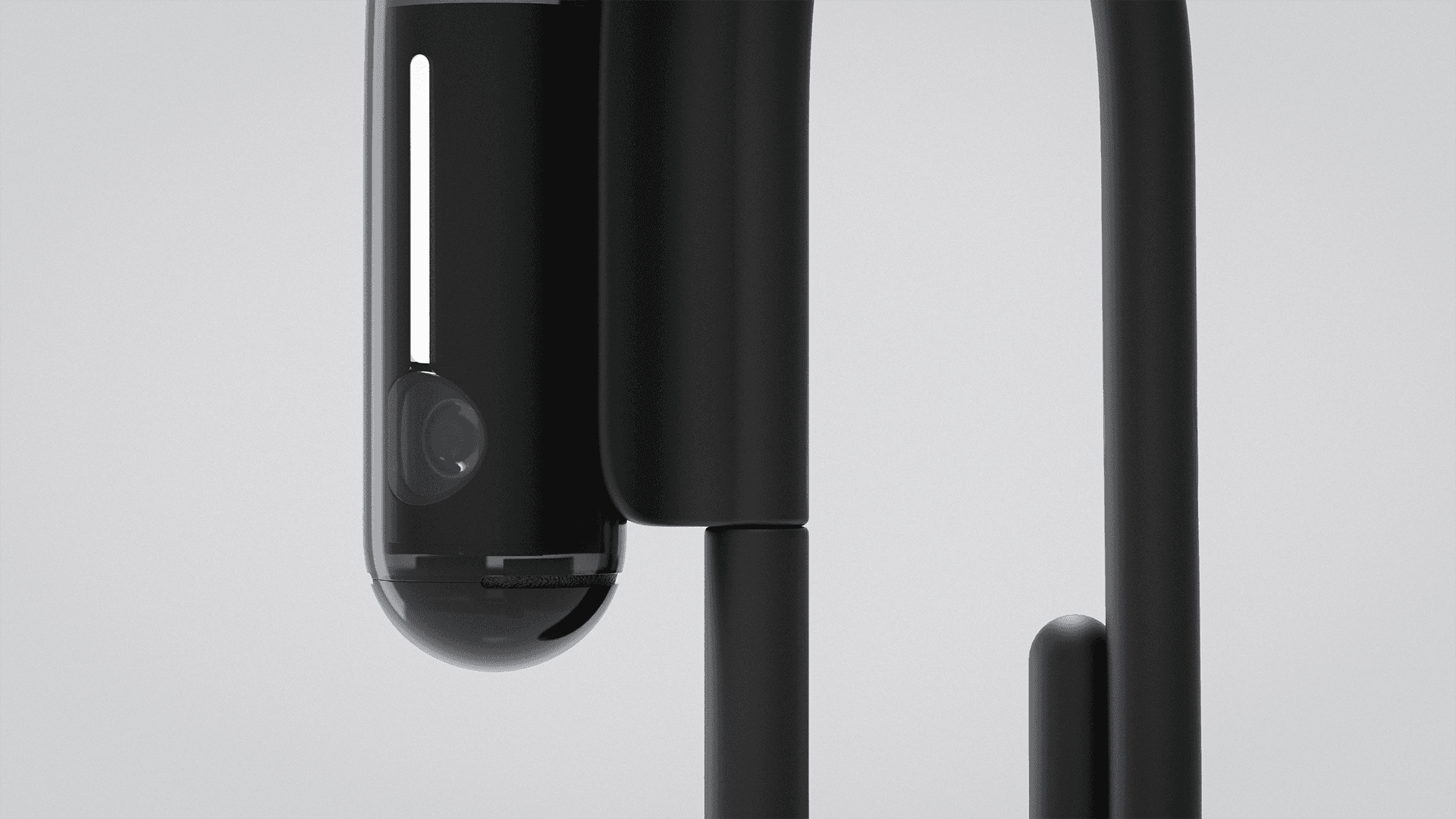

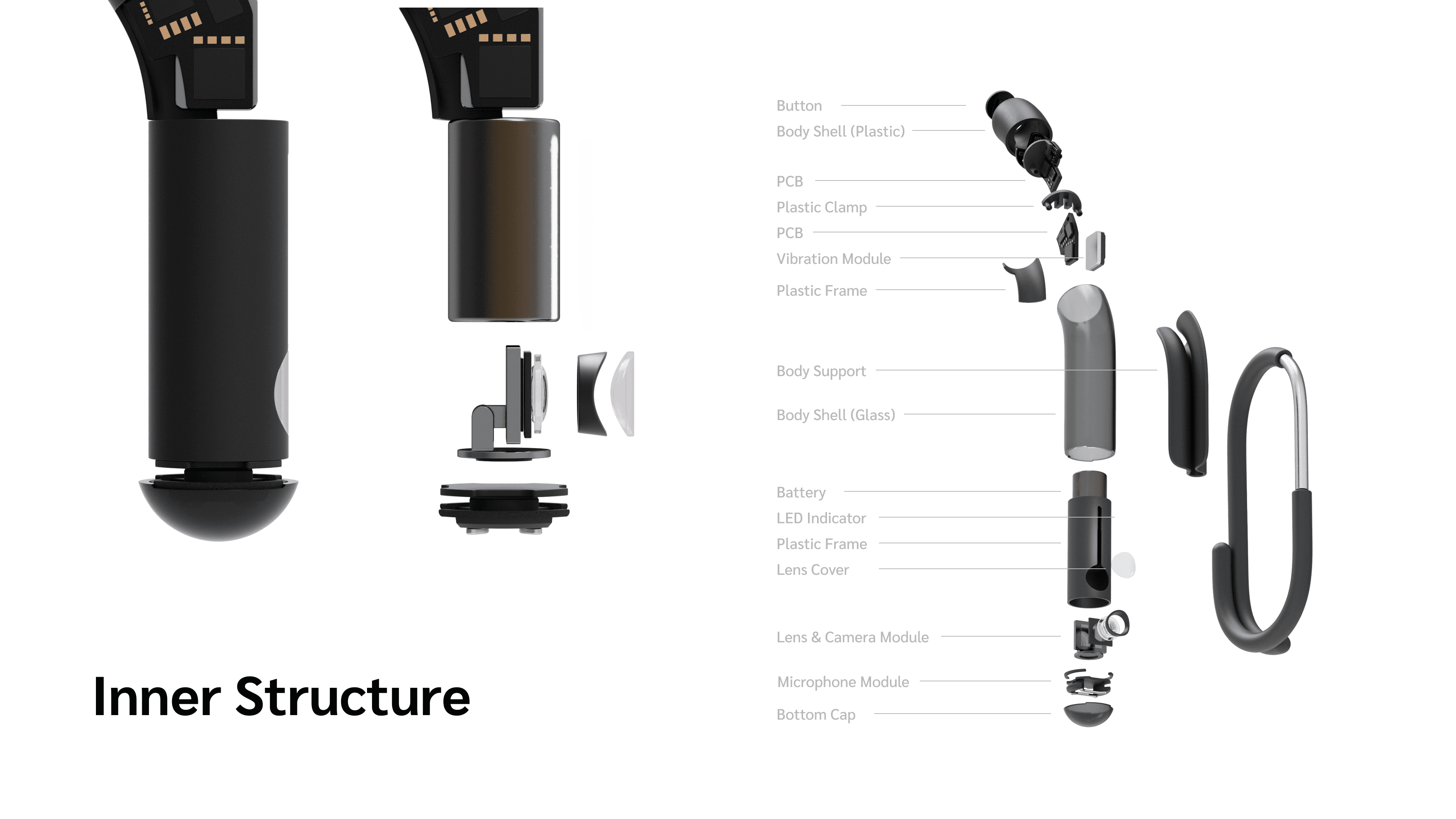

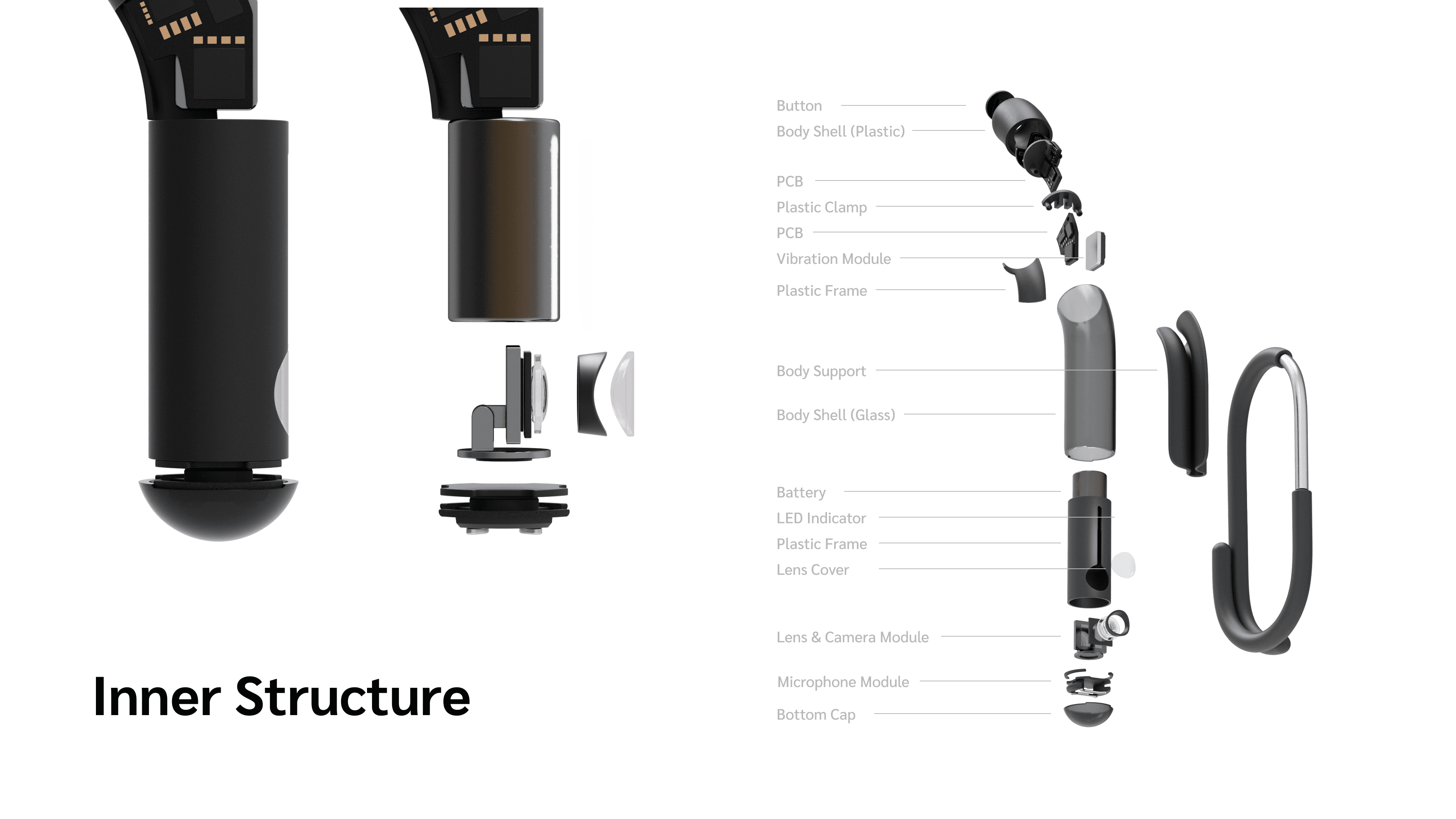

Product Details

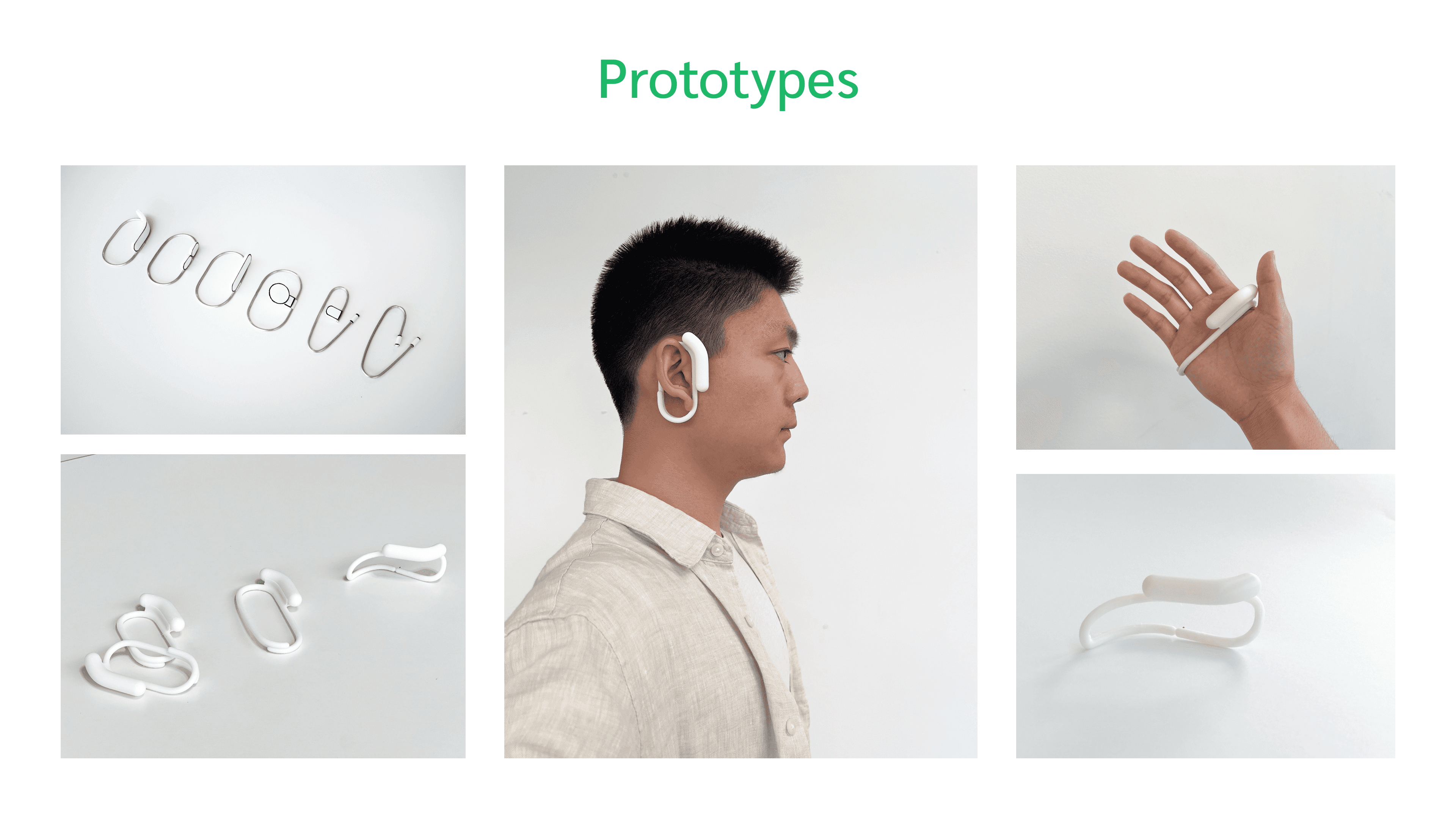

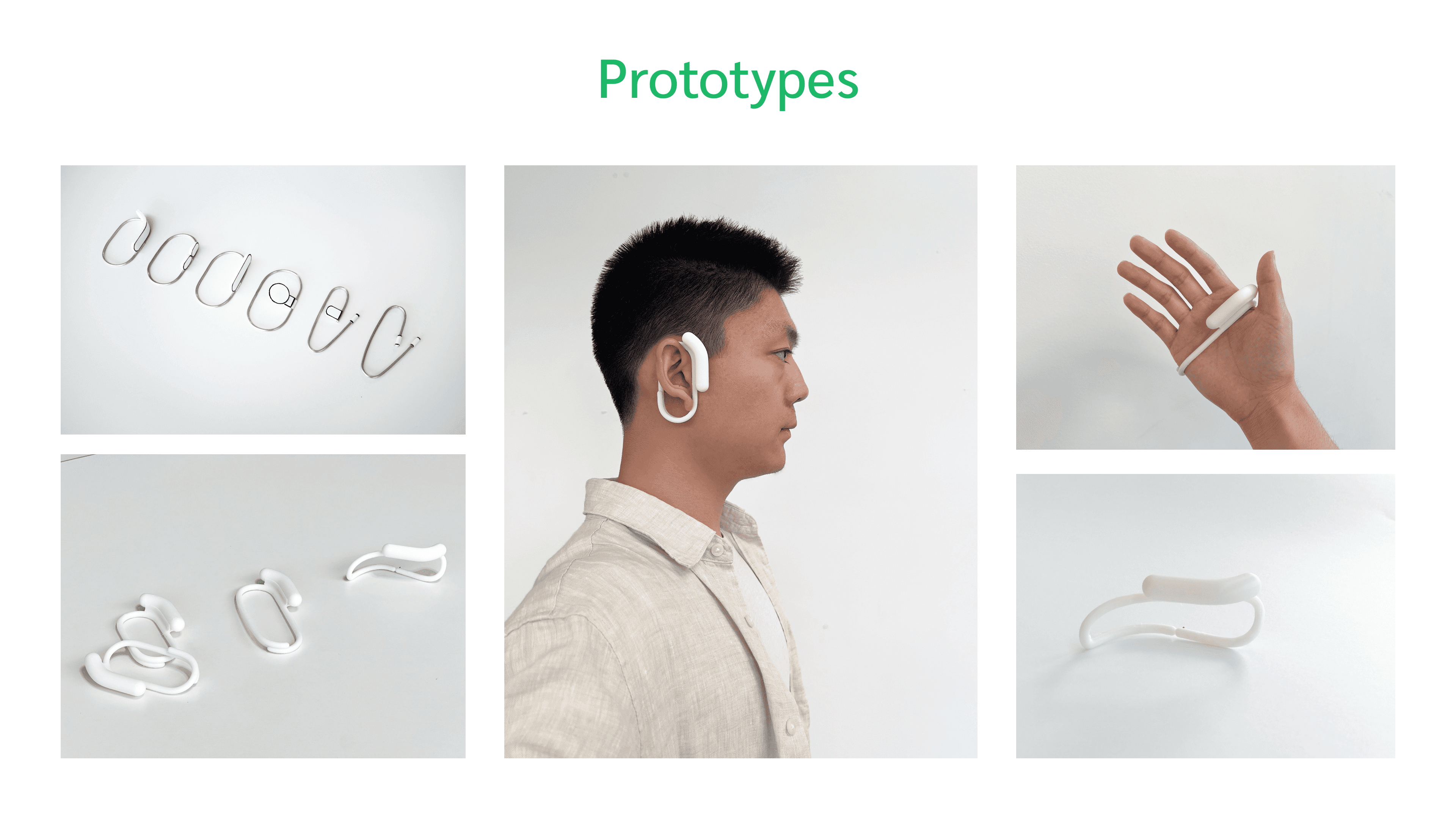

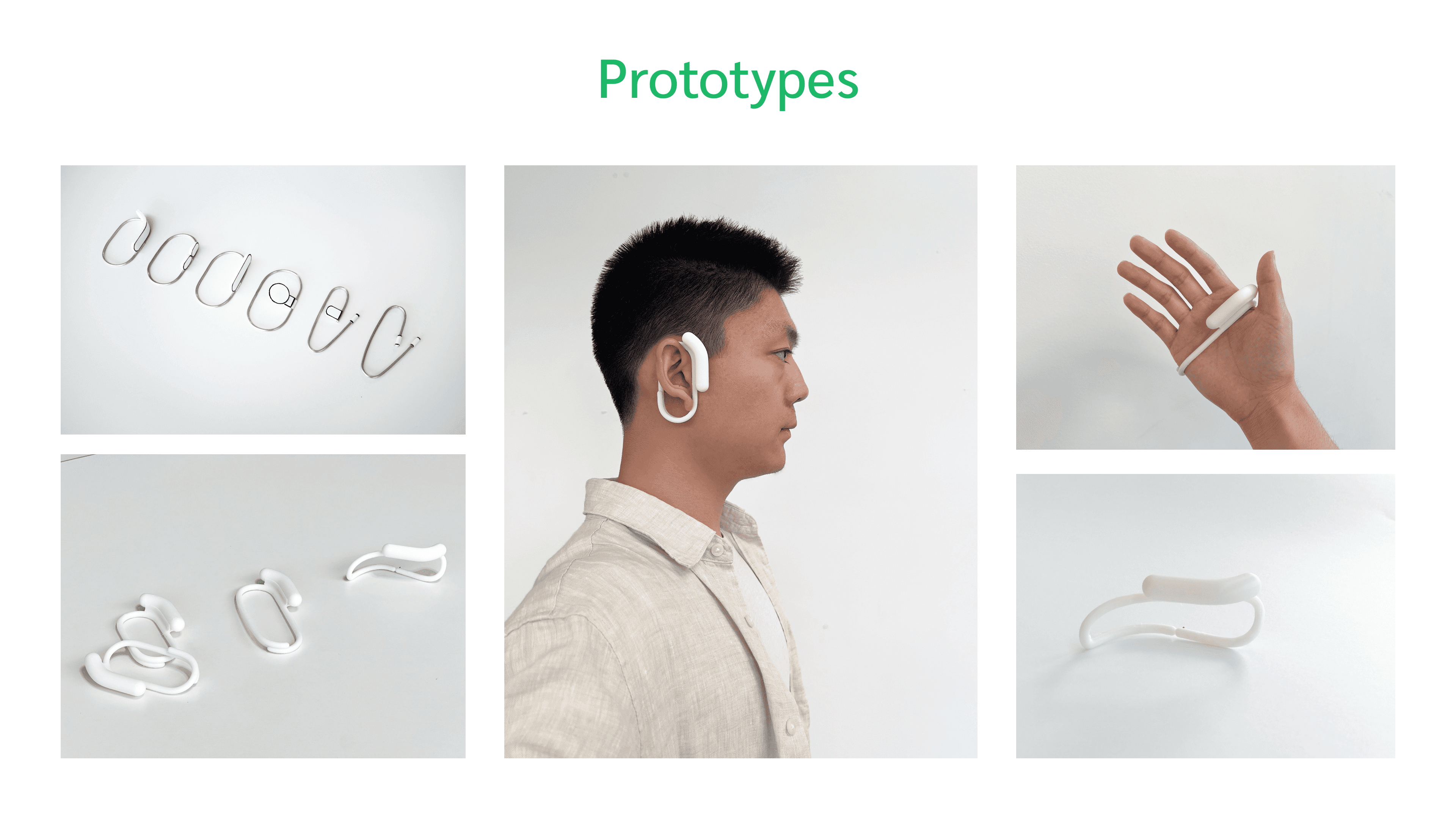

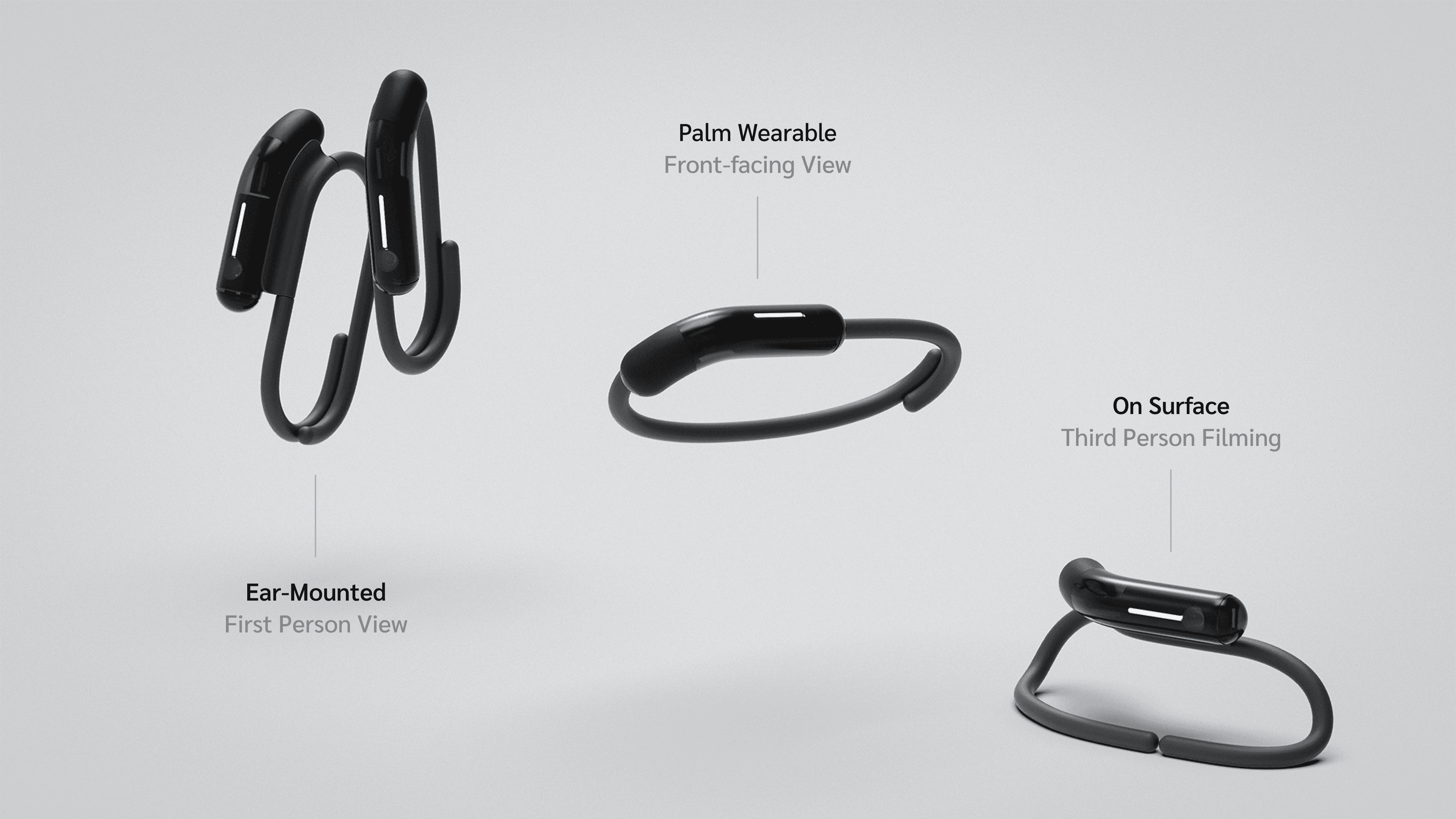

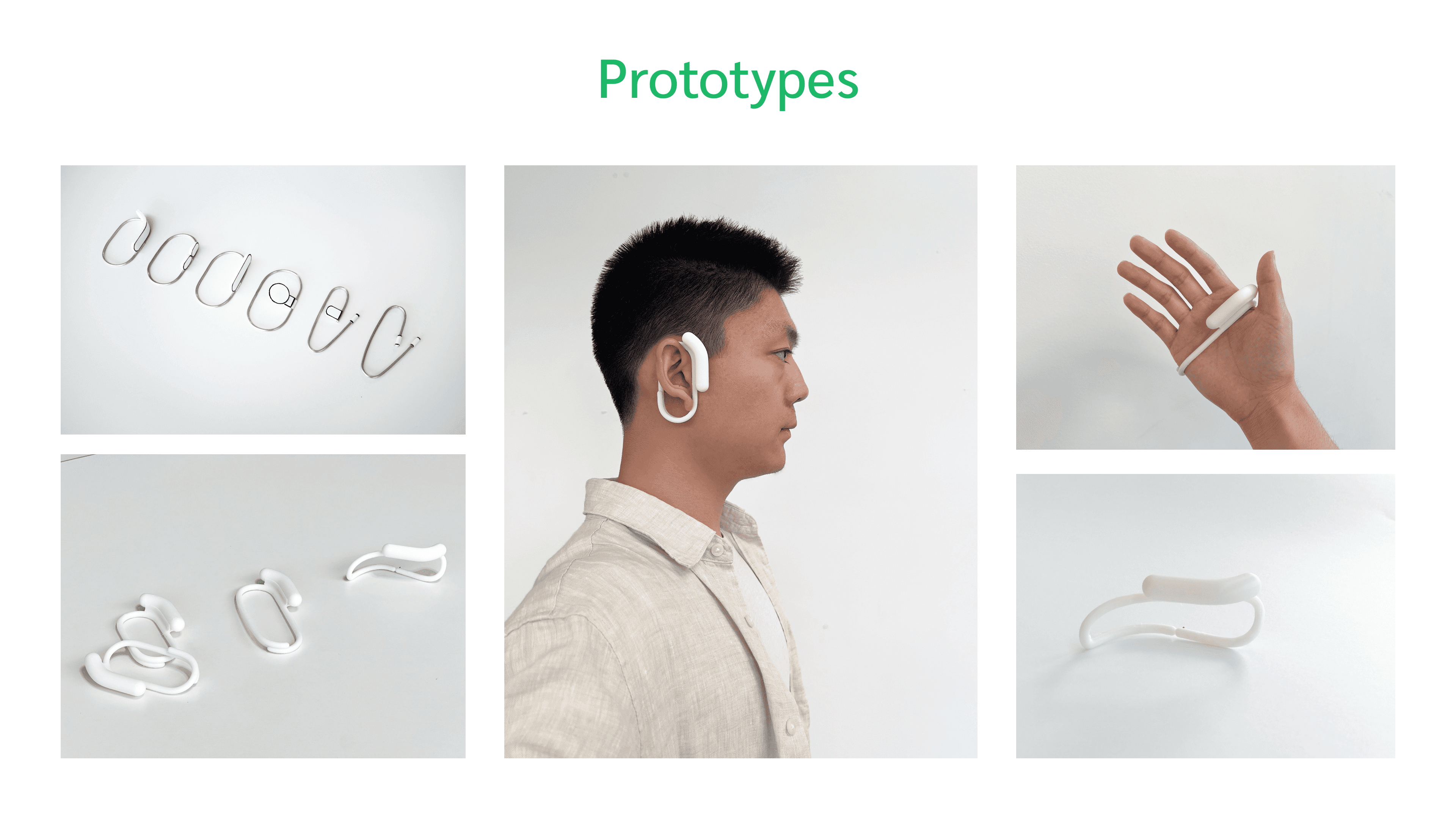

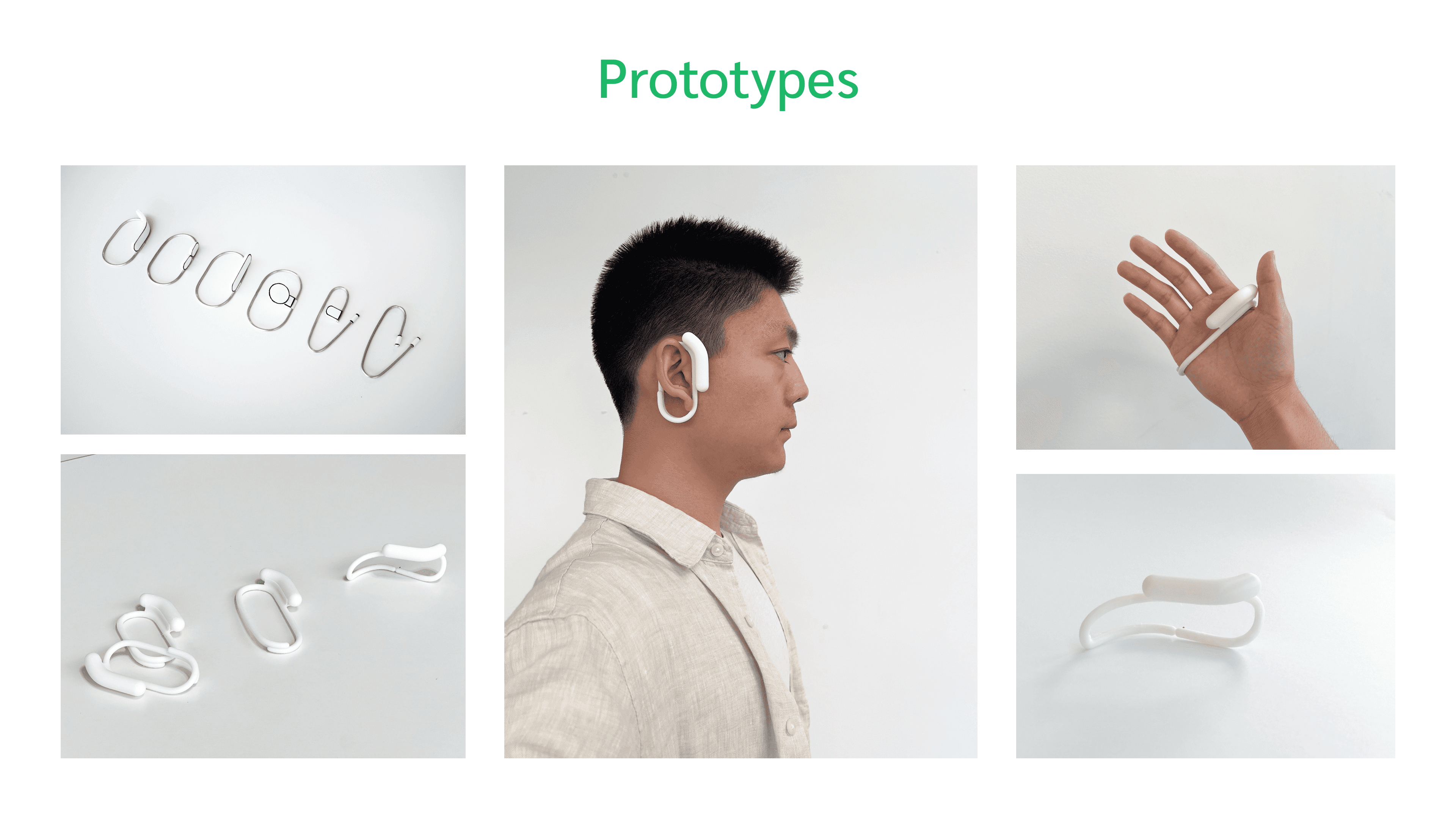

The design language is approachable and futuristic. Both the body and the support use rounded forms. The combination of dark glass, camera, and LED gives the feeling that the device is alive and understands people.

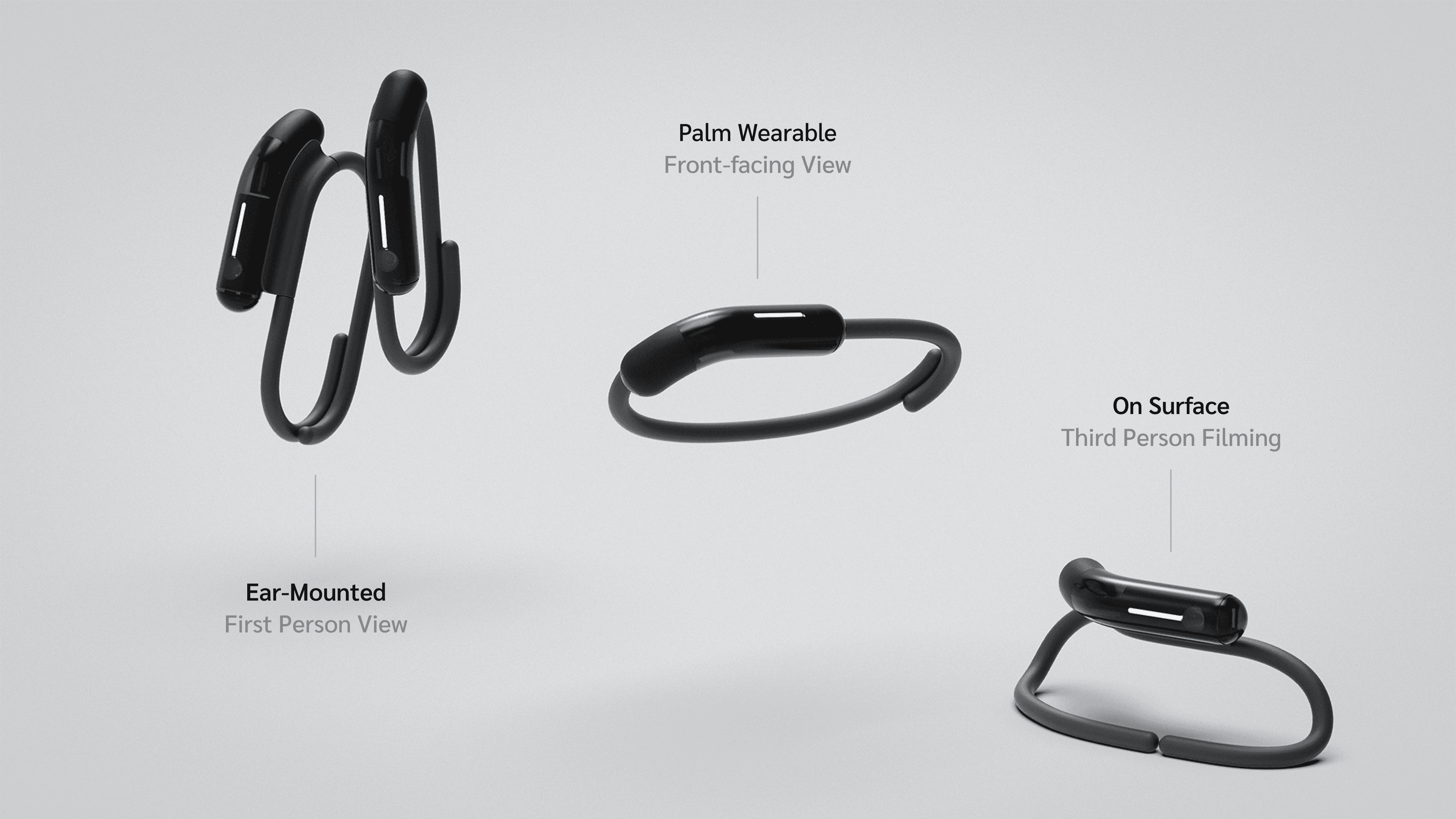

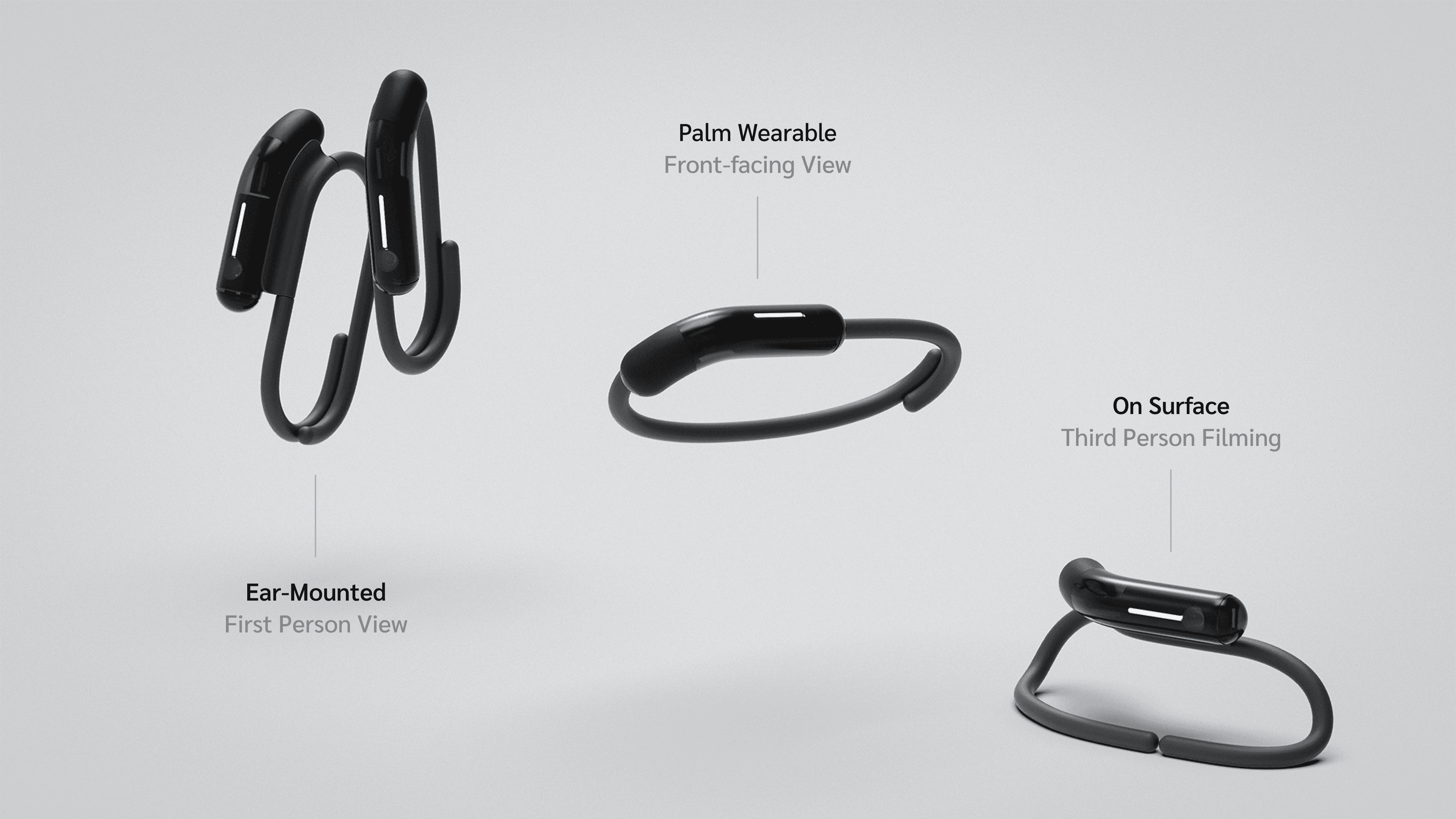

LOOP-i is also designed to be used in diverse ways. By default it is ear-mounted, but it can also be attached to the palm for selfies or placed on a surface to capture a third-person view.

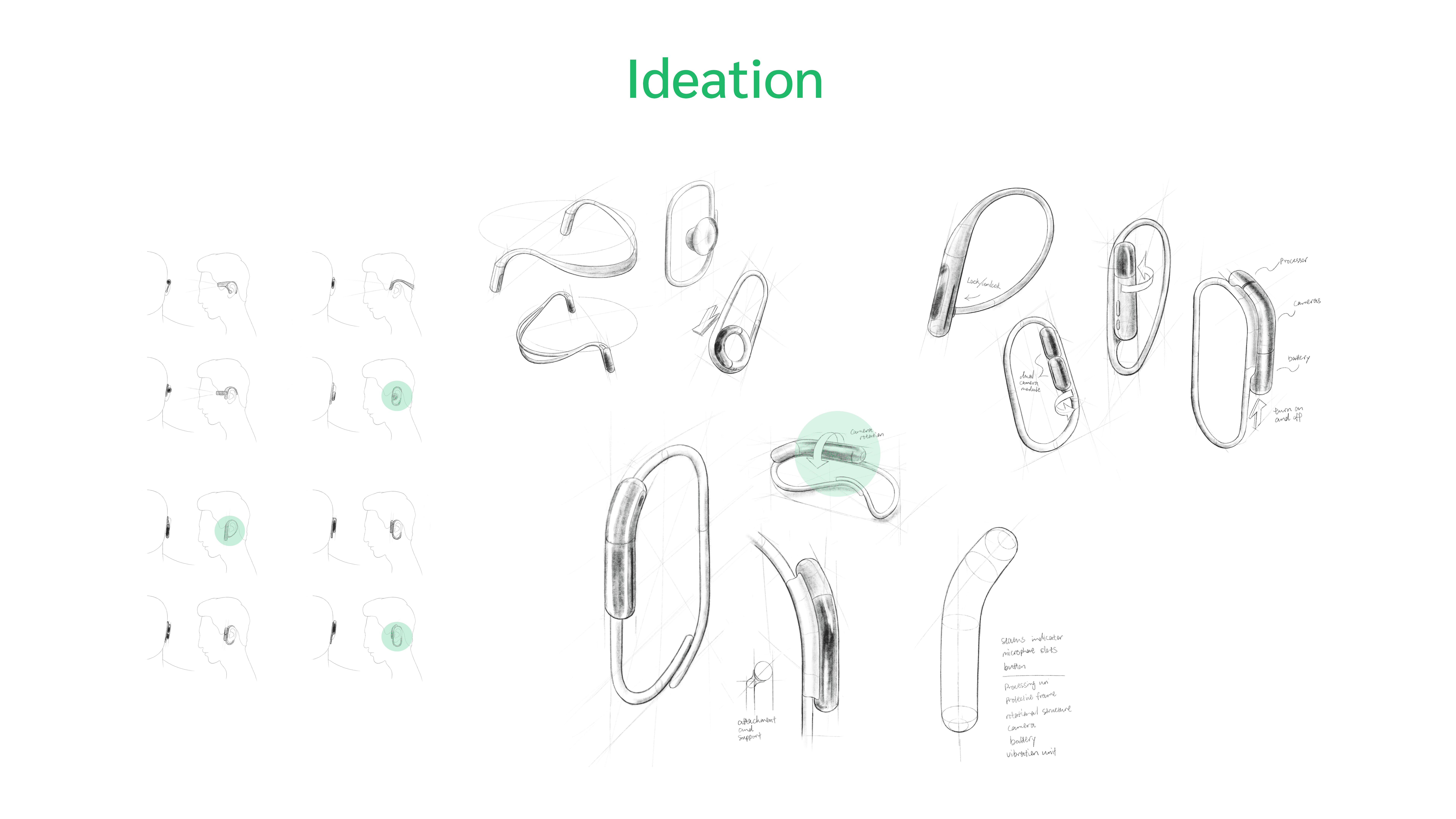

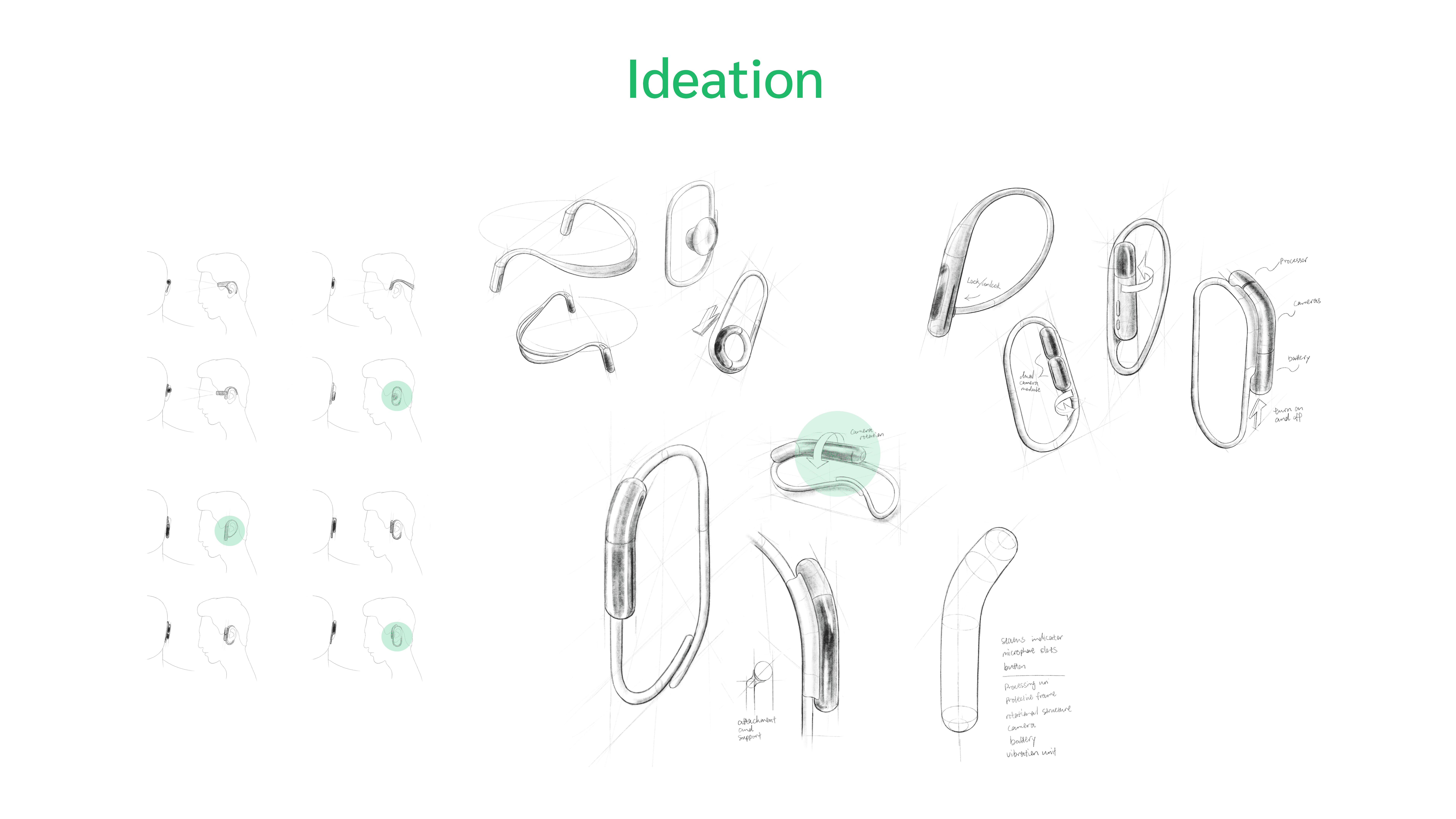

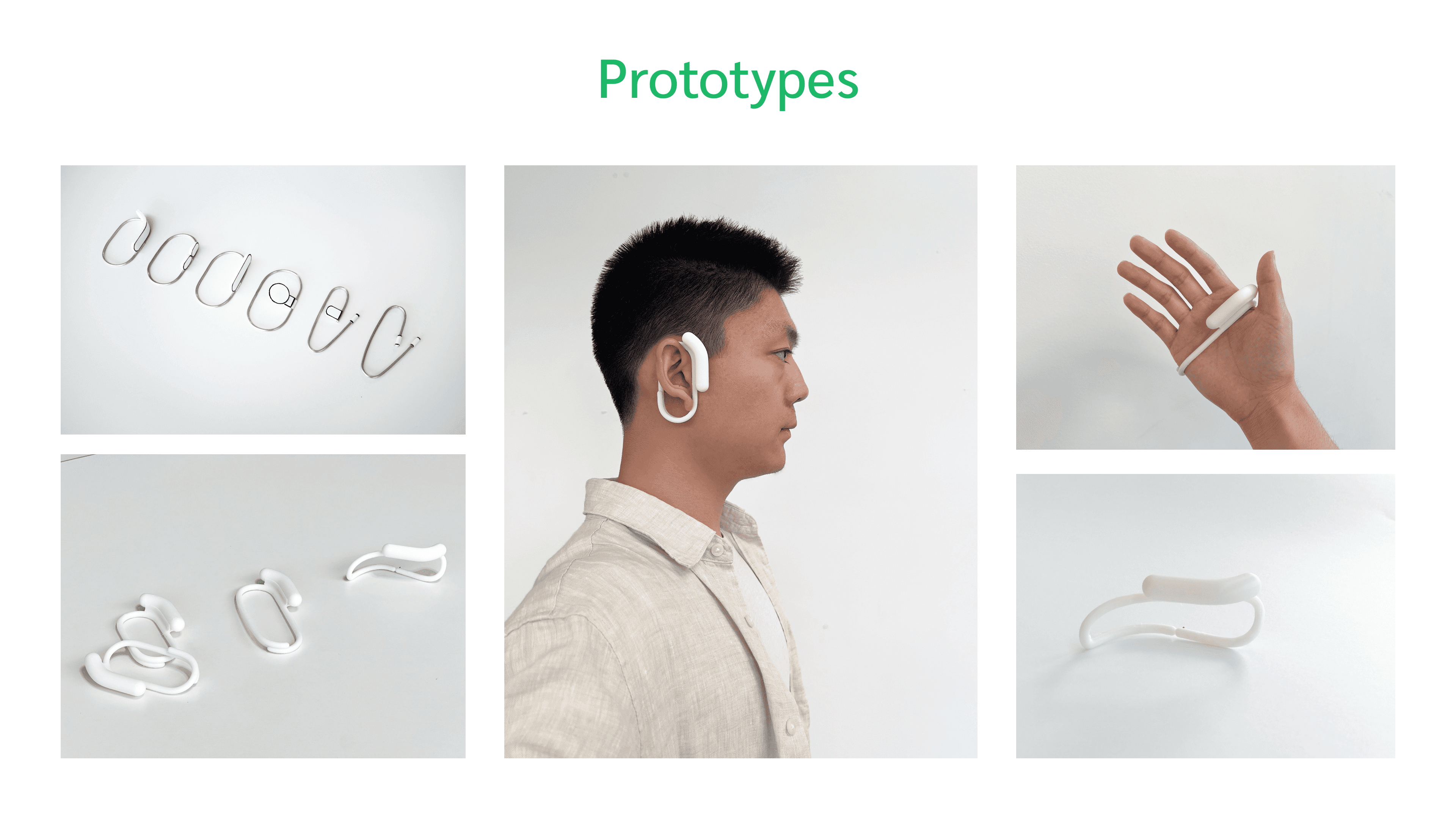

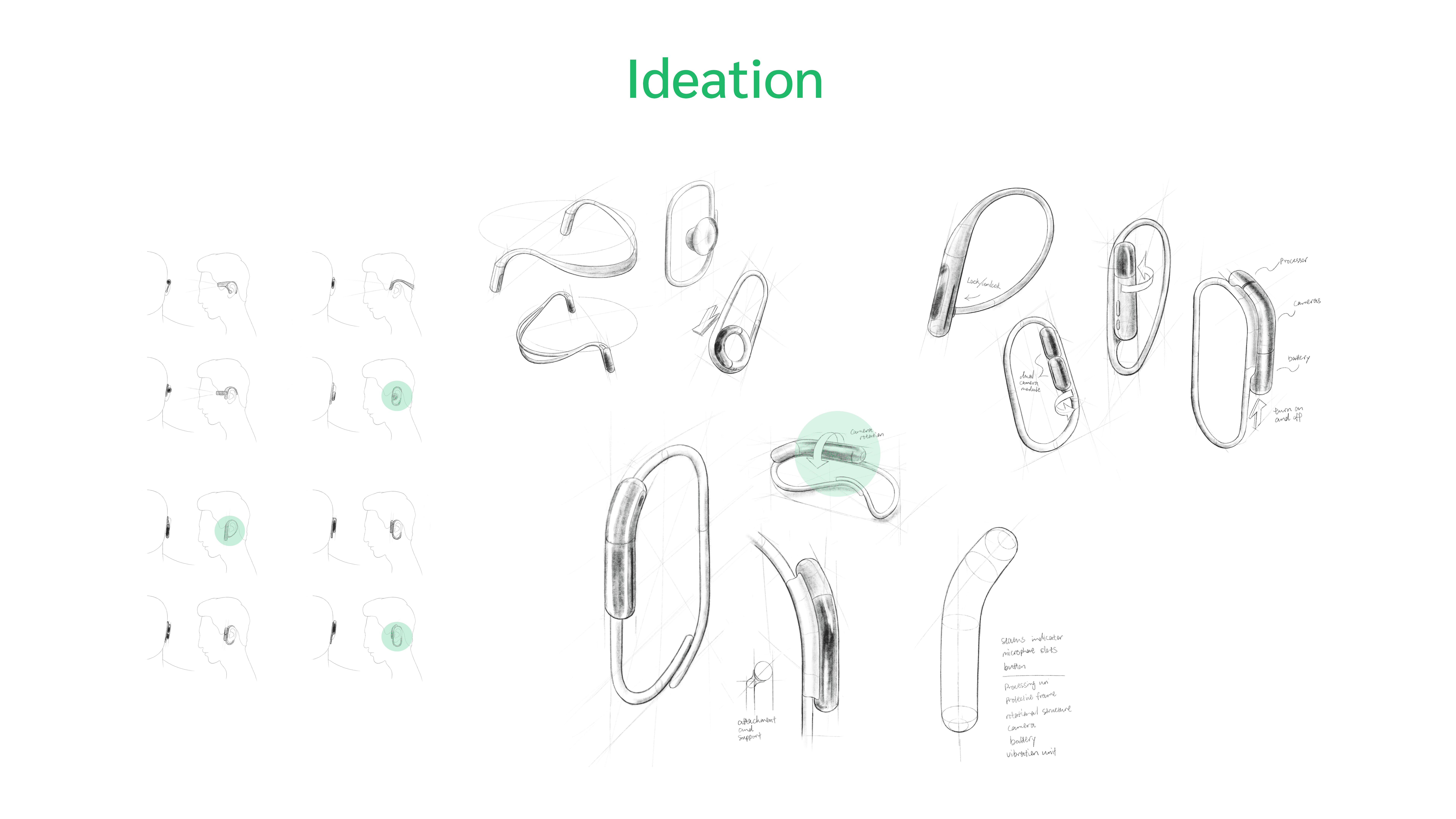

Process

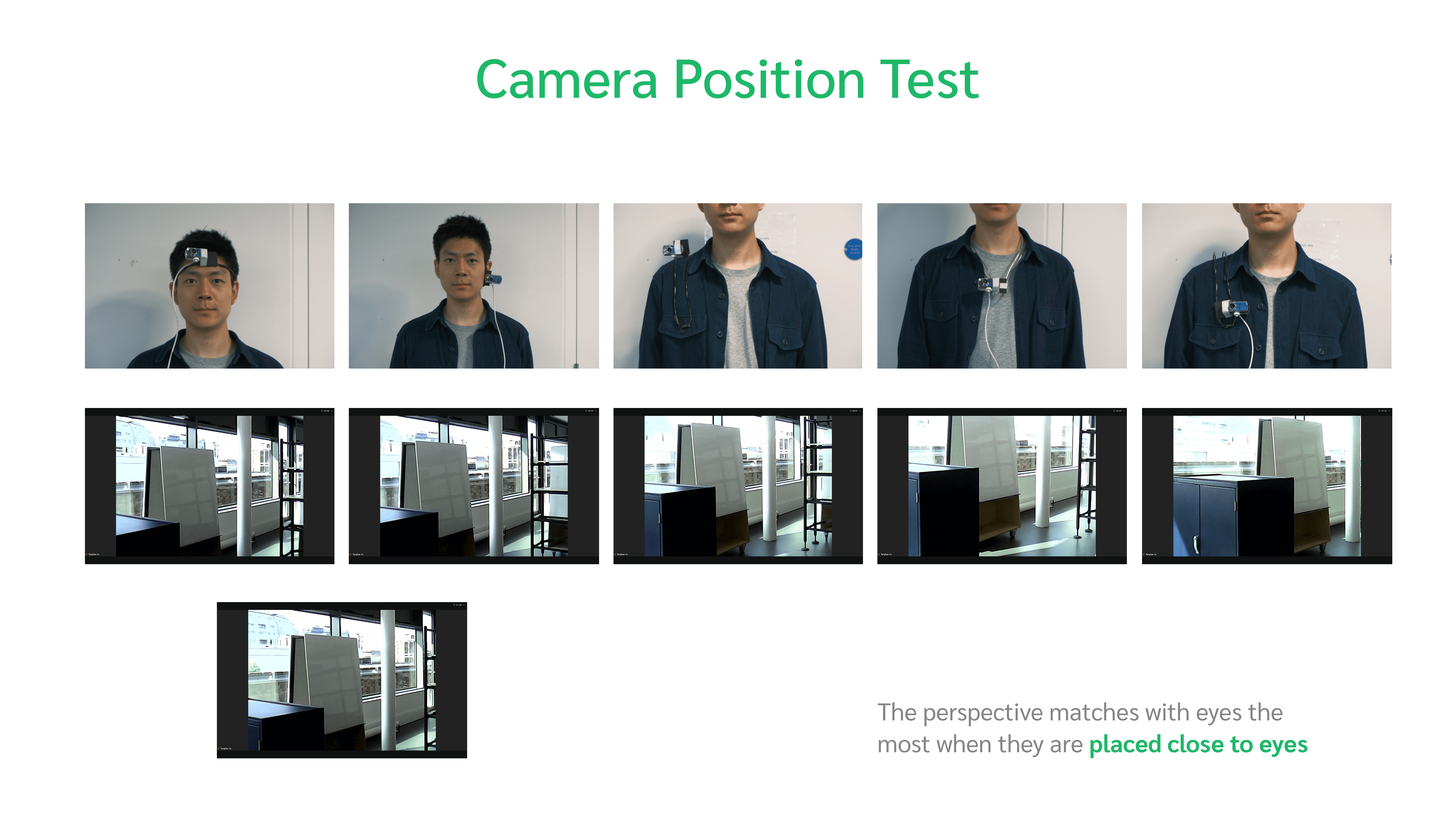

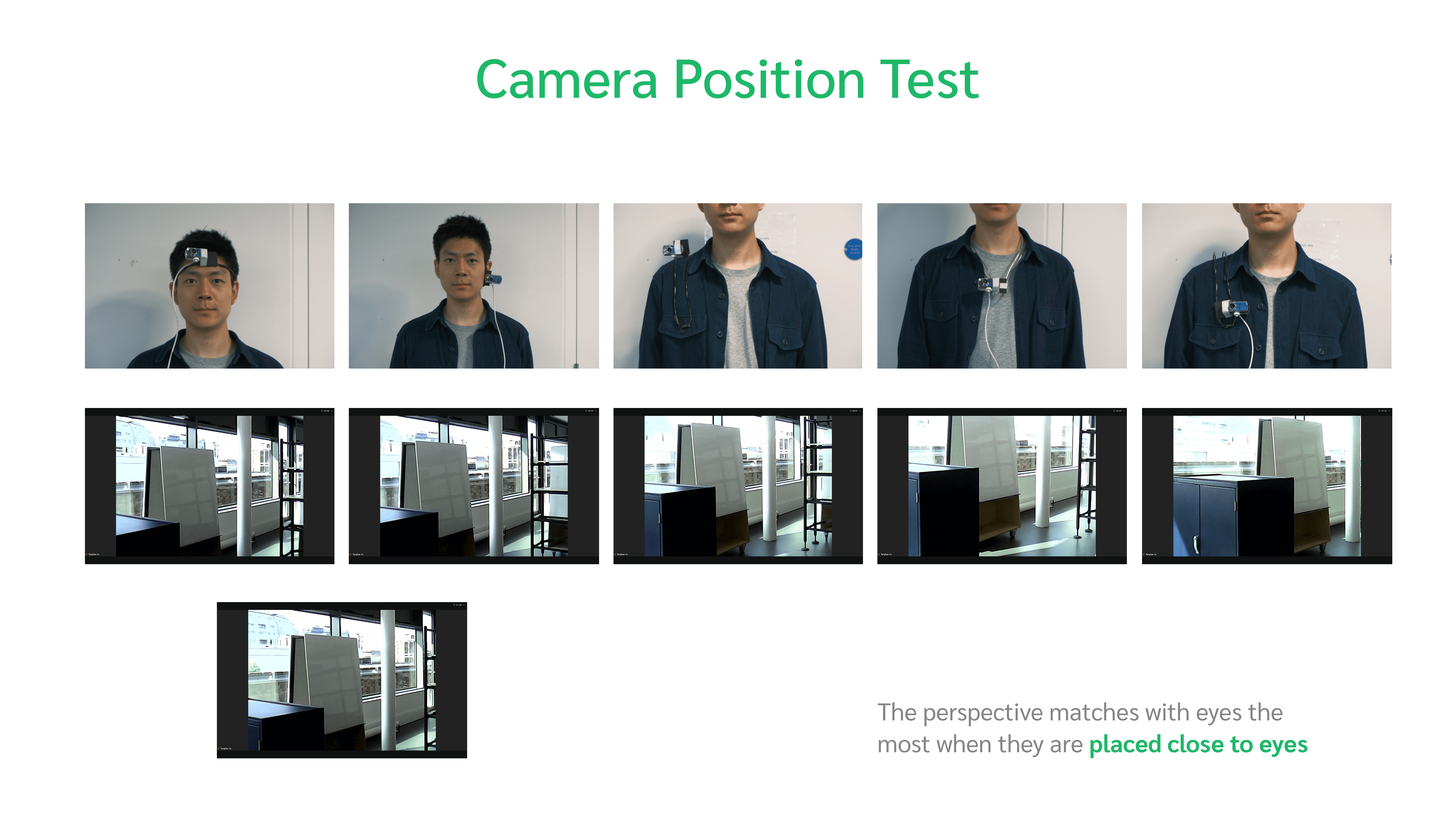

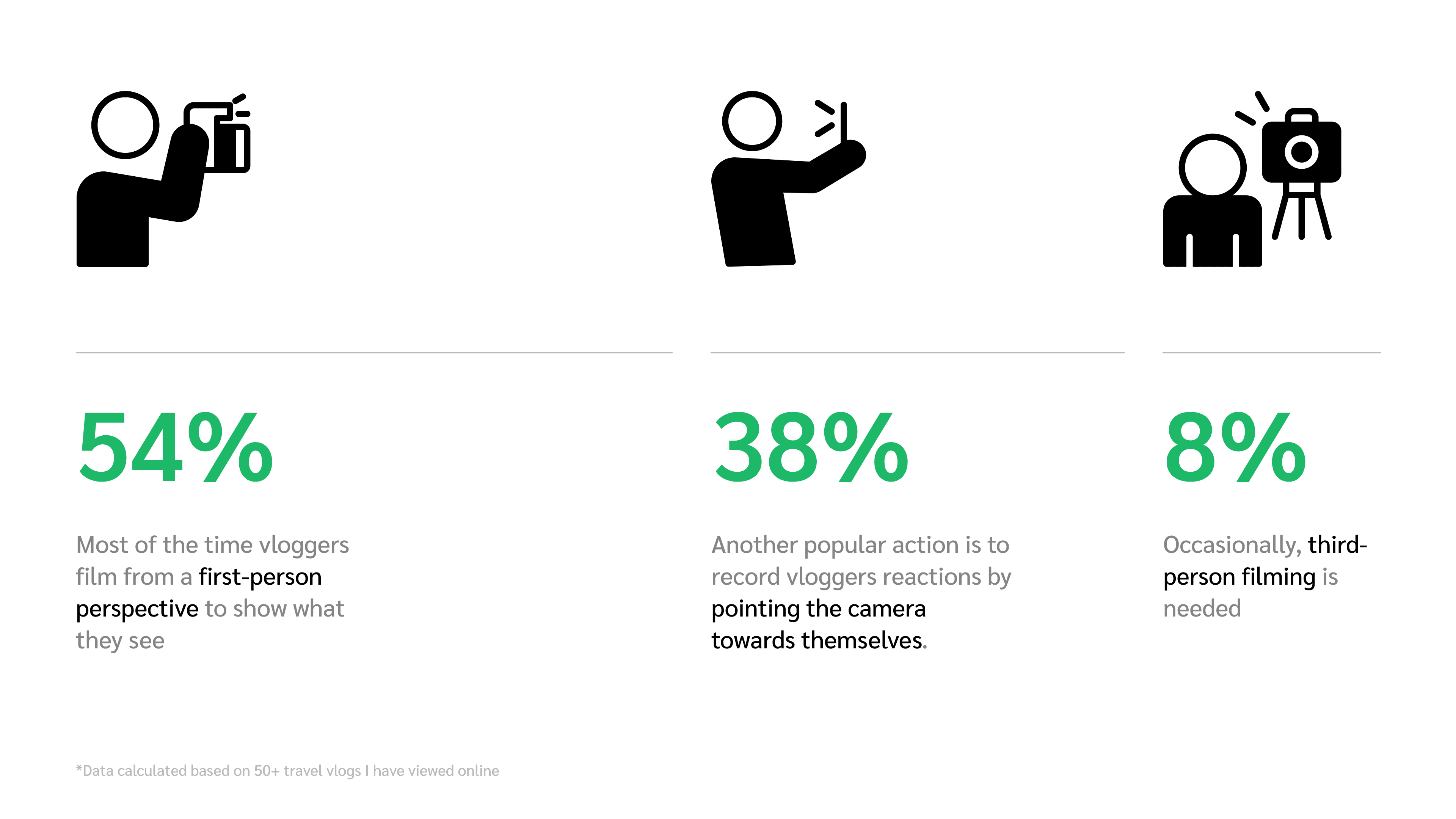

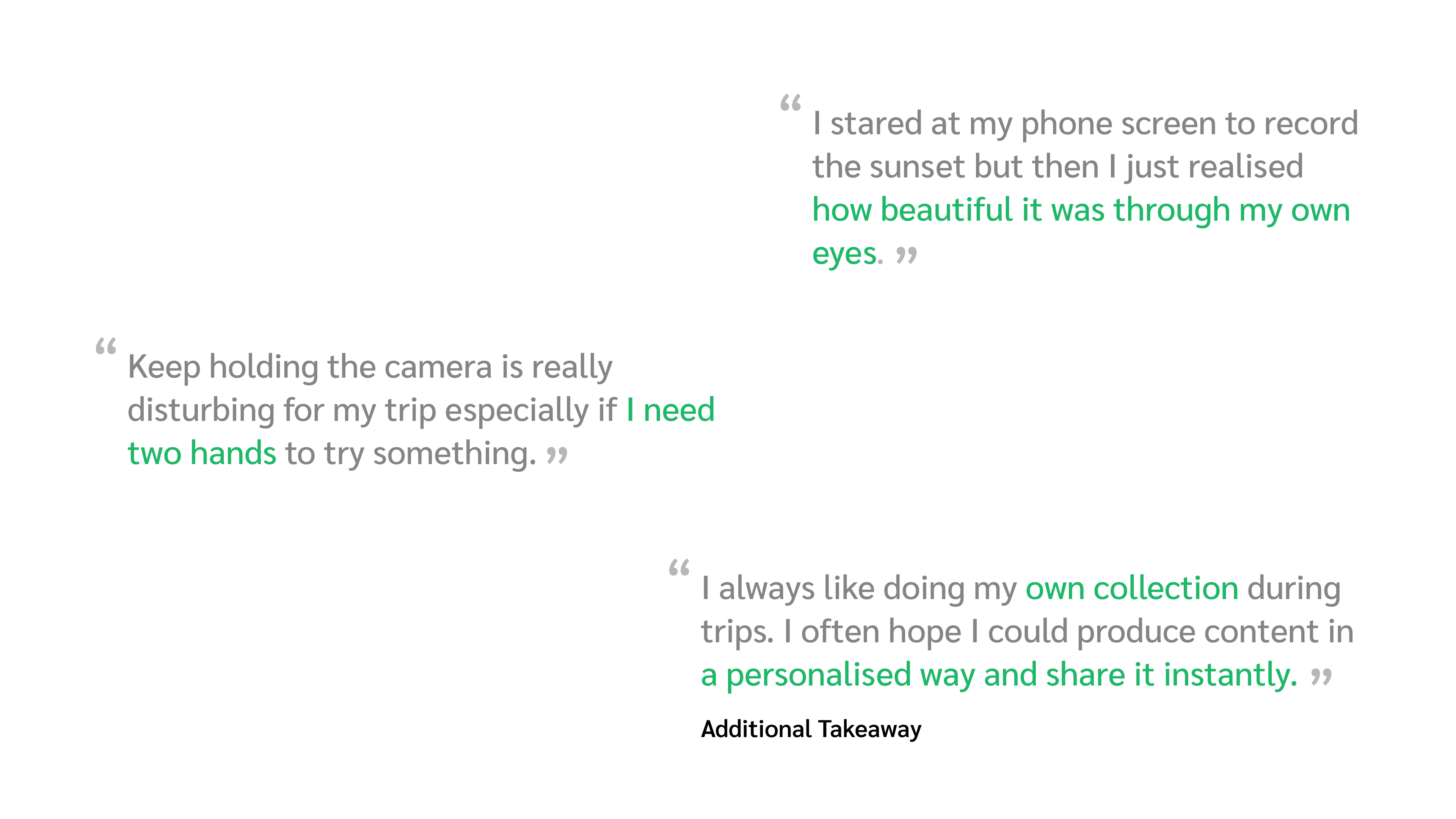

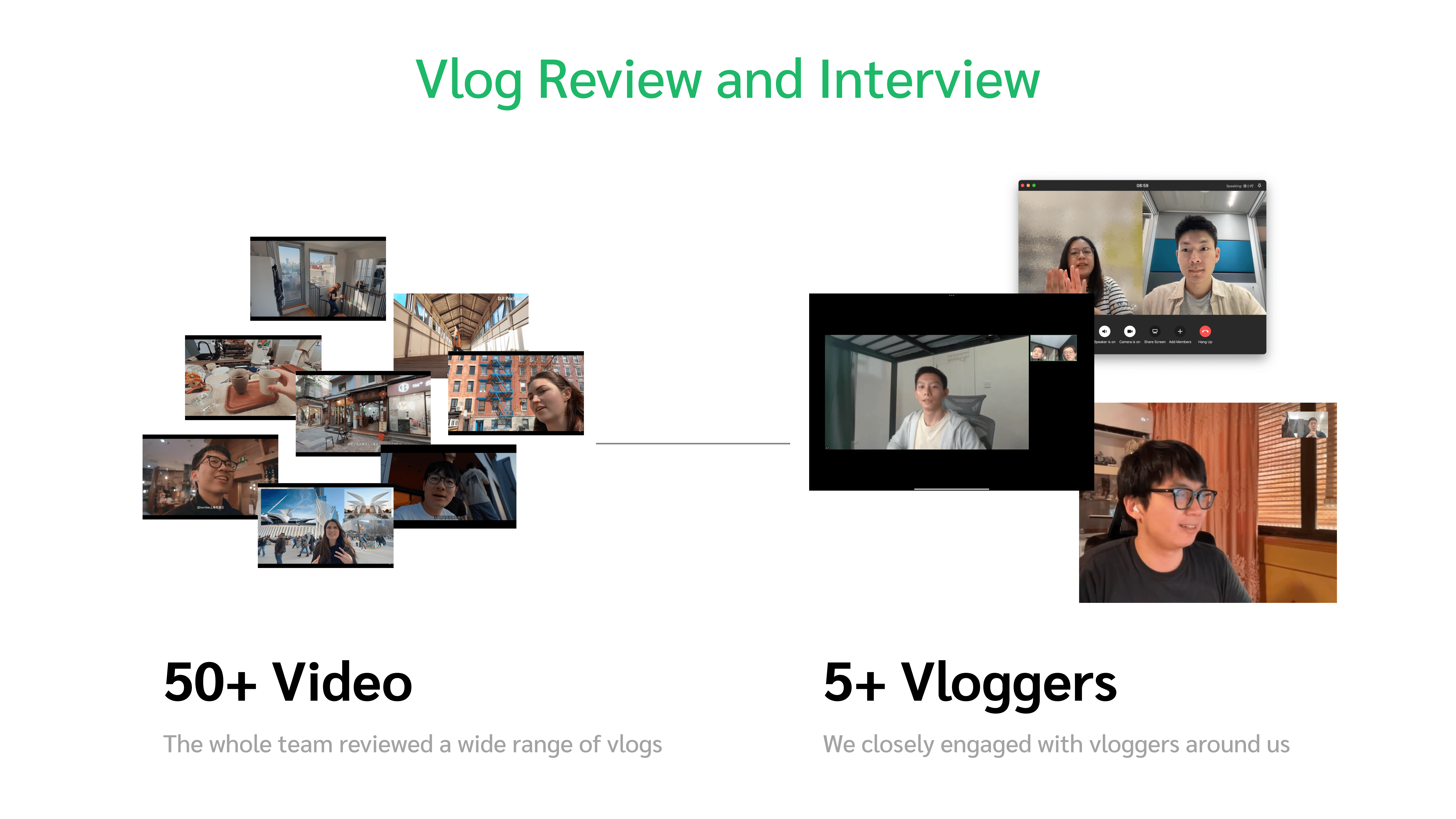

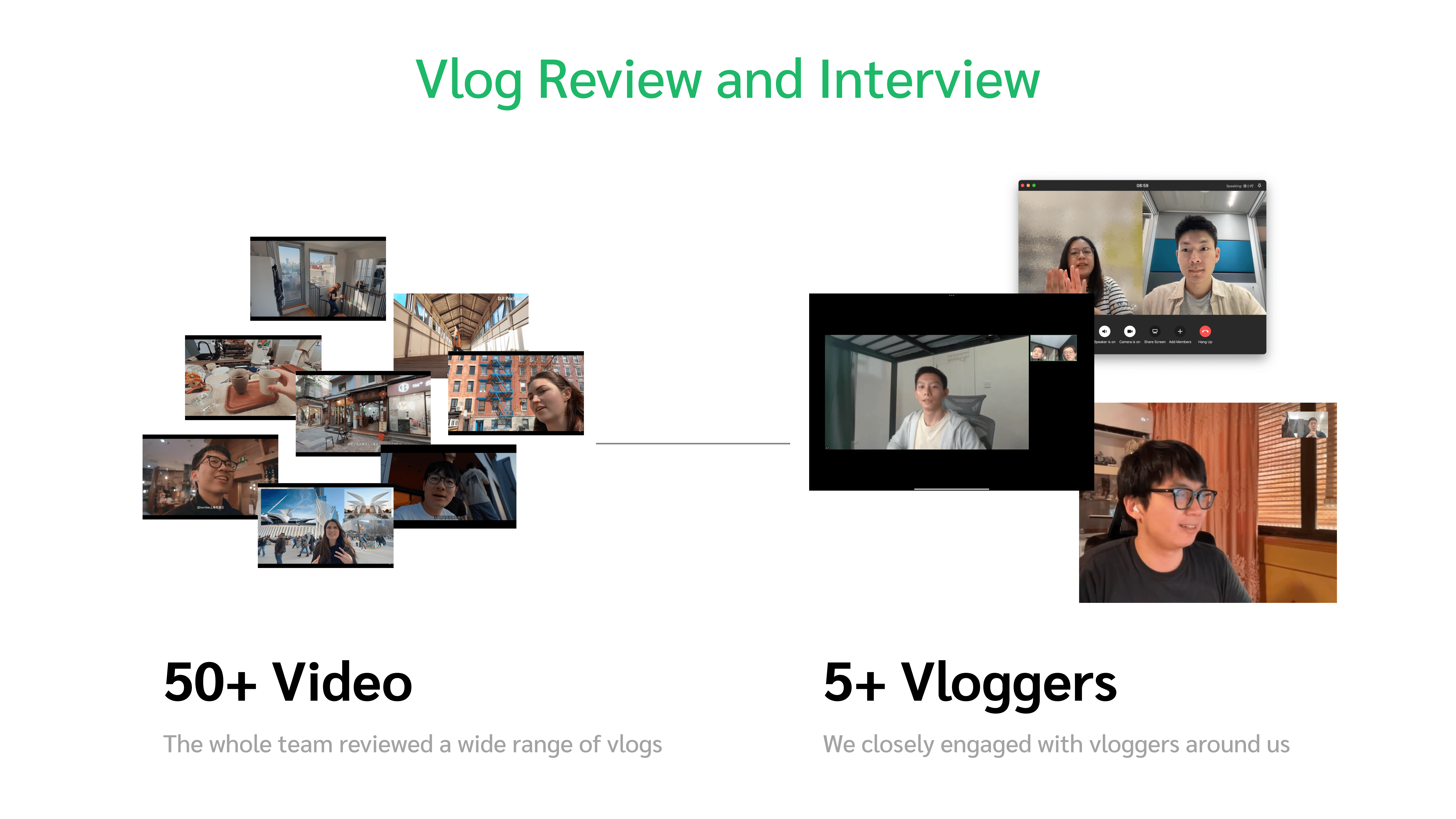

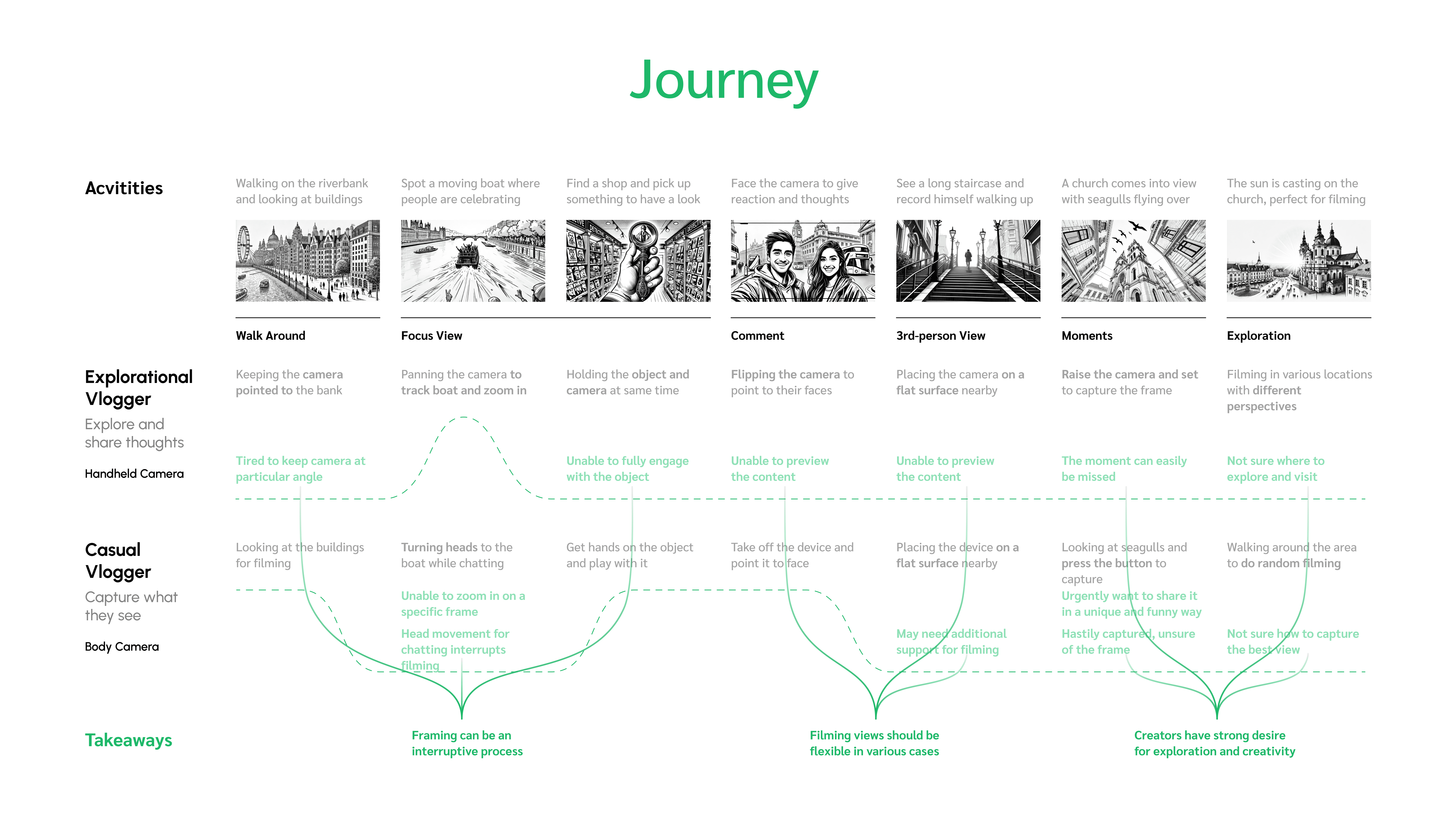

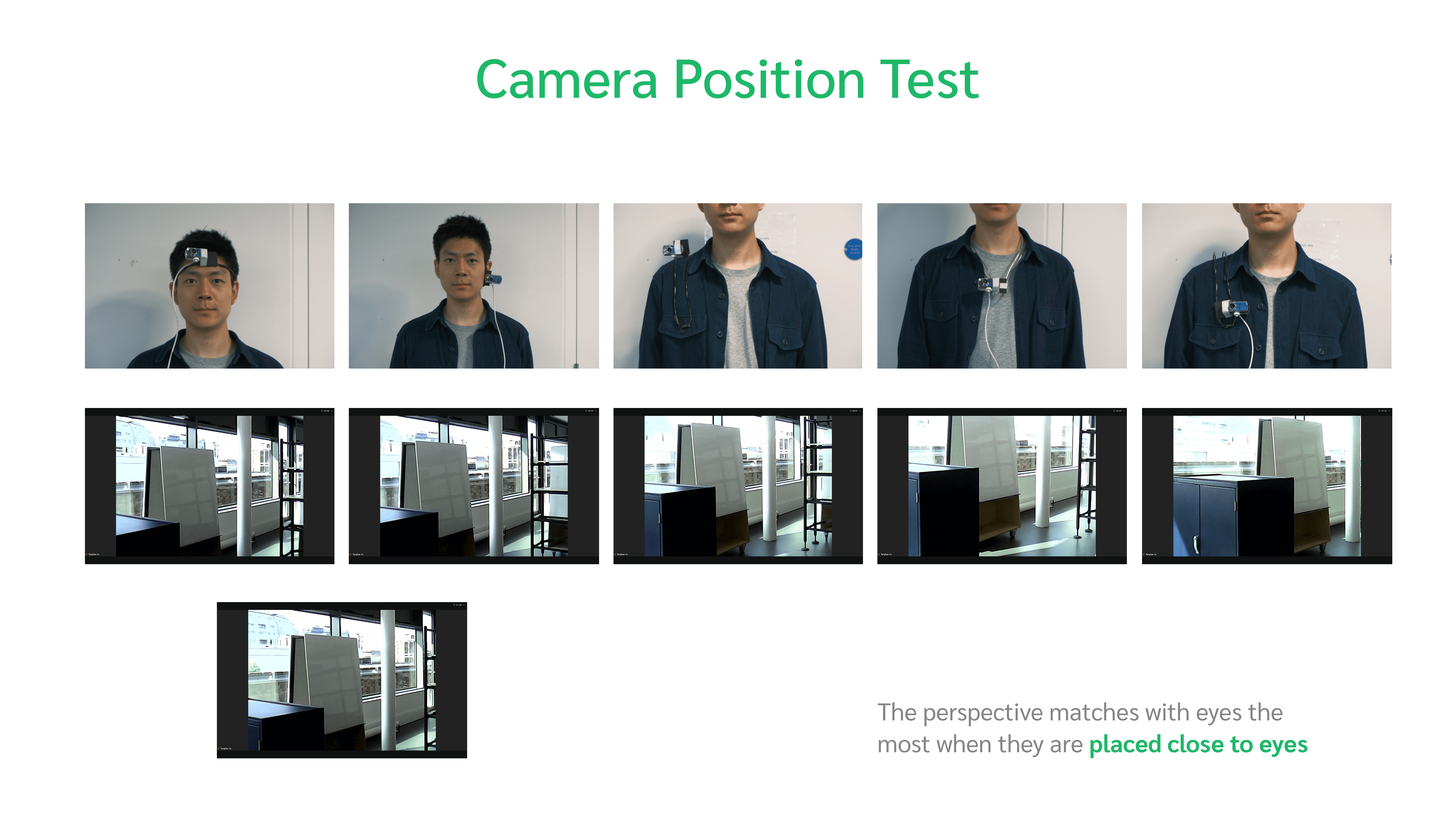

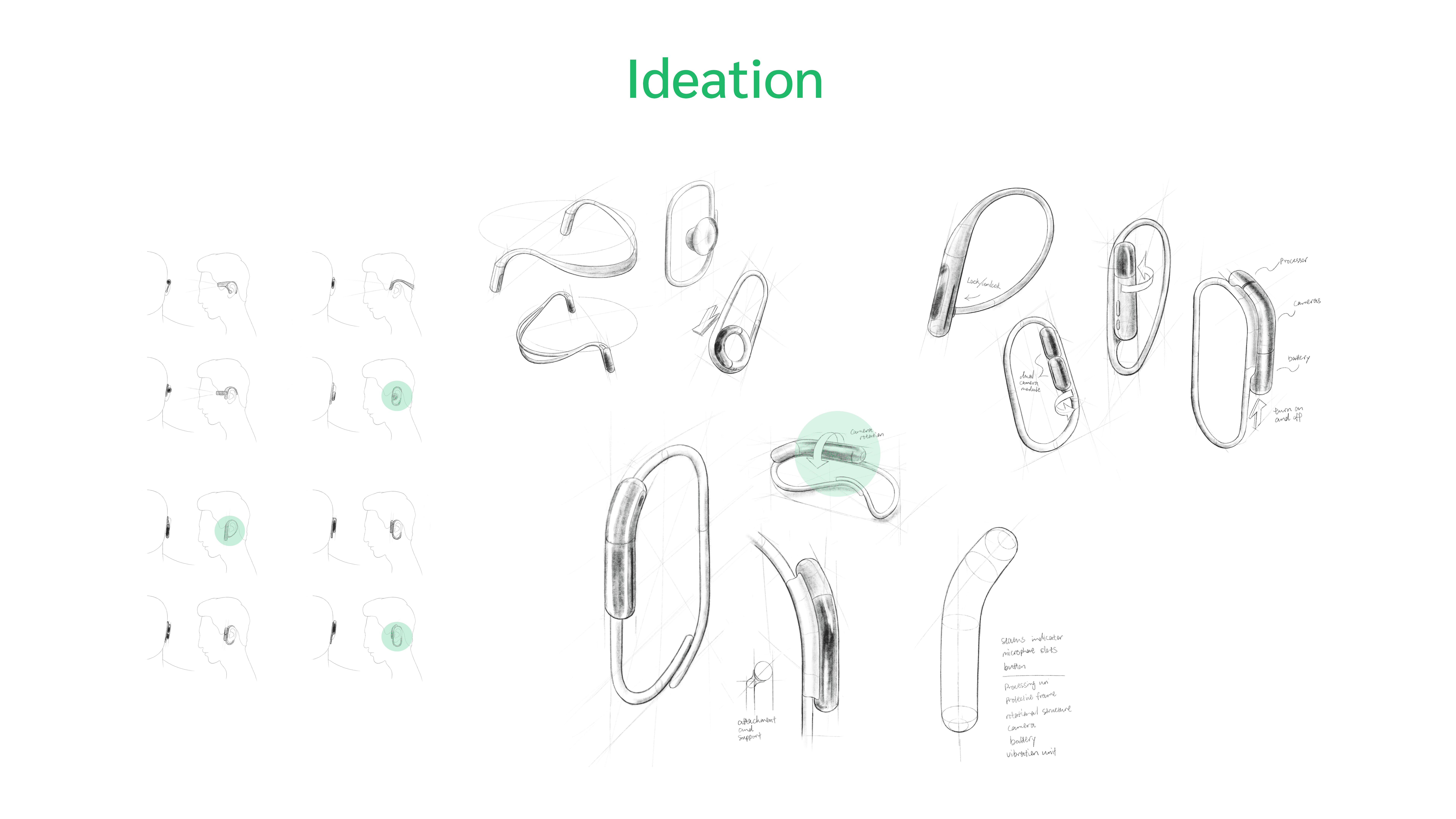

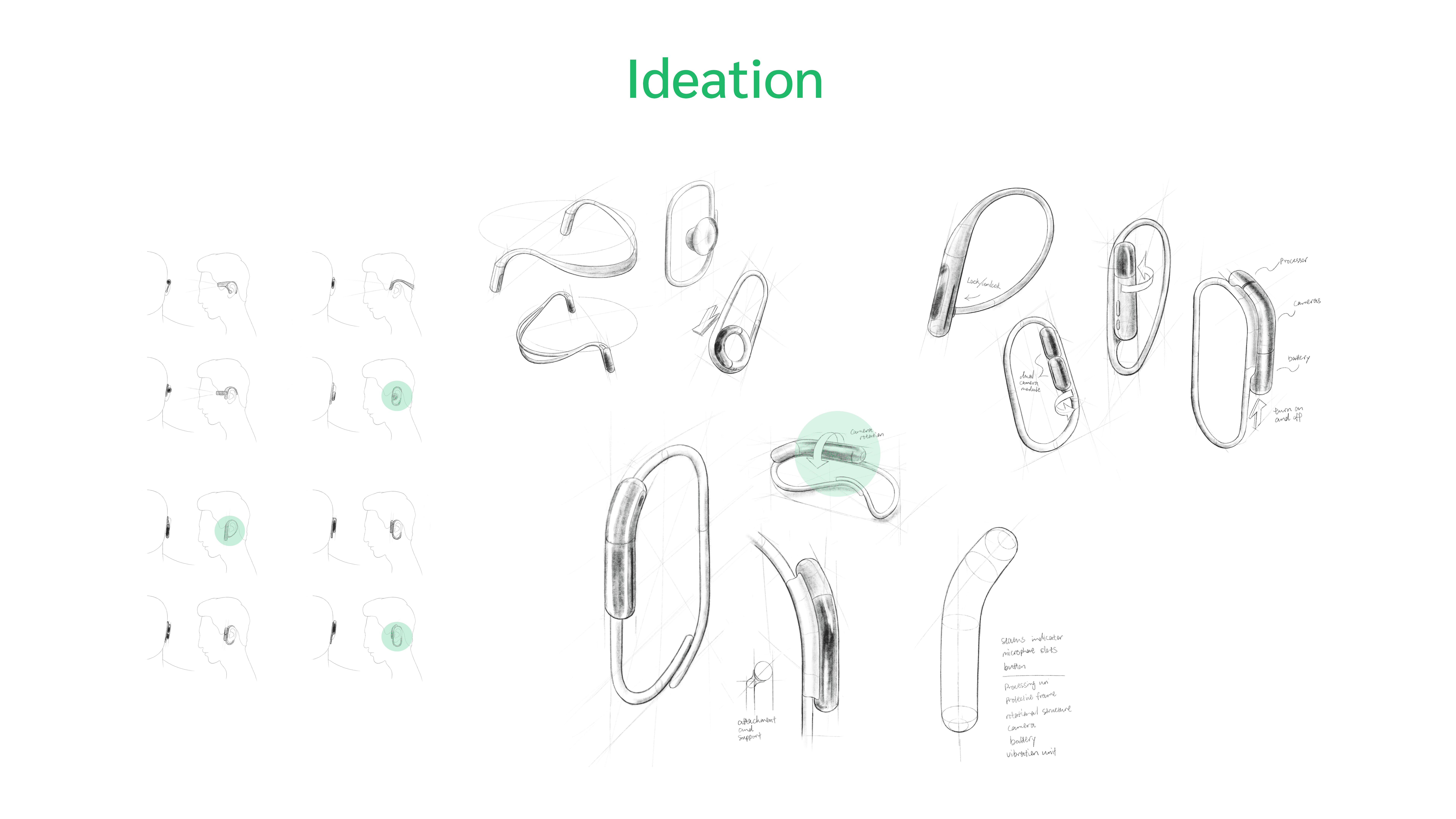

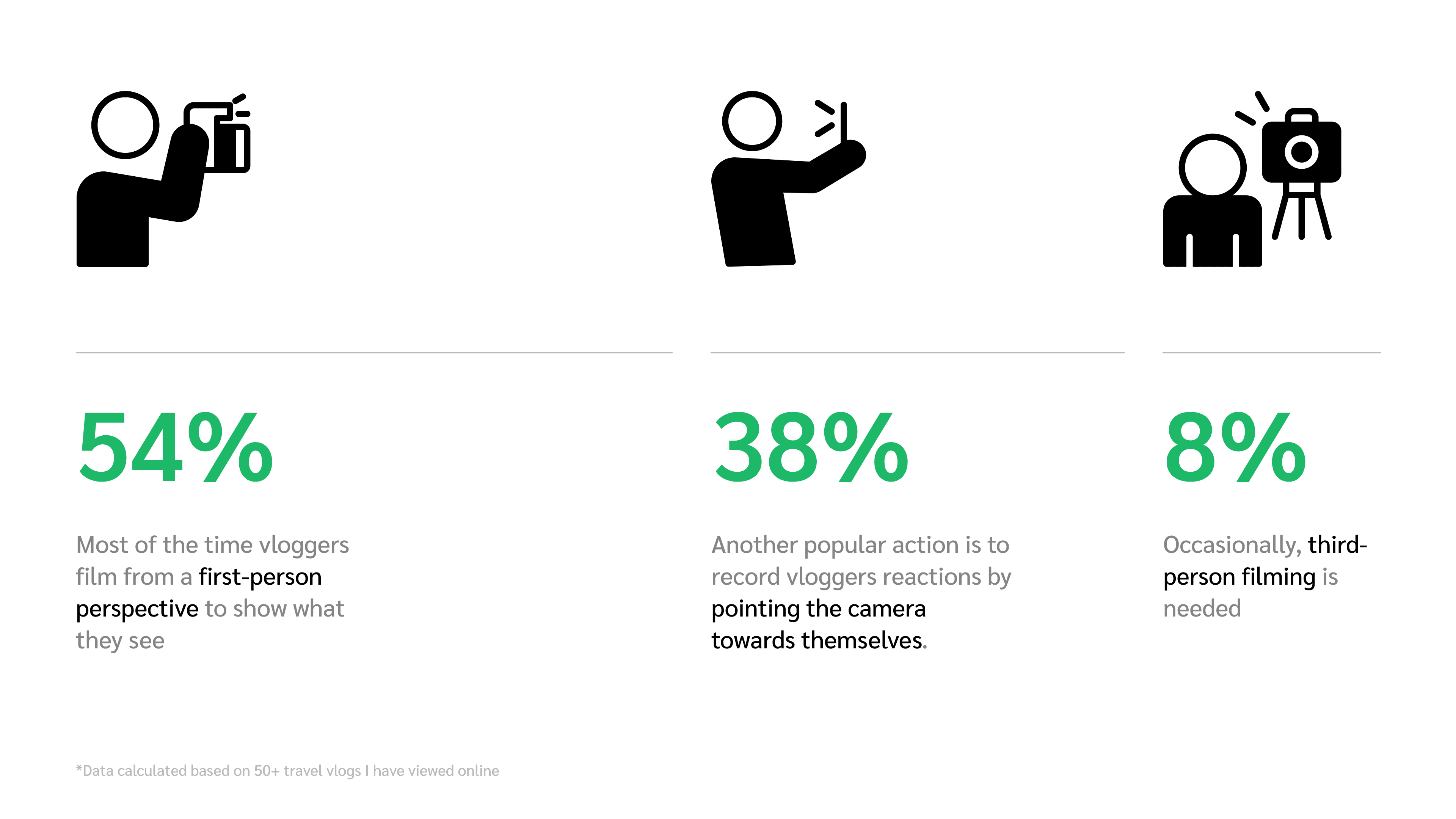

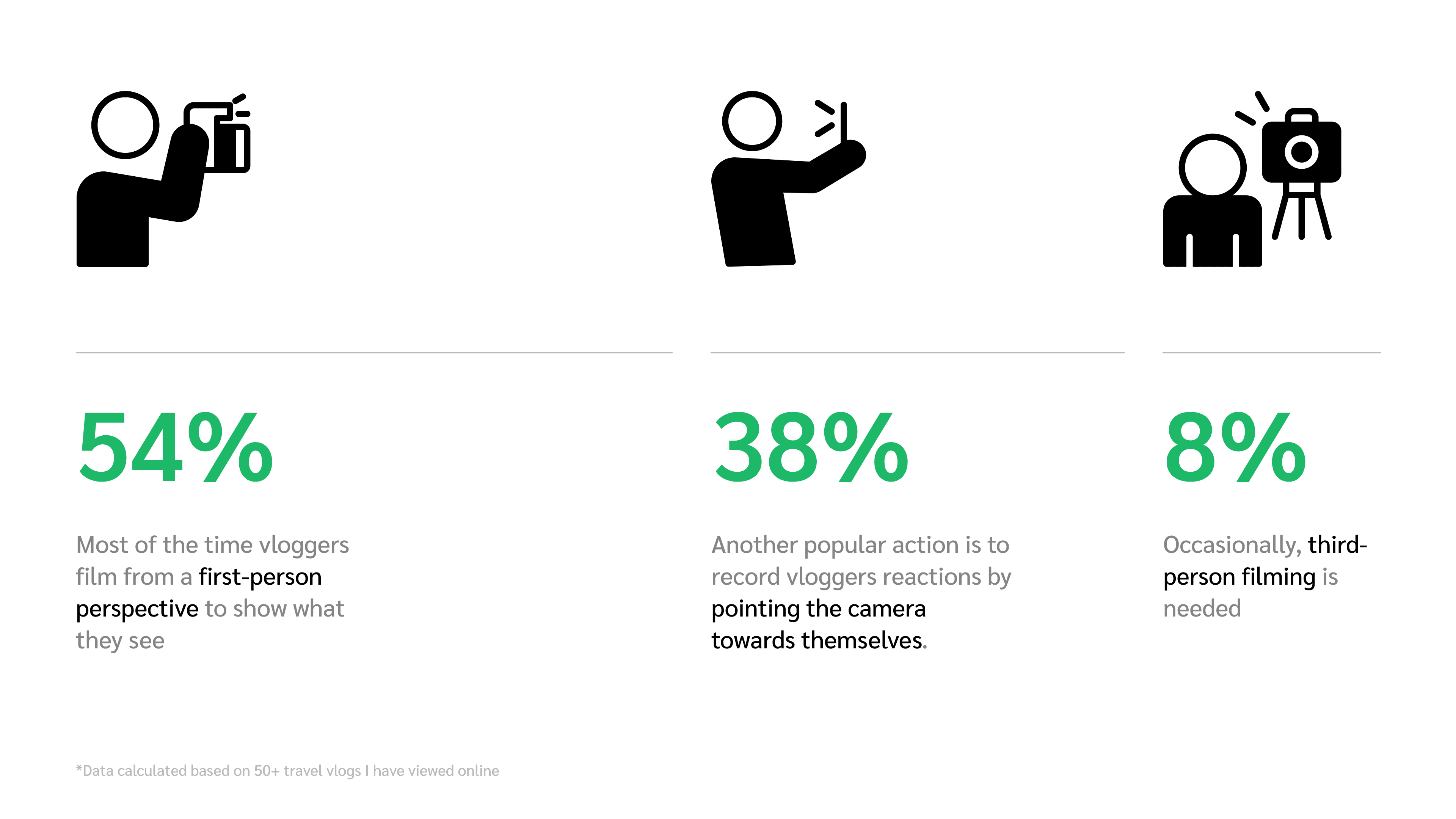

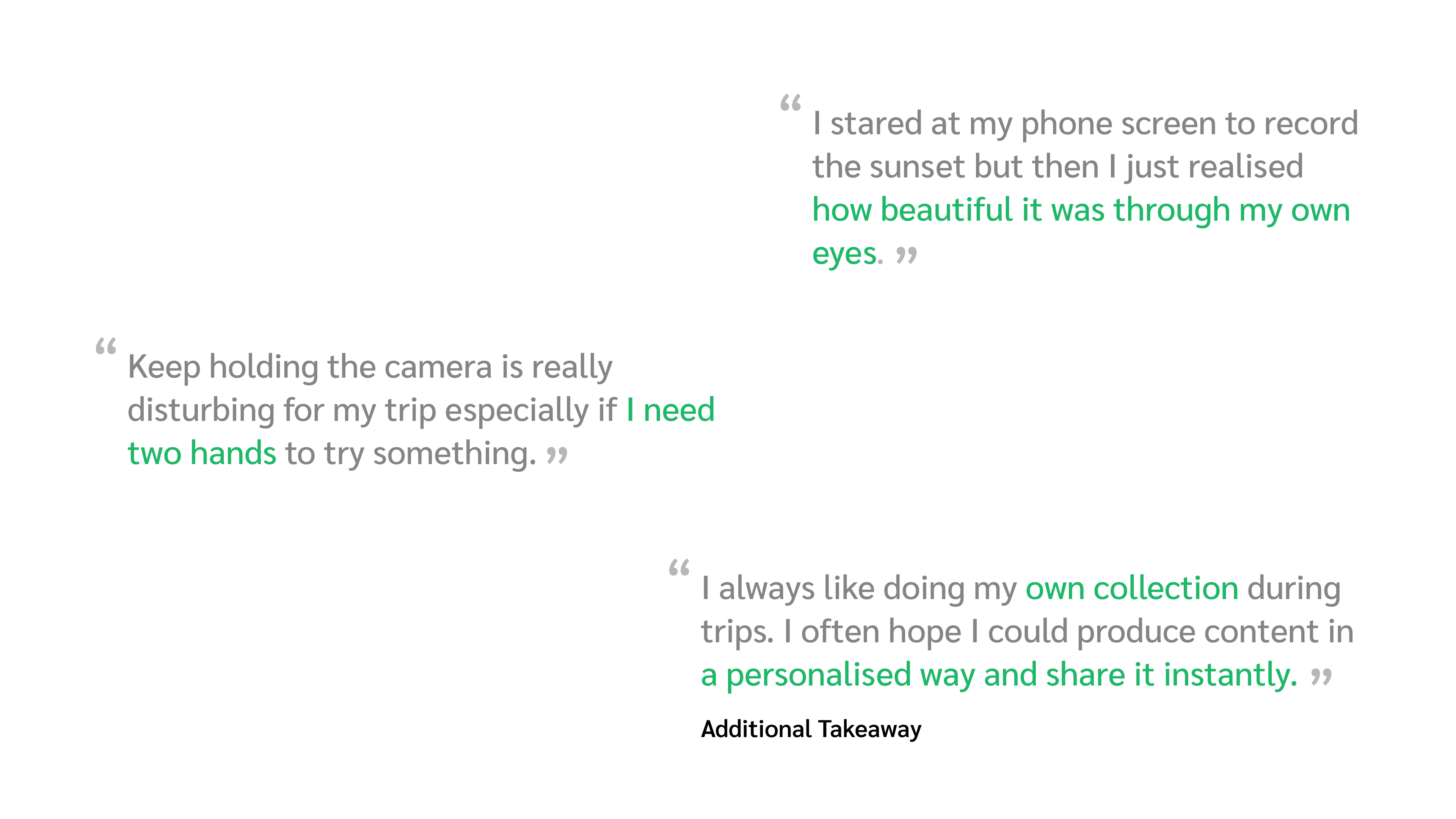

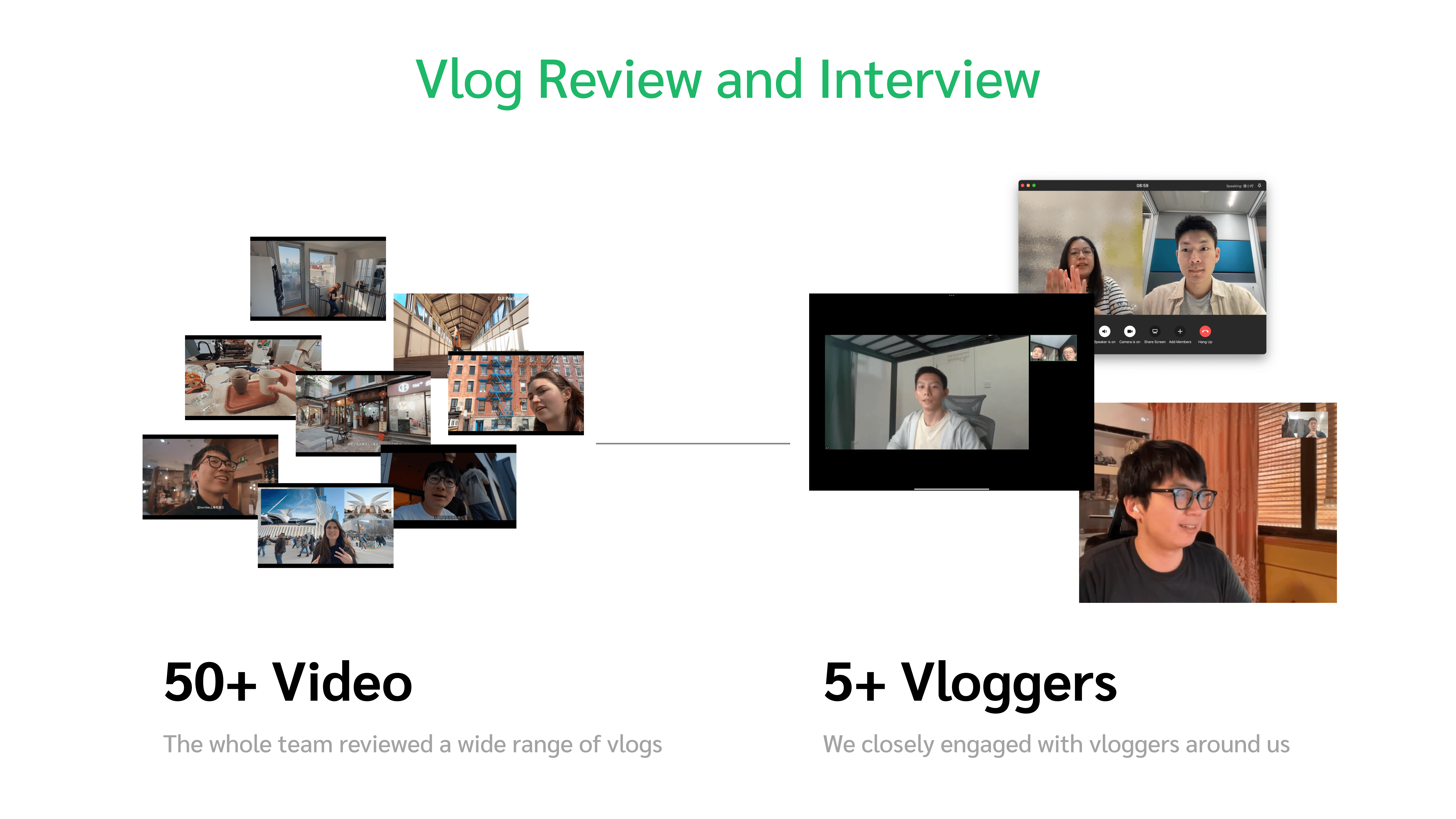

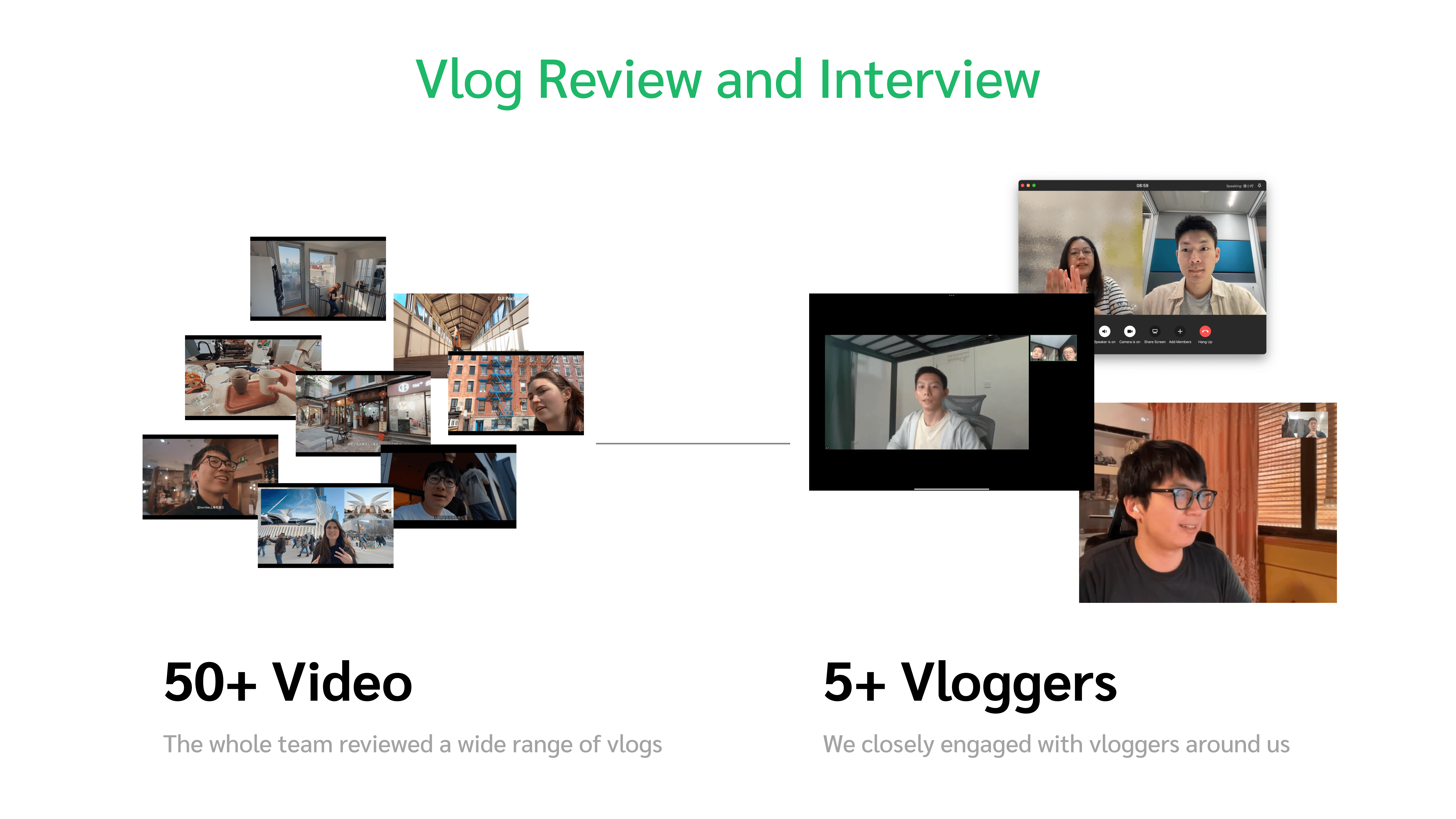

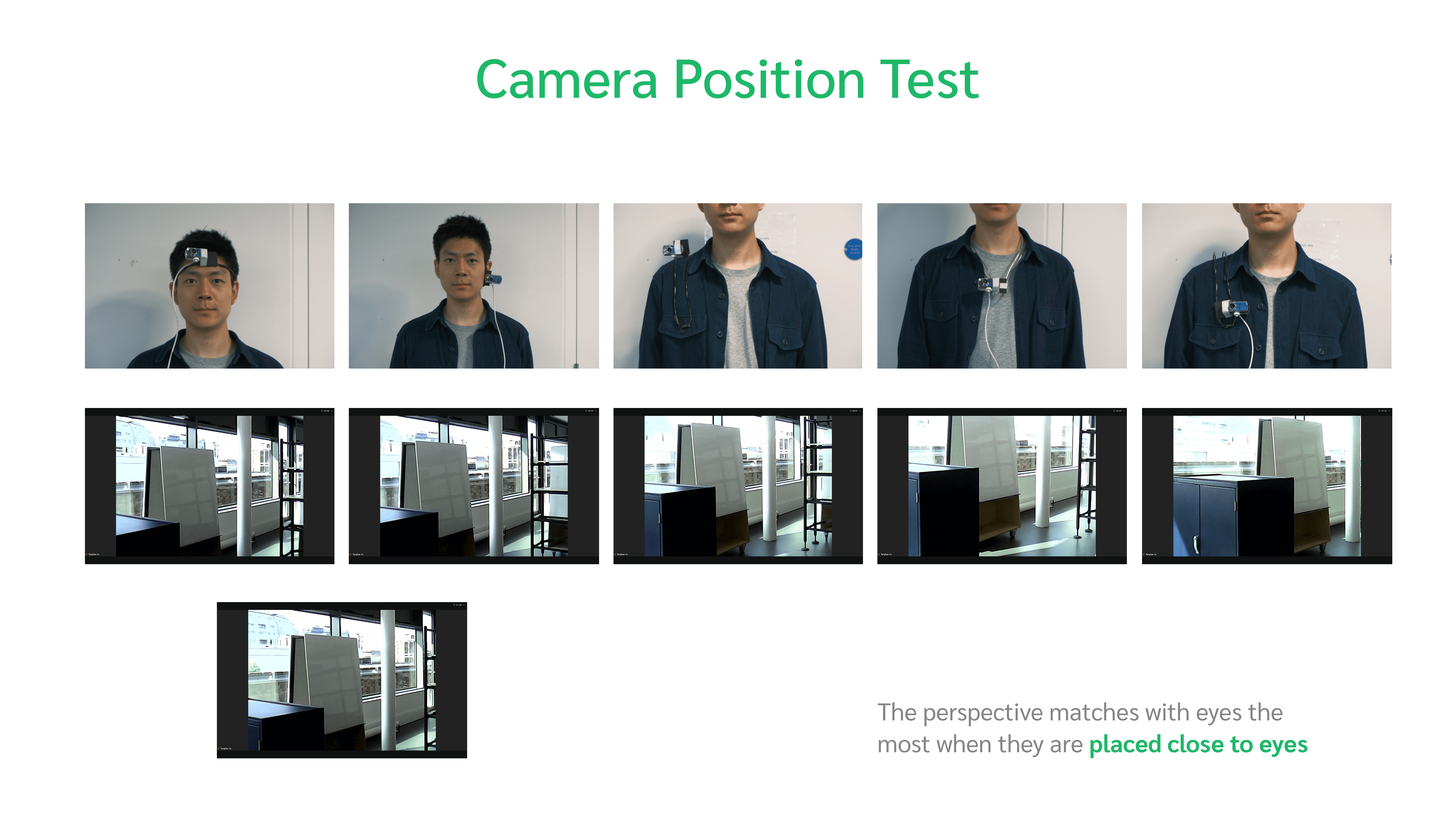

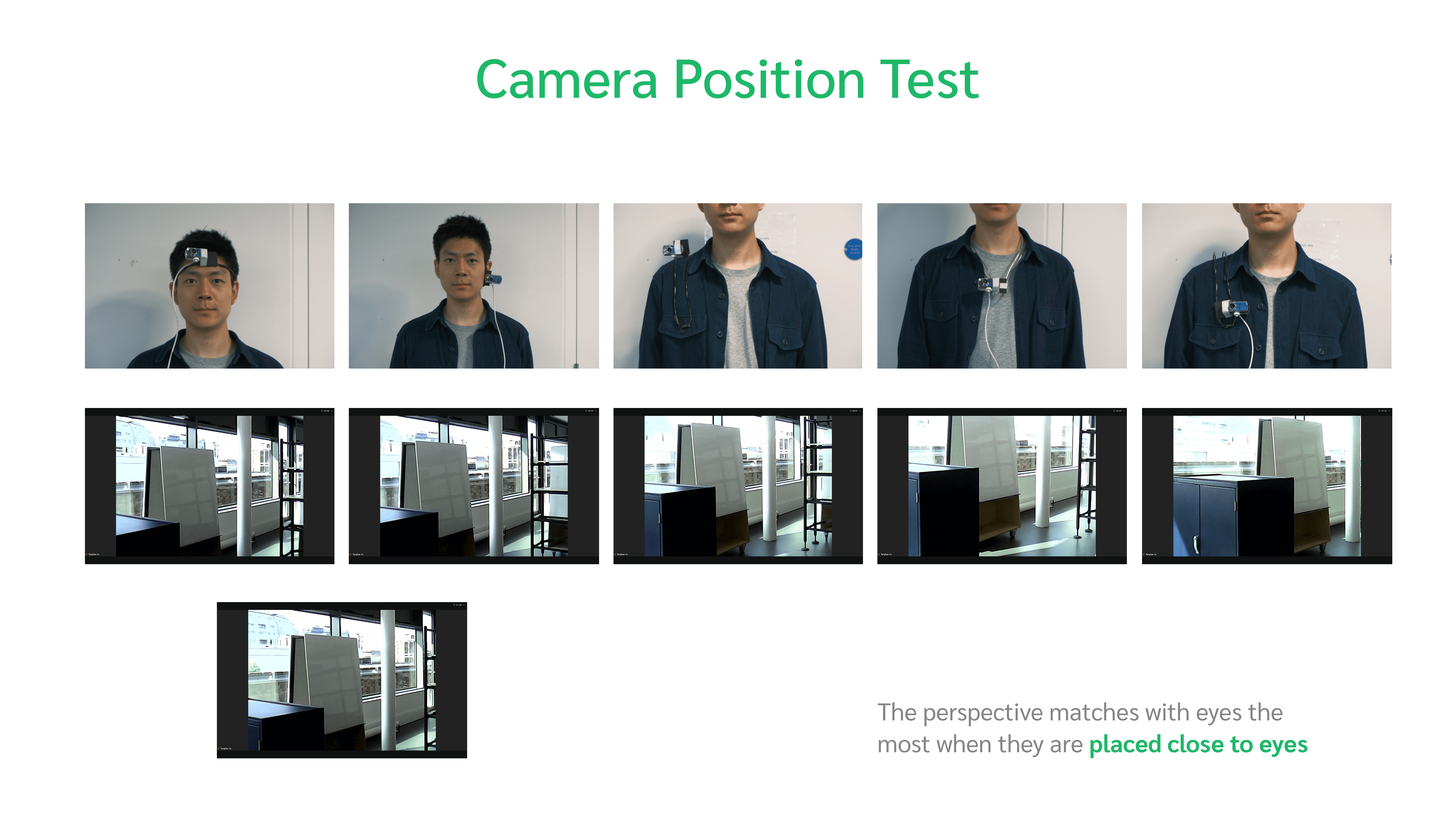

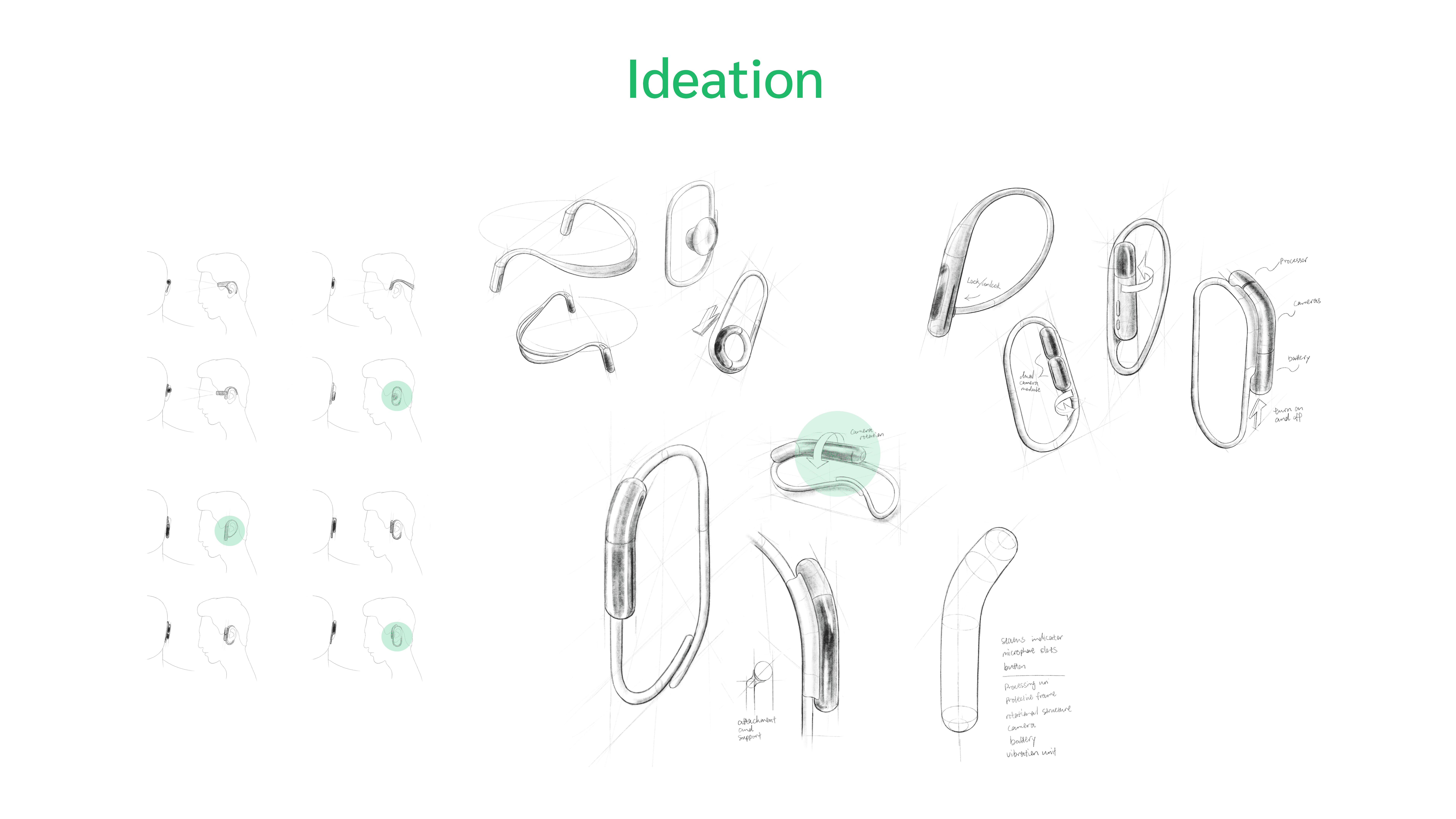

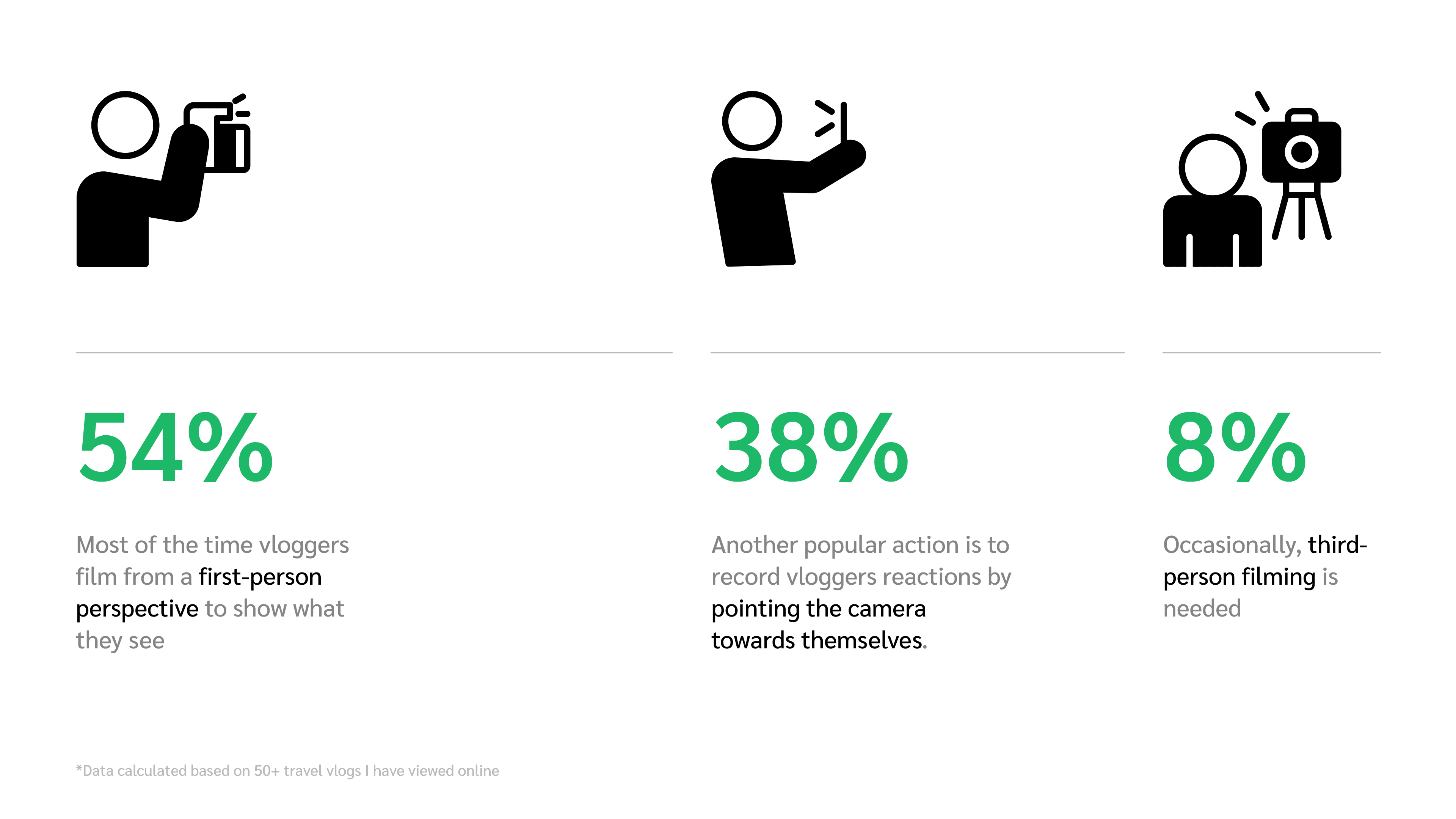

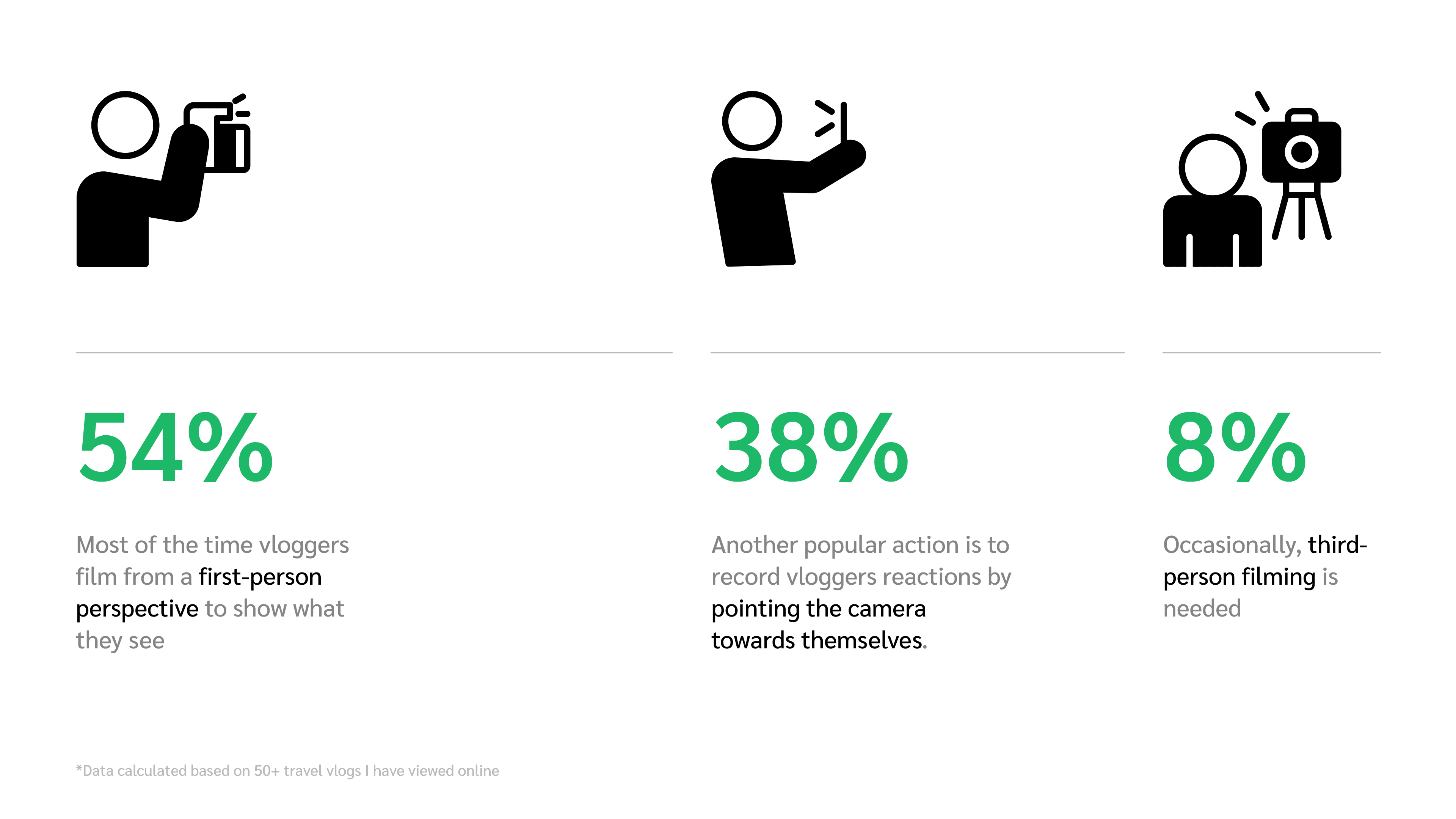

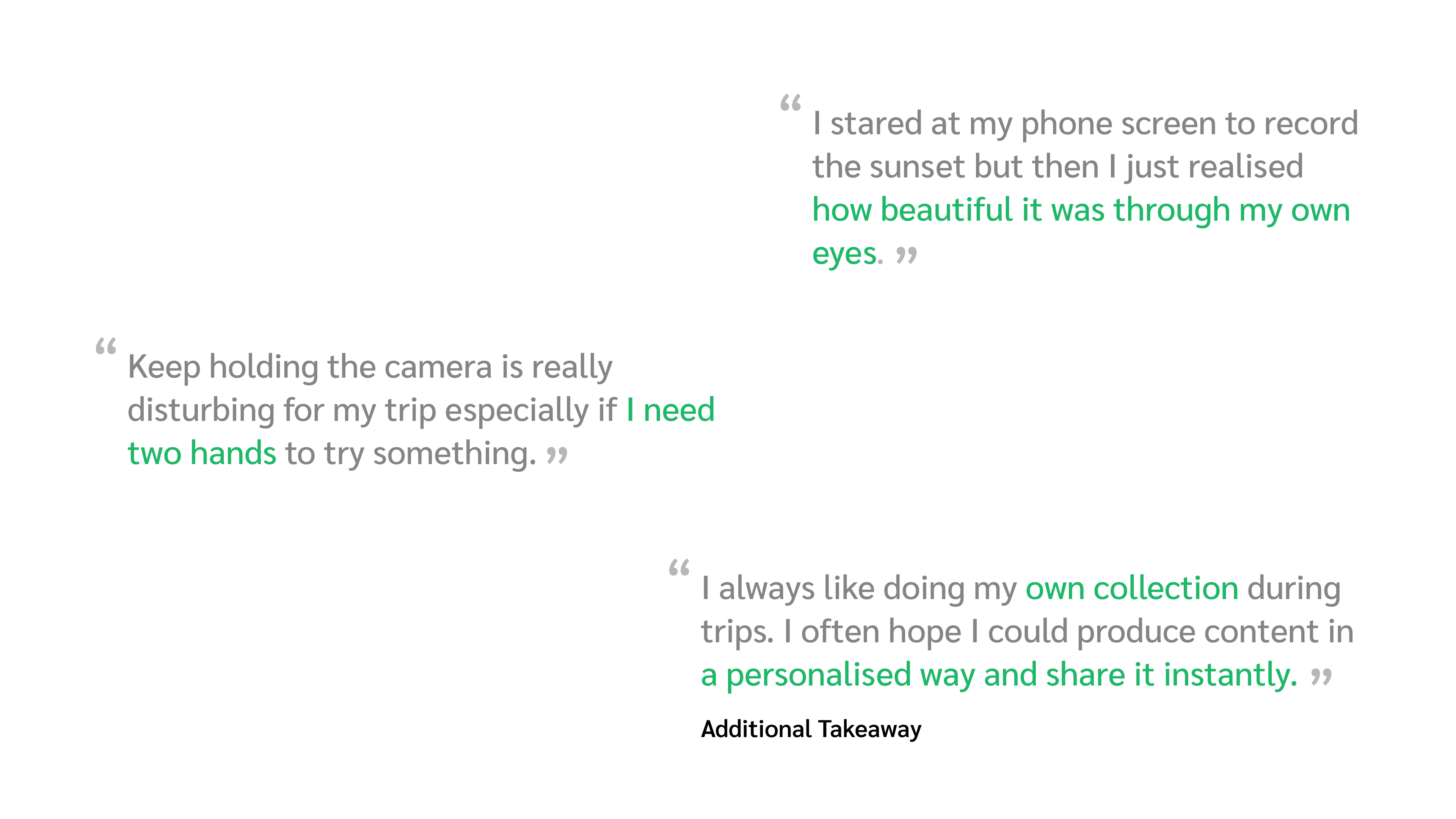

Despite a strong vision for applying emerging AI technologies, our approach remained user-centric. We began with thorough user research, engaging vlogger friends and reviewing numerous vlogs to uncover key insights. One consistent finding was that handheld filming devices are often disruptive, and content creators seek more personalized solutions based on real-time capture. These insights guided our focus towards integrating Visual and Generative AI with wearable designs, while testing various form factors to optimize ergonomics and usability.
