DeePixel

2022

Robotics. ML. Interaction Design.

Group Project

Cyber Physical, Innovation Design Engineering (IC x RCA)

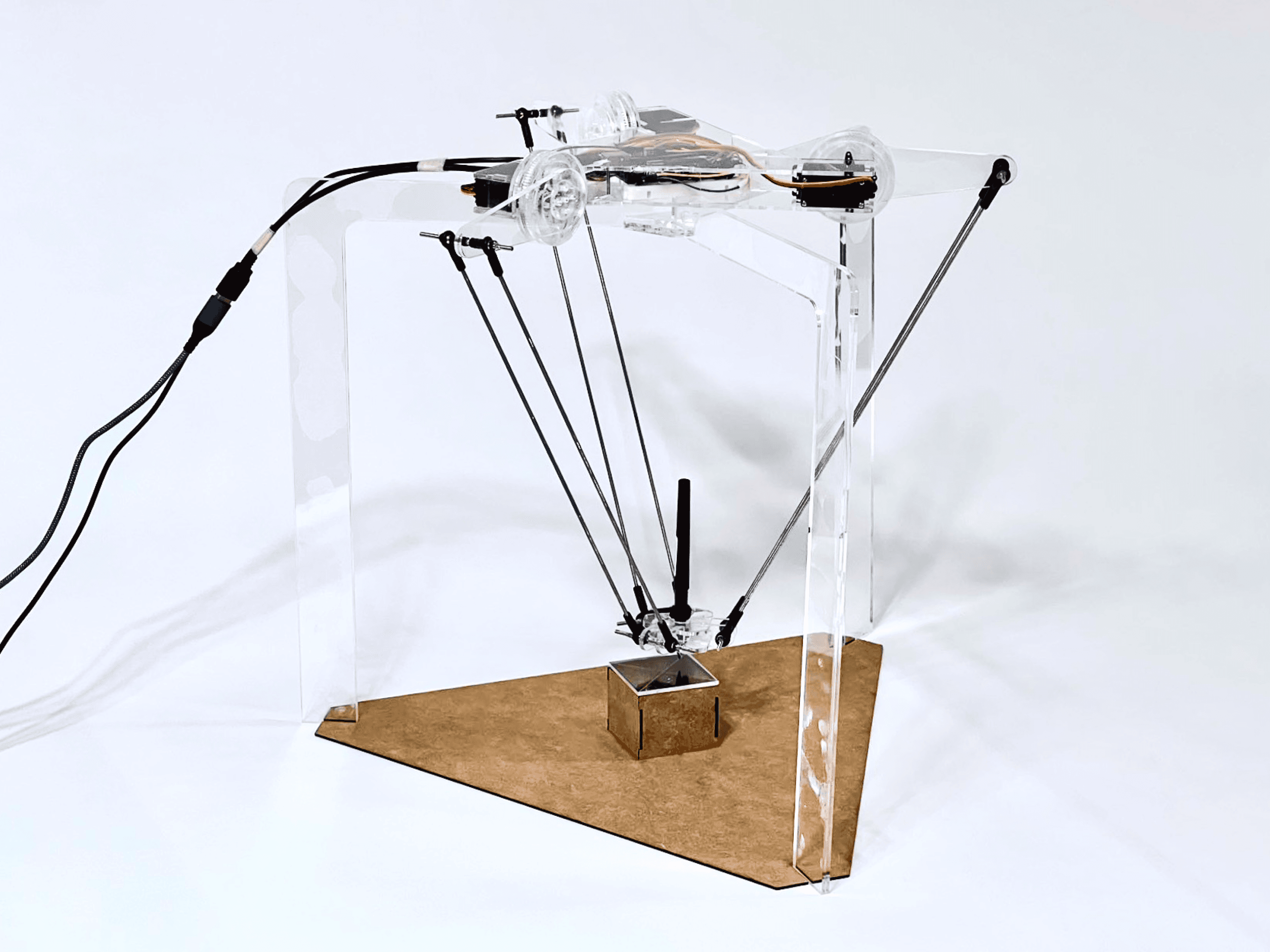

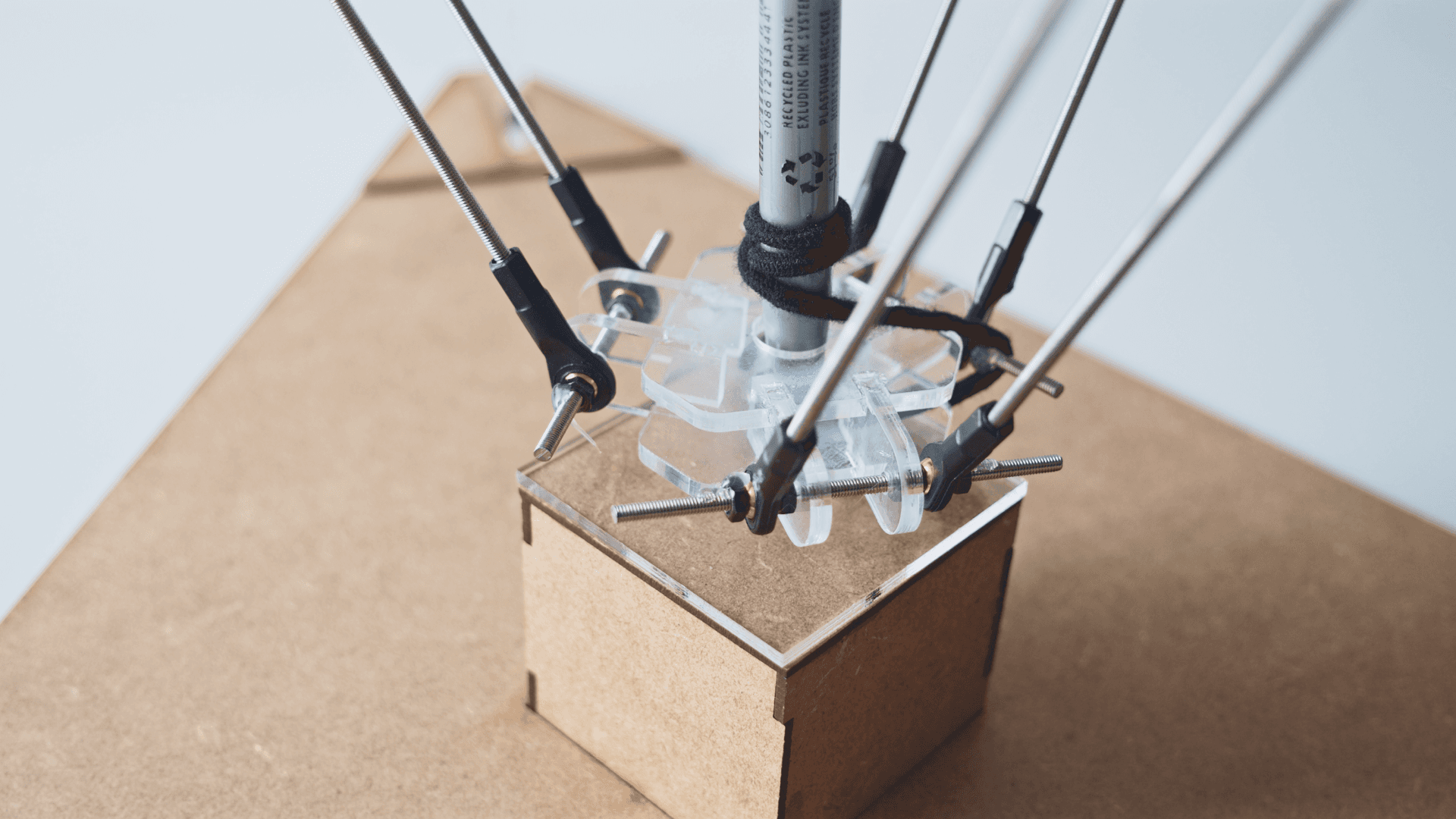

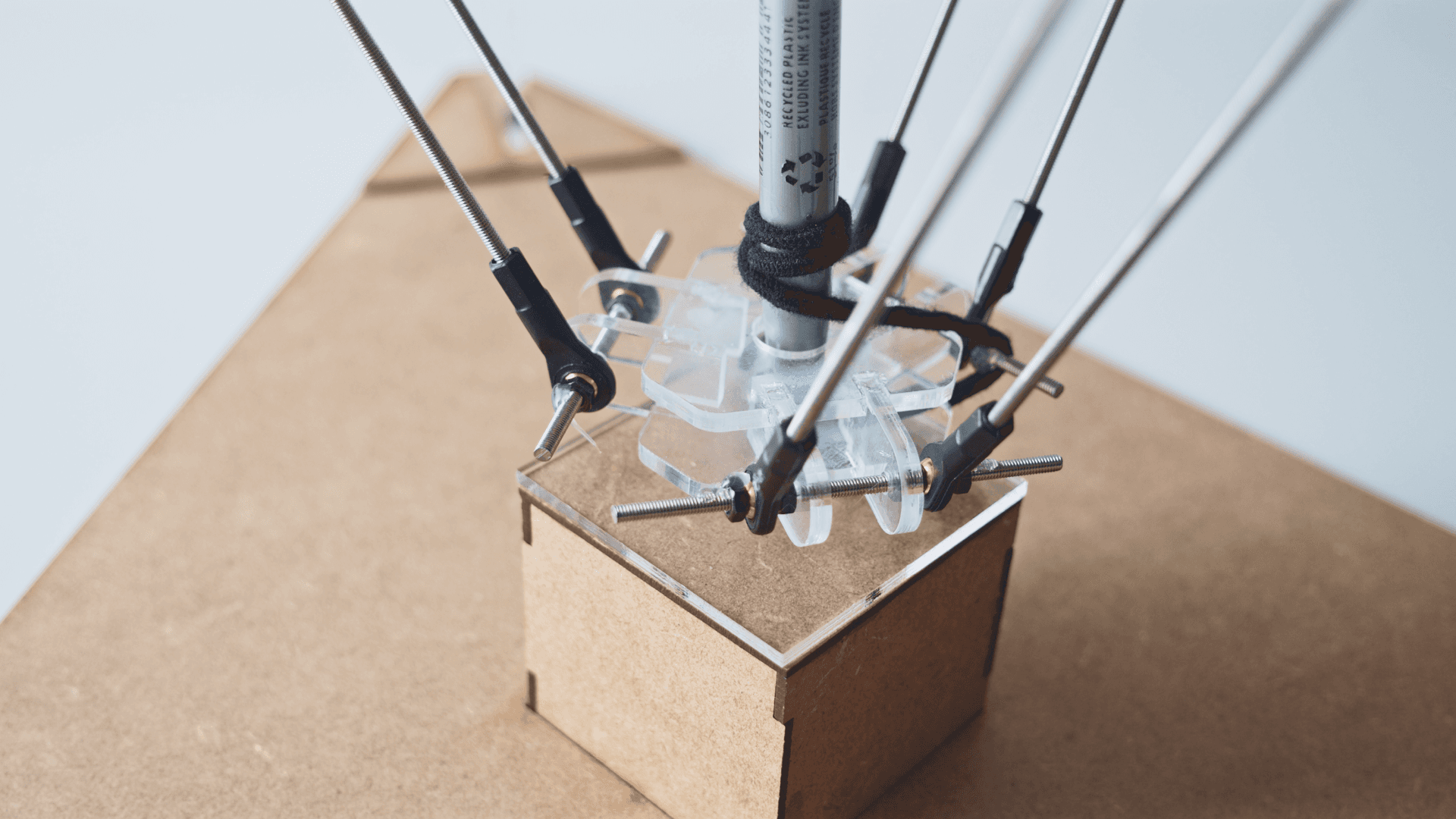

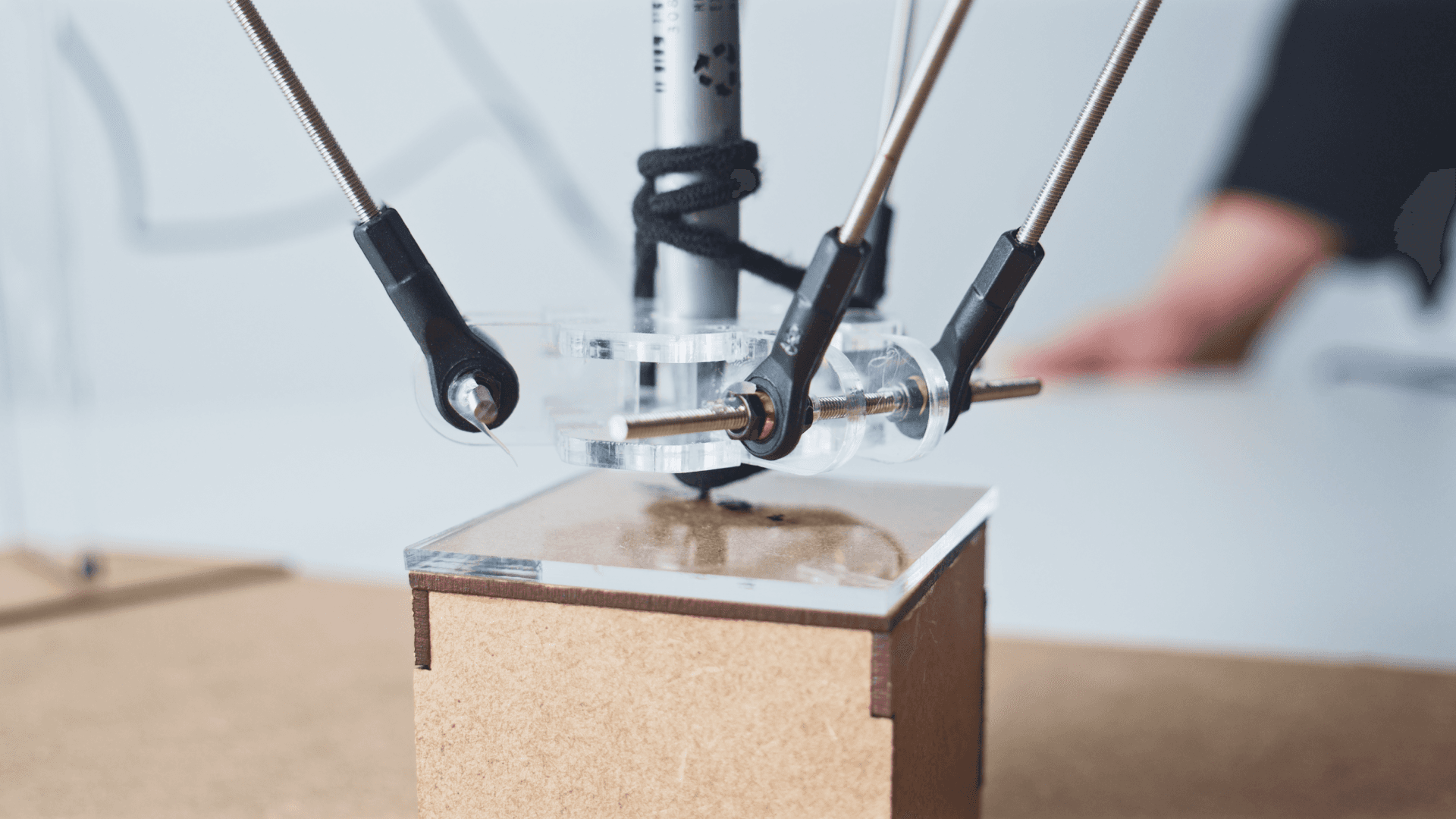

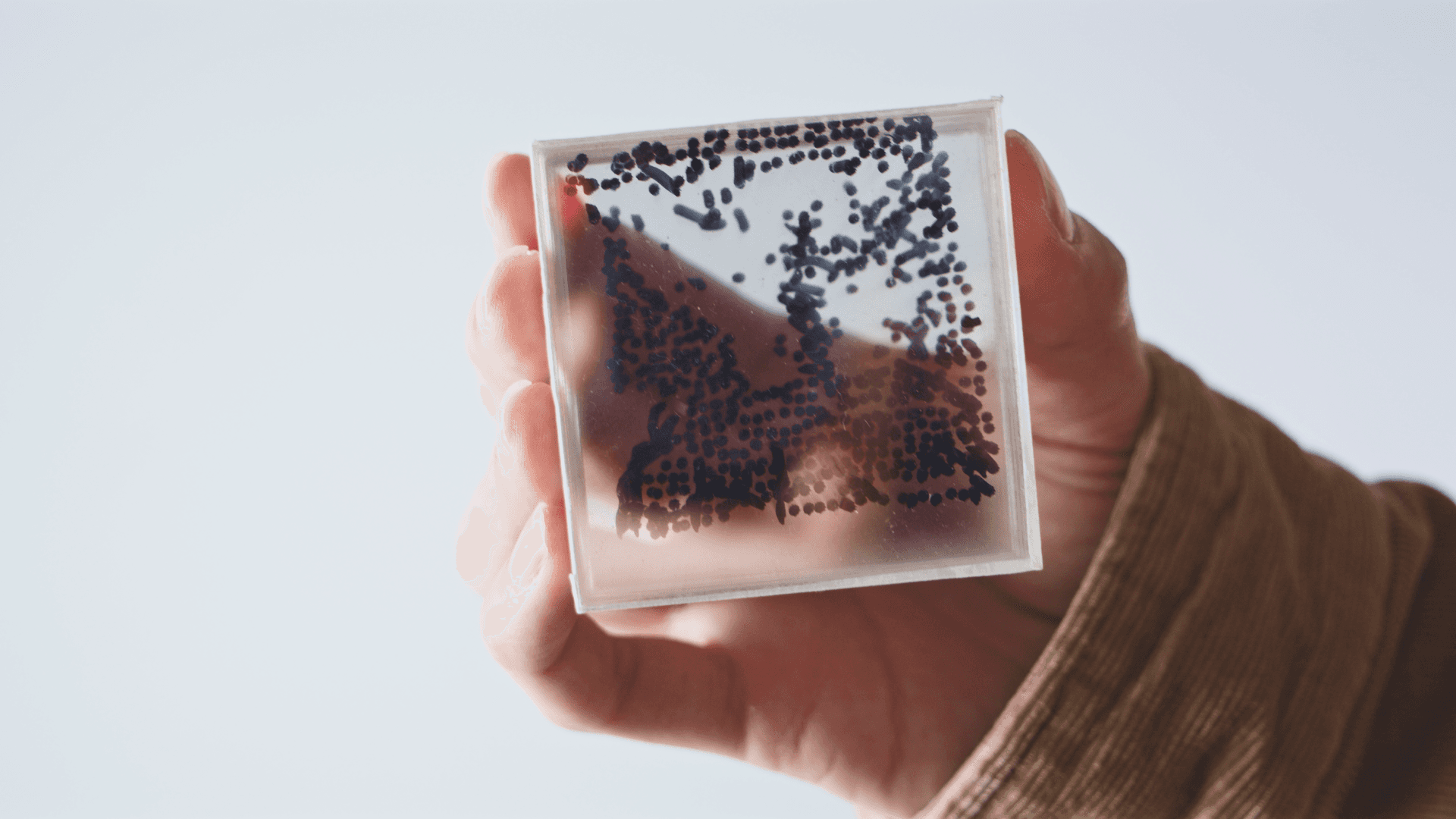

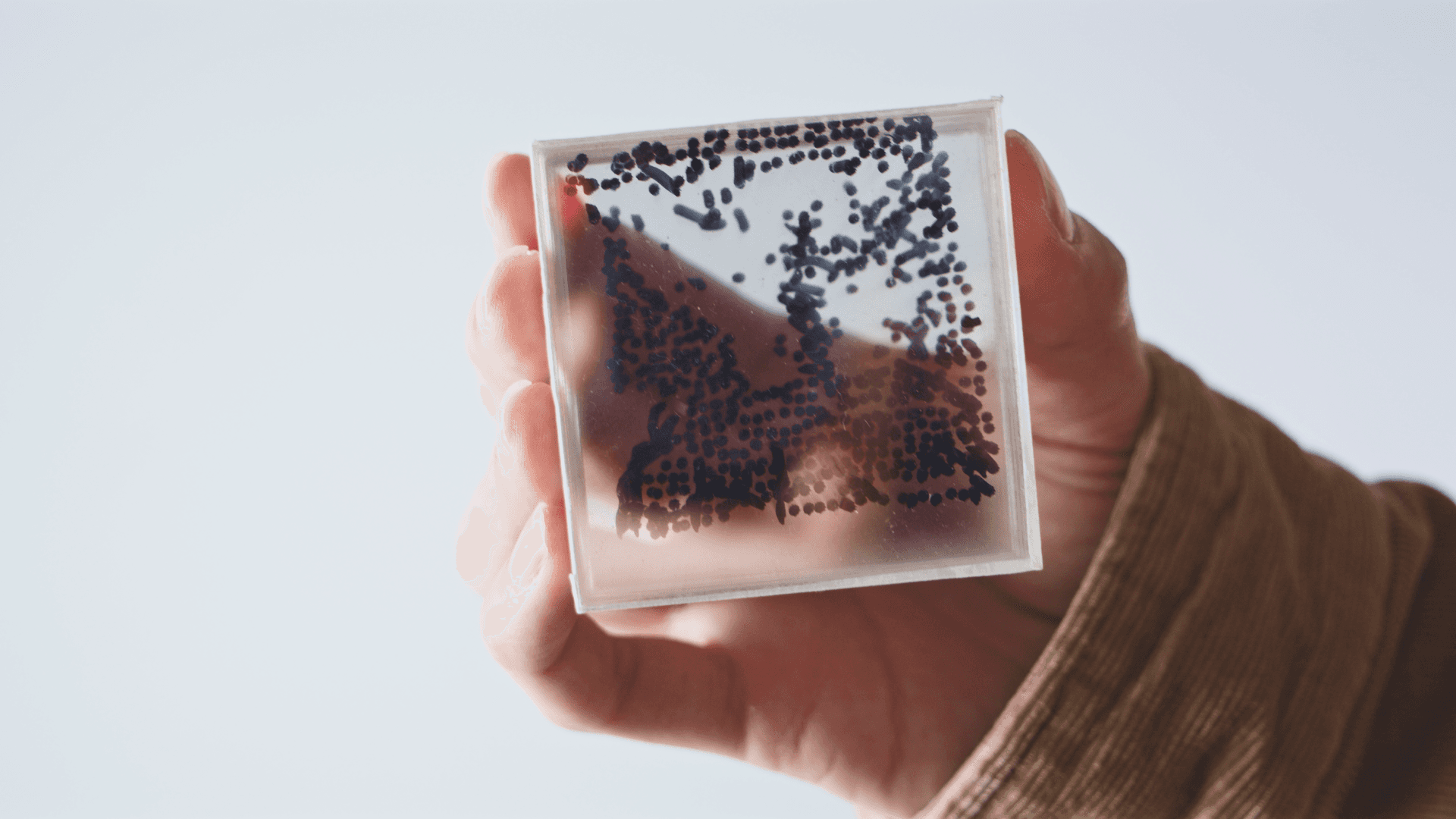

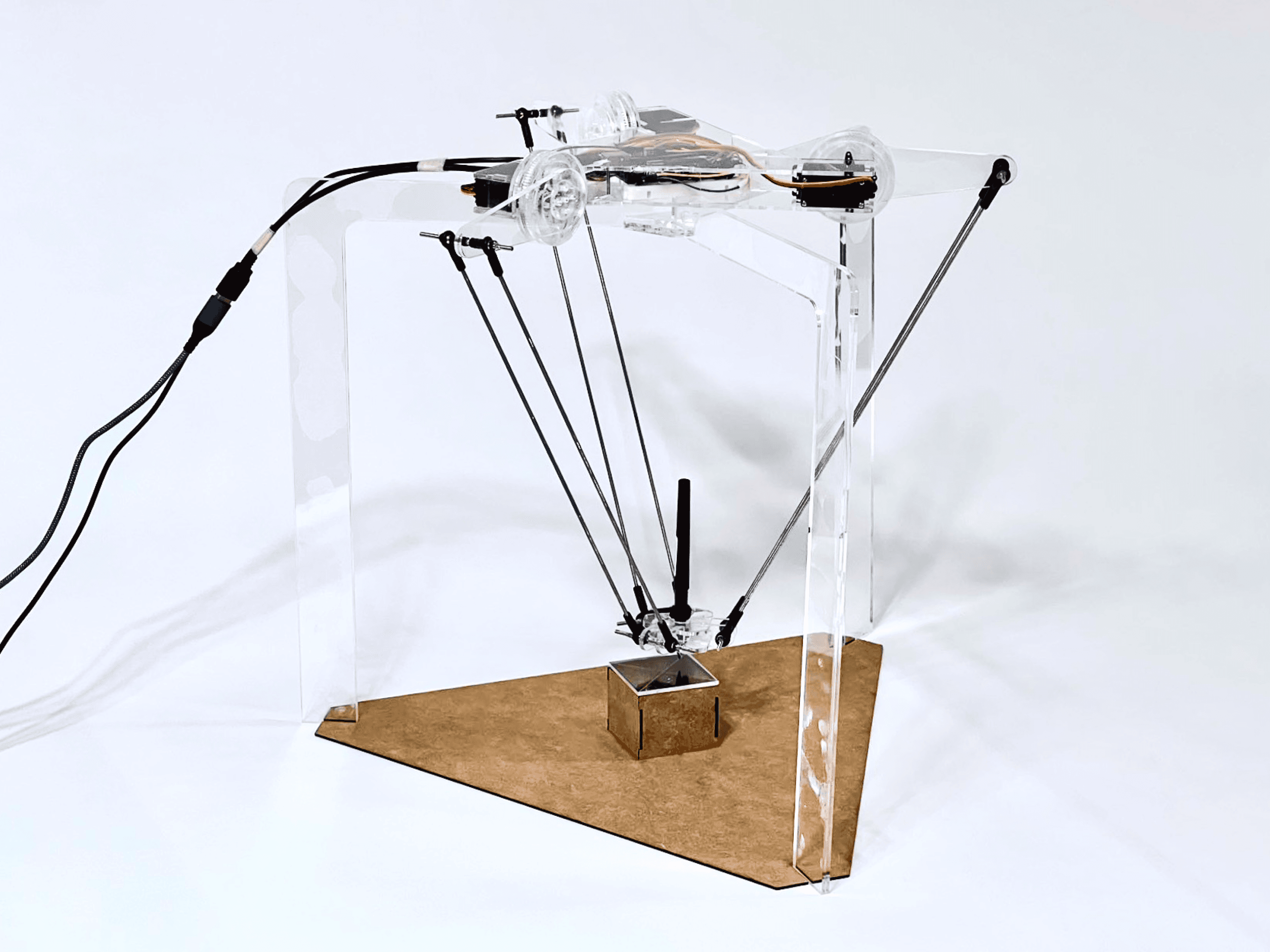

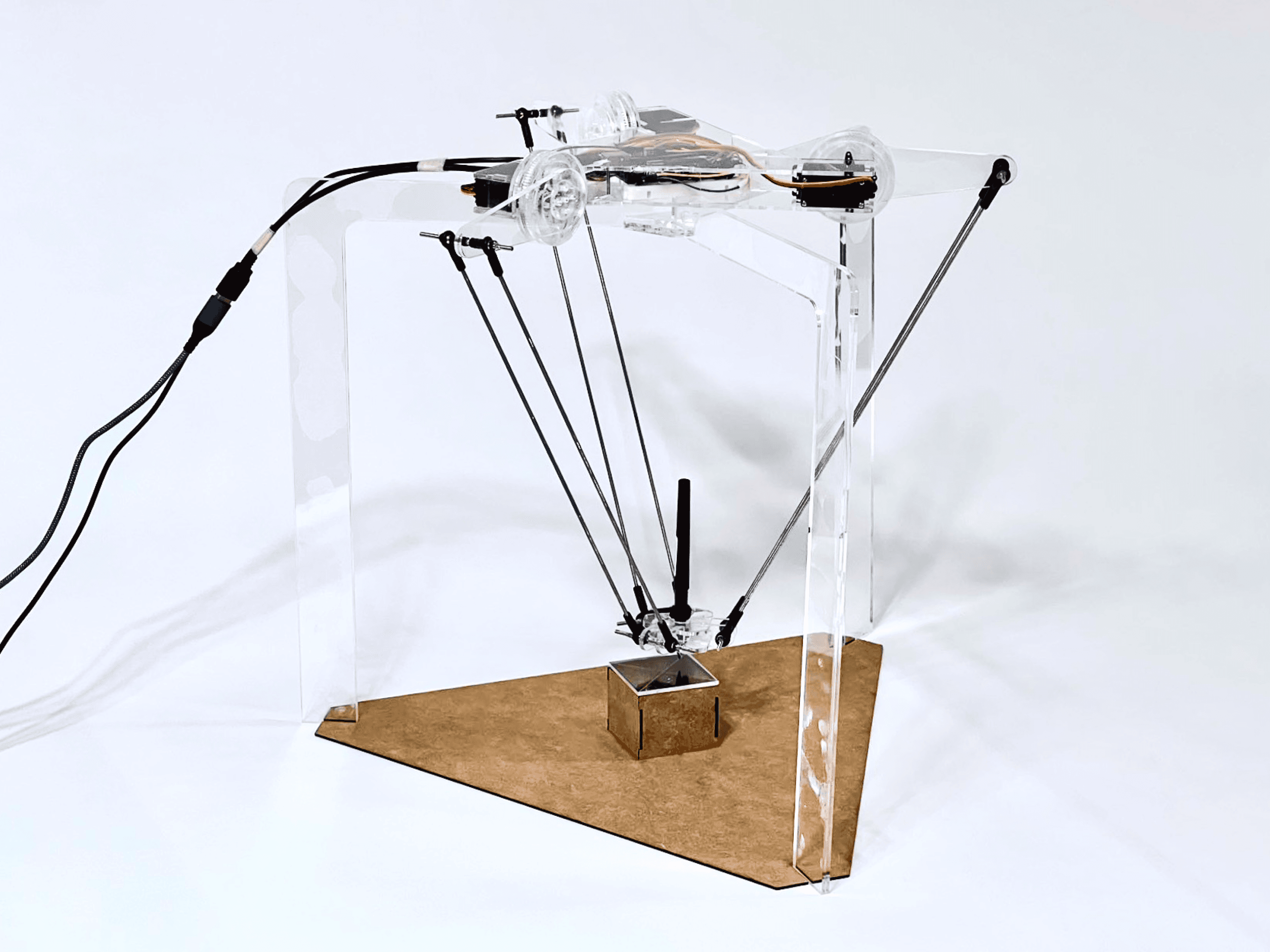

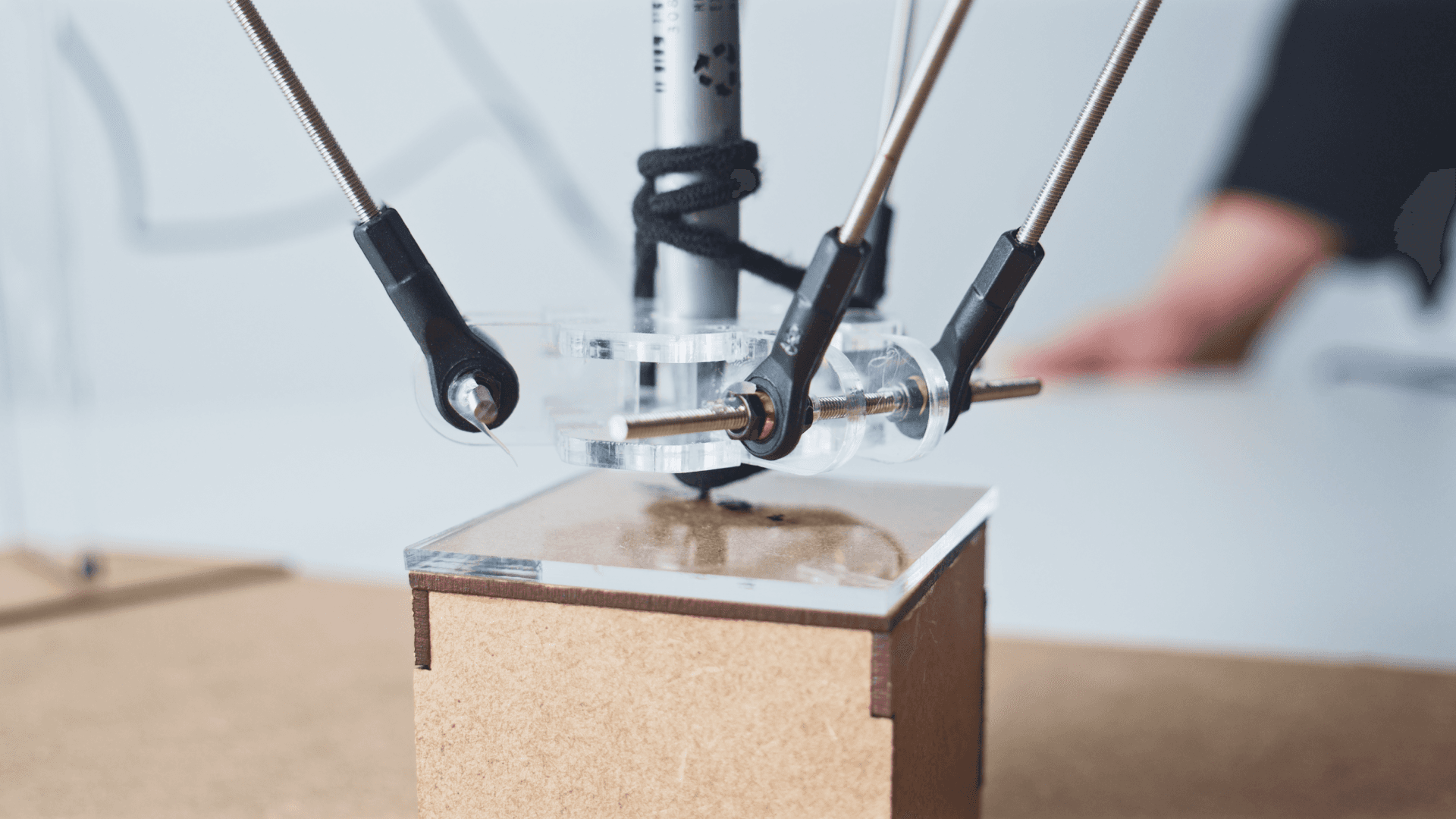

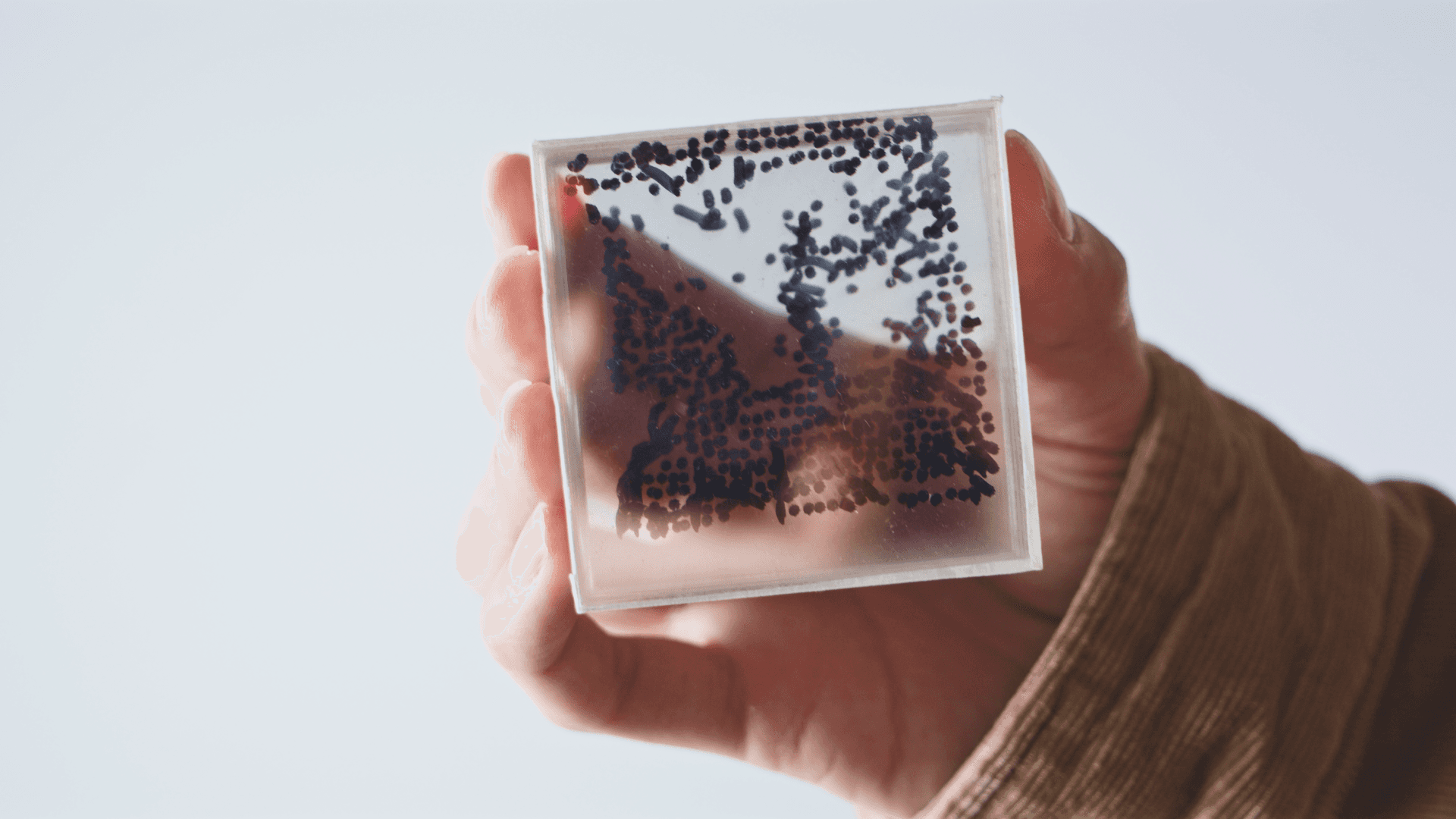

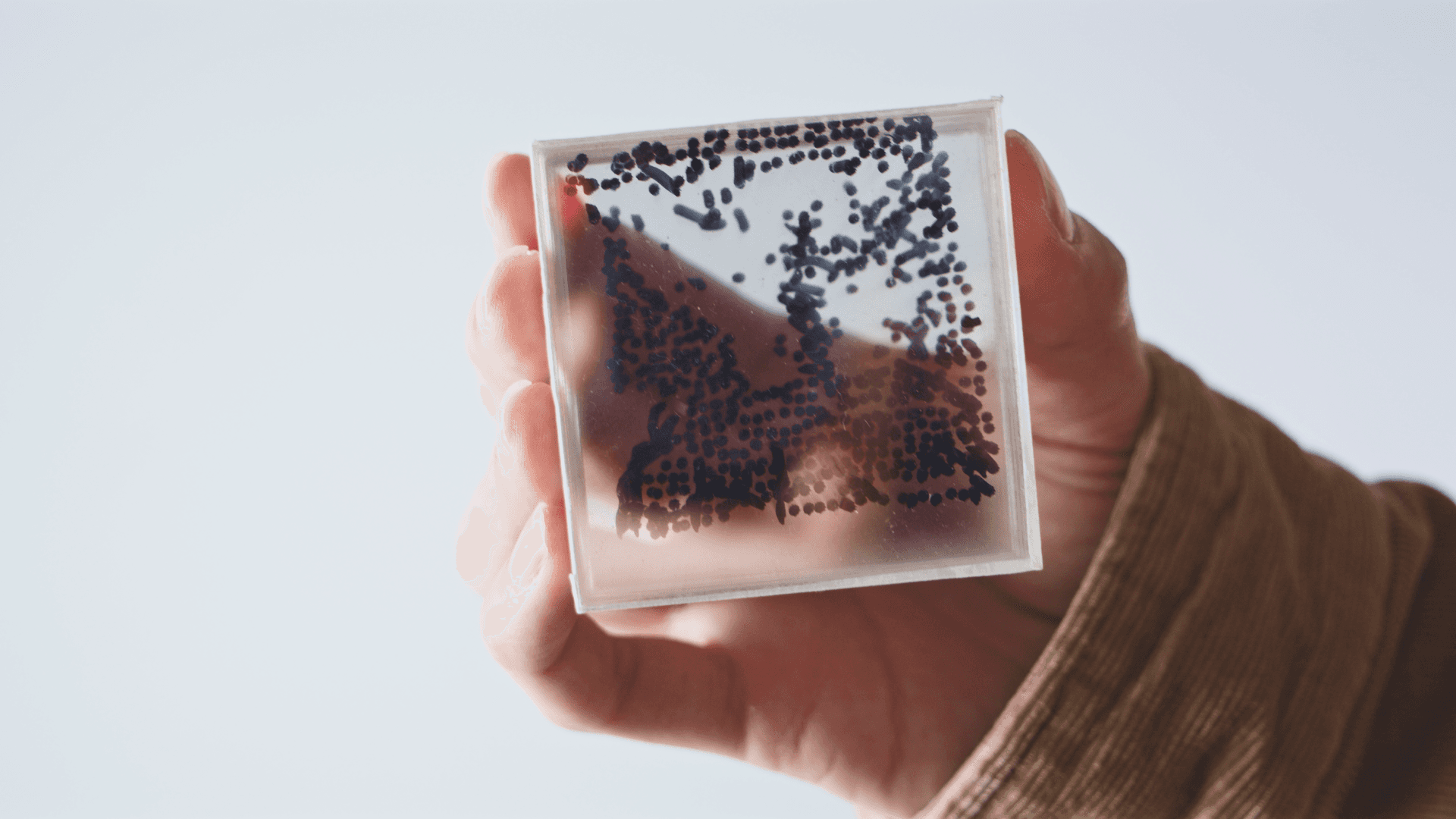

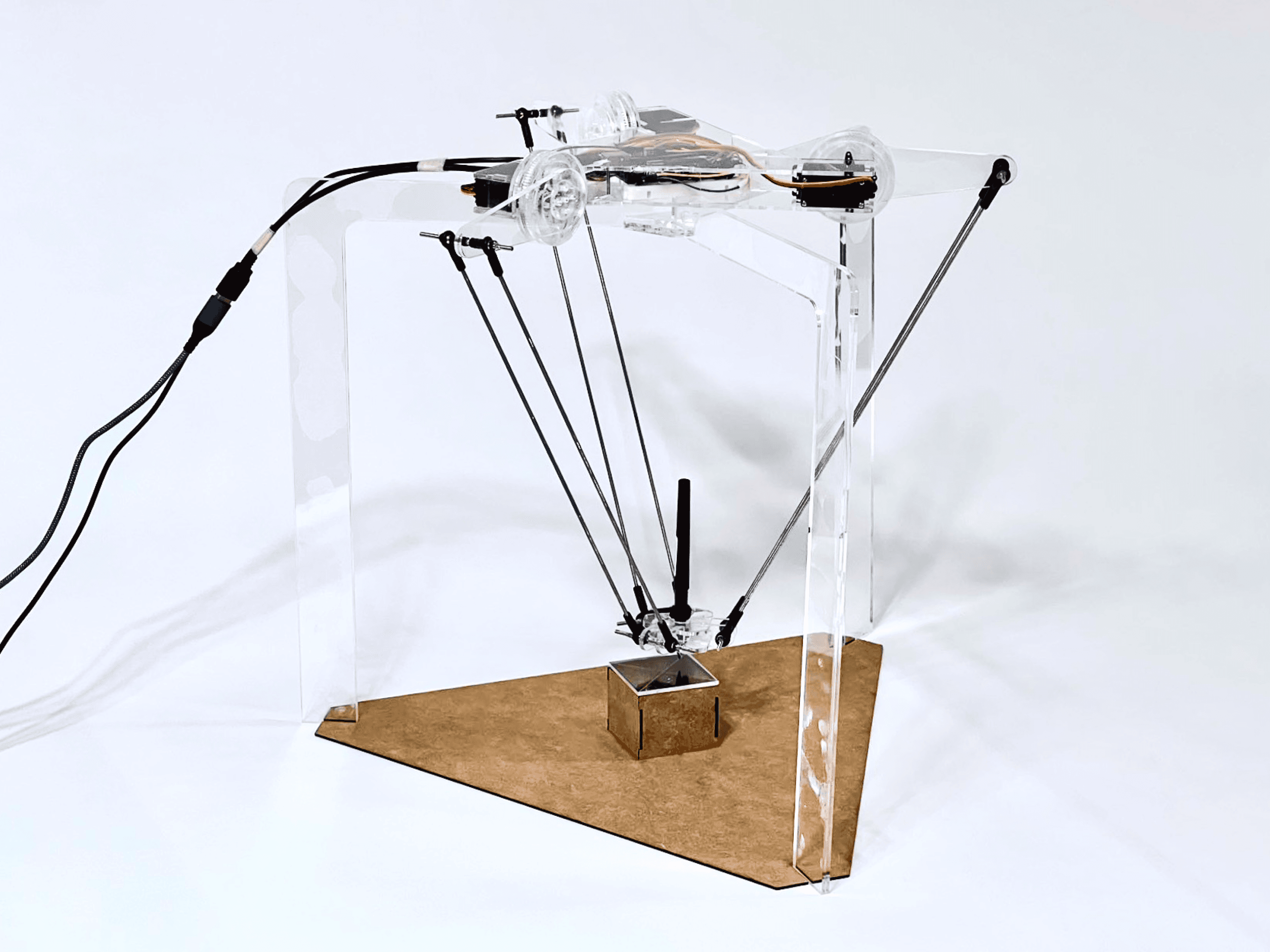

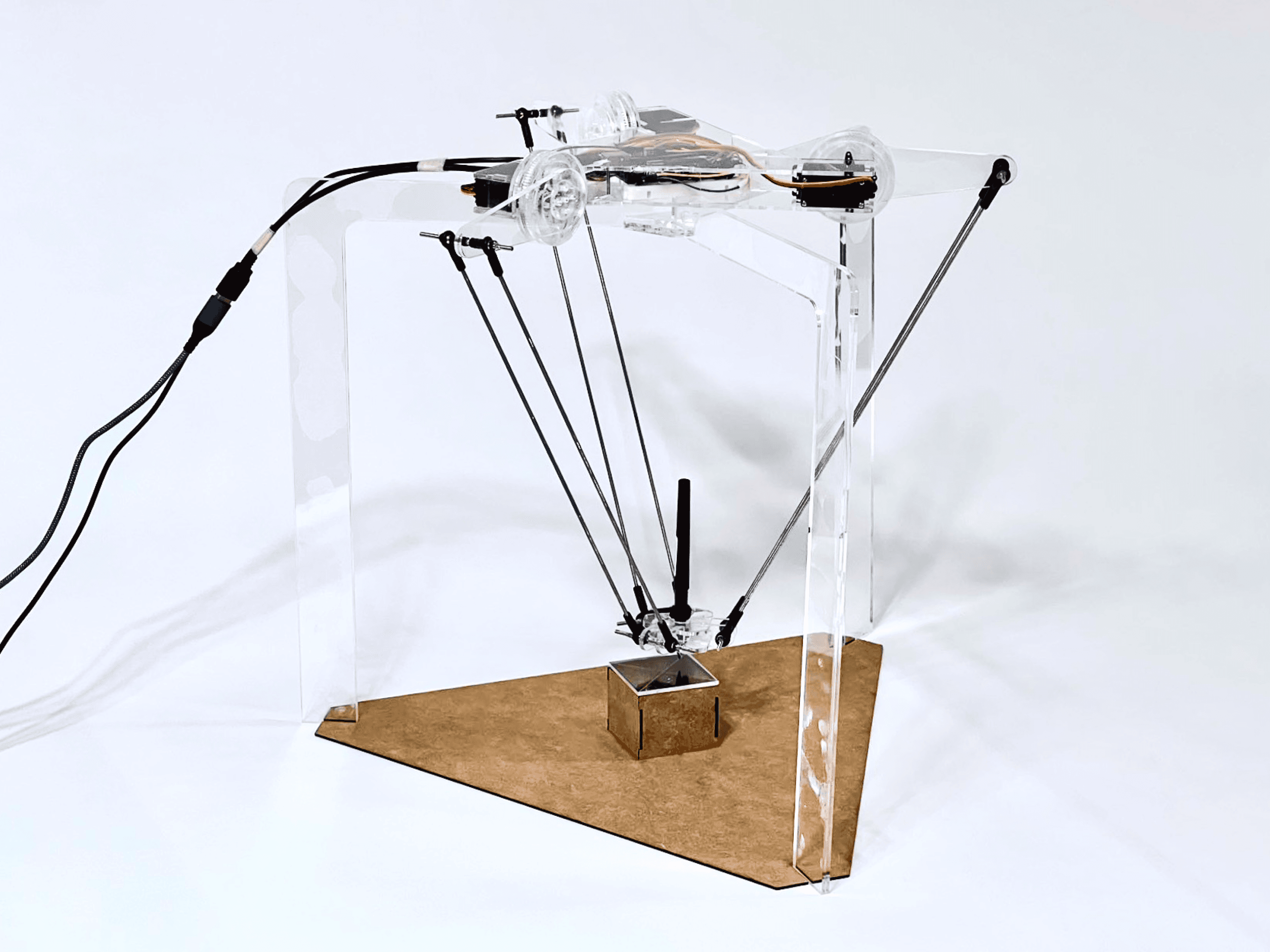

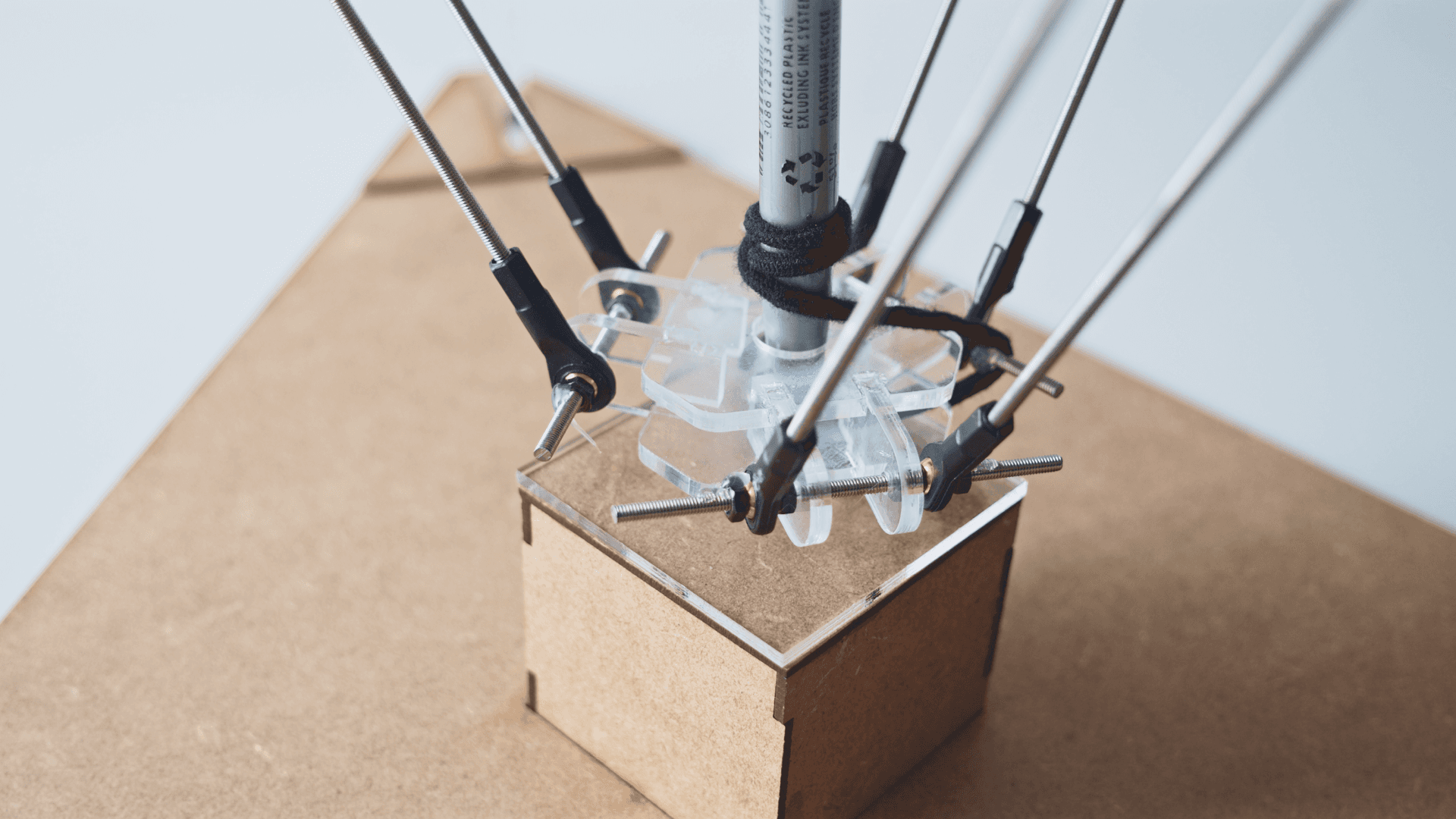

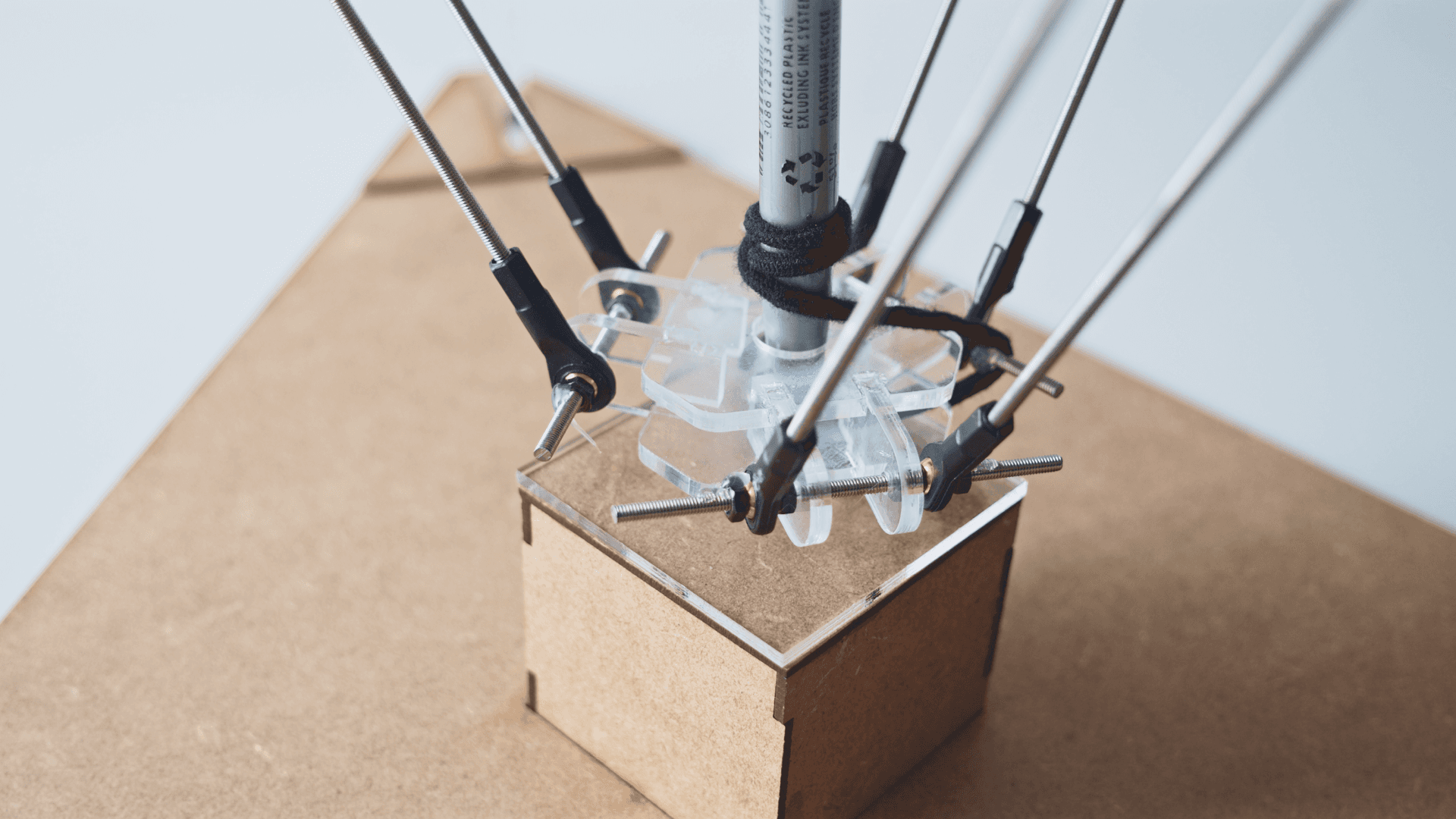

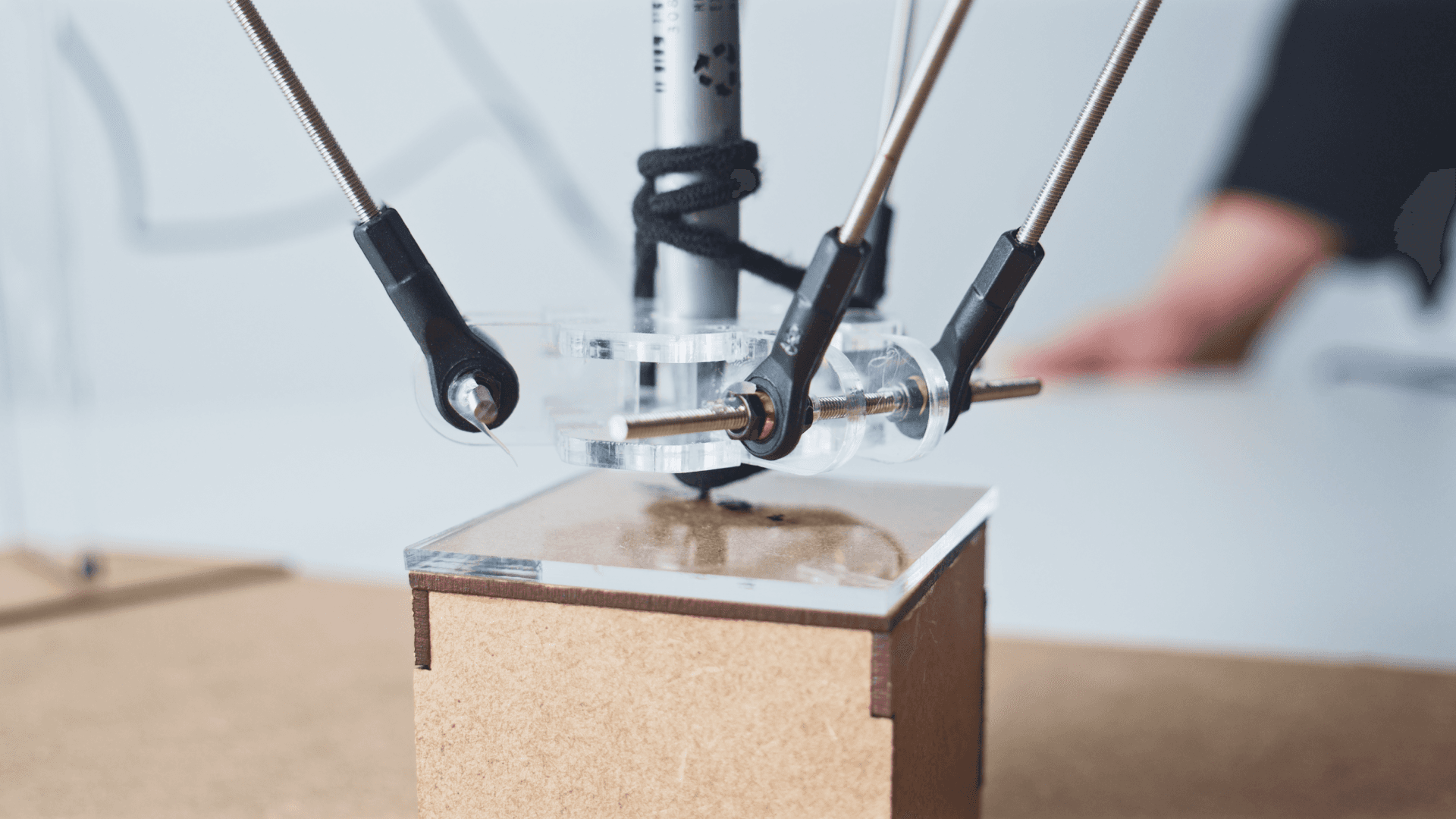

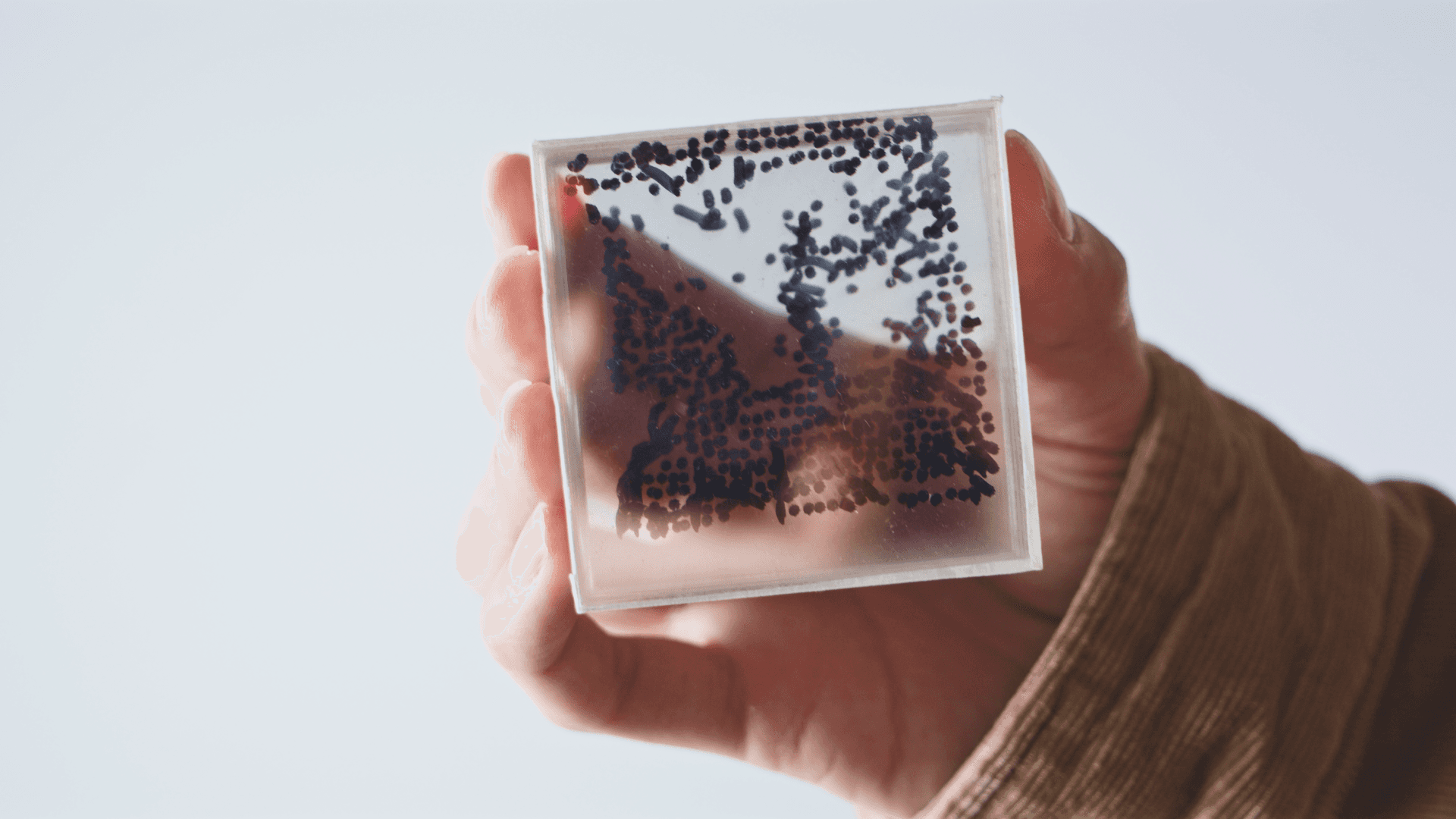

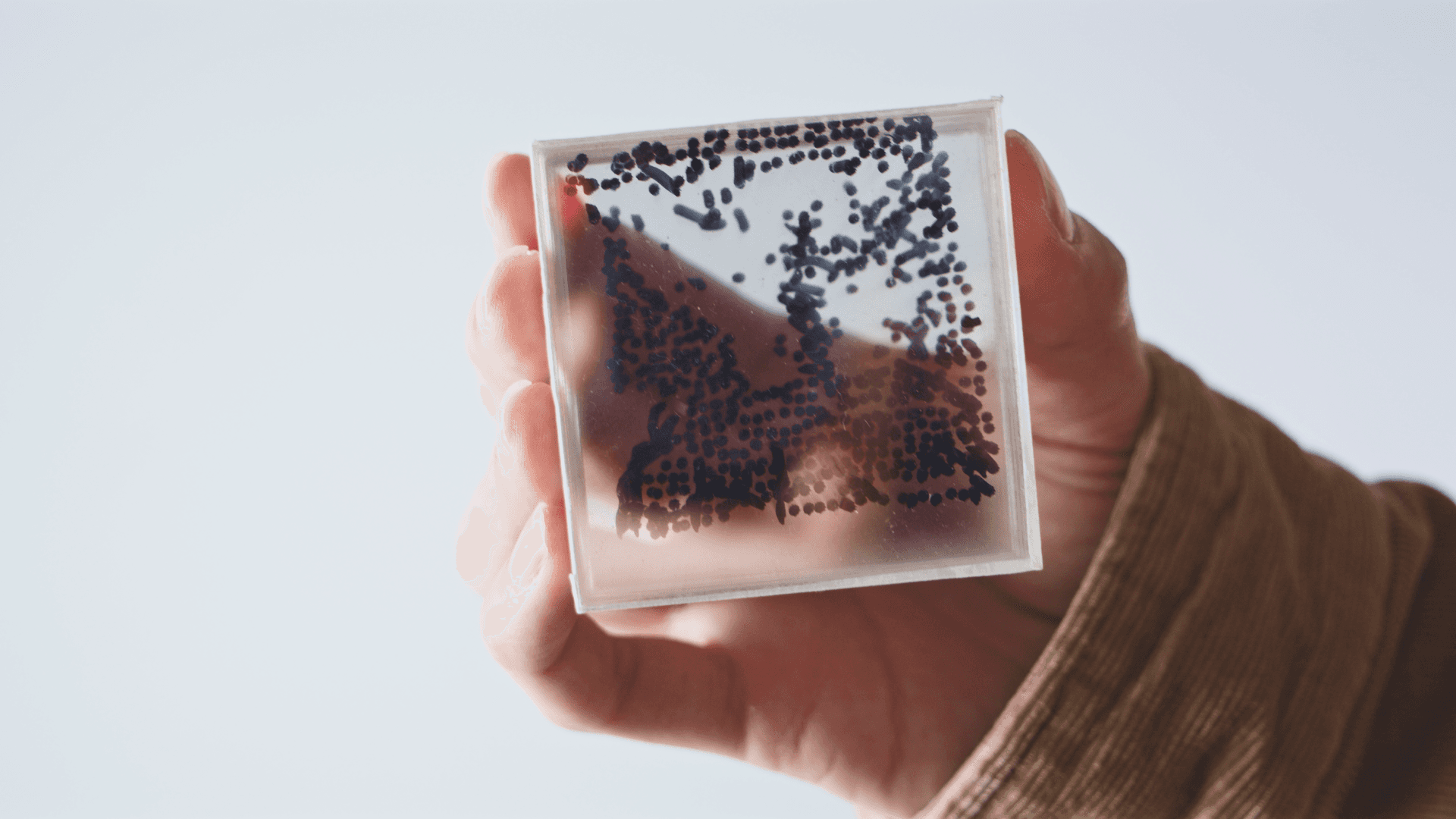

DeePixel reimagines photo presentation by transforming images into 3D experiences. By drawing dense dots on each layer of a stack of acrylic plates, it illustrates photos in three dimensions, deepening the emotional connection between the audience and the scene captured in the photograph.

Context

Photos capture fleeting moments, preserving our precious memories in still frames. Yet, these images are traditionally flat. Imagine if we could bring these snapshots to life by transforming them into three-dimensional displays. This could profoundly enrich our connection to the past, allowing us to relive our cherished memories in a way that feels as real and touching as the moments themselves.

Concept

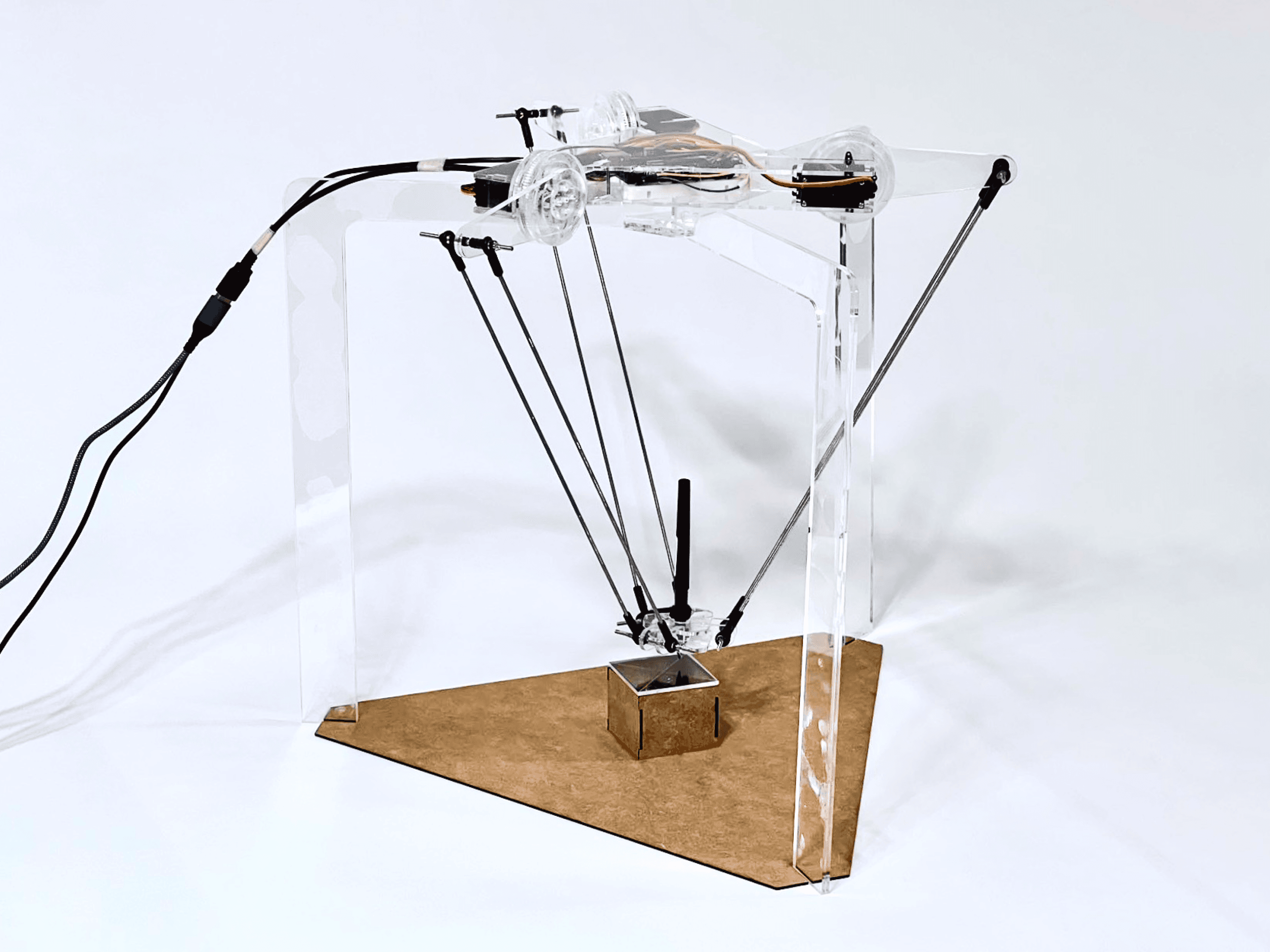

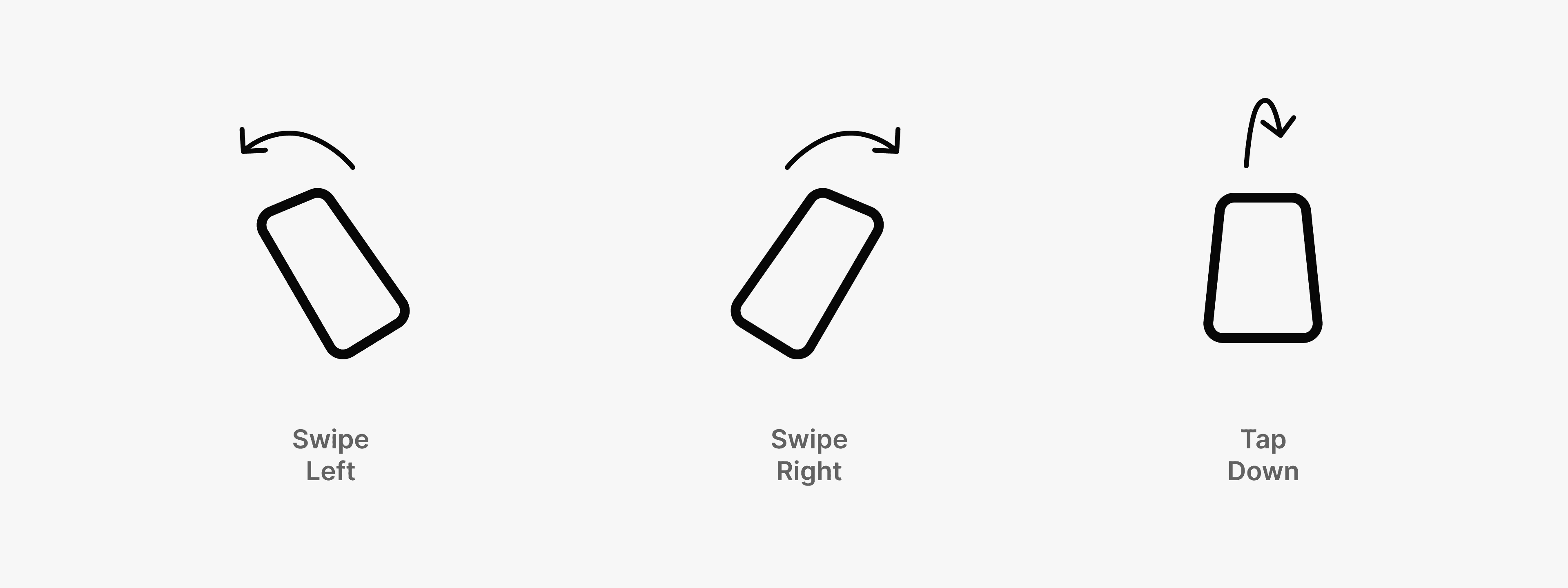

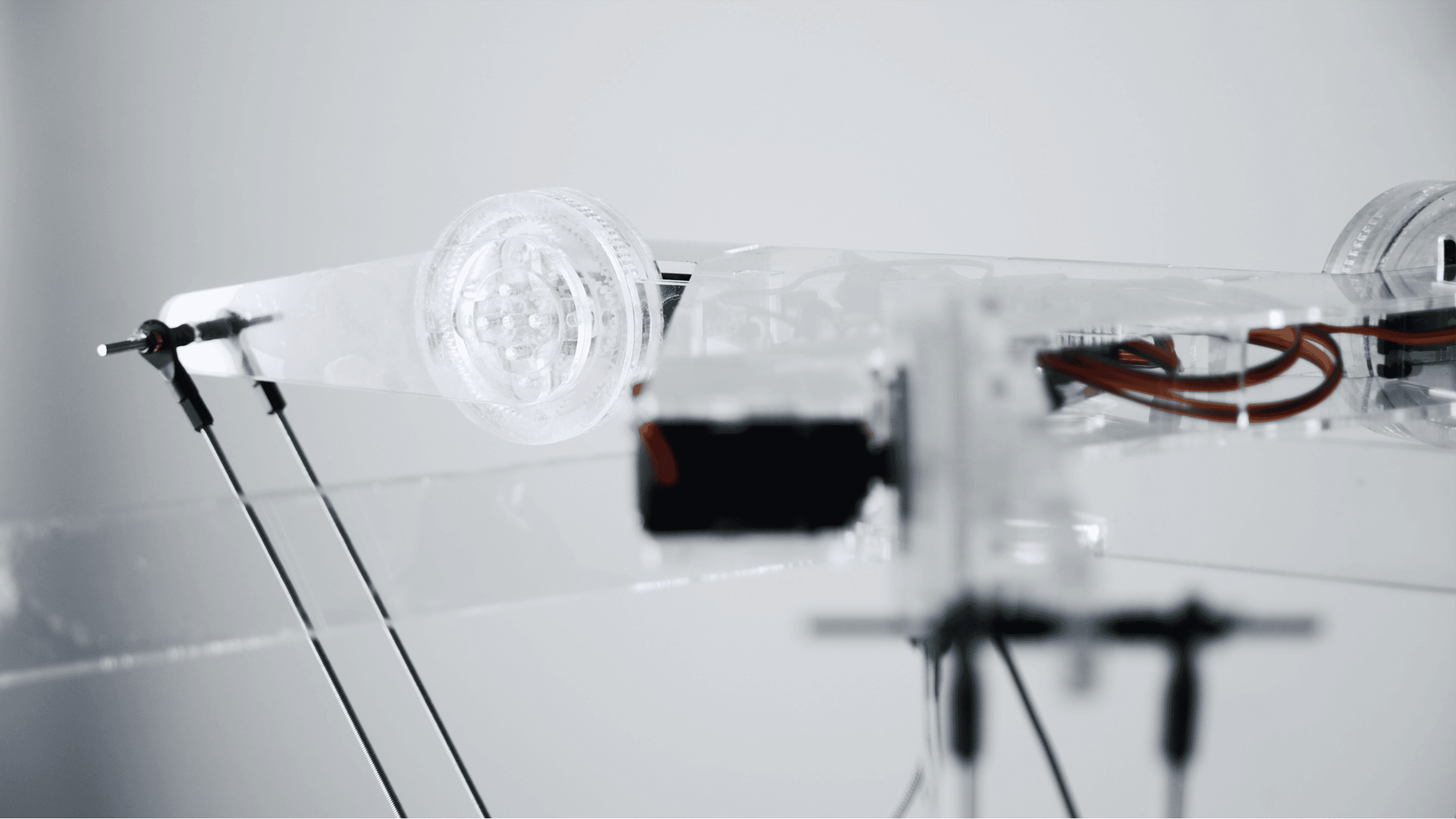

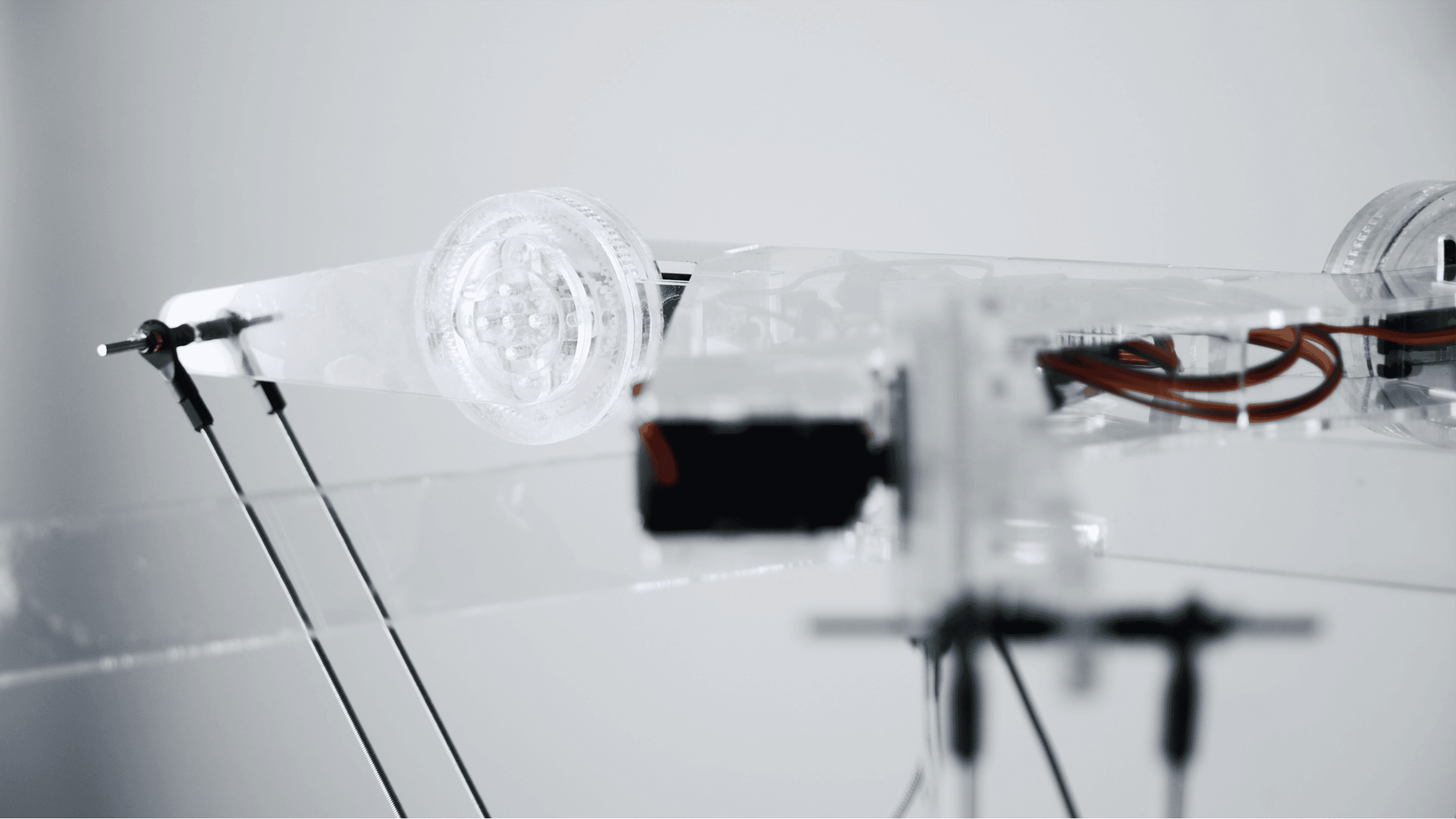

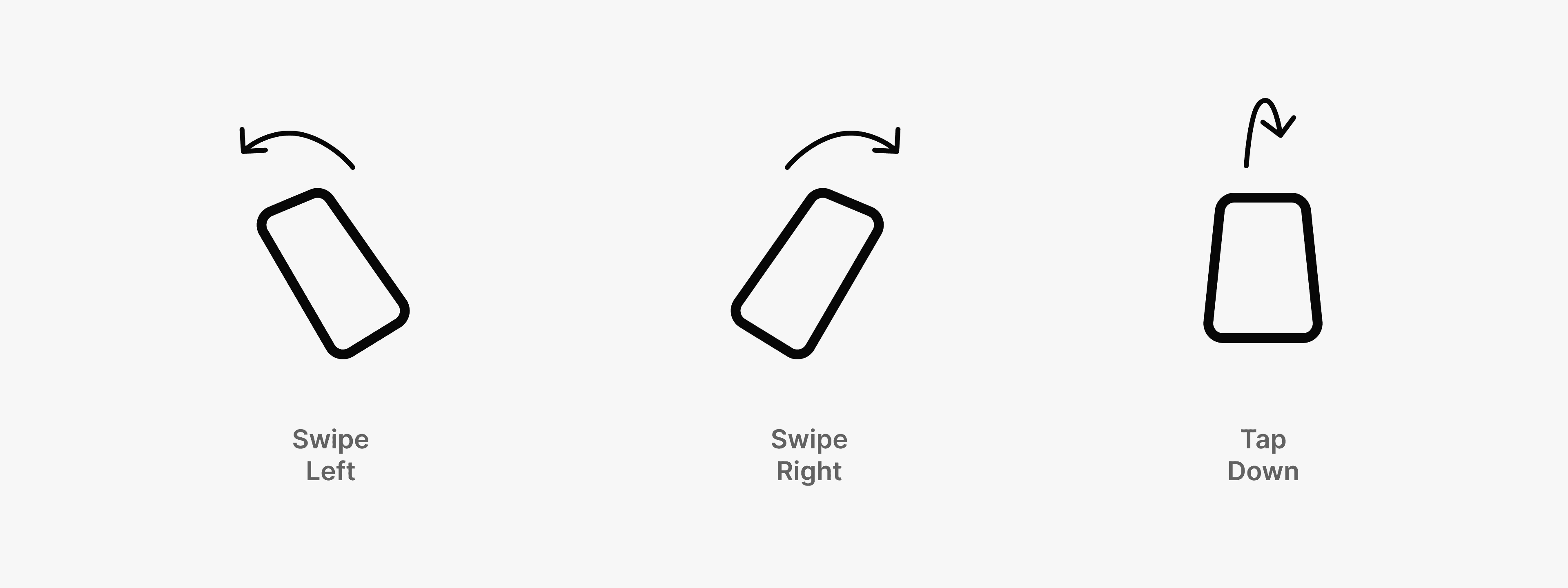

The concept is to create dot-style drawings on a cubic stack of acrylic boards using a delta robot installation, transforming a 2D digital image into a stylised picture with depth information. Machine Learning-powered gesture recognition lets users choose a drawing style, ranging from abstract to detailed, by making gestures with their phone such as swiping left and right. Once a style is selected, the installation starts drawing on each acrylic sheet.
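The sketch below shows one way a single "detail" setting could move a dot drawing from abstract to detailed. It uses grid-based stippling and assumes a grayscale image given as a 2D list of values from 0 (black) to 255 (white). The function name `stipple`, the `detail` scale of 1 to 4, and the mapping from detail to grid spacing are all hypothetical. They are not the project's actual parameters.

```python
import random
from typing import List, Tuple

Dot = Tuple[int, int]  # (x, y) in pixel coordinates


def stipple(gray: List[List[int]], detail: int, seed: int = 0) -> List[Dot]:
    """Place dots on a grid whose spacing shrinks as `detail` grows.

    Hypothetical mapping: detail 1 (abstract) samples every 8th pixel,
    detail 4 (detailed) samples every pixel. Each sampled pixel gets a dot
    with probability equal to its darkness, so dark areas become dense.
    """
    if not 1 <= detail <= 4:
        raise ValueError("detail must be between 1 and 4")
    step = 2 ** (4 - detail)          # 8, 4, 2 or 1 pixels between samples
    rng = random.Random(seed)         # fixed seed -> reproducible drawings
    dots: List[Dot] = []
    for y in range(0, len(gray), step):
        for x in range(0, len(gray[0]), step):
            darkness = 1.0 - gray[y][x] / 255.0
            if rng.random() < darkness:
                dots.append((x, y))
    return dots
```

On an all-black 16 × 16 image, `detail=1` yields 4 dots and `detail=4` yields 256. A swipe gesture could then simply step `detail` up or down.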

Process

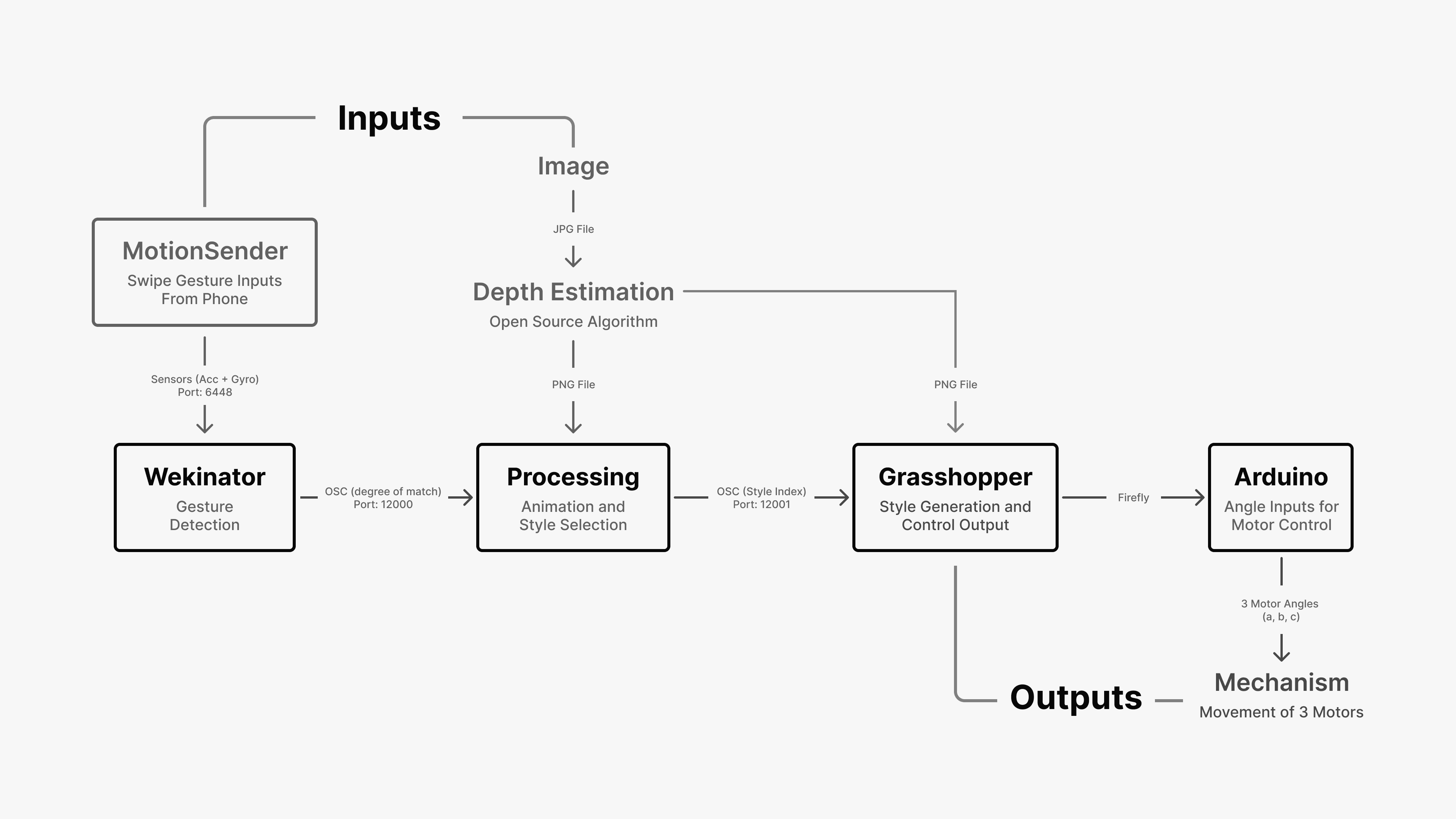

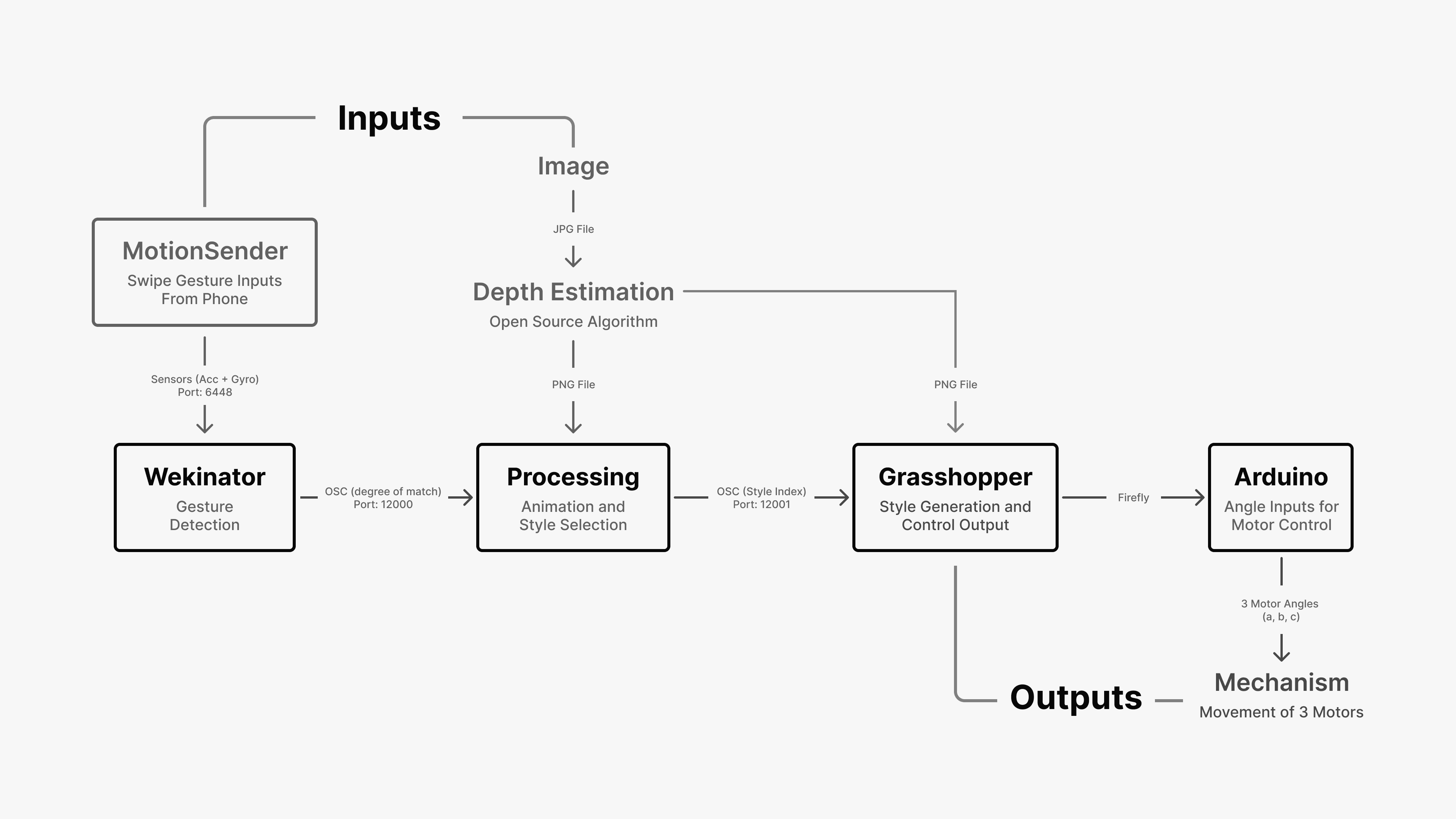

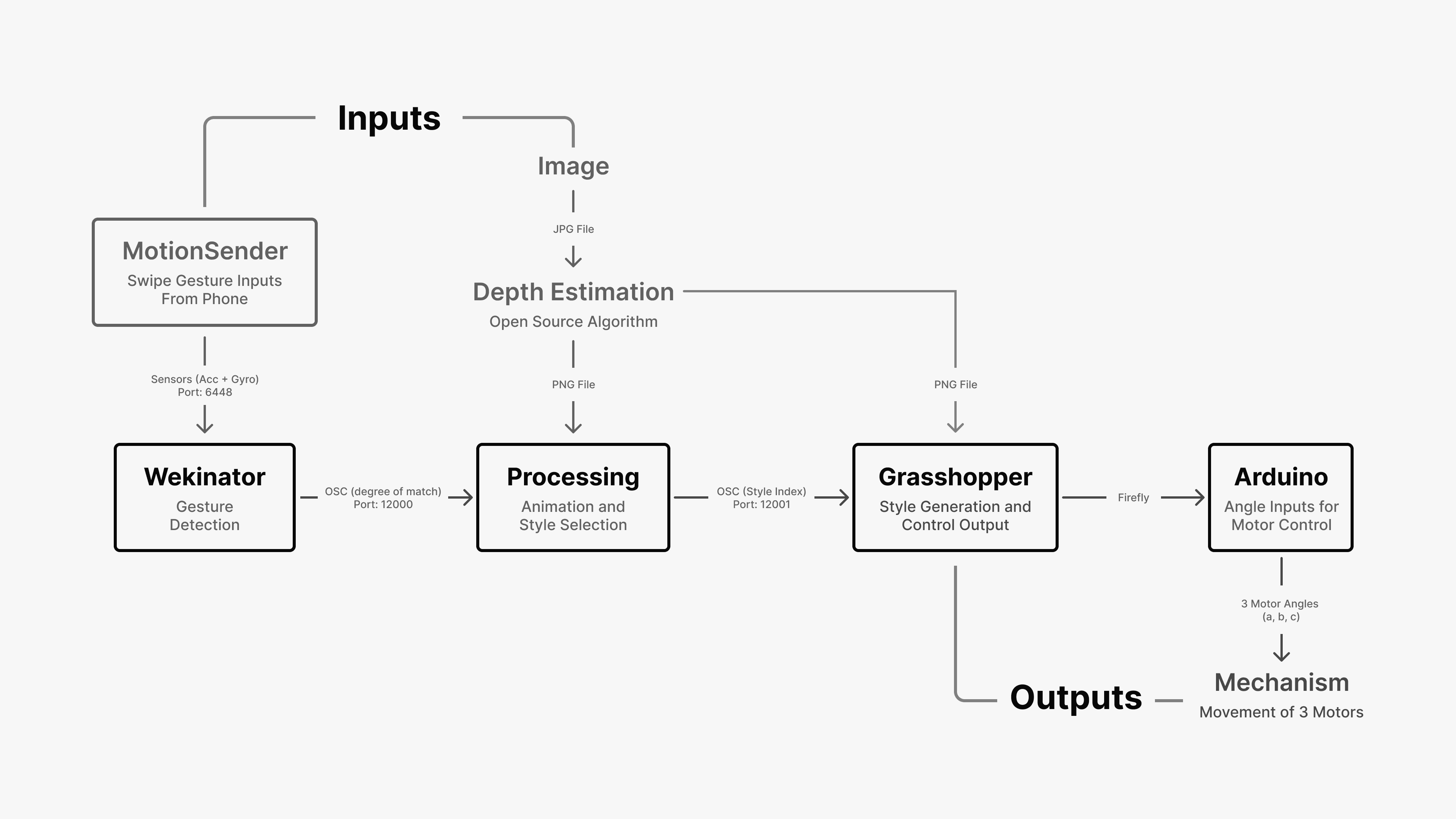

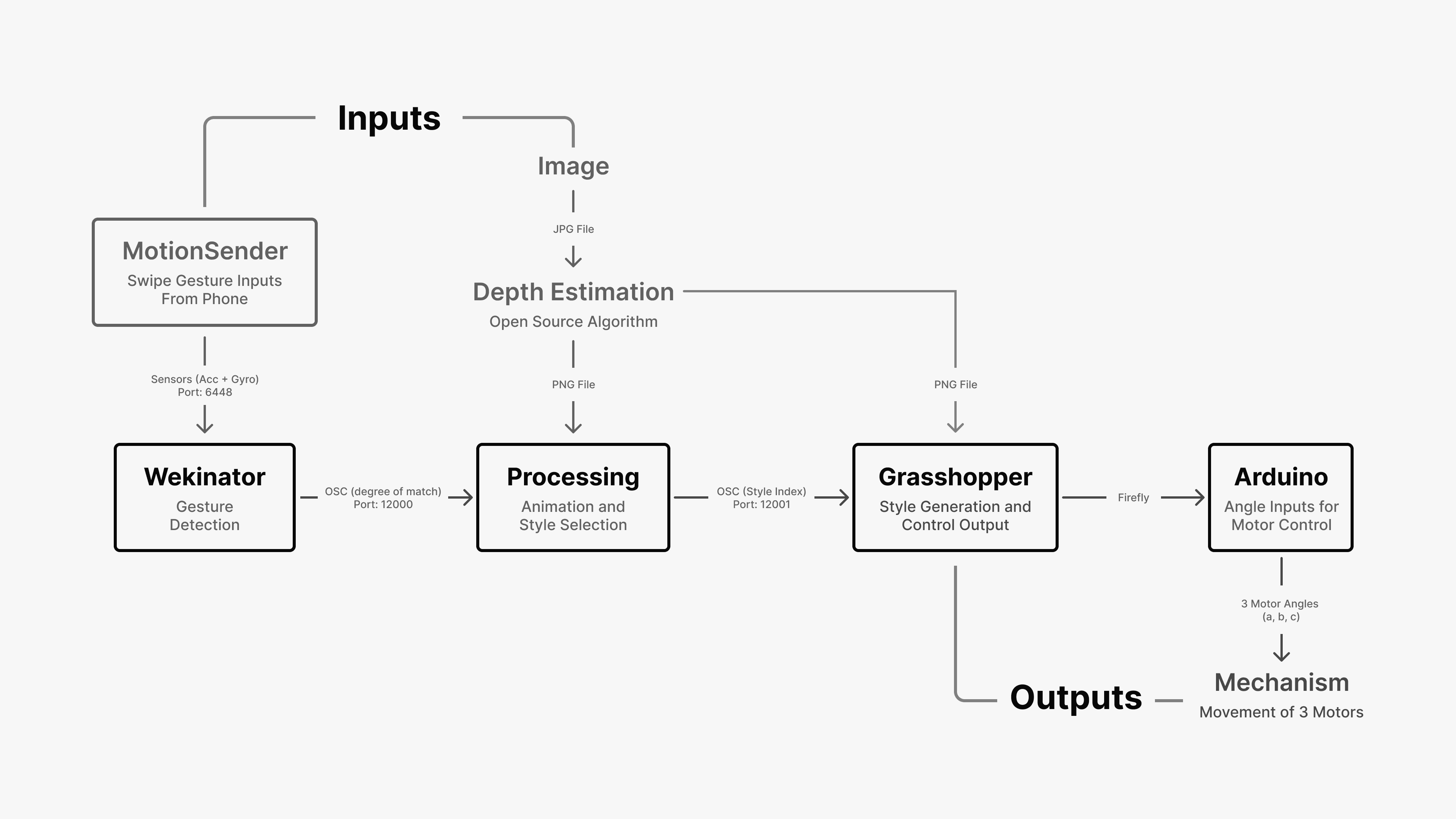

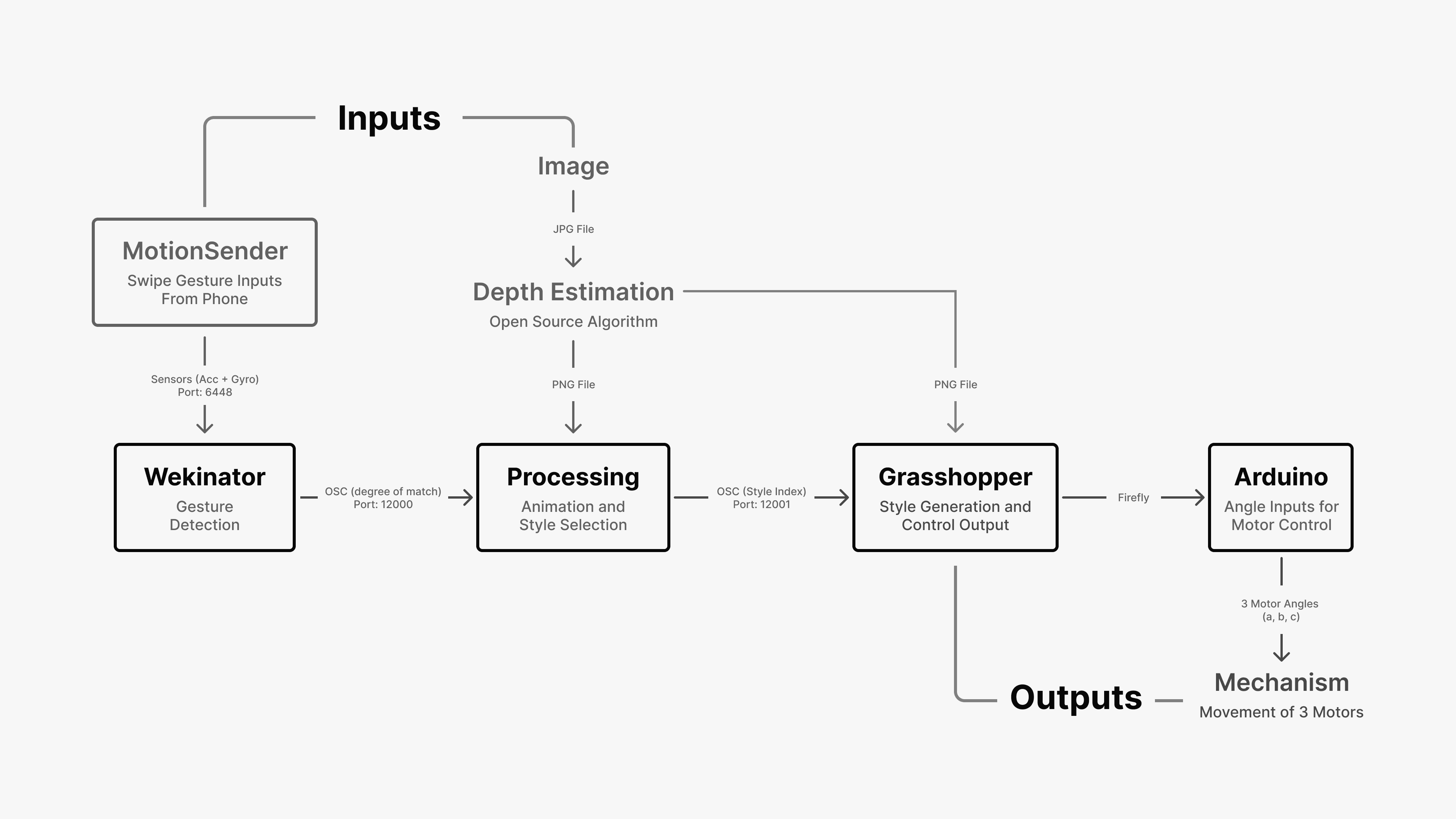

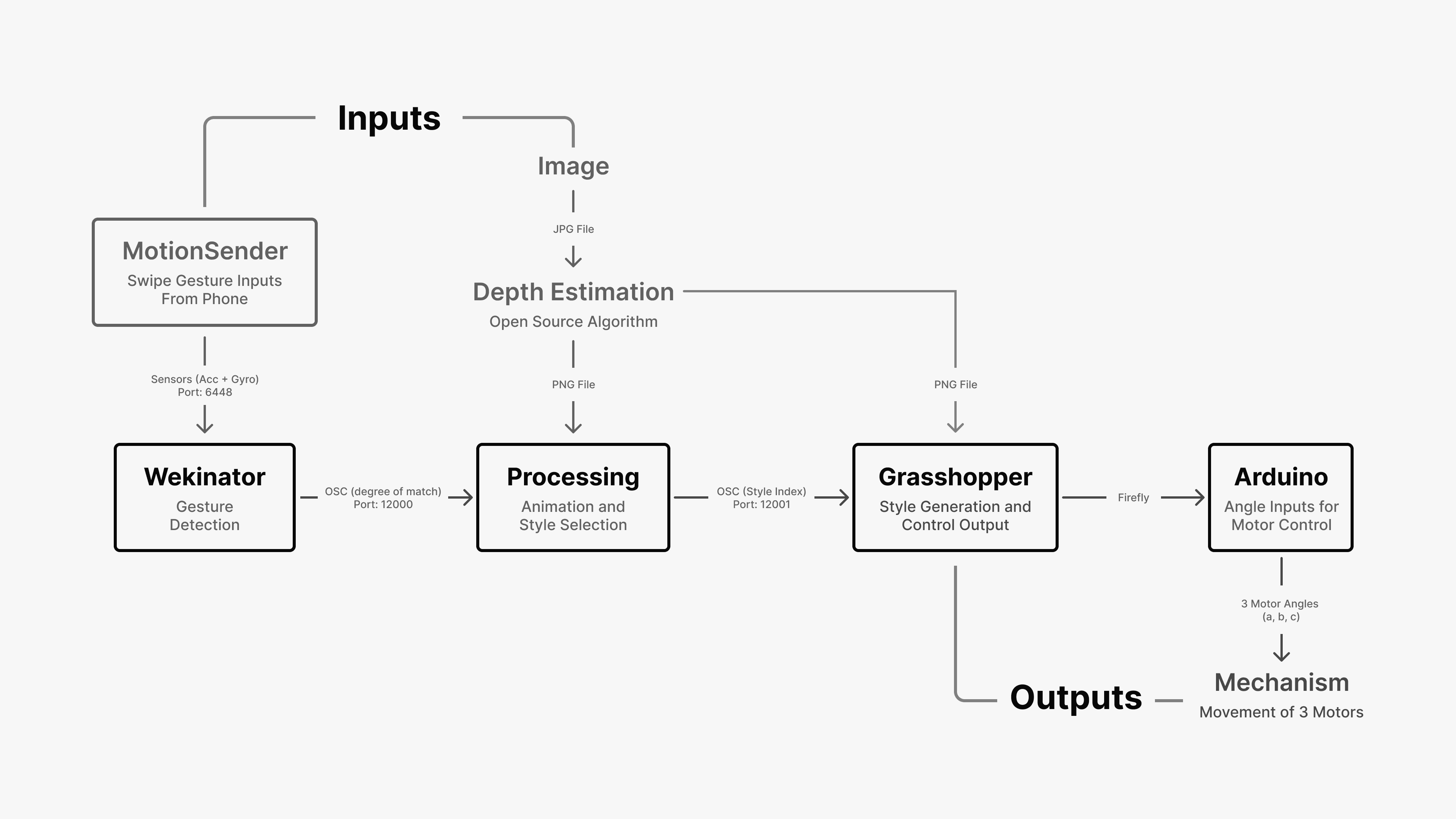

To achieve the transformation of a 2D image into a 3D display, the project is divided into three main parts: image processing, mechanical design, and interaction design, which incorporates gesture recognition through Machine Learning.

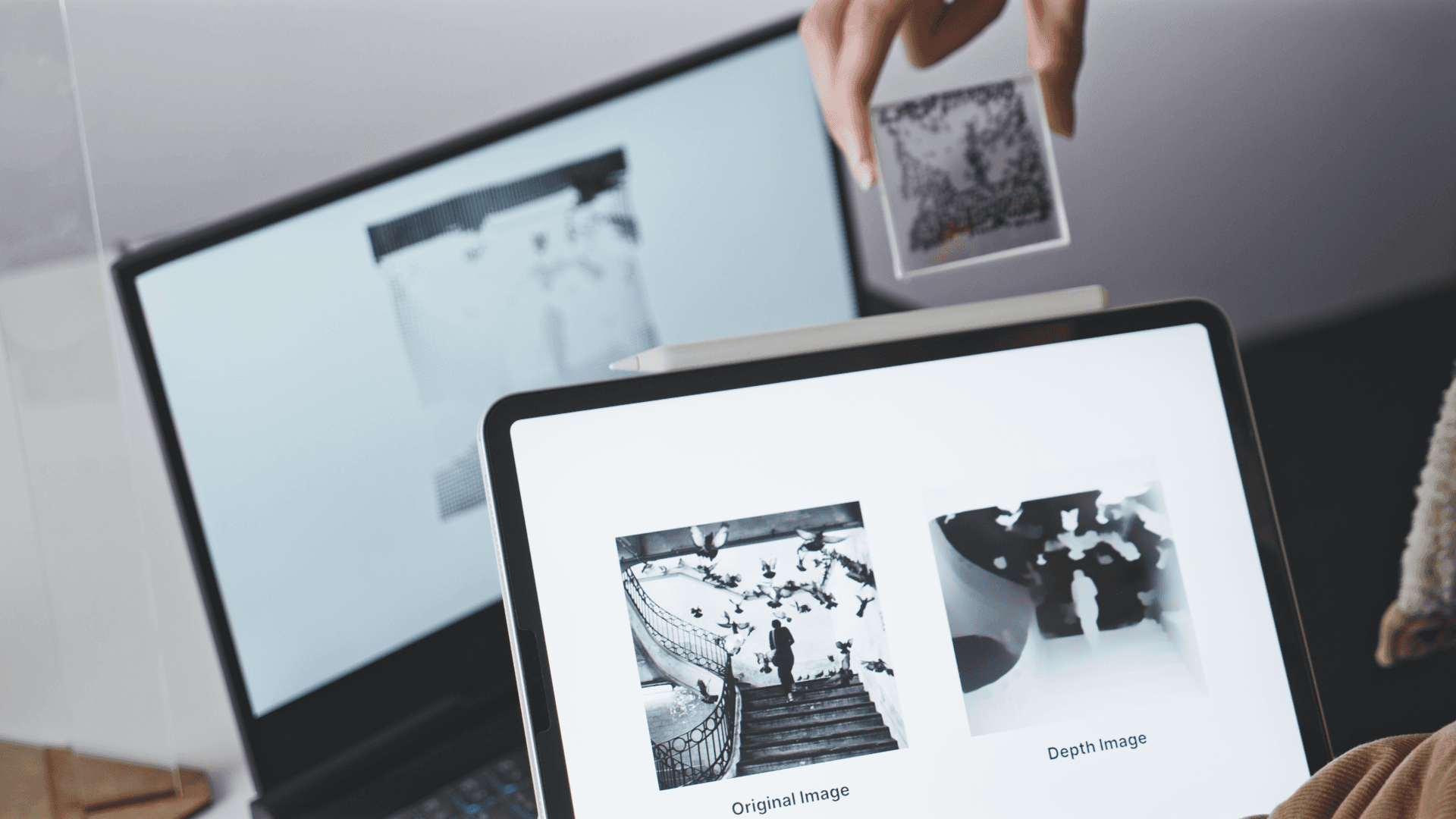

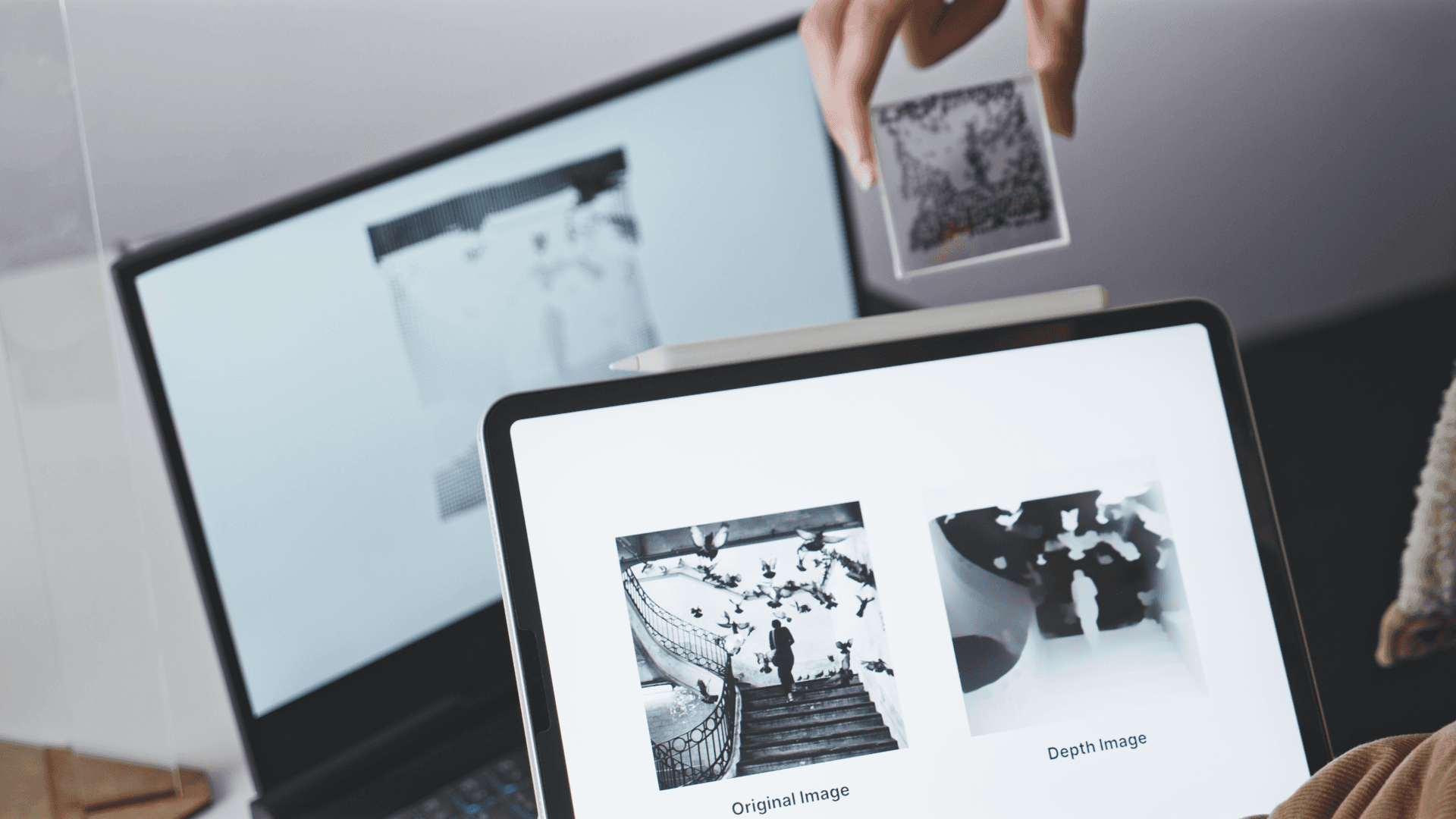

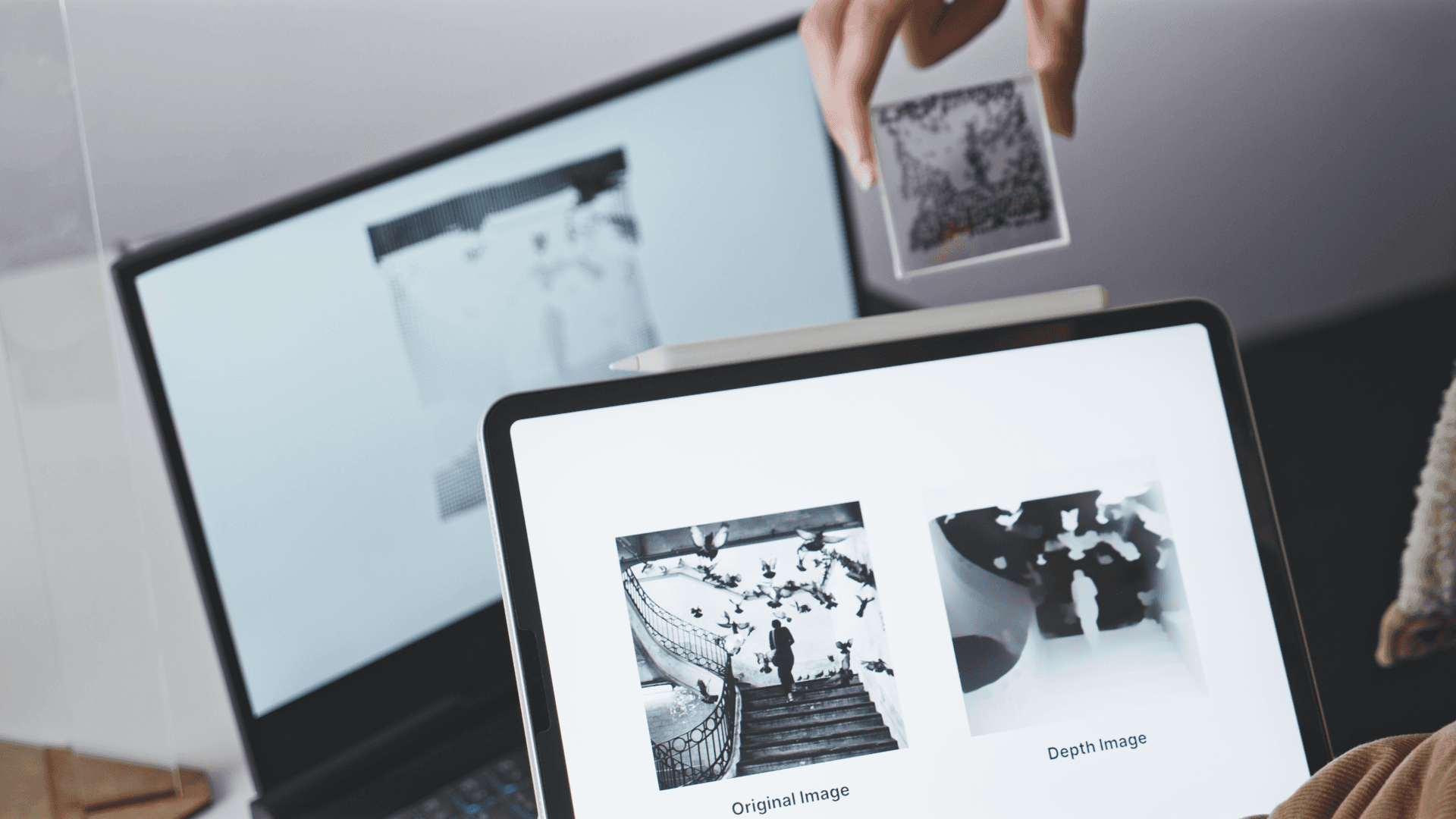

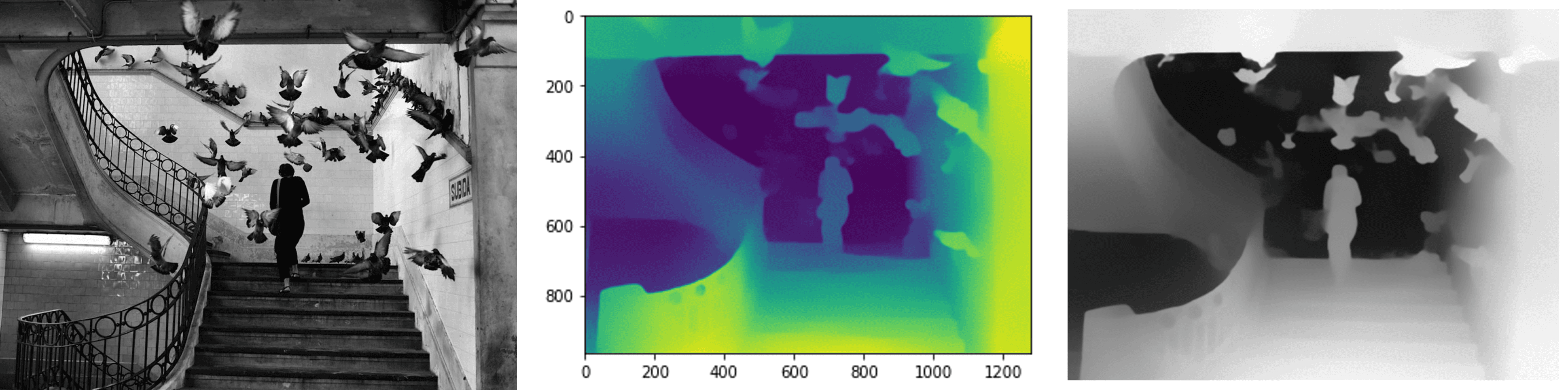

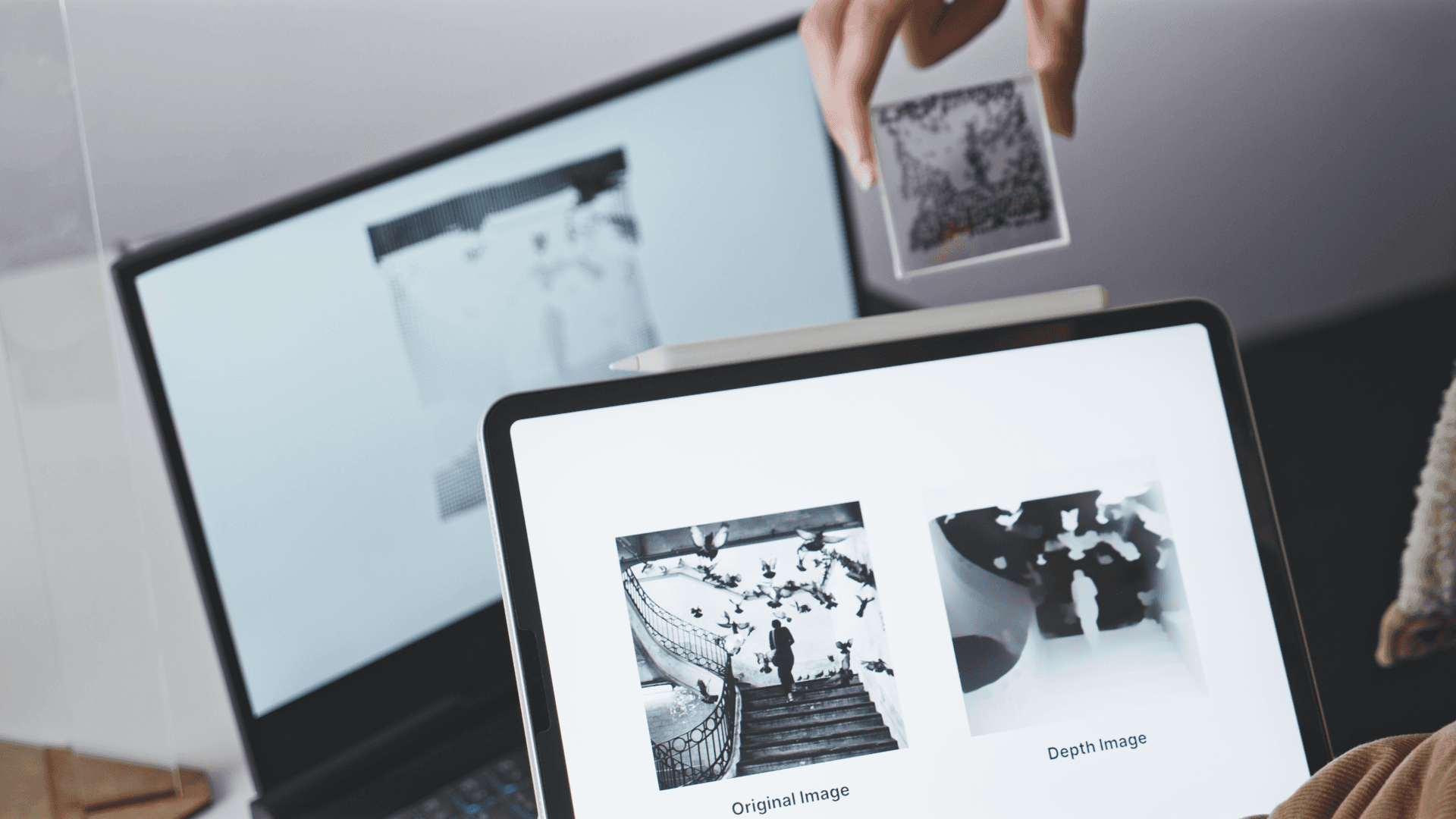

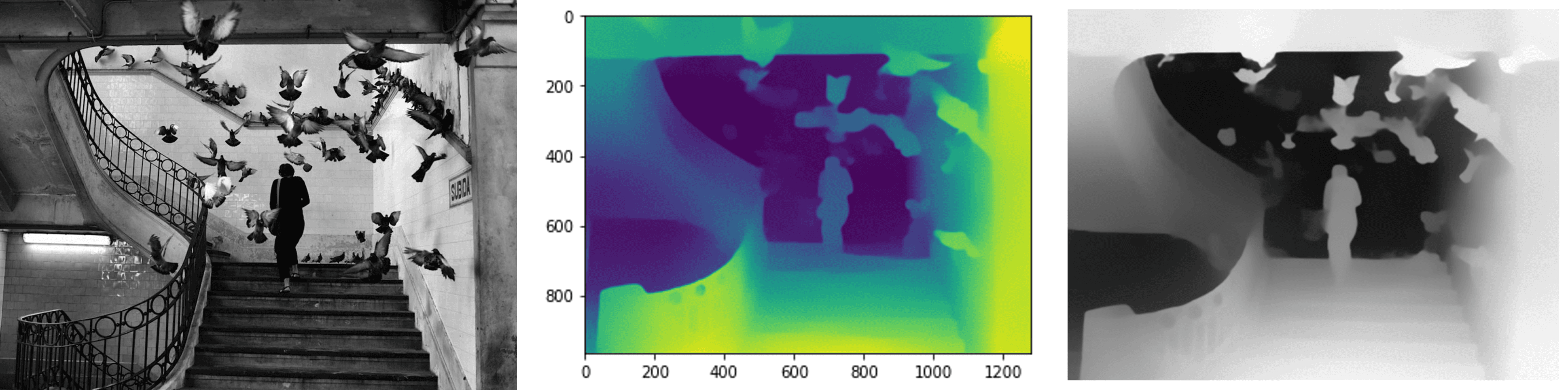

Image Processing

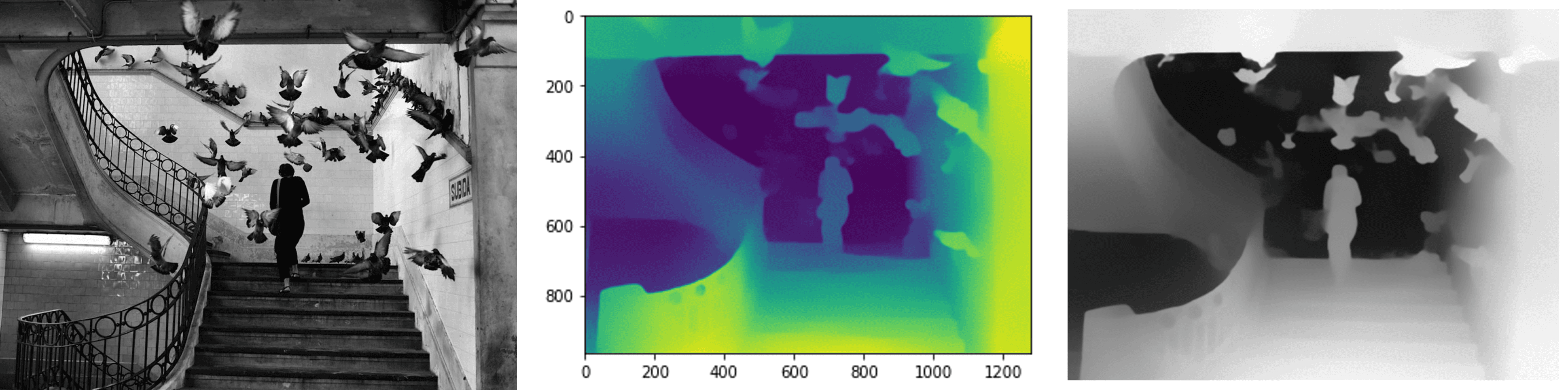

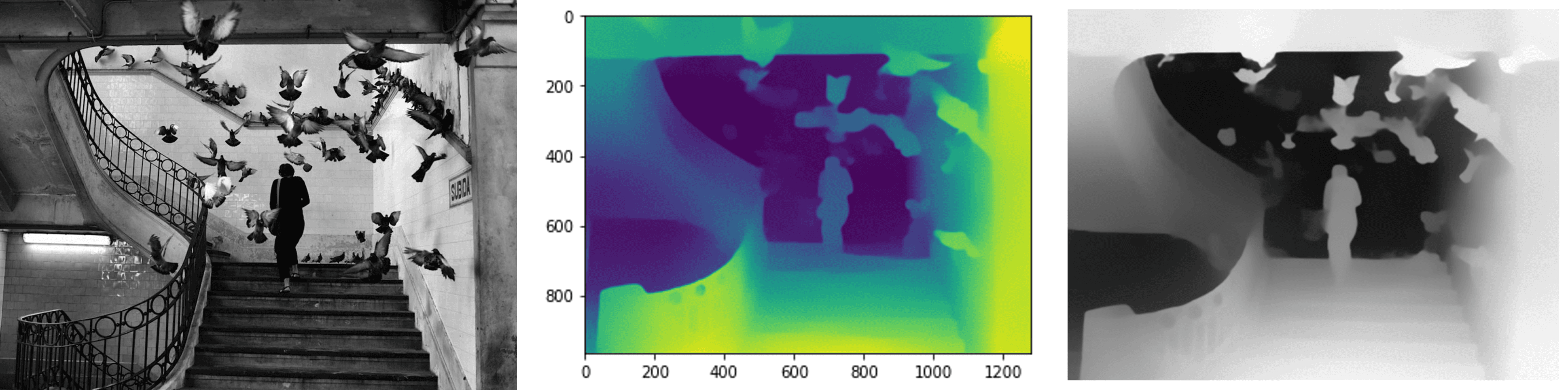

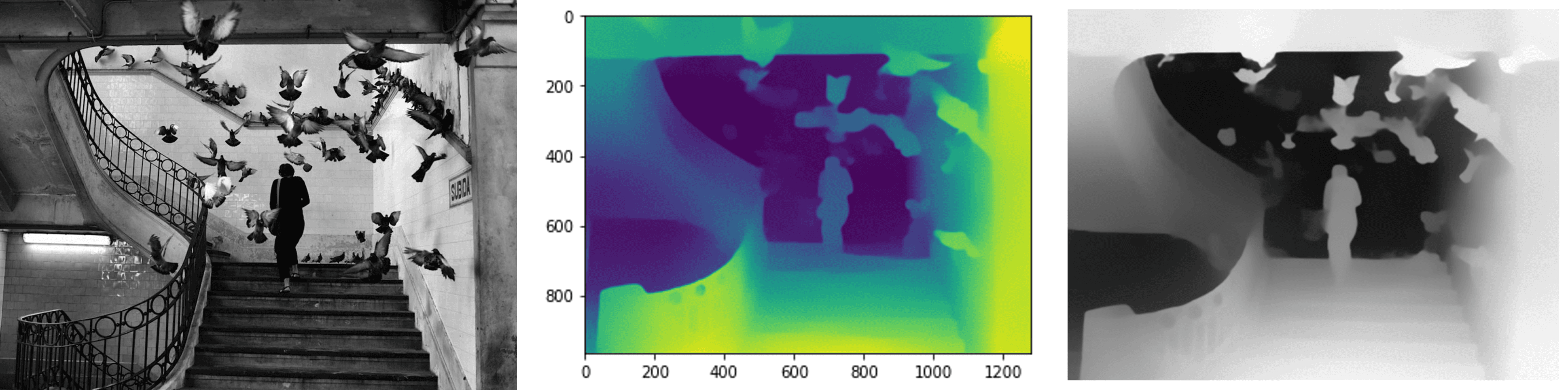

The first step is to process the 2D input image by analysing its depth information.
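Depth information can be mapped onto the physical acrylic stack by quantising a per-pixel depth map into as many bins as there are sheets. Each pixel's dots are then drawn on exactly one sheet. The sketch below makes three assumptions:

- A depth map has already been estimated, for example by a monocular depth-estimation model.
- Depth values are normalised to 0.0 (nearest) through 1.0 (farthest).
- Bins have equal width.

The names `slice_depth` and `num_layers` are hypothetical, and the project's actual pipeline may differ.

```python
from typing import Dict, List, Tuple

Pixel = Tuple[int, int]  # (x, y)


def slice_depth(depth: List[List[float]], num_layers: int) -> Dict[int, List[Pixel]]:
    """Assign every pixel to one of `num_layers` acrylic sheets.

    Assumes depth is normalised to [0.0, 1.0] with 0.0 nearest the viewer.
    Layer 0 is the front sheet, layer num_layers - 1 the back sheet.
    """
    if num_layers < 1:
        raise ValueError("num_layers must be at least 1")
    layers: Dict[int, List[Pixel]] = {i: [] for i in range(num_layers)}
    for y, row in enumerate(depth):
        for x, d in enumerate(row):
            d = min(max(d, 0.0), 1.0)                  # clamp out-of-range values
            # Equal-width bins; d == 1.0 would give num_layers, so cap it.
            layer = min(int(d * num_layers), num_layers - 1)
            layers[layer].append((x, y))
    return layers
```

Each layer's pixel list can then be passed to a dot-placement step, one sheet at a time. Equal-width bins are the simplest choice. Binning by depth percentiles would spread the dots more evenly across sheets when most of a scene sits at a similar depth.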

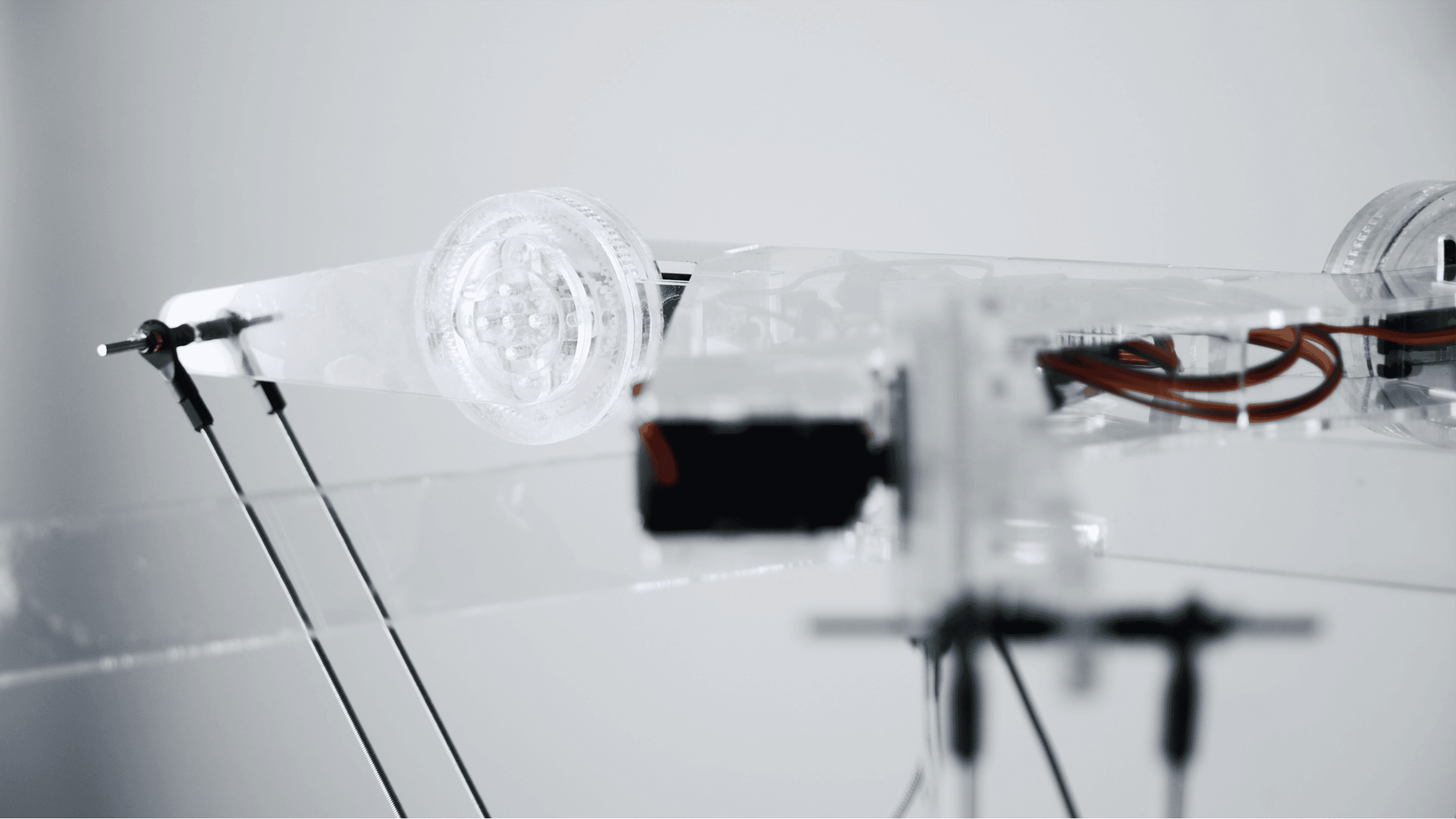

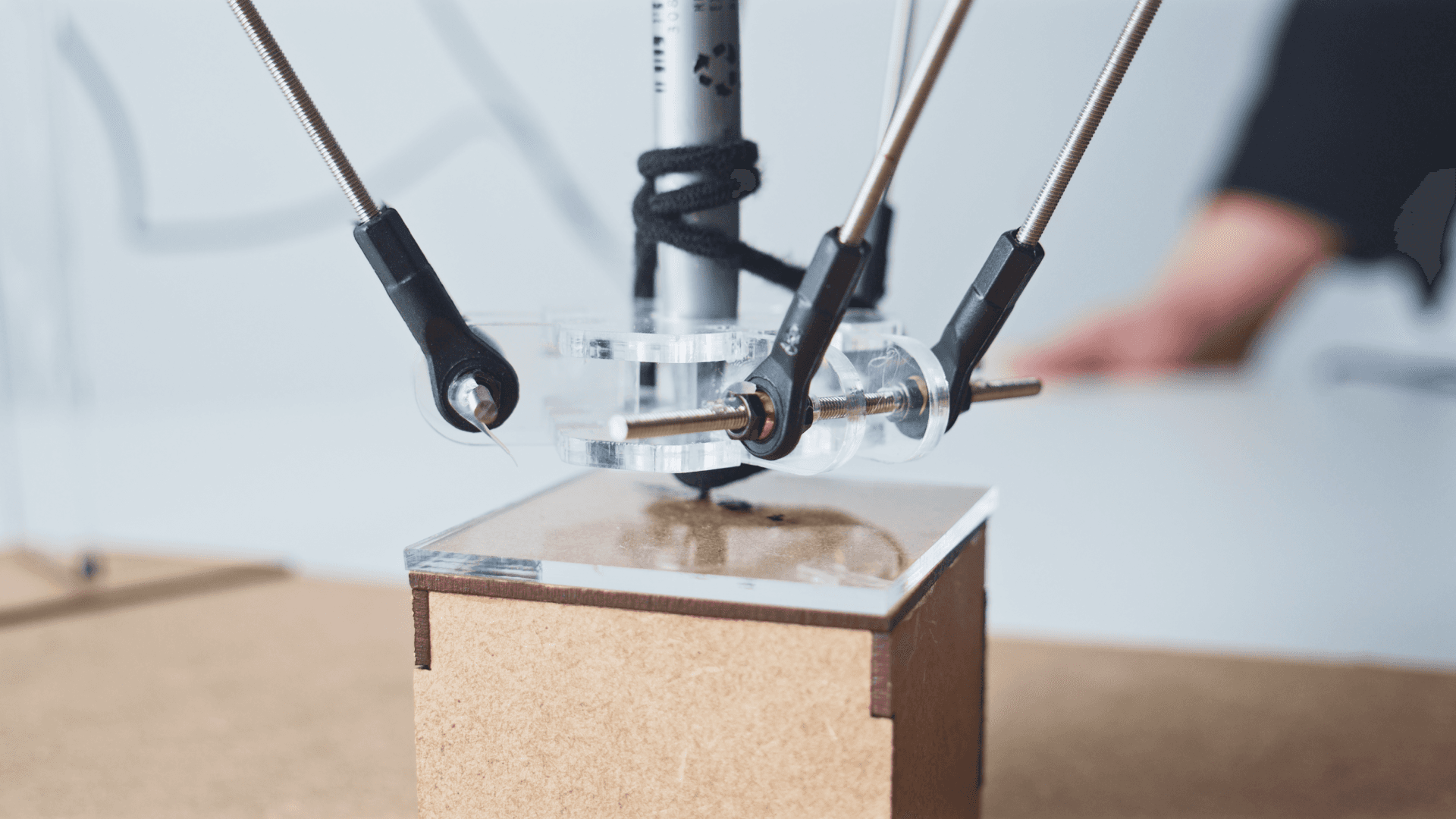

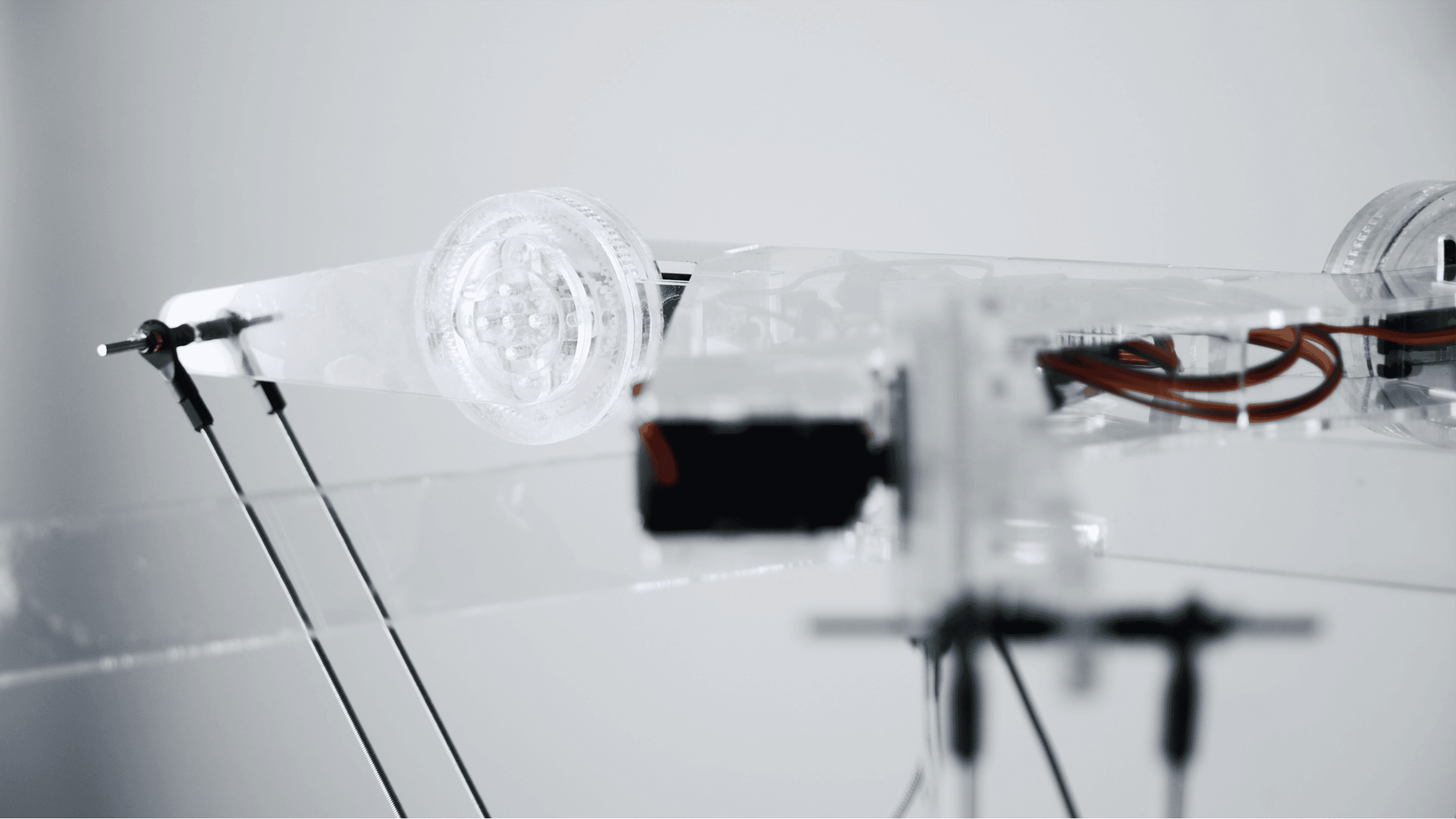

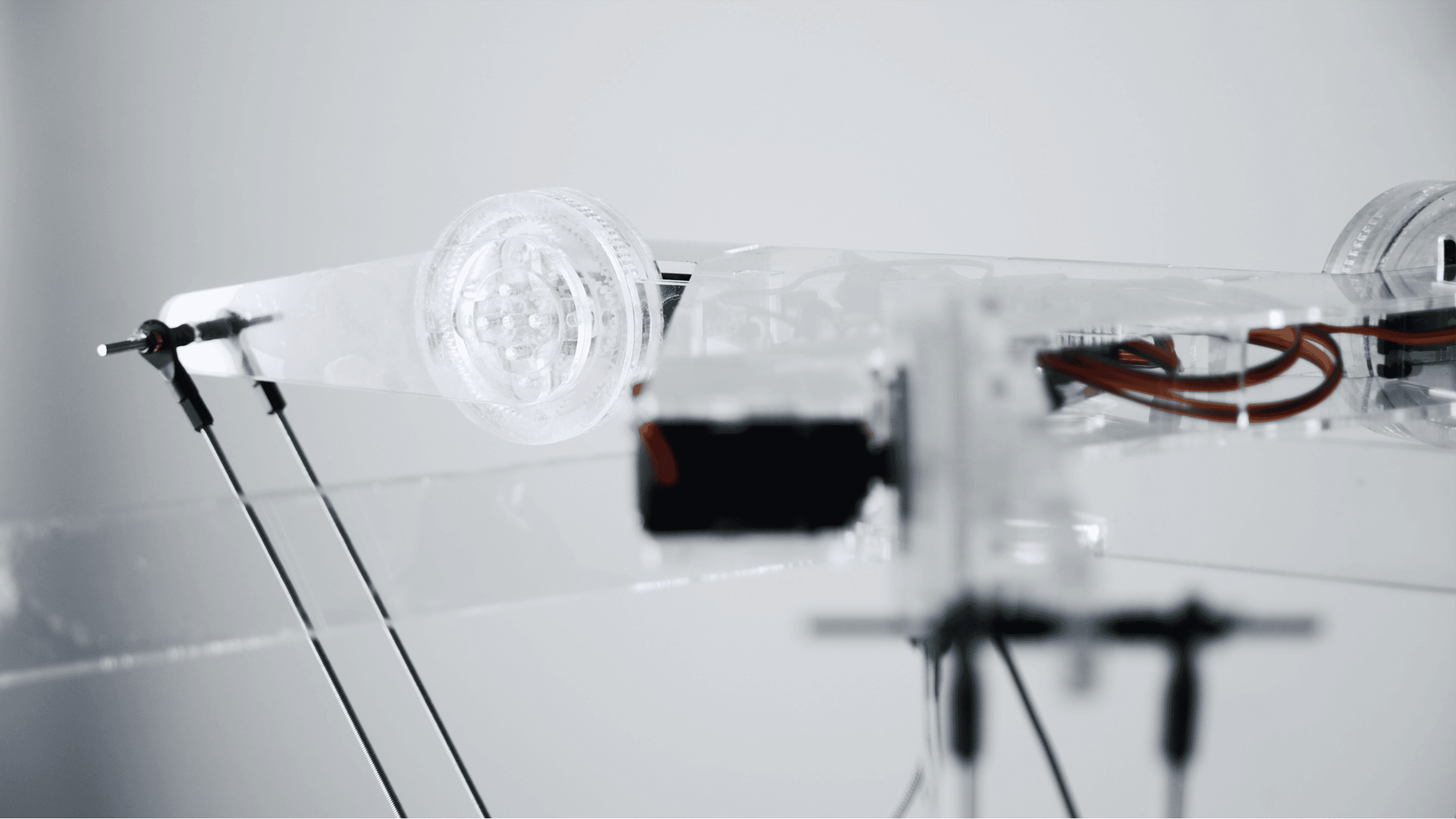

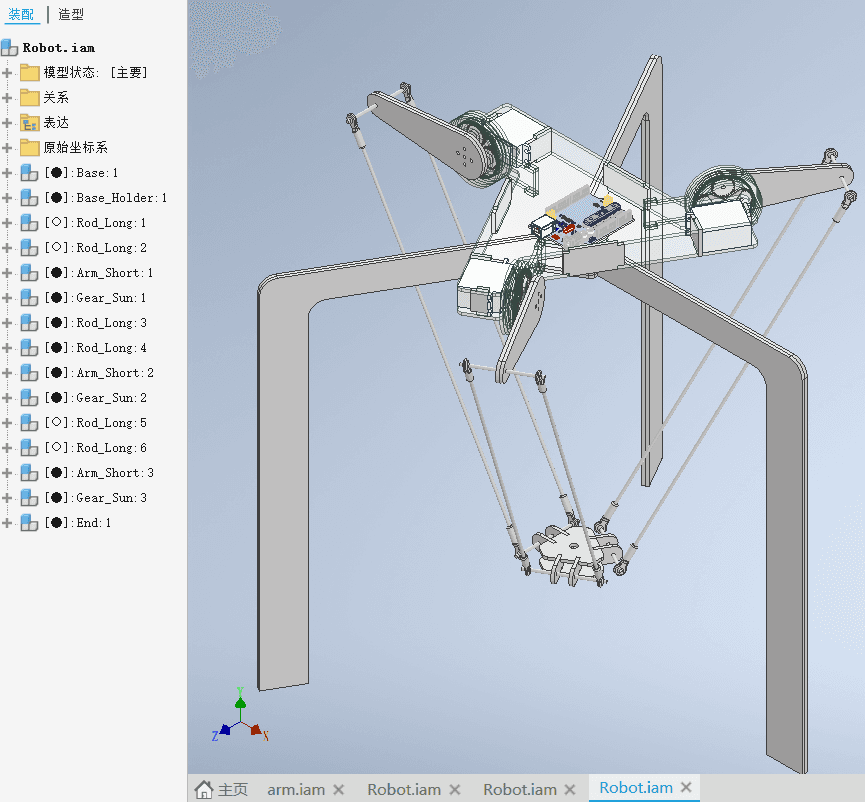

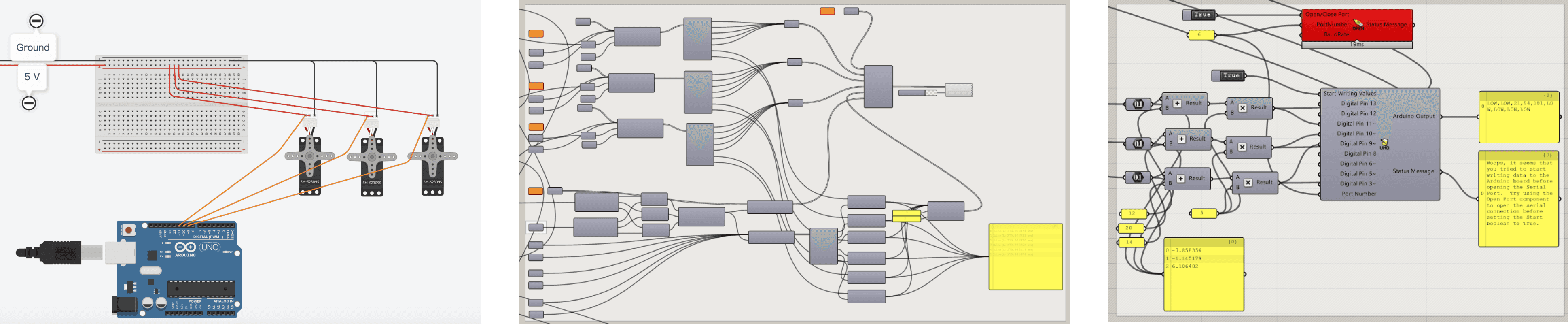

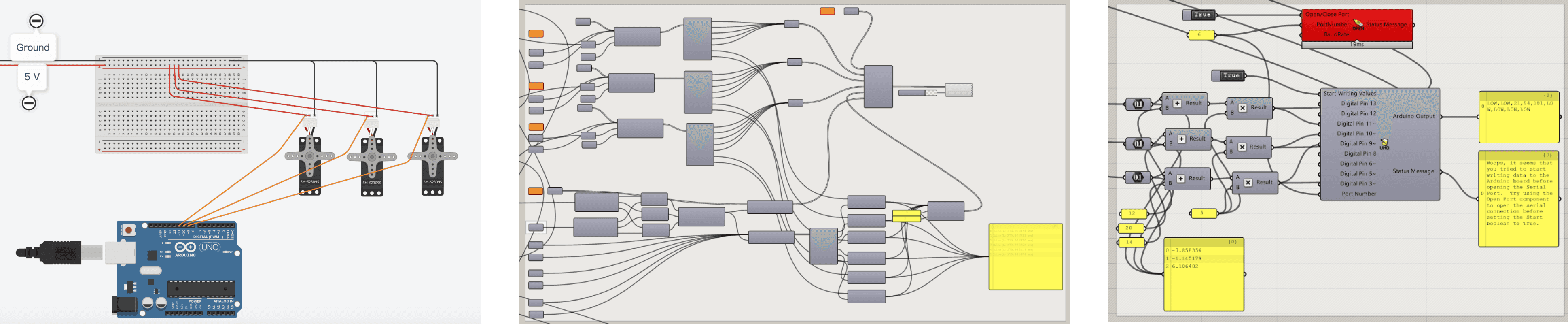

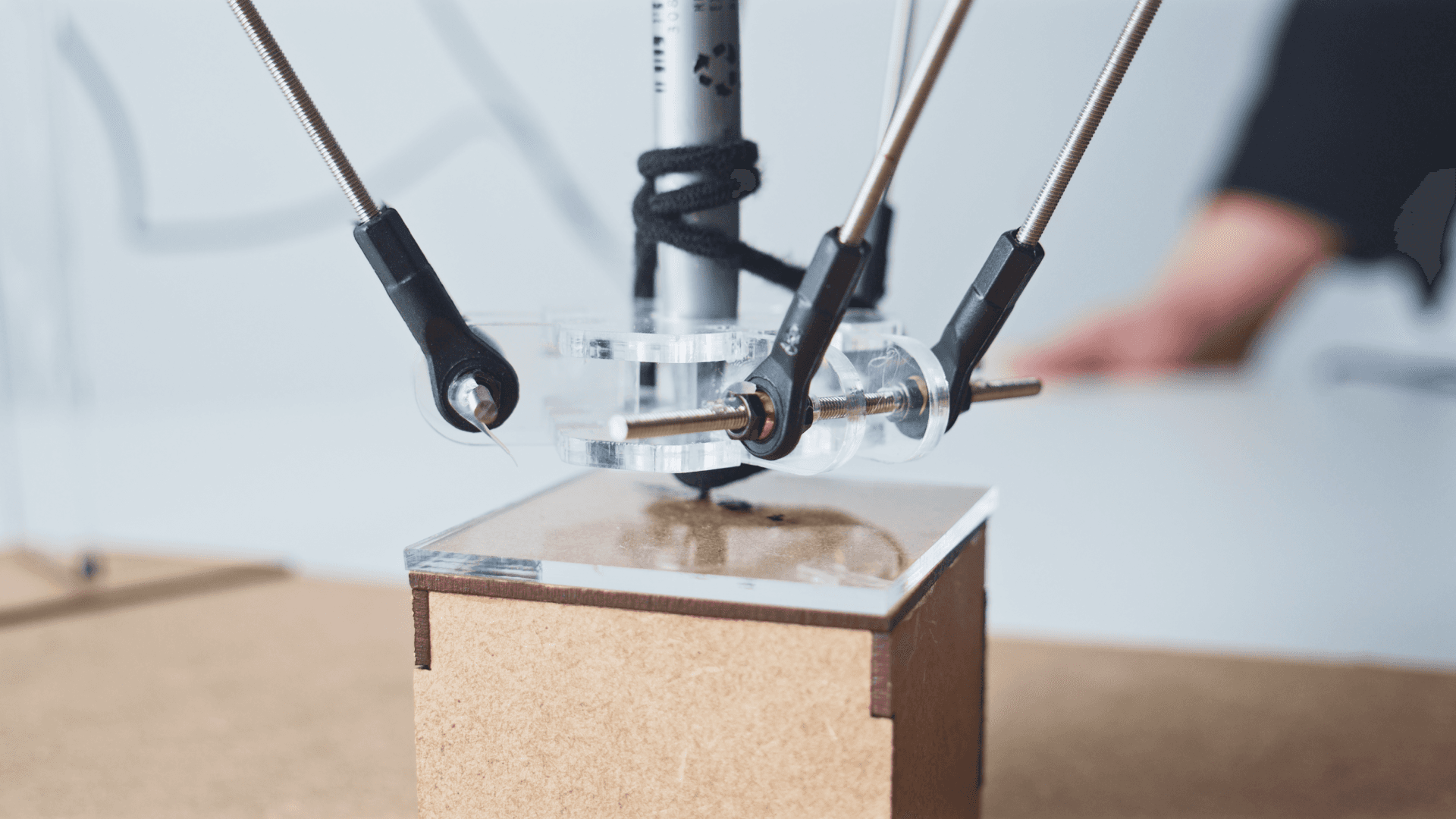

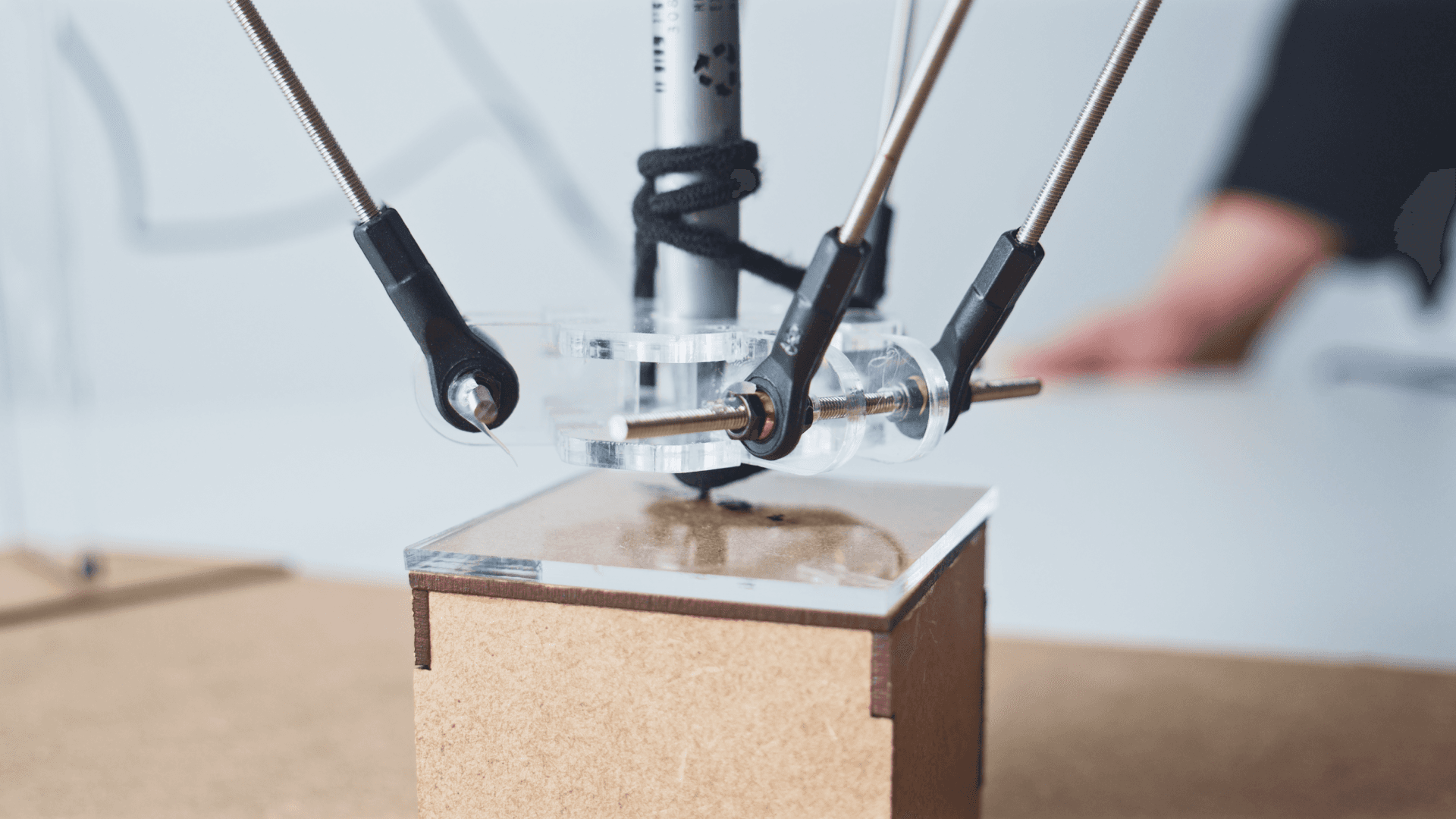

Mechanical Design

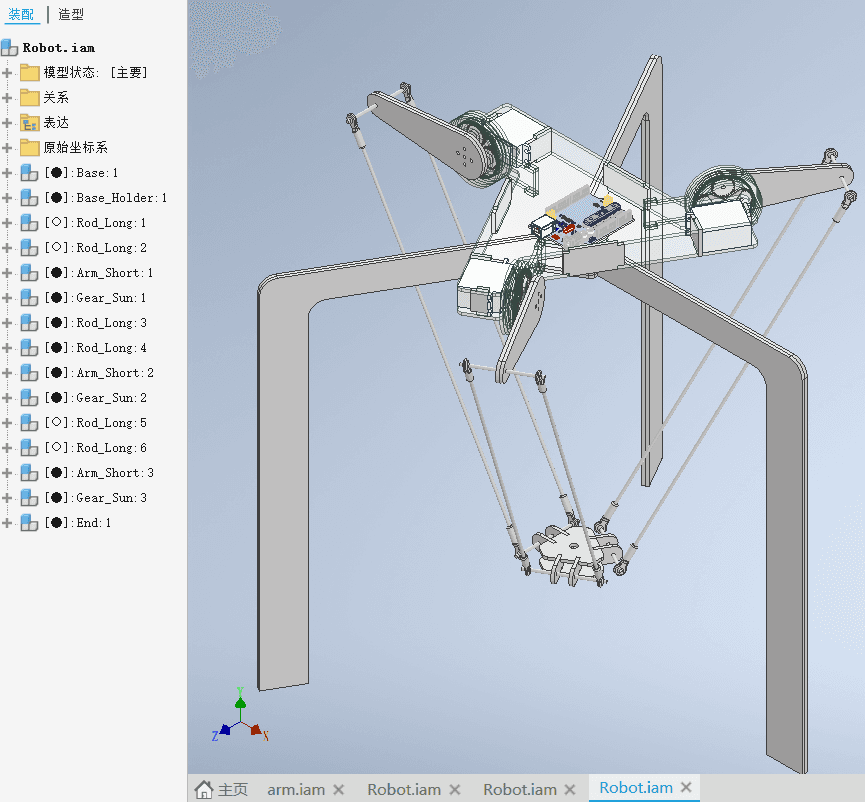

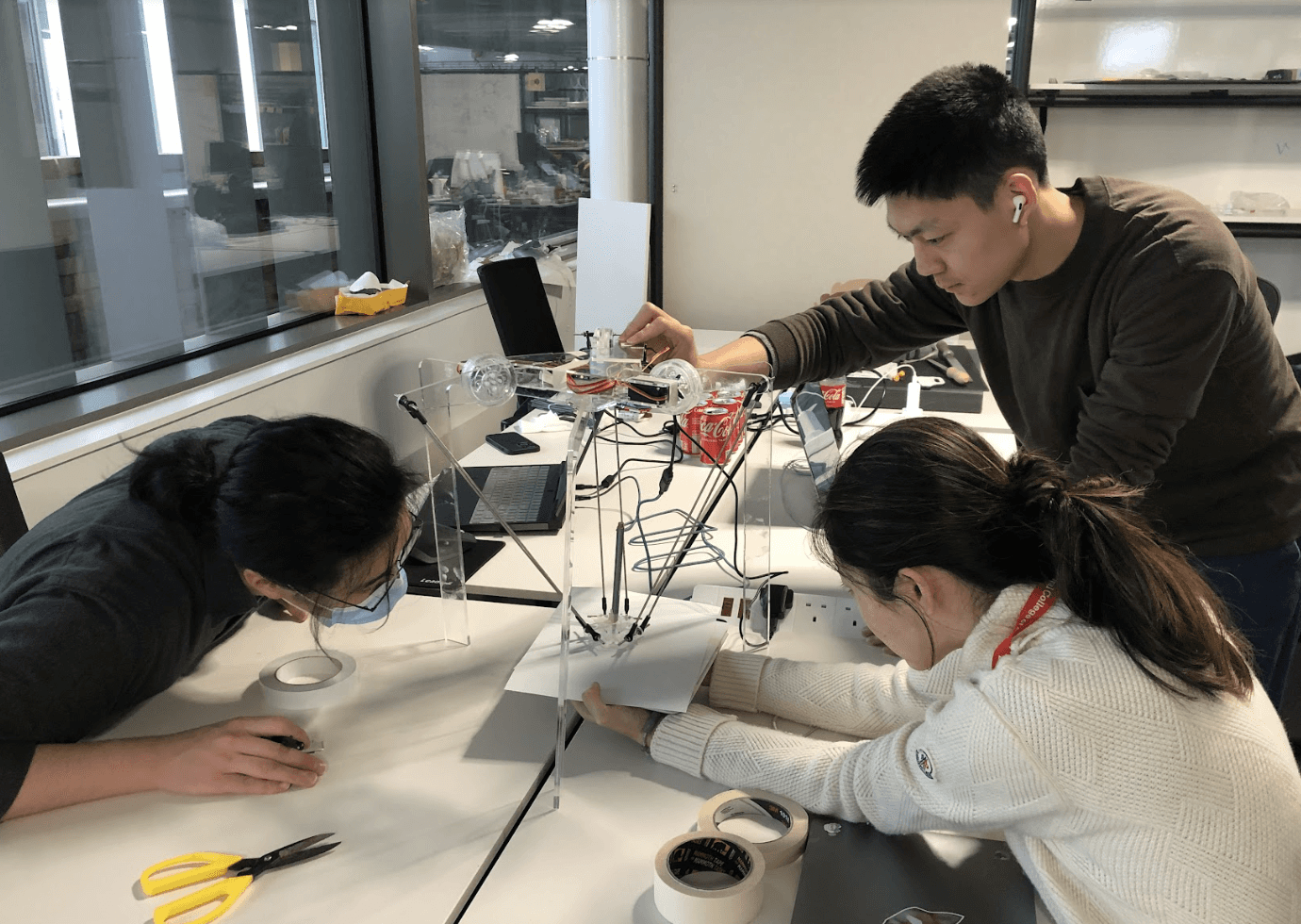

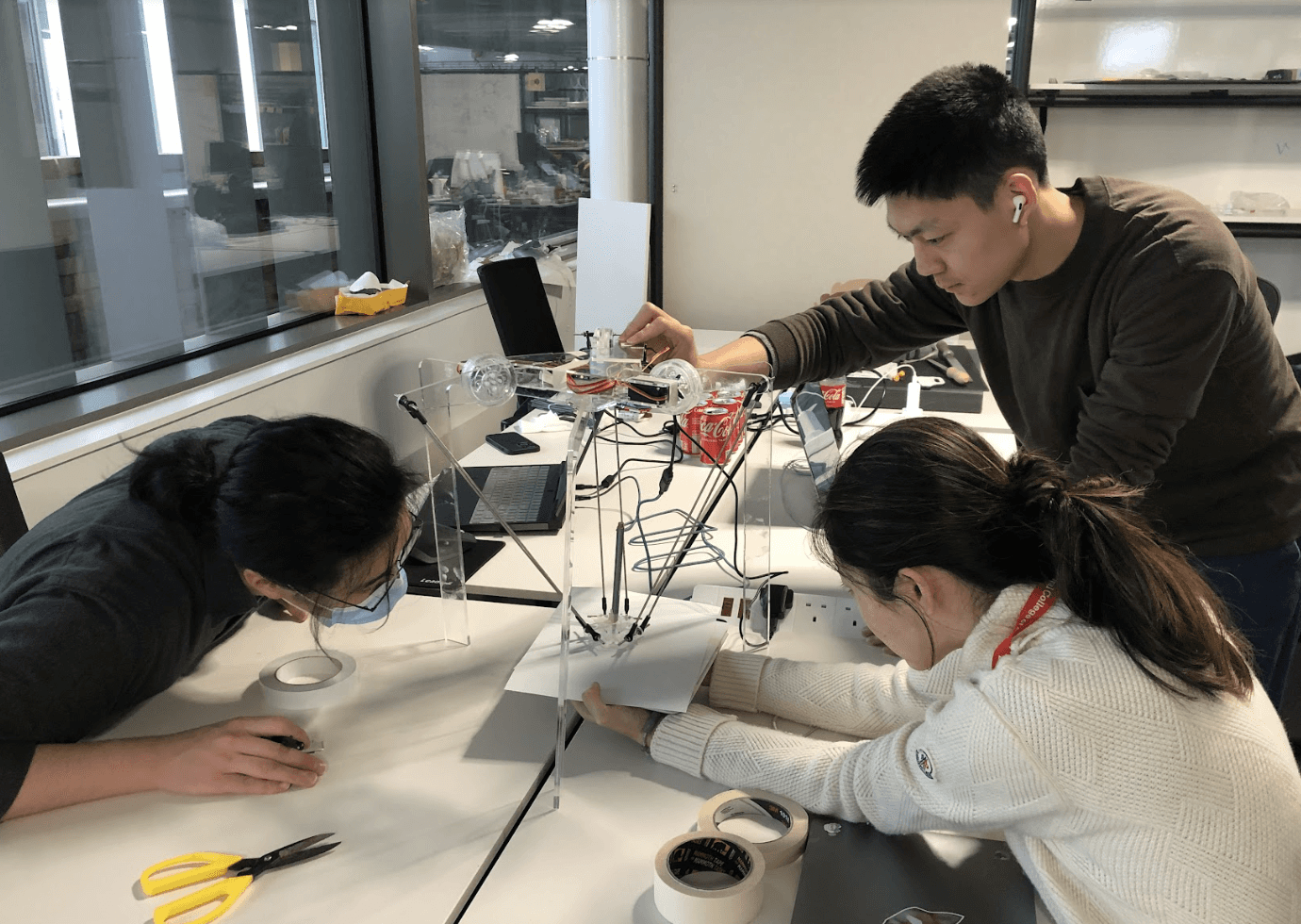

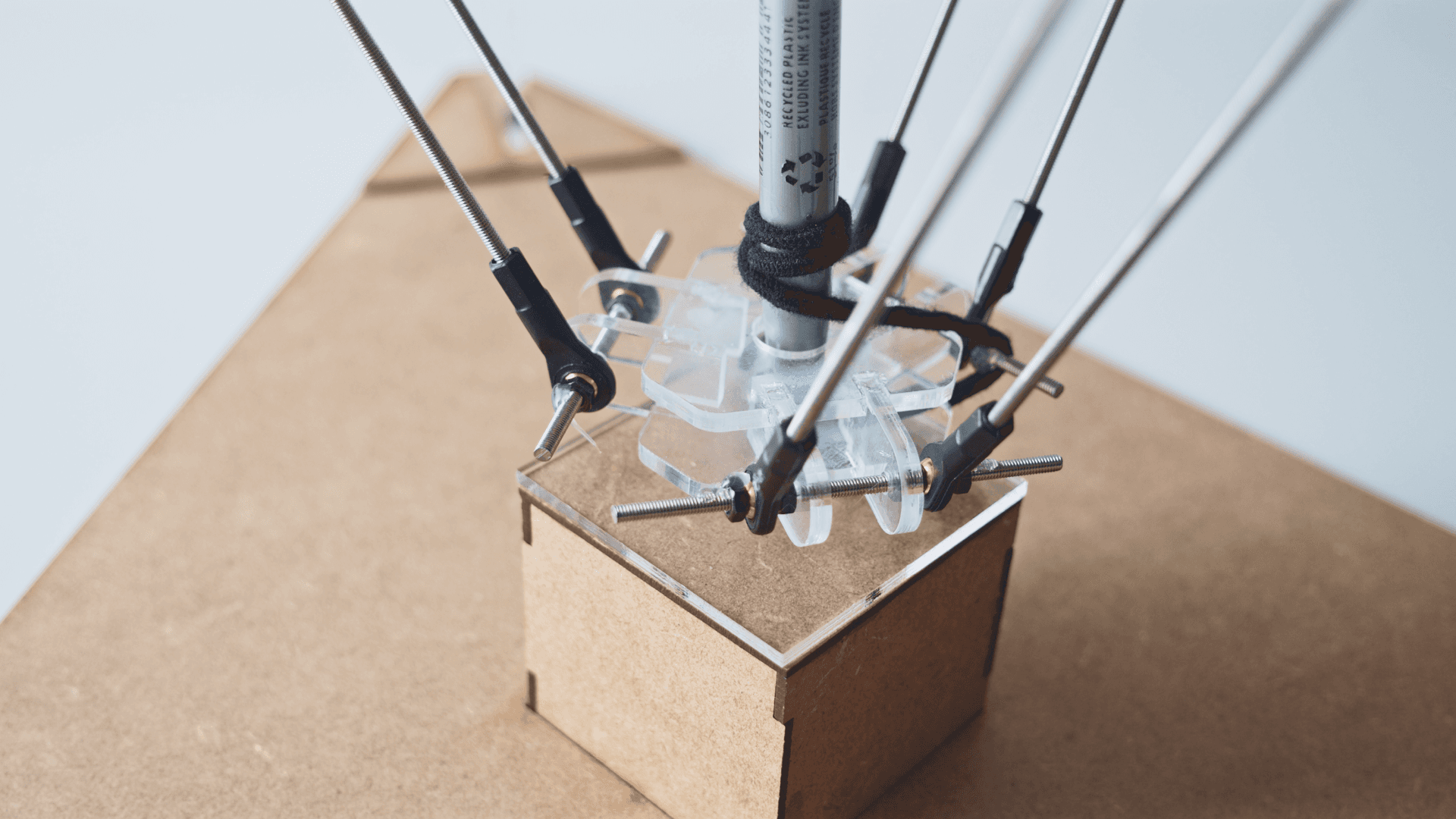

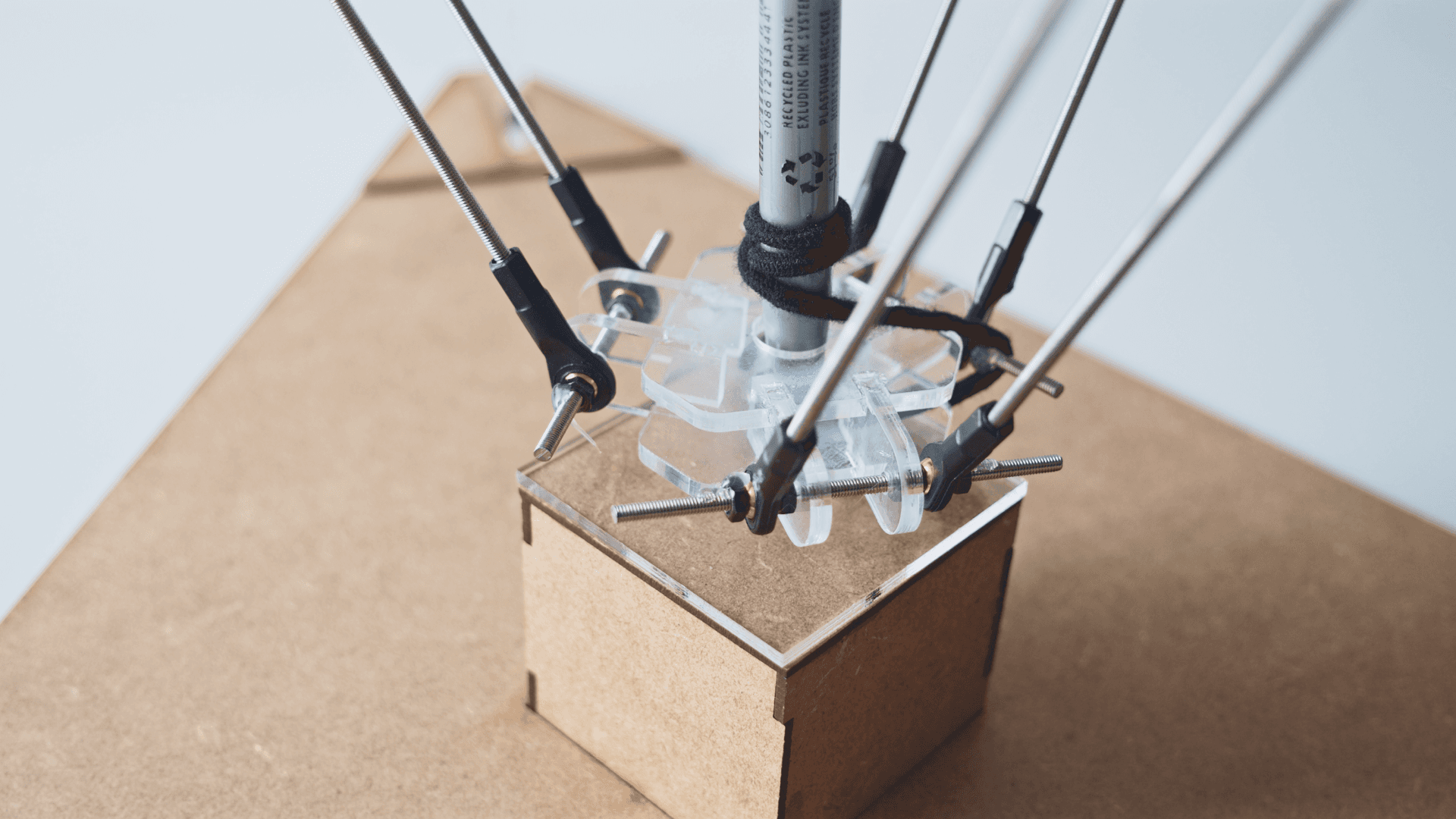

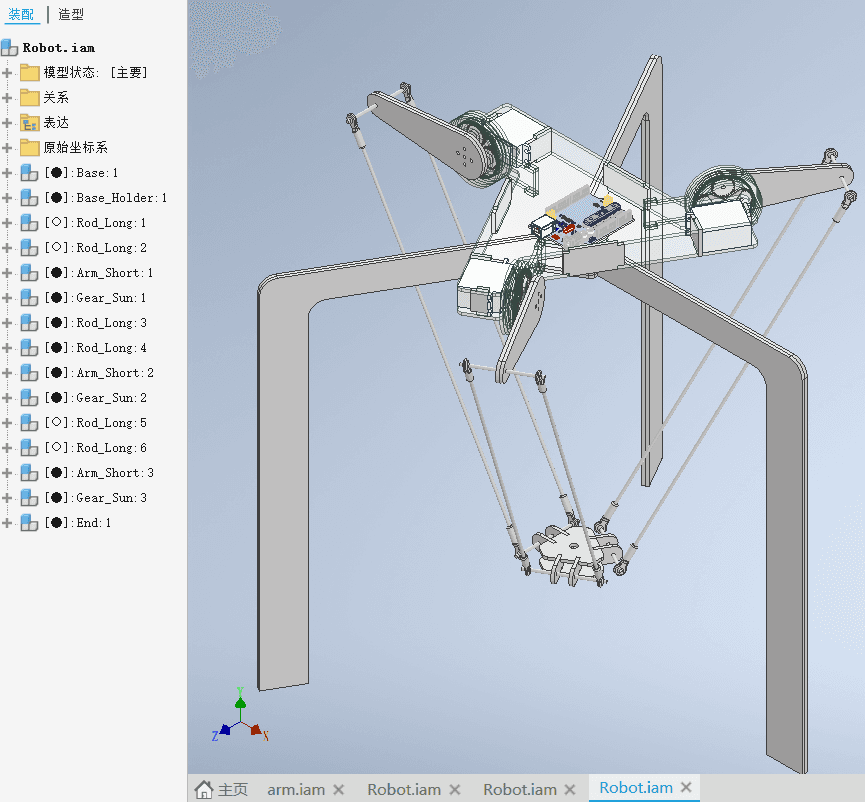

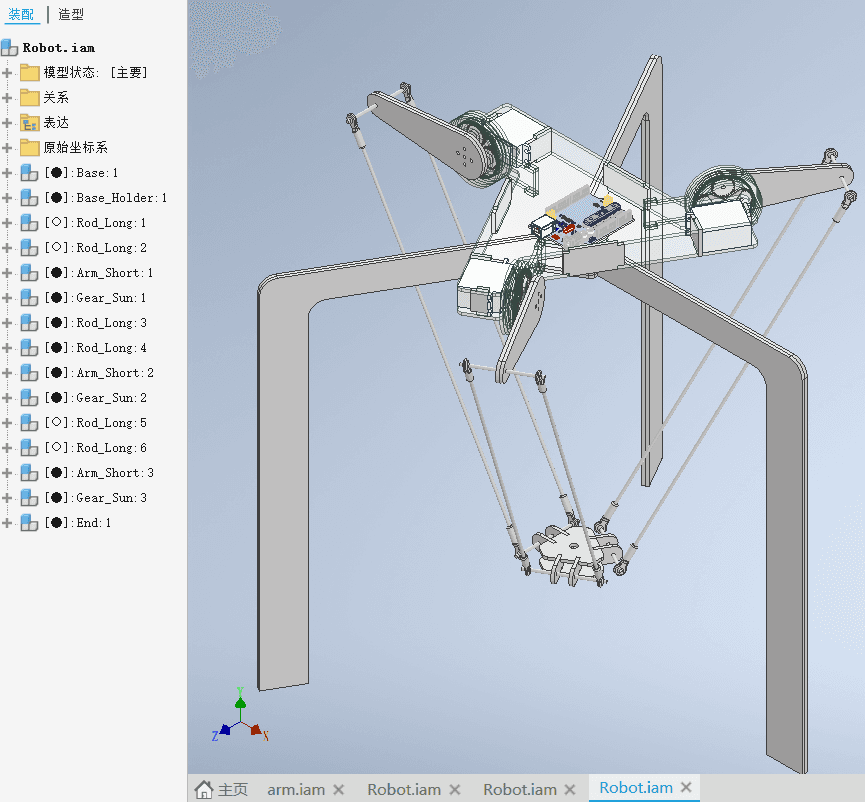

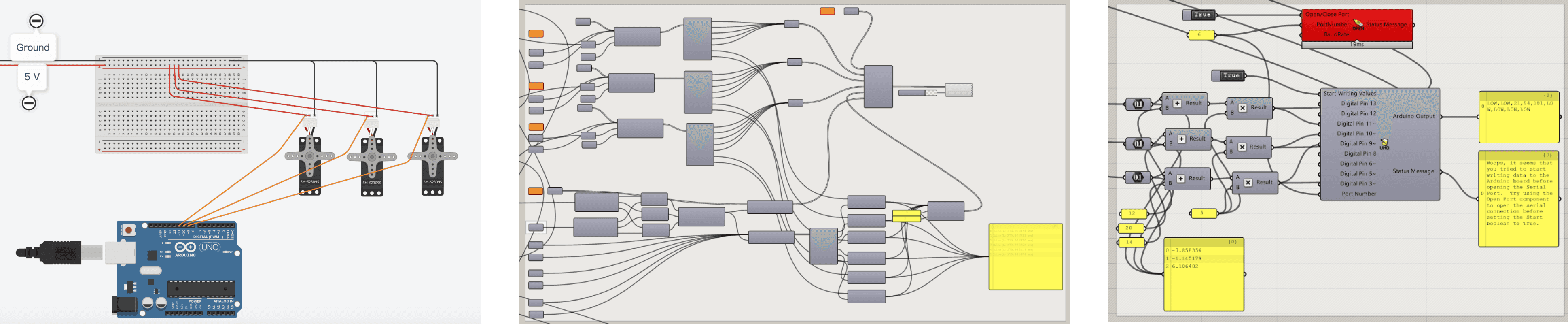

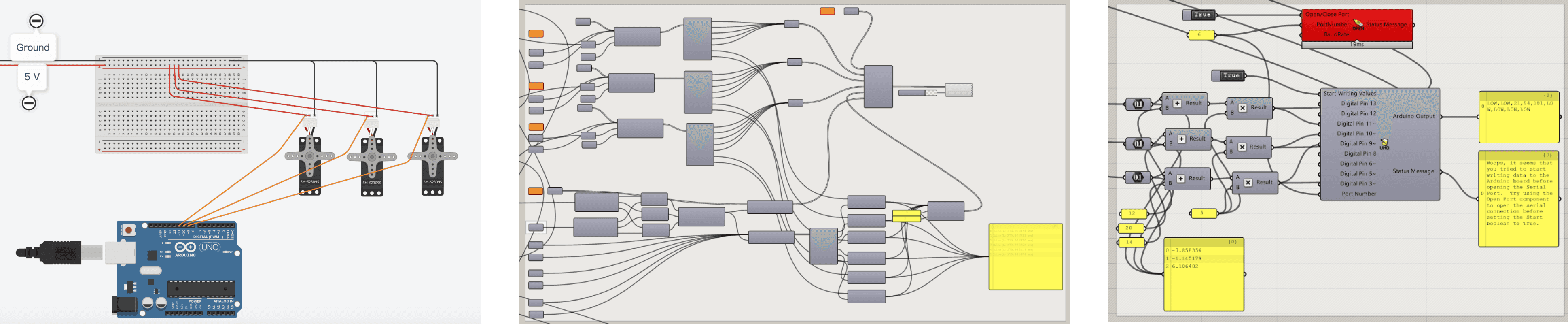

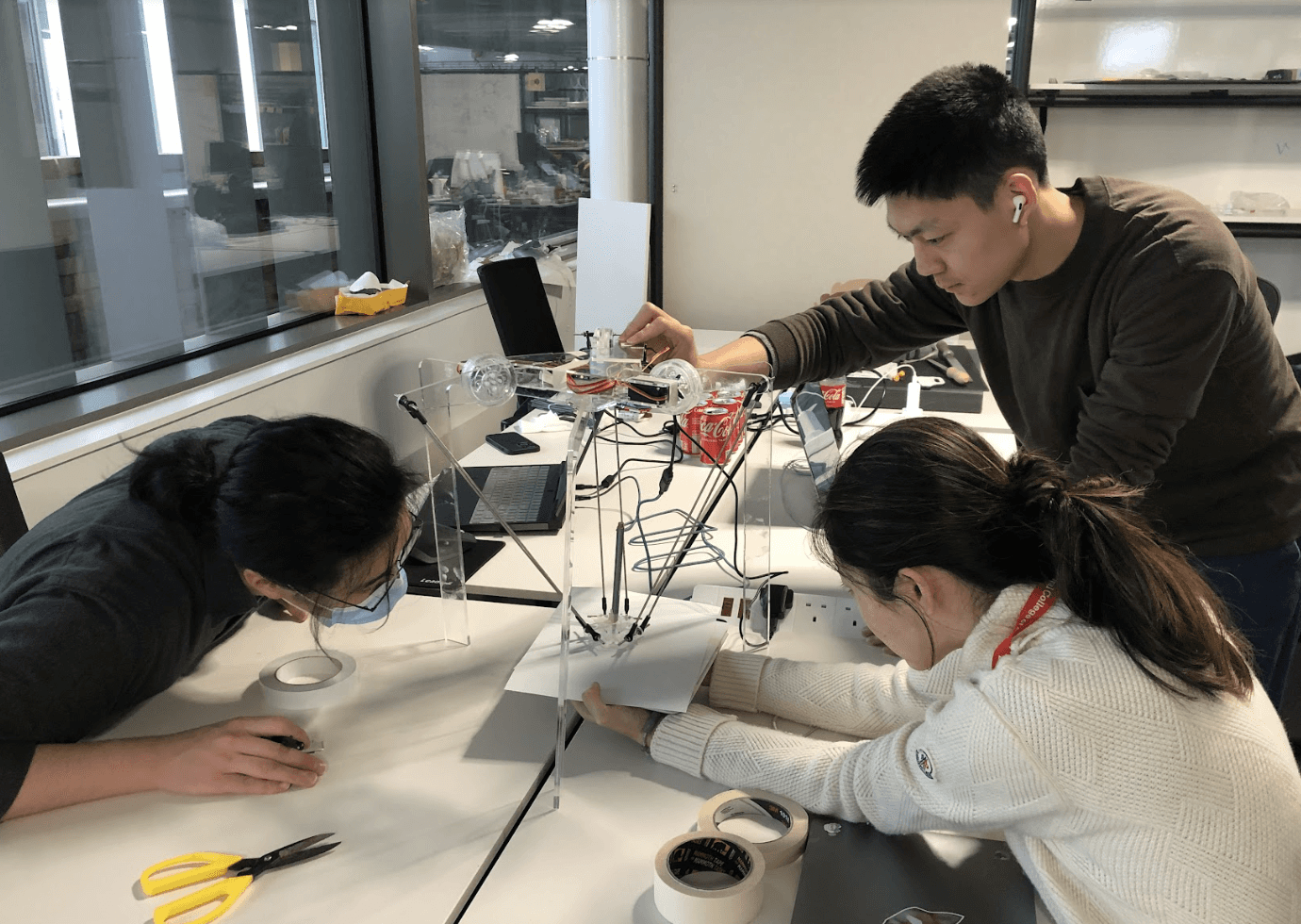

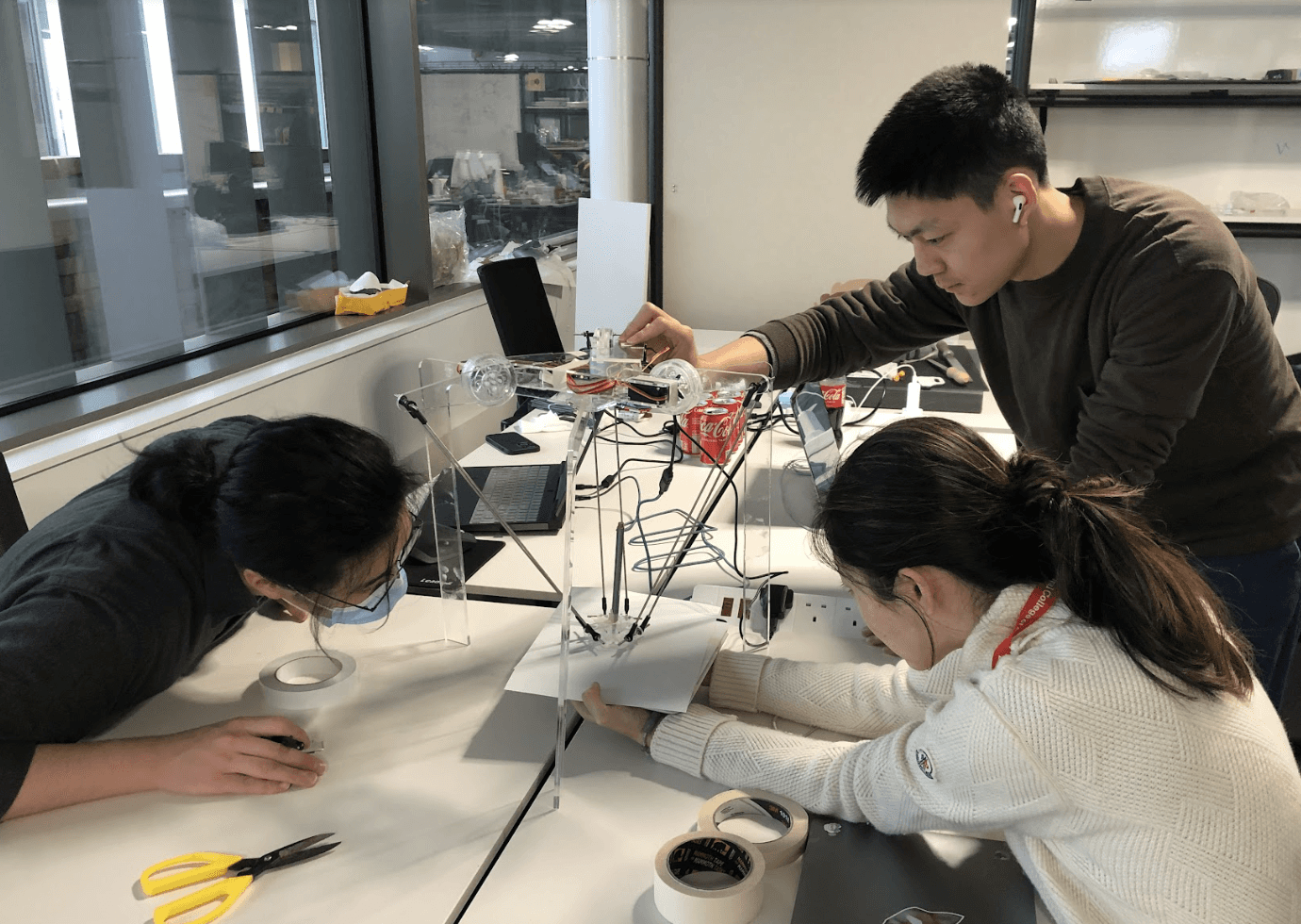

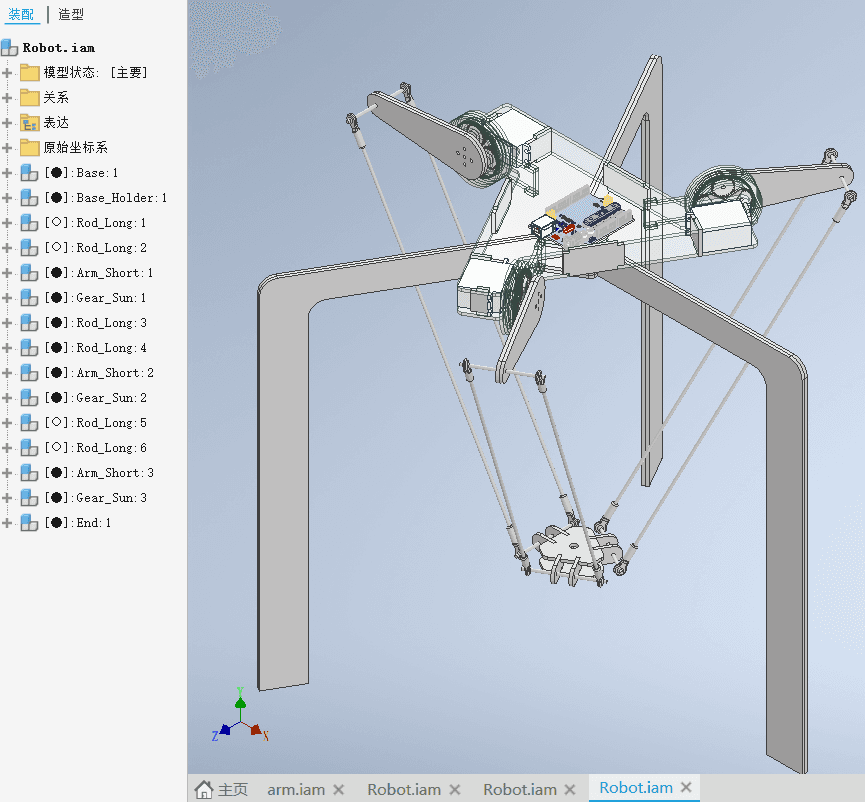

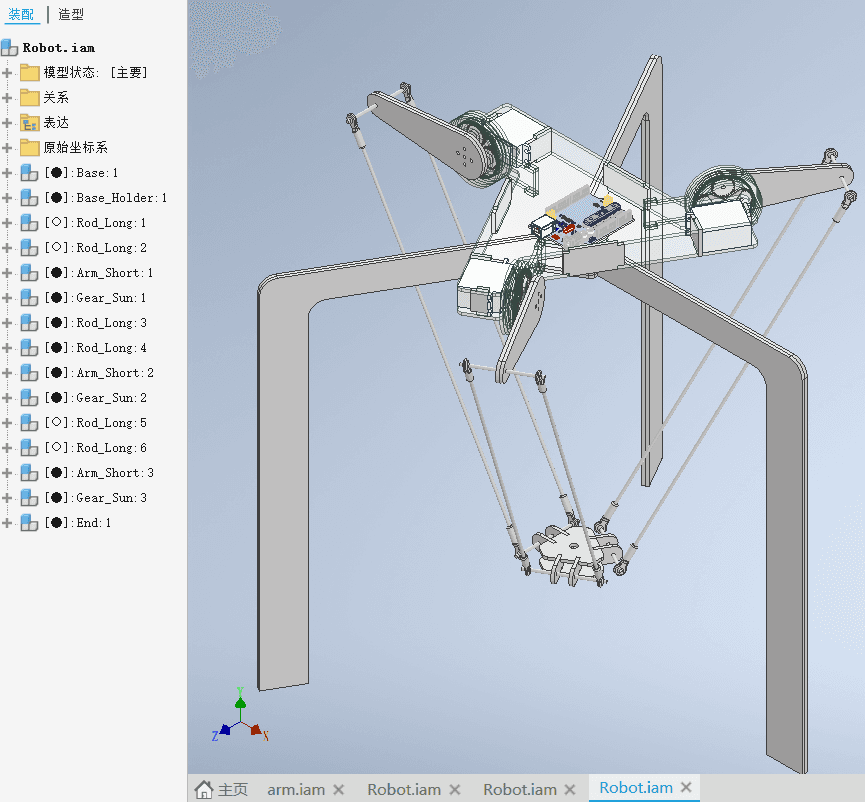

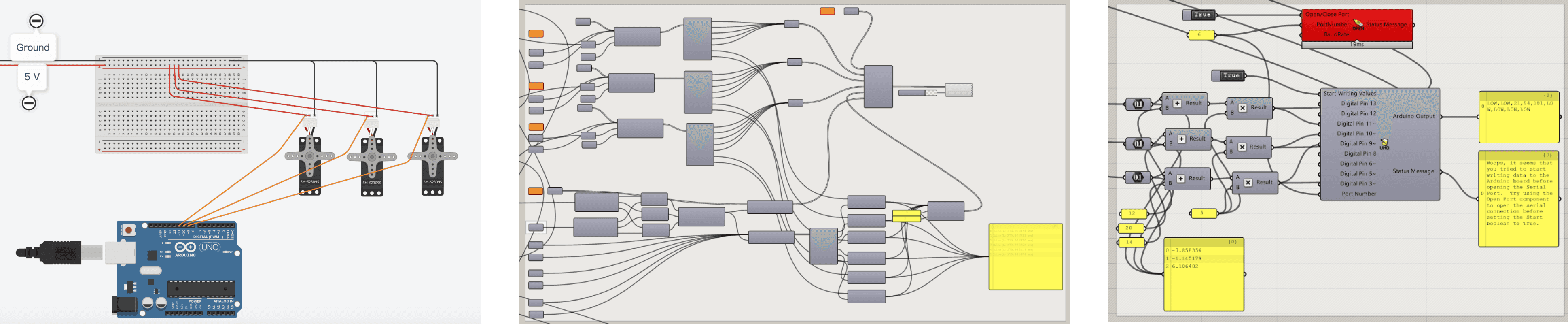

The 3D model and mechanical structure were developed by one of the team members, who also built the connection between Grasshopper and Arduino.
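Before anything is sent to the Arduino, a delta robot has to convert each dot's (x, y, z) target into three motor angles. Below is a sketch of the standard closed-form inverse kinematics for a rotary delta robot, based on the usual circle-intersection derivation. Several things are assumptions and should be treated as illustrative only:

- **Dimensions:** `E`, `F`, `RE` and `RF` are placeholder values, not measurements of the DeePixel machine.
- **Sign conventions:** z is negative below the base, and the arms sit at 0°, +120° and -120°.
- **Angle calculation:** `atan2` is used in place of a piecewise arctangent.

```python
import math
from typing import Tuple

# Placeholder geometry in millimetres (hypothetical, not DeePixel's real dimensions).
E = 115.0    # side length of the end-effector triangle
F = 457.3    # side length of the base triangle
RE = 232.0   # length of the lower (parallelogram) arm
RF = 112.0   # length of the upper (motor-driven) arm

TAN30 = 1.0 / math.sqrt(3.0)
COS120, SIN120 = -0.5, math.sqrt(3.0) / 2.0


def angle_yz(x0: float, y0: float, z0: float) -> float:
    """Motor angle in degrees for the arm lying in the YZ plane.

    Intersects the circle swept by the upper arm (centre at the base joint,
    radius RF) with the sphere of radius RE around the effector joint,
    both taken in the YZ plane. z0 must be non-zero (effector below the base).
    """
    y1 = -0.5 * TAN30 * F          # y of the base joint
    y0 -= 0.5 * TAN30 * E          # shift effector centre to its joint
    # The elbow lies on the line z = a + b*y (difference of the two circles).
    a = (x0 * x0 + y0 * y0 + z0 * z0 + RF * RF - RE * RE - y1 * y1) / (2.0 * z0)
    b = (y1 - y0) / z0
    d = -(a + b * y1) ** 2 + RF * RF * (b * b + 1.0)   # discriminant
    if d < 0:
        raise ValueError("target point is outside the workspace")
    yj = (y1 - a * b - math.sqrt(d)) / (b * b + 1.0)   # choose the outer elbow
    zj = a + b * yj
    return math.degrees(math.atan2(-zj, y1 - yj))


def inverse_kinematics(x: float, y: float, z: float) -> Tuple[float, float, float]:
    """Three motor angles (degrees) for the effector position (x, y, z)."""
    t1 = angle_yz(x, y, z)
    t2 = angle_yz(x * COS120 + y * SIN120, y * COS120 - x * SIN120, z)  # frame rotated +120°
    t3 = angle_yz(x * COS120 - y * SIN120, y * COS120 + x * SIN120, z)  # frame rotated -120°
    return t1, t2, t3
```

The resulting angles could then be streamed to the Arduino, one dot at a time. The exact serial format used in the project is not described here.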

Interaction Design

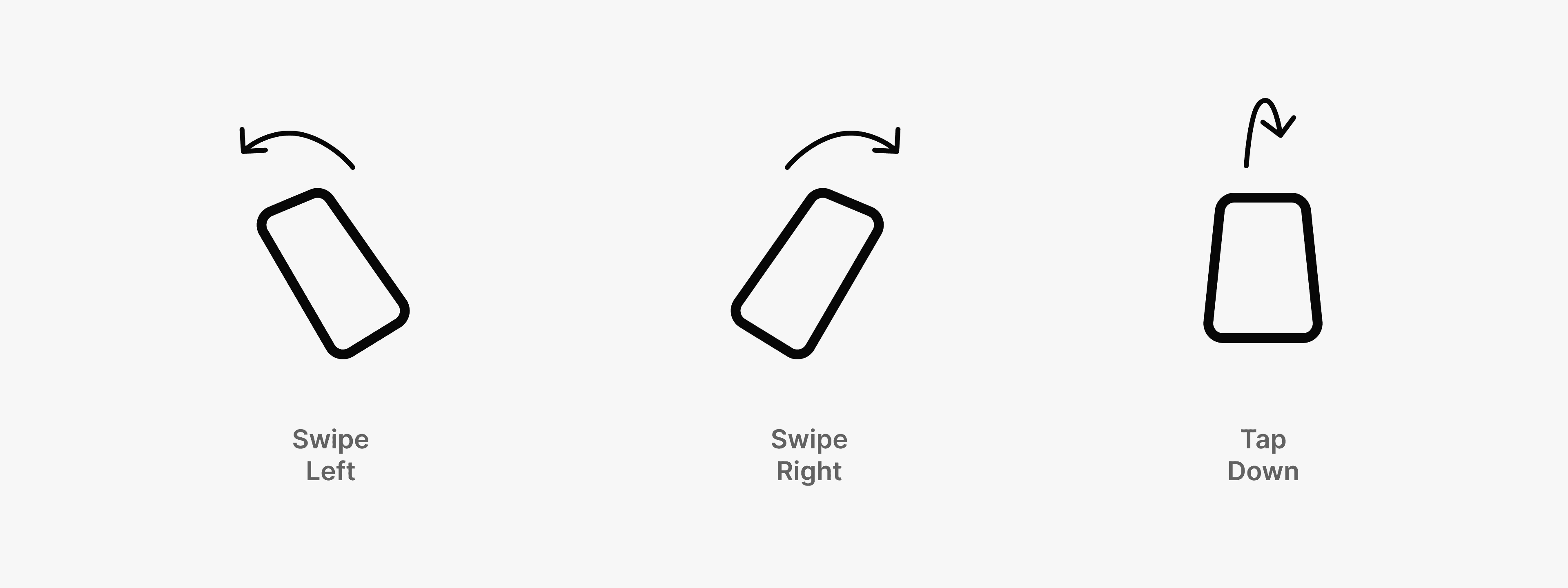

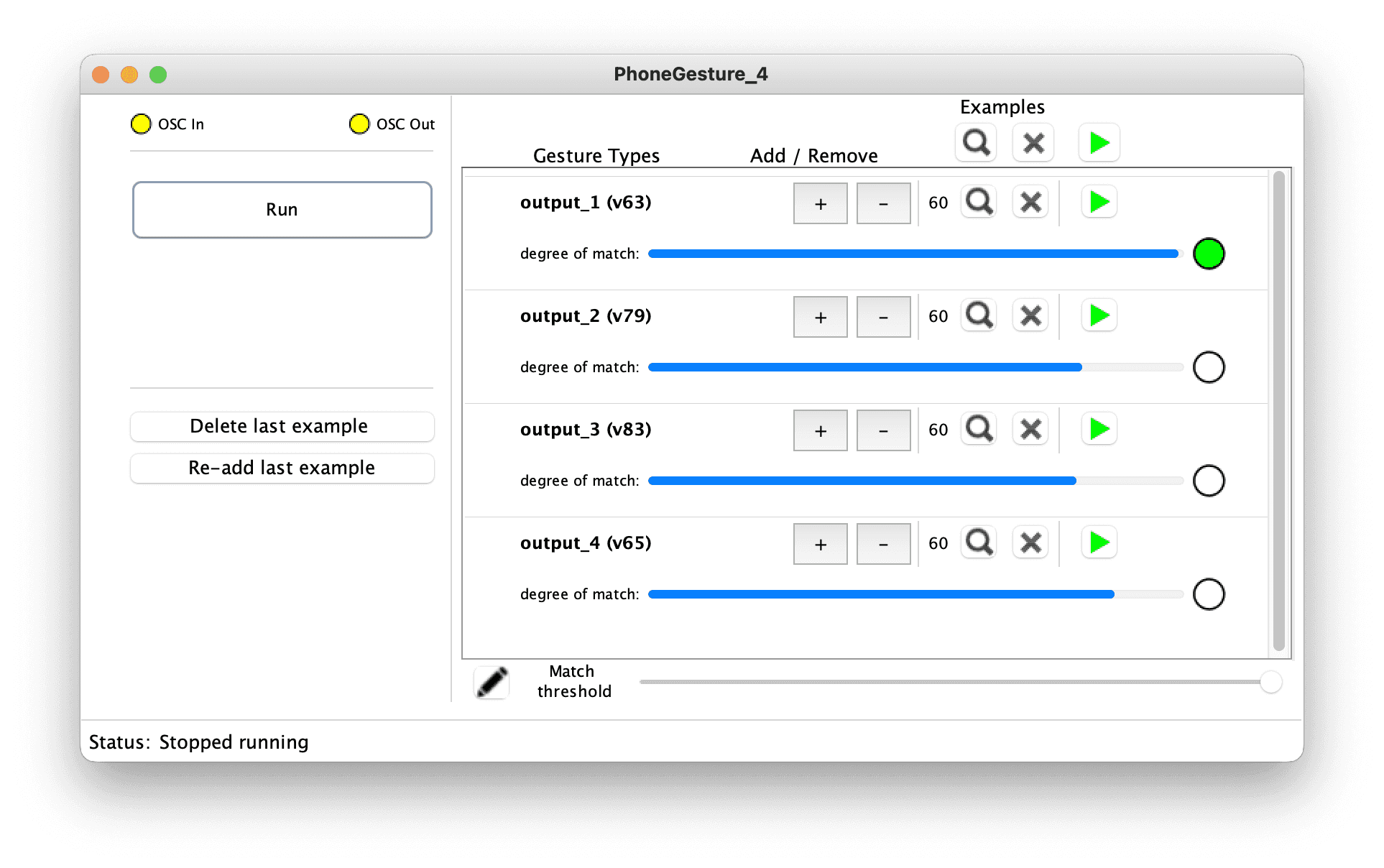

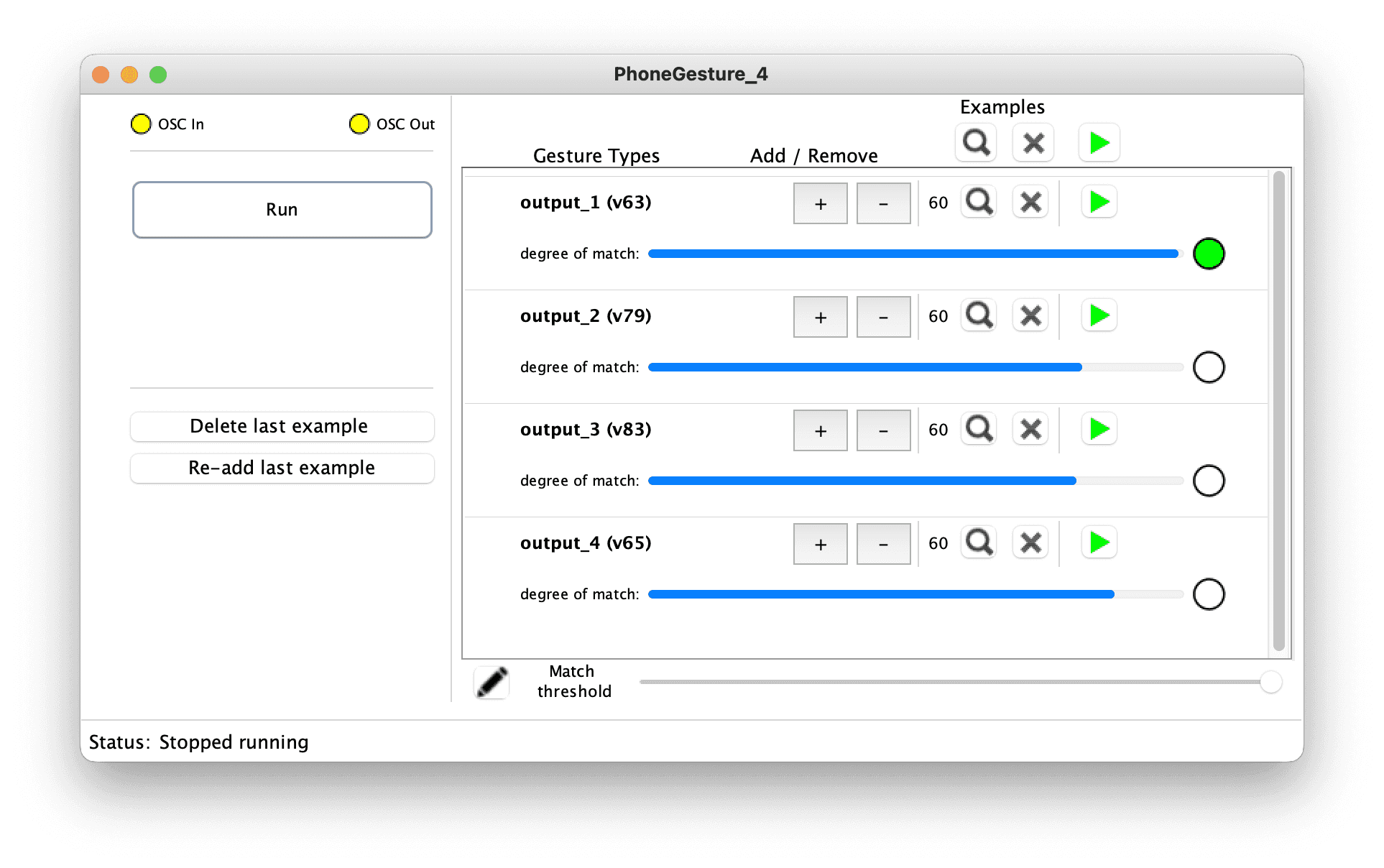

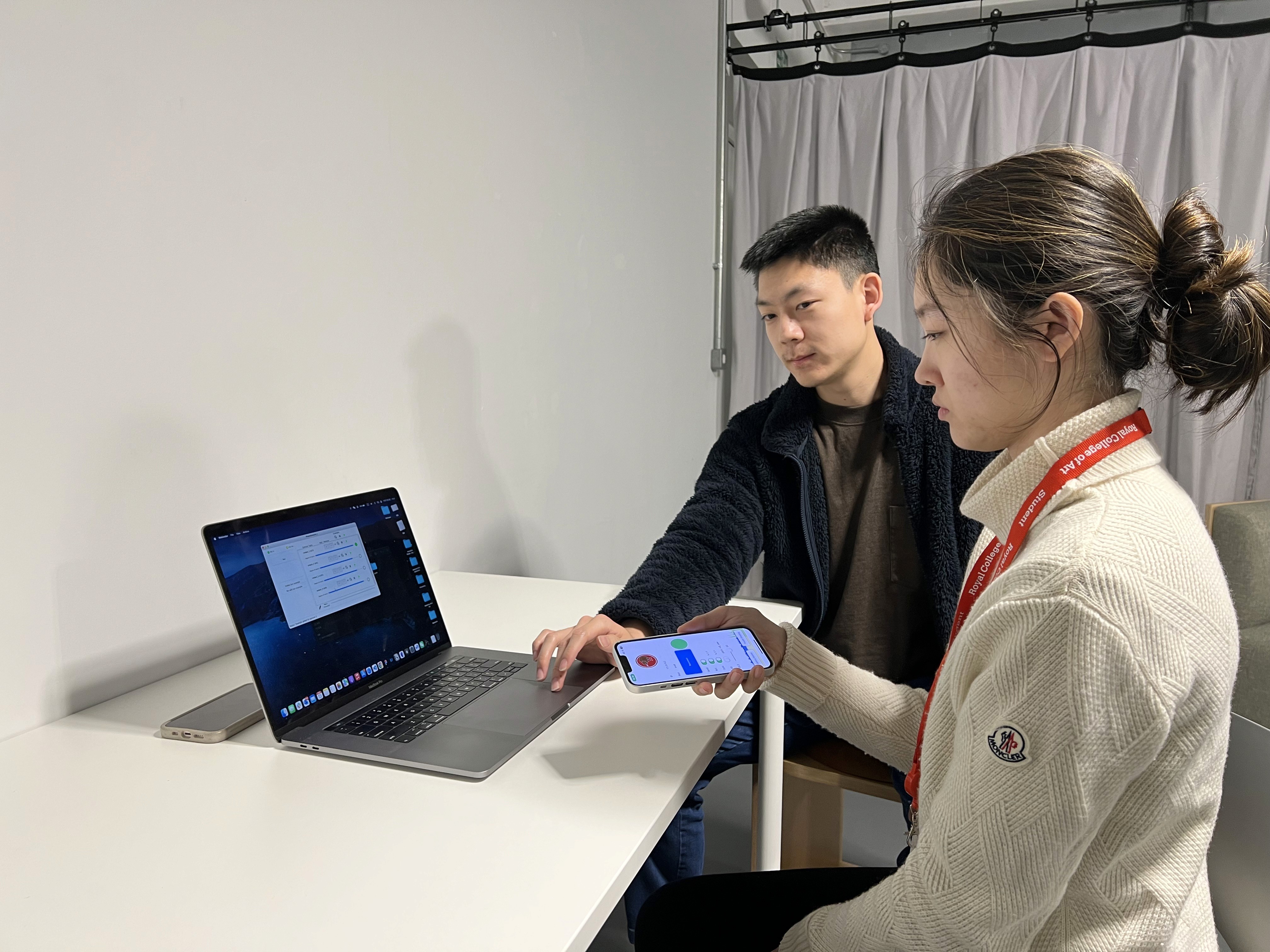

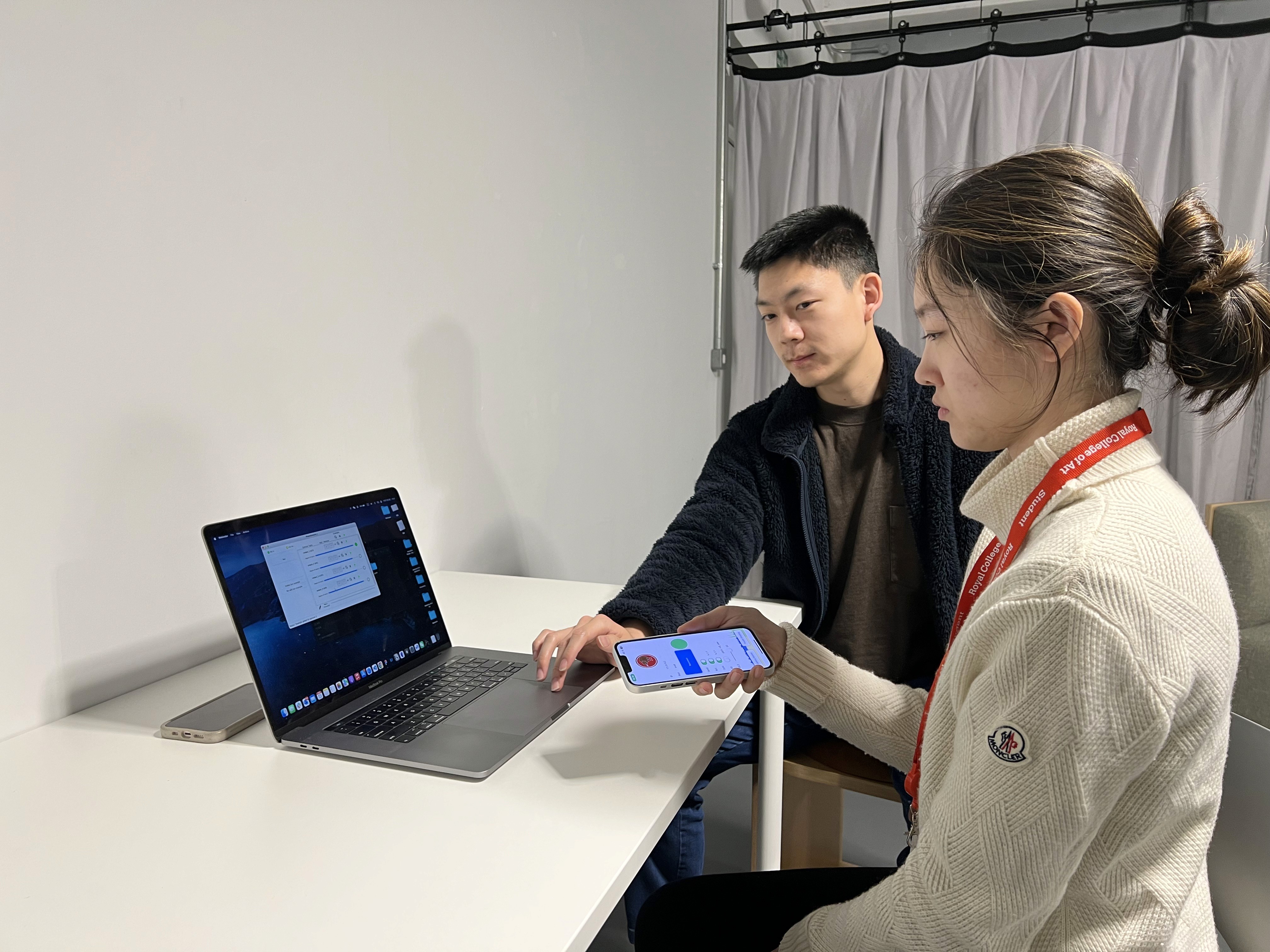

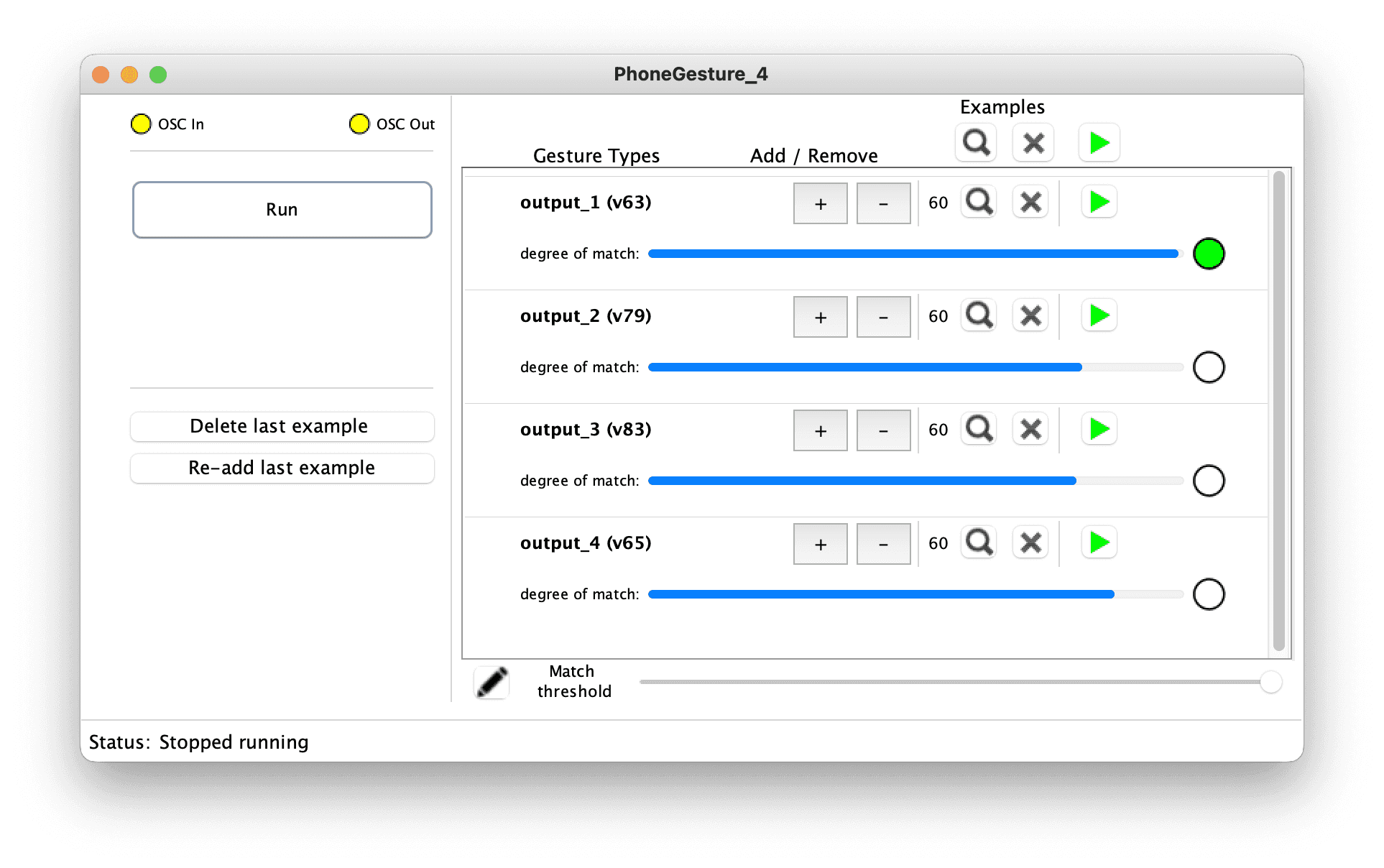

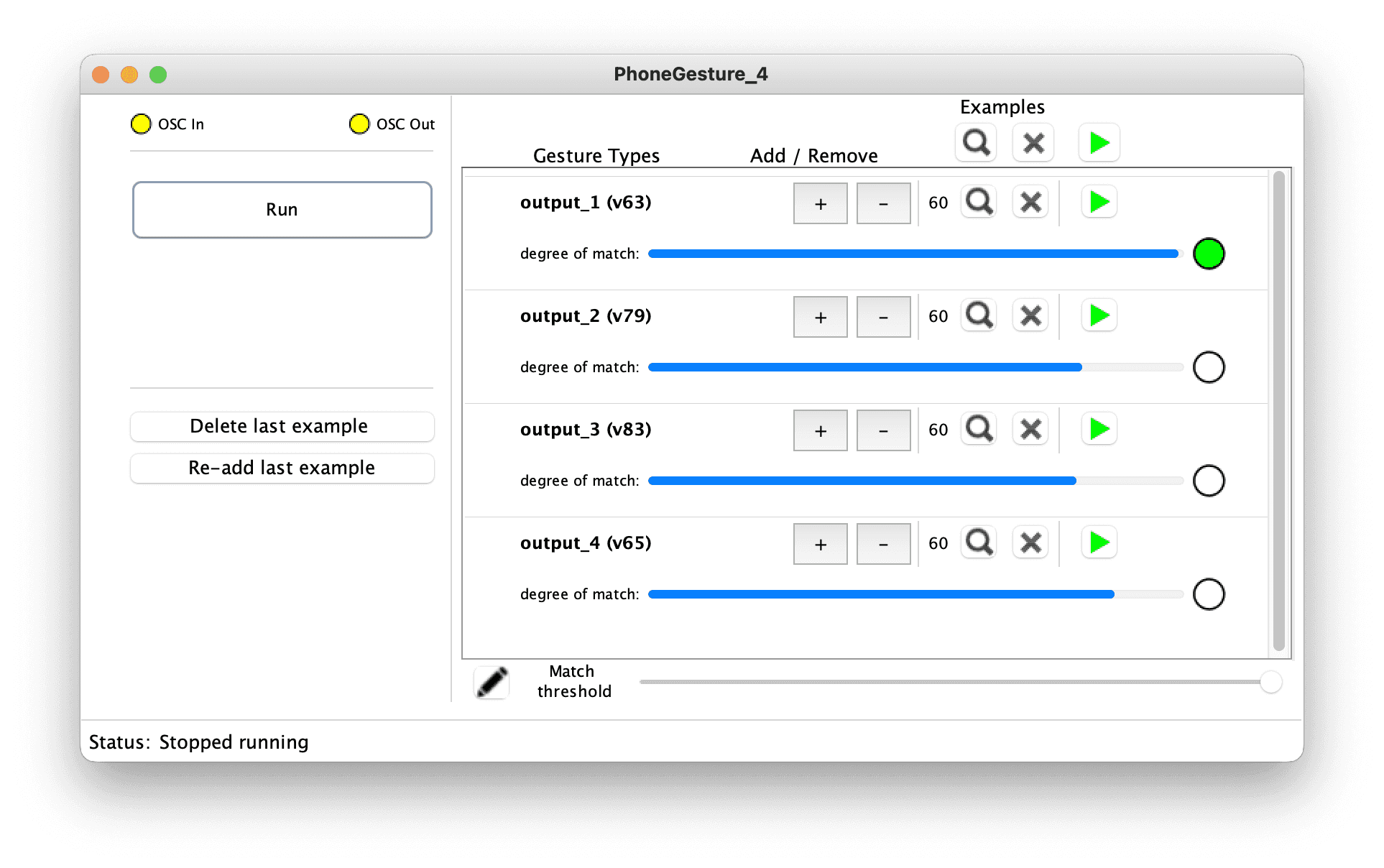

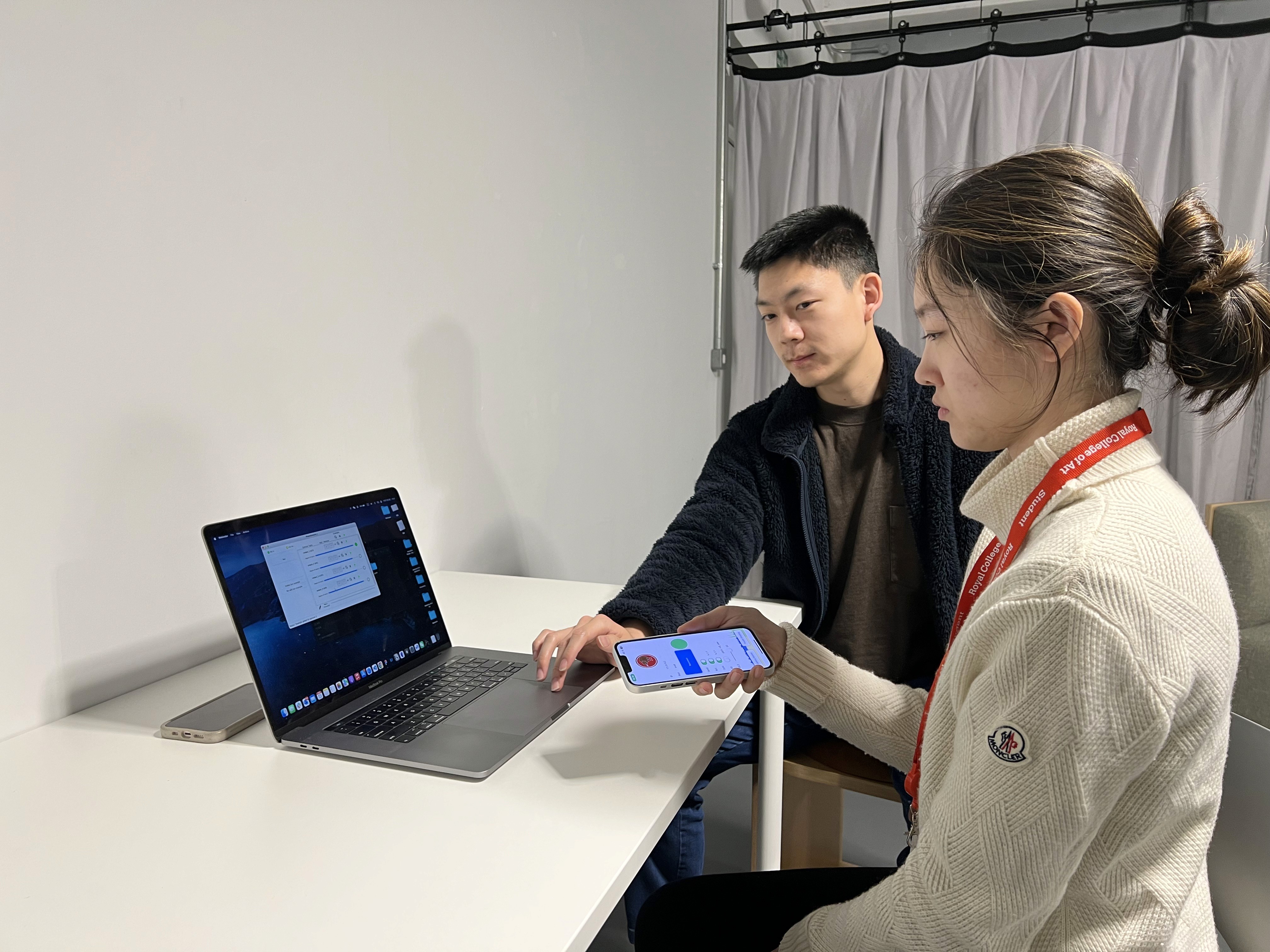

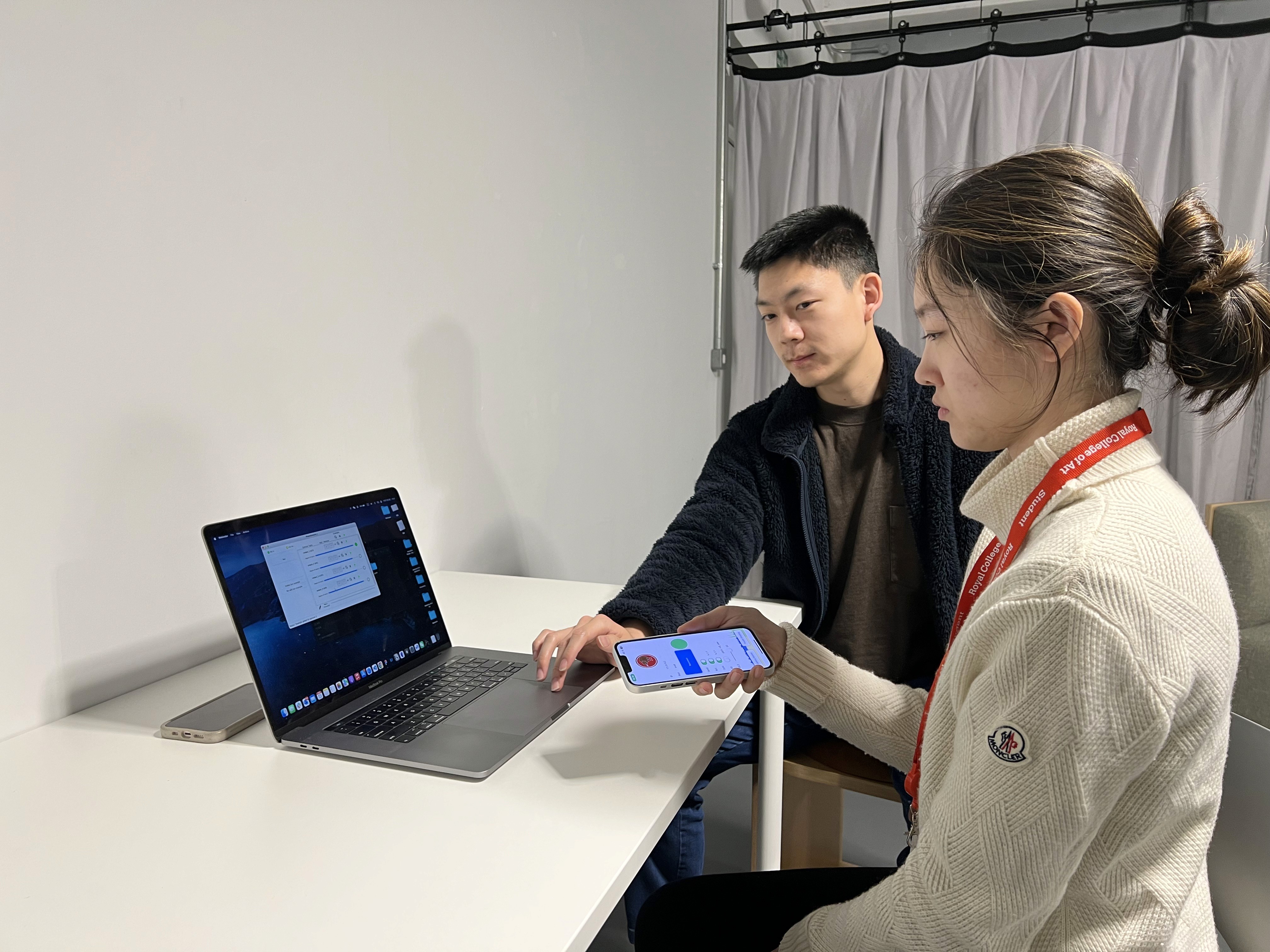

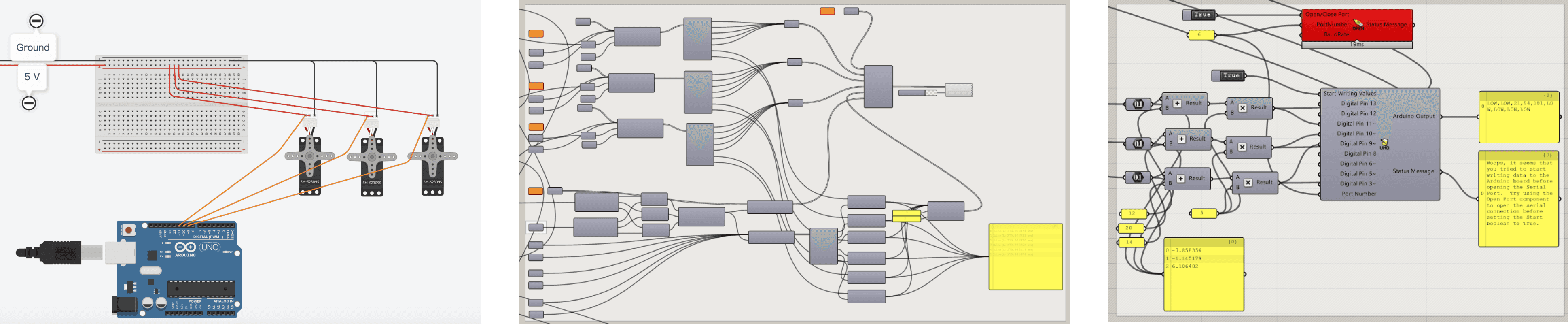

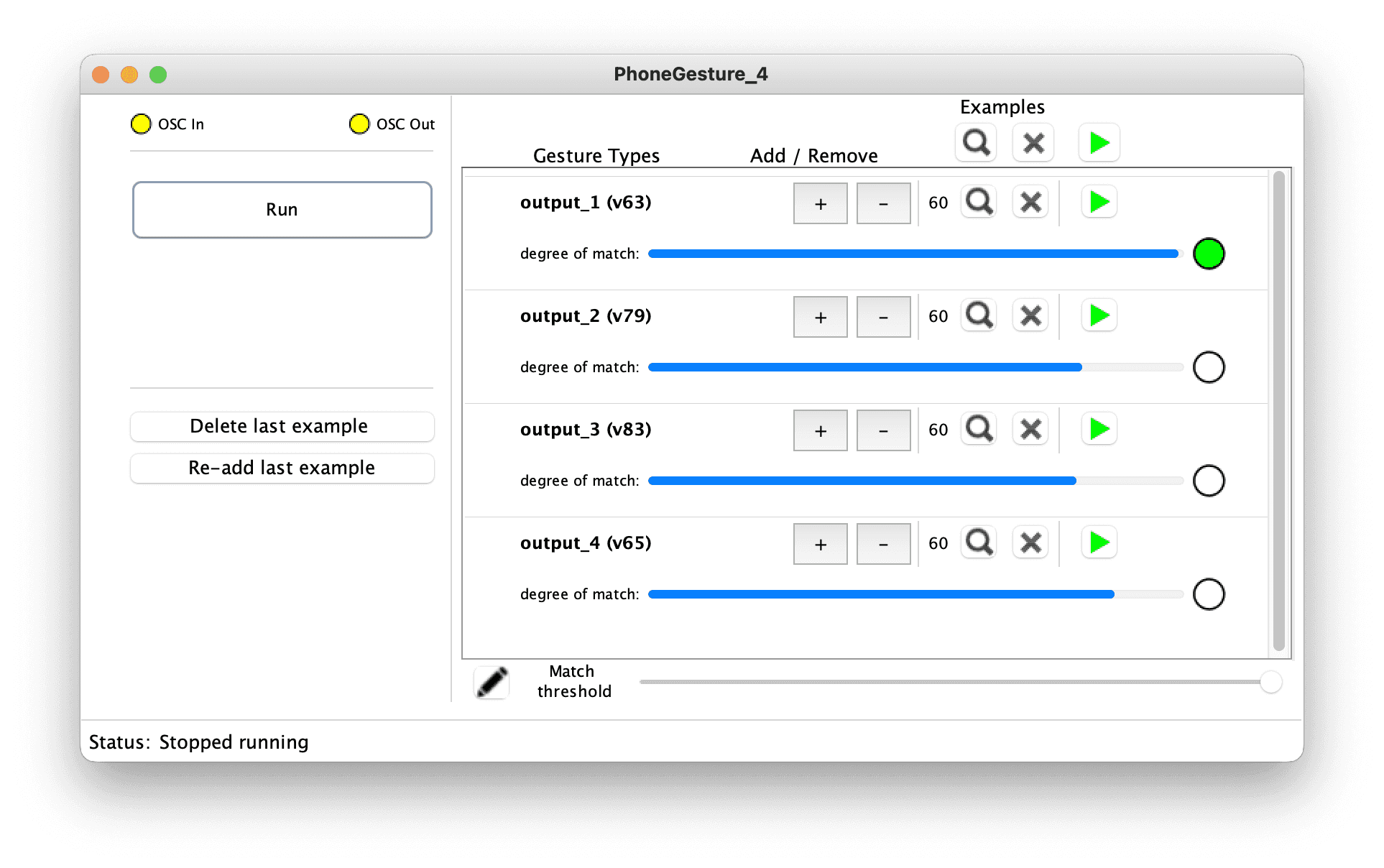

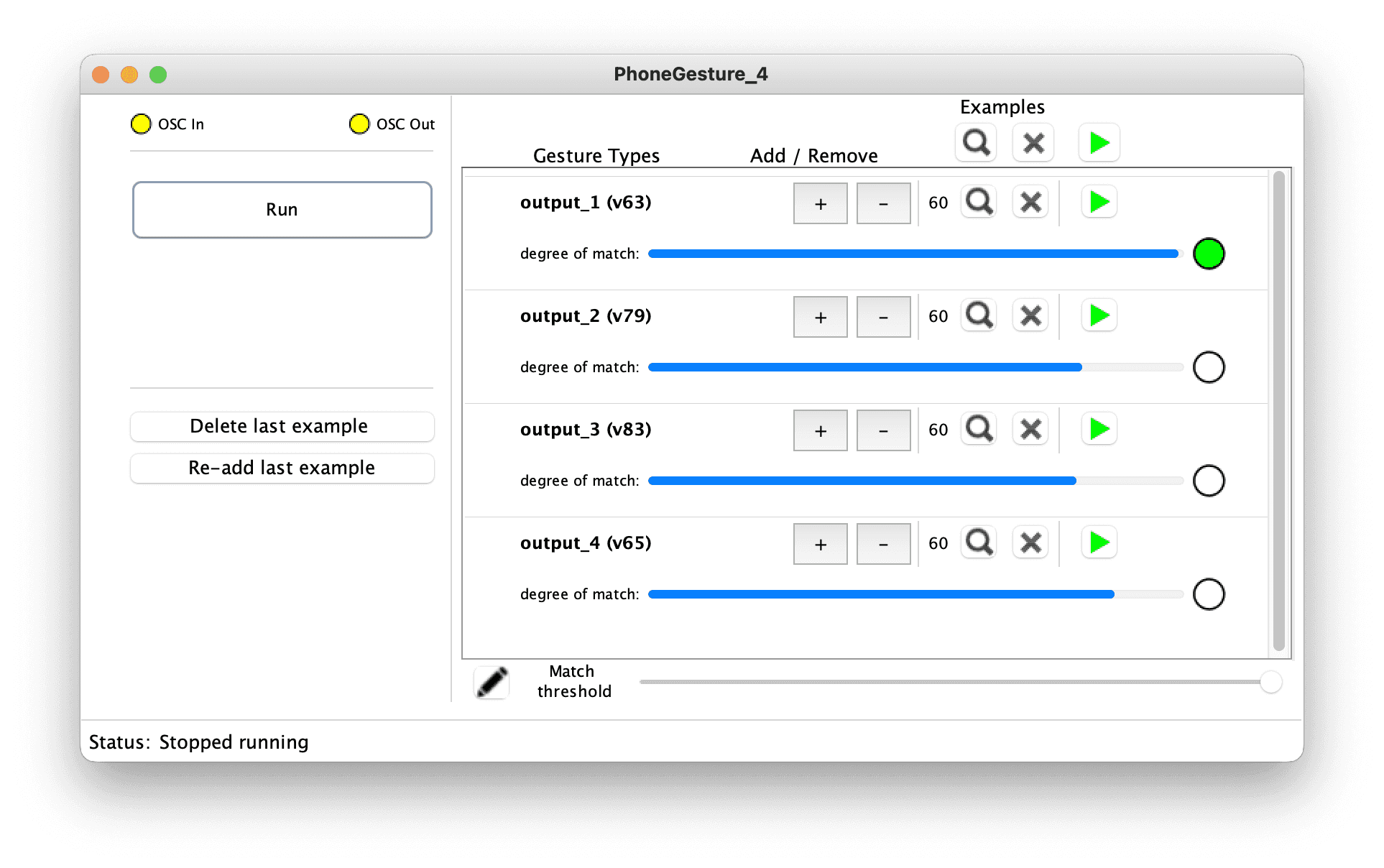

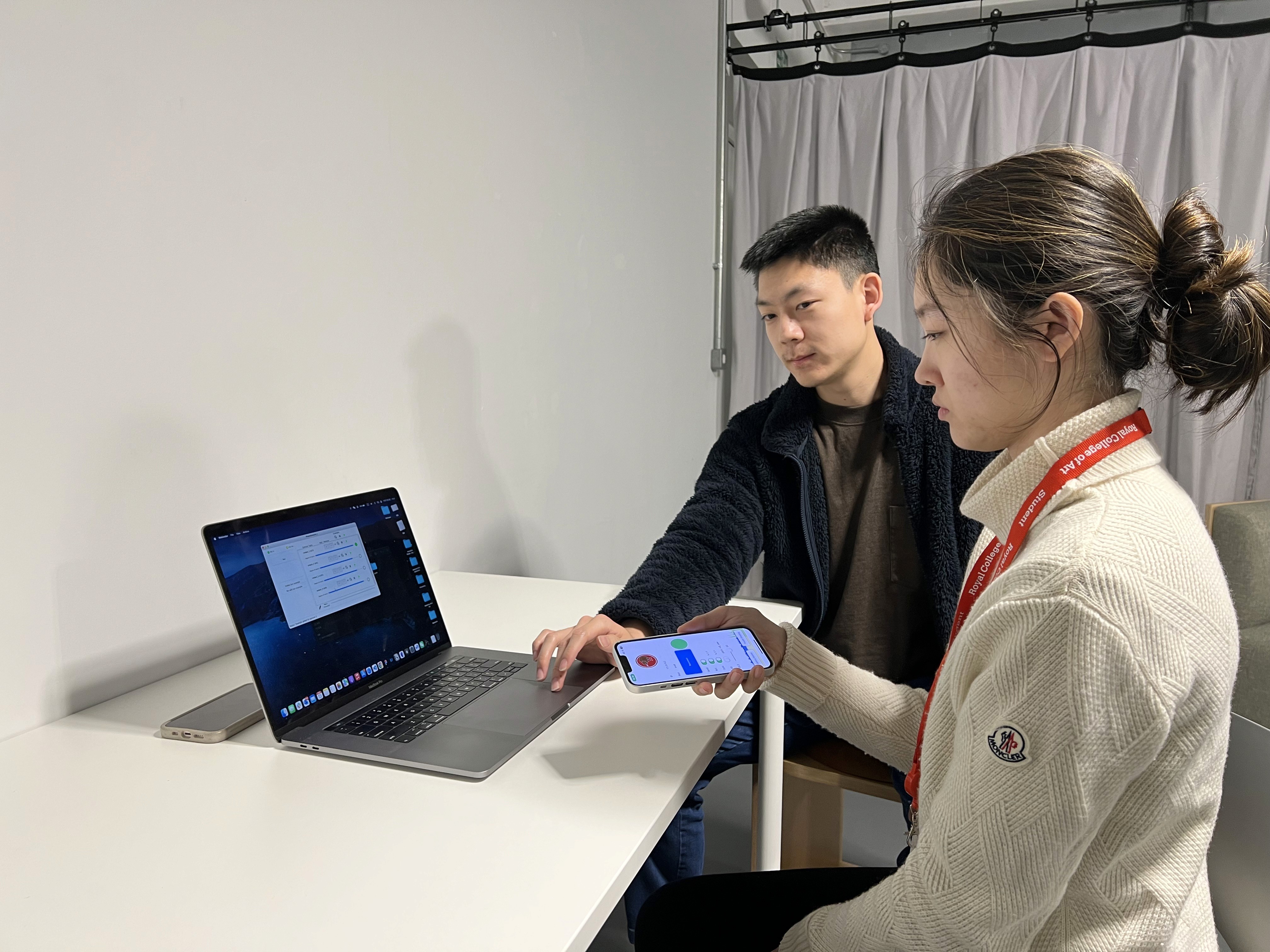

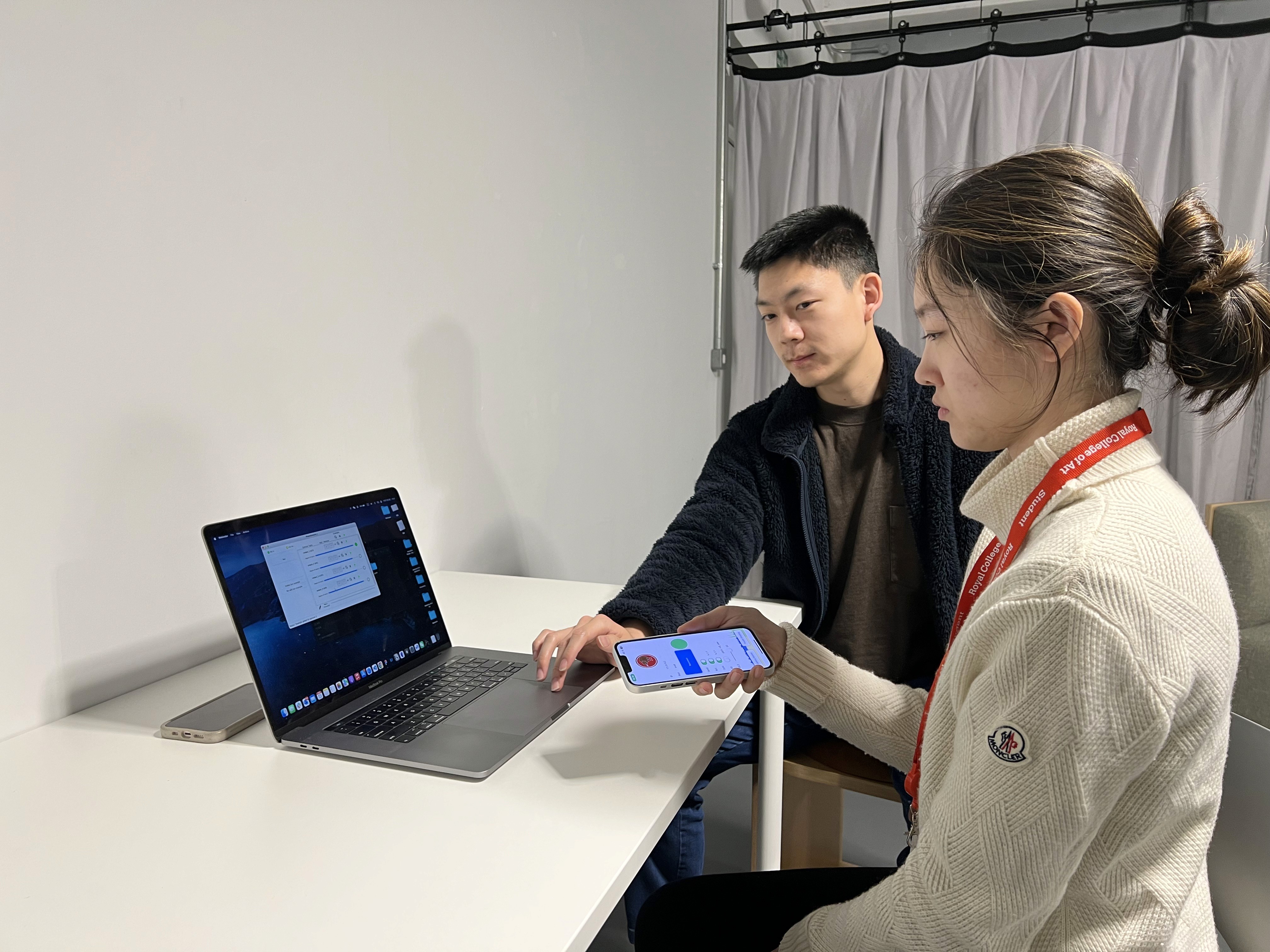

I used Wekinator, a Machine Learning tool, for gesture recognition. By utilising built-in sensors on the phone, users can select drawing styles with intuitive swiping and tapping interactions.
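Wekinator communicates over OSC. By default it listens for input features on UDP port 6448 at the address `/wek/inputs`, and it sends its outputs to port 12000 at `/wek/outputs`. Both settings can be changed in Wekinator's setup. The sketch below uses only the Python standard library to show two things:

- how a phone's motion readings could be packed into an OSC message for Wekinator;
- how a classifier's output could drive style selection.

The class numbers (1 = swipe left, 2 = swipe right, 3 = tap), the style names and `StyleSelector` are hypothetical. In practice a library such as python-osc would usually handle the encoding.

```python
import socket
import struct
from typing import List, Sequence


def _osc_string(s: str) -> bytes:
    """OSC string: ASCII bytes, null-terminated, padded to a multiple of 4."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, values: Sequence[float]) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    args = b"".join(struct.pack(">f", v) for v in values)  # big-endian float32
    return _osc_string(address) + _osc_string(type_tags) + args


def send_features(features: Sequence[float], host: str = "127.0.0.1", port: int = 6448) -> None:
    """Send one feature vector (e.g. accelerometer x, y, z) to Wekinator."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message("/wek/inputs", features), (host, port))


# Hypothetical gesture classes and style names.
SWIPE_LEFT, SWIPE_RIGHT, TAP = 1, 2, 3
STYLES: List[str] = ["abstract", "sparse", "balanced", "detailed"]


class StyleSelector:
    """Swipes cycle through styles; a tap confirms the current one."""

    def __init__(self) -> None:
        self.index = 0
        self.confirmed = False

    def on_gesture(self, gesture_class: int) -> str:
        if gesture_class == SWIPE_LEFT:
            self.index = (self.index - 1) % len(STYLES)
        elif gesture_class == SWIPE_RIGHT:
            self.index = (self.index + 1) % len(STYLES)
        elif gesture_class == TAP:
            self.confirmed = True   # the installation would start drawing here
        return STYLES[self.index]
```

Decoding the `/wek/outputs` messages into the class number passed to `on_gesture` is not shown. Wekinator's classifiers report classes as floats starting at 1, which compare equal to the integer constants above.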

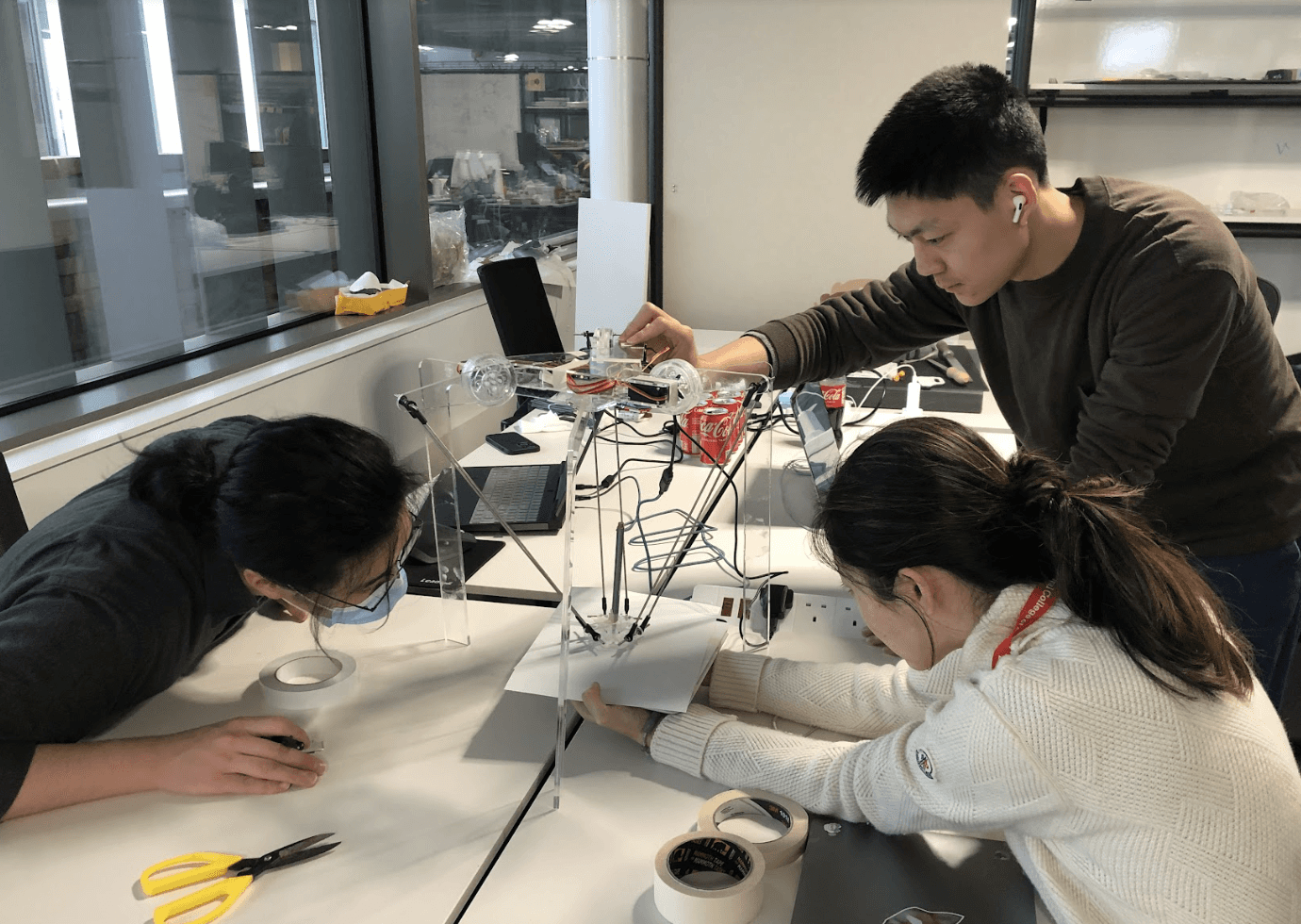

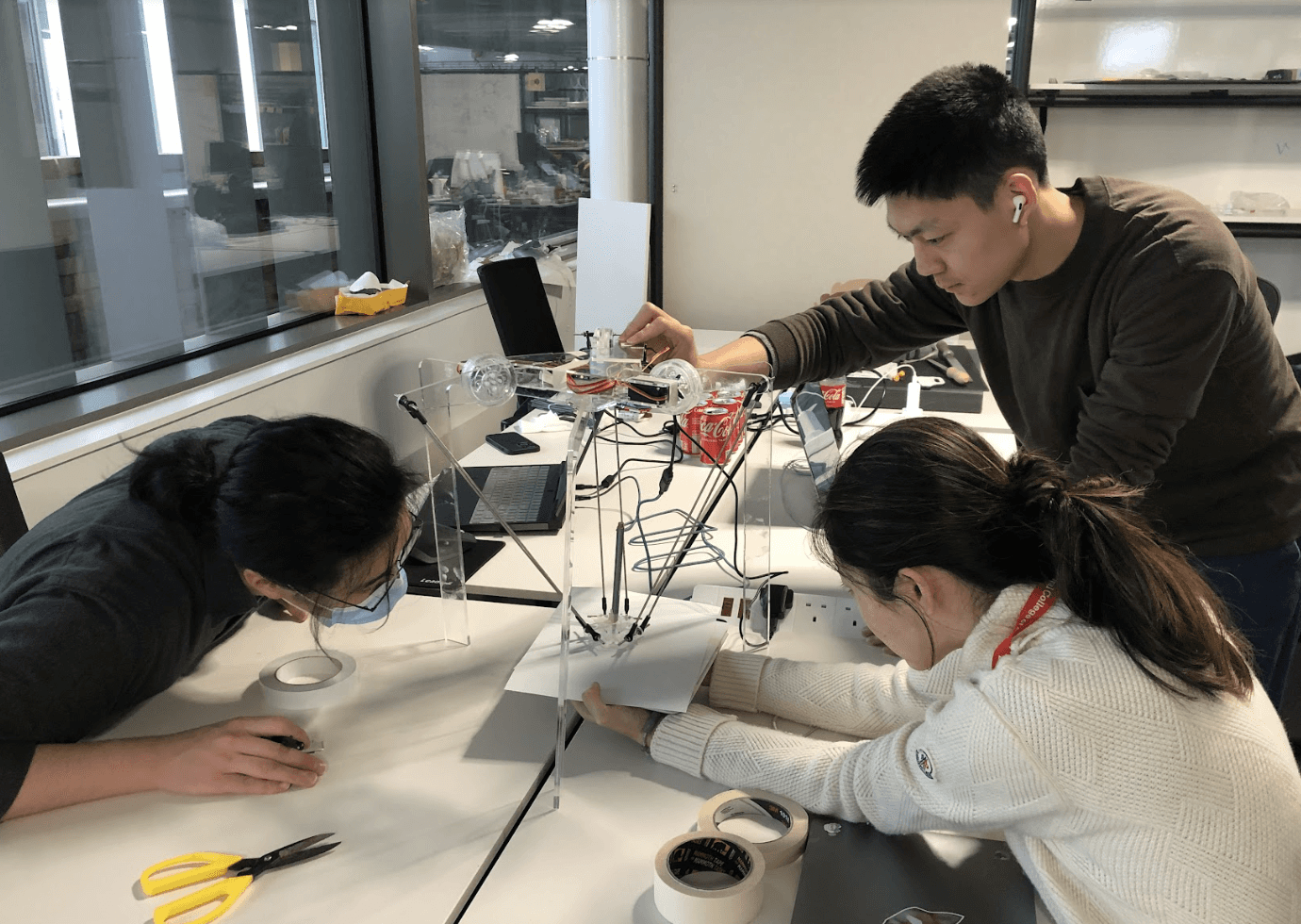

Team

We collaborated closely and were highly engaged, each of us contributing what we do best. Thanks to my teammates Xiaobo Bi and Yixuan Liu for making it possible.
